Source: Newin

In the early morning of May 15, the Google I/O Developer Conference was officially held. The following is a summary of the 2-hour conference:

1. About Gemini

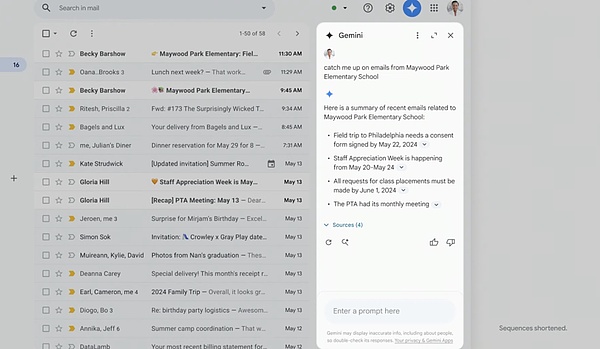

1) Gemini in Gmail

Gmail users will be able to search, summarize and draft emails using Gemini AI technology. It will also be able to take action on emails to perform more complex tasks, such as helping you process e-commerce returns by searching your inbox, finding receipts and filling out online forms.

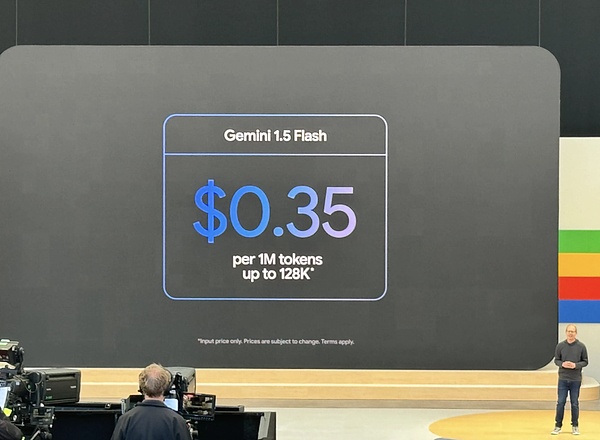

2) Gemini 1.5 Pro & Flash

Another upgrade is that Gemini can now analyze longer documents, code bases, videos, and audio recordings than before. In a private preview of the new version of Google's current flagship model, Gemini 1.5 Pro, it was revealed that it can accommodate up to 2 million tokens. This is twice as much as before, and the new version of Gemini 1.5 Pro supports the largest input of all commercial models.

For less demanding applications, Google has launched a public preview of Gemini 1.5 Flash, a "distilled" version of Gemini 1.5 Pro: a small, efficient model built specifically for "narrow," "high-frequency" generative AI workloads. Flash has a context window of up to 2 million tokens and, like Gemini 1.5 Pro, is multimodal, meaning it can analyze audio, video, and images as well as text.

In addition, Gemini Advanced users in more than 150 countries and more than 35 languages can take advantage of the larger context window of Gemini 1.5 Pro, which lets the chatbot analyze, summarize, and answer questions about long documents (up to 1,500 pages).

Gemini Advanced users can start interacting with Gemini 1.5 Pro today, and can also import documents from Google Drive or upload them directly from their mobile devices.

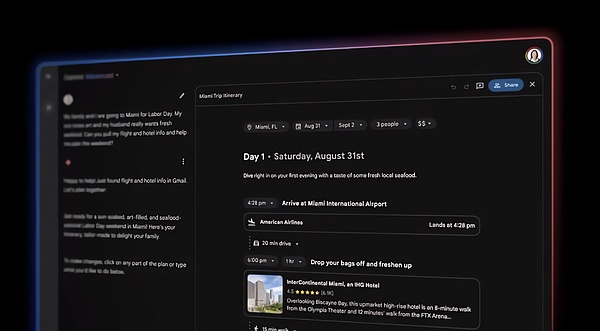

In the coming months, Gemini Advanced will get a new "planning experience" that can create custom travel itineraries based on prompts. Taking into account factors such as flight times (from emails in the user's Gmail inbox), meal preferences and local attractions information (from Google Search and Maps data), as well as the distance between those attractions, Gemini will generate an itinerary that automatically updates to reflect any changes.

In the near future, Gemini Advanced users will be able to create Gems, custom chatbots powered by Google's Gemini model. Along the lines of OpenAI's GPTs, Gems can be generated from natural language descriptions (for example, "You are my running coach. Give me a daily running plan") and shared with others or kept private.

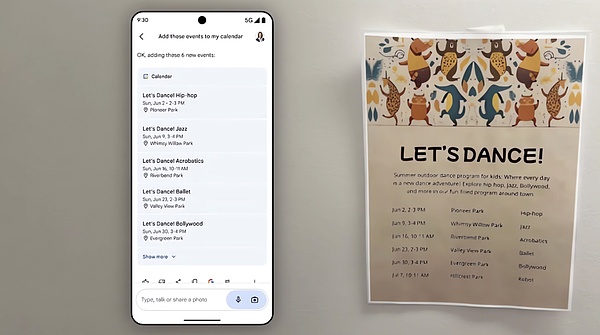

Soon, Gems and Gemini will be able to take advantage of expanded integration with Google services, including Google Calendar, Tasks, Keep, and YouTube Music, to complete a variety of labor-saving tasks.

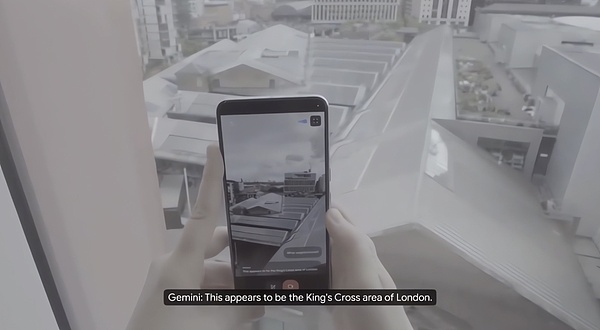

3) Gemini Live

Google previewed a new experience in Gemini called Gemini Live, which lets users have "in-depth" voice chats with Gemini on their smartphones.

Users can interrupt Gemini while the chatbot is talking to ask clarifying questions, and it will adapt to their voice patterns in real time. Gemini can see and react to the user's surroundings through photos or videos taken by the smartphone camera.

Google says it leveraged new techniques in generative AI to deliver superior, less error-prone image analysis, and combined those techniques with an enhanced speech engine for more consistent, emotionally expressive, and realistic multi-turn conversations.

In some ways, Gemini Live is an evolution of Google Lens, Google’s long-standing computer vision platform for analyzing images and videos, and Google Assistant, Google’s AI-driven, speech-generating and -recognizing virtual assistant across phones, smart speakers, and TVs.

It’s a real-time voice interface with extremely powerful multimodal capabilities and long context, said DeepMind chief scientist Oriol Vinyals.

The technical innovations driving Live stem in part from Project Astra, a new initiative within DeepMind that aims to create AI-driven apps and agents for real-time, multimodal understanding.

Demis Hassabis, CEO of DeepMind, said Google has always wanted to build a general intelligent agent that is useful in daily life. Imagine that the agent can see and hear what we do, better understand the environment we are in and respond quickly in the conversation, so that the speed and quality of the interaction feel more natural.

Gemini Live is not expected to launch until later this year. It can answer questions about things within view (or recently within view) of the smartphone camera, such as which neighborhood the user may be in or the name of a part on a damaged bicycle. Point the camera at a piece of computer code and Live can explain what the code does. Or, when asked where a pair of glasses might be, Live can tell where it last "saw" the glasses.

Live is also designed to act as a kind of virtual coach, helping users rehearse for events, brainstorm ideas, and so on. For example, Live can suggest which skills to emphasize in an upcoming job or internship interview, or provide public speaking advice.

One major difference between the new ChatGPT and Gemini Live is that Gemini Live is not free. Once launched, Live will be exclusive to Gemini Advanced, a more sophisticated version of Gemini that is covered by the Google One AI Premium Plan for $20 per month.

4) Gemini Nano

Google is also building its smallest AI model, Gemini Nano, directly into the Chrome desktop client starting with Chrome 126. Google says this will enable developers to use the on-device model to power their own AI features. For example, Google plans to use this new capability to power features like the existing "Help me write" tool from Workspace Labs in Gmail.

Google Chrome product management director Jon Dahlke noted that Google is in talks with other browser vendors to enable this or similar features in their browsers.

5) Gemini on Android

Google's Gemini on Android is an AI alternative to Google Assistant that will soon leverage its deep integration with the Android mobile operating system and Google apps.

Users will be able to drag and drop AI-generated images directly into their Gmail, Google Messages, and other apps.

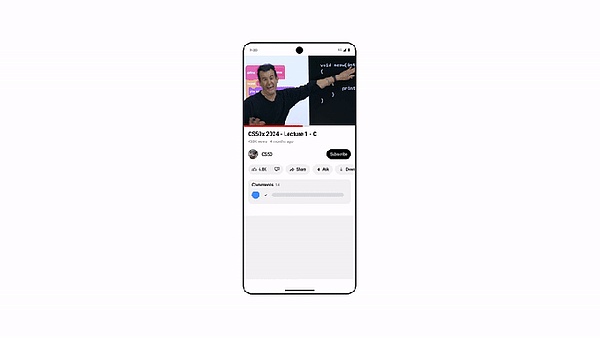

Meanwhile, YouTube users will be able to click "Ask this video" to find specific information from that YouTube video, Google said.

Users who purchase the upgraded Gemini Advanced will also have access to an "Ask this PDF" option that lets you get answers from a document without having to read all the pages. Gemini Advanced subscribers pay $19.99 per month to access the AI and get 2TB of storage, along with other Google One benefits.

Google said the latest features for Gemini for Android will roll out to hundreds of millions of supported devices in the coming months. Over time, Gemini will evolve to offer additional suggestions related to what's on the screen.

Meanwhile, Gemini Nano, the base model on Android devices, will be upgraded to include multimodality. This means it will be able to process not only text input but also other kinds of information, including sights, sounds, and spoken language.

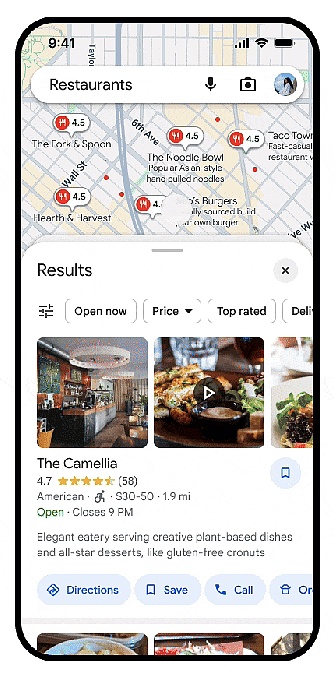

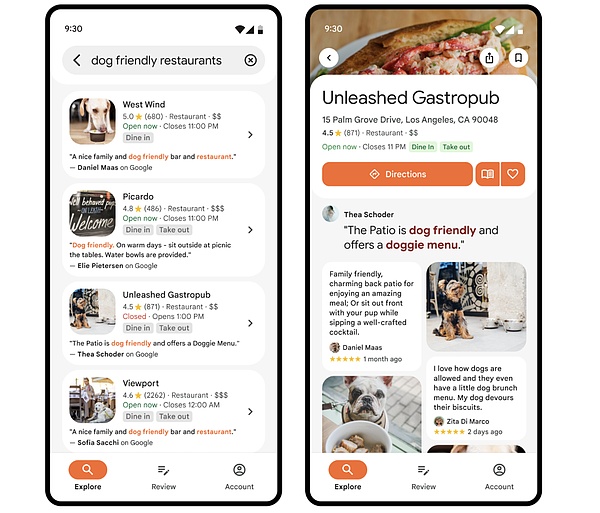

6) Gemini on Google Maps

Starting with the Places API, Gemini model capabilities will be available on the Google Maps Platform for developers to use. Developers can display generative AI summaries of places and areas in their own applications and websites. These summaries are created based on Gemini's analysis of insights from the Google Maps community of more than 300 million contributors.

With this new feature, developers will no longer need to write their own custom place descriptions.

For example, if a developer has a restaurant reservation application, this new feature will help users understand which restaurant is best for them. When users search for restaurants in the app, they will be able to quickly see all the most important information, such as restaurant specials, happy hour offers, and restaurant atmosphere.

The new snippets are available for multiple types of places, including restaurants, shops, supermarkets, parks, and movie theaters. Google is also bringing AI-driven contextual search results to the Places API. When users search for places in their products, developers can now display reviews and photos related to their search.

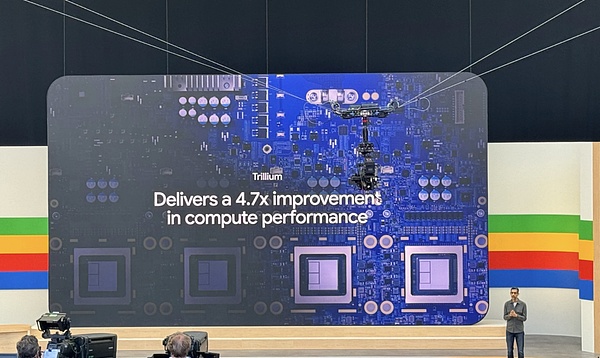

7) TPU performance has been improved

Google has launched the next generation (the sixth, to be exact) of its TPU AI chips. They are called Trillium and will be available later this year. Announcing the next generation of TPUs has become something of a tradition at I/O, even though the chips only become available later in the year.

These new TPUs will deliver 4.7 times the compute performance per chip compared to the fifth generation. Trillium features a third-generation SparseCore, which Google describes as a "specialized accelerator for processing very large embeddings common in advanced ranking and recommendation workloads."

Pichai described the new chip as Google's "most energy-efficient" TPU yet, which is especially important as demand for AI chips continues to grow exponentially.

He said the industry's demand for ML compute has grown a million times over the past six years, about ten times per year, and that's unsustainable without investments to reduce the power requirements of these chips. Google promises that the new TPU is 67% more energy efficient than the fifth-generation chip.
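The growth figures quoted above can be sanity-checked with a few lines of arithmetic. The following is only a rough sketch: the first calculation uses the numbers as stated in the article, while the second relies on an assumed reading of "67% more energy efficient" (1.67 times as much work per unit of energy), which the article does not spell out.

```python
# Figures quoted in the article.
total_growth = 1_000_000  # ML compute demand grew "a million times"
years = 6                 # "over the past six years"

# Compound growth: annual_factor ** years == total_growth
annual_factor = total_growth ** (1 / years)
print(f"Implied annual growth: about {annual_factor:.1f}x per year")

# Assumption (not stated in the article): "67% more energy efficient"
# is read here as 1.67x as much work per unit of energy.
efficiency_gain = 1.67
energy_per_unit_work = 1 / efficiency_gain
print(f"Energy for the same work: about {energy_per_unit_work:.0%} of the previous generation")
```

Under these assumptions, a millionfold increase over six years is consistent with roughly tenfold growth per year, and the efficiency claim would correspond to about 40% less energy for the same workload.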

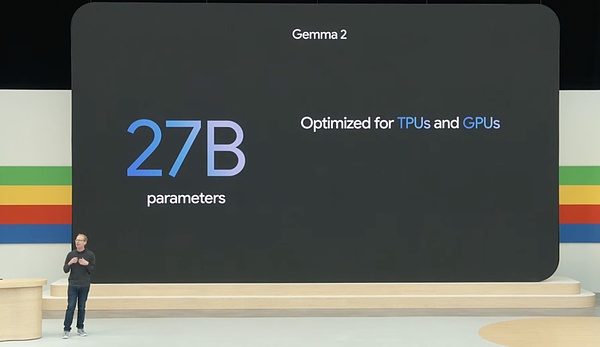

In addition, Google is adding a new 27-billion-parameter model to Gemma 2. The next generation of Google's Gemma models will be available in June. Google said this size has been optimized by Nvidia to run on next-generation GPUs and can run on a single TPU host and on Vertex AI.

2. New Models & Projects

1) Imagen3

Google has unveiled the latest iteration of its Imagen model, Imagen 3. DeepMind CEO Demis Hassabis said Imagen 3 understands the text prompts it translates into images more accurately than its predecessor, Imagen 2, and is more creative and detailed than previous generations.

To allay concerns about the potential for deepfakes, Google said Imagen 3 will use SynthID, a method developed by DeepMind that applies invisible cryptographic watermarks to media.

Users can sign up for a private preview of Imagen 3 in Google's ImageFX tool, and Google said the model will be available "soon" to developers using Vertex AI, Google's enterprise generative AI development platform.

2) Veo video generation model

Google is taking aim at OpenAI's Sora with Veo, an AI model that can create 1080p video clips about a minute long based on text prompts. Veo can capture different visual and cinematic styles, including landscapes and time-lapse footage, and edit and adjust the generated footage.

It also builds on Google's initial commercial work on video generation that it previewed in April, which leveraged the company's Imagen 2 series of image generation models to create looping video clips.

Demis Hassabis said Google is exploring features like storyboarding and generating longer scenes to see what Veo can do, and that Google has made incredible progress in video.

Veo was trained on a large amount of footage. This is how generative AI models work: fed example after example of some form of data, the models pick up patterns in the data that allow them to generate new data, which in Veo's case means videos.

Google has made Veo available to select creators, including Donald Glover (aka Childish Gambino) and his creative agency Gilga.

3) LearnLM Model

Google has launched LearnLM, a new family of generative AI models that are "fine-tuned" for learning. This is a collaboration between Google's DeepMind AI research division and Google Research. Google said the LearnLM model is designed to "conversationally" tutor students across a range of subjects.

LearnLM is already available across Google's platforms and is being used through a pilot program in Google Classroom. Google said LearnLM can help teachers discover new ideas, content, and activities, or find materials that fit the needs of a specific group of students.

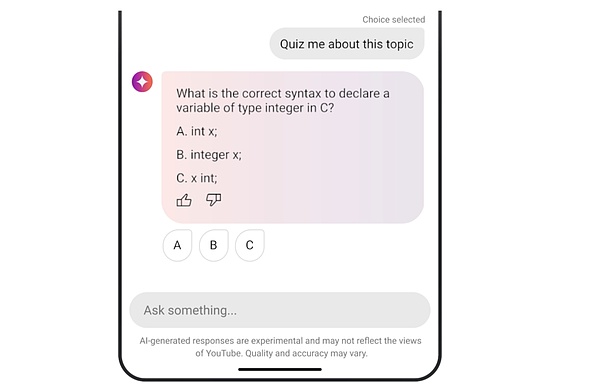

New to YouTube is an AI-generated quiz. This new conversational AI tool allows users to figuratively "raise" their hands while watching an educational video. Viewers can ask clarifying questions, get helpful explanations, or take a quiz on the topic.

Thanks to the long-context capabilities of the Gemini model, this will come as a relief to those who have to watch longer educational videos, such as lectures or seminars. These new features are rolling out to select Android users in the United States.

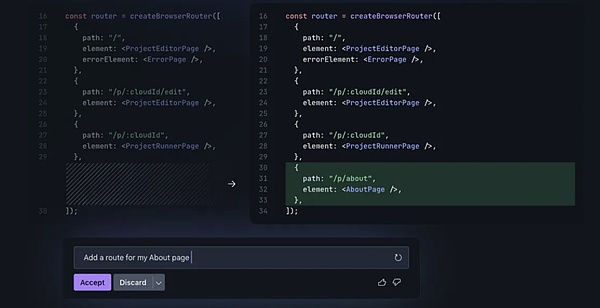

4) Project IDX

Project IDX is Google's next-generation, AI-centric, browser-based development environment, now in public beta.

As AI becomes more ubiquitous, deploying all of this technology is becoming harder and more complex, and Google wants to help solve that challenge, which is why it built Project IDX, said Jeanine Banks, Google vice president and general manager of Developer X and head of developer relations.

IDX is a multi-platform development experience that makes building applications fast and easy. Developers can use their preferred framework or language through ready-made templates for Next.js, Astro, Flutter, Dart, Angular, Go, and others.

In addition, Google will add integration with Google Maps Platform to the IDE to help developers add geolocation capabilities to their applications, and integrate with Chrome DevTools and Lighthouse to help debug applications. Soon, Google will also support deploying applications to Cloud Run, Google Cloud's serverless platform for running front-end and back-end services.

The development environment will also integrate with Checks, Google's AI-driven compliance platform, which itself will move from beta to general availability on Tuesday. Of course, IDX isn't just about building AI-enabled apps, it's about using AI in the coding process.

To accomplish this, IDX includes many features that are now standard, such as code completion and a chat assistant sidebar, as well as more innovative ones, such as the ability to highlight a code snippet and, much like Generative Fill in Photoshop, ask Google's Gemini model to change it.

Whenever Gemini suggests code, it links back to the original source and its associated license. Built by Google with open source Visual Studio Code at its core, Project IDX also integrates with GitHub, making it easy to integrate with existing workflows. In one of the latest releases of IDX, Google has also added built-in iOS and Android emulators for mobile developers in the IDE.

3. Application & Tool Updates

1) Application of AI in Search

Liz Reid, Google Search Director, said that Google built a customized Gemini model for search, combining real-time information, Google ranking, long context and multimodal features.

Google is adding more AI to its search, alleviating concerns that the company is losing market share to competitors such as ChatGPT and Perplexity.

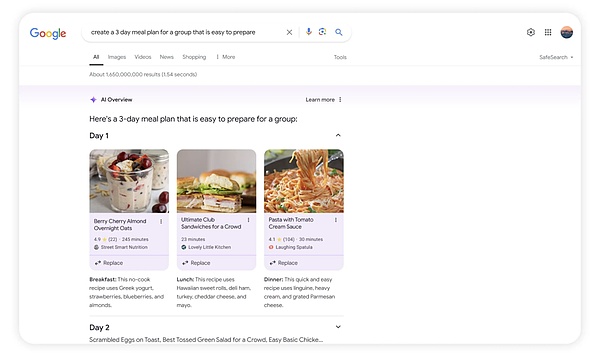

Google is rolling out AI-driven overviews to US users. In addition, the company also hopes to use Gemini as an intelligent agent for matters such as travel planning.

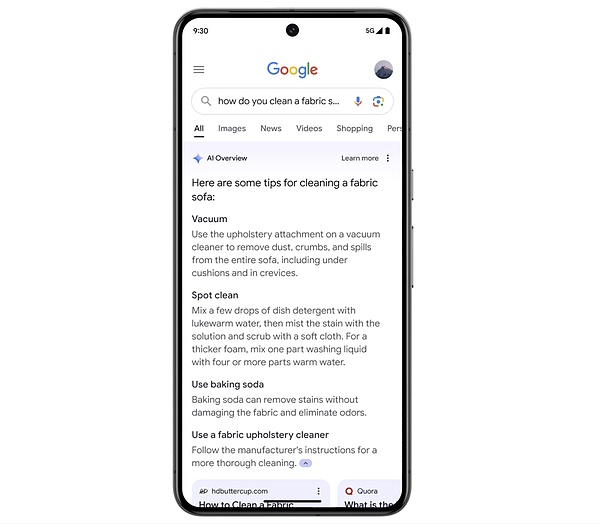

Google plans to use generative AI to organize the entire search results page for certain search results. This is in addition to the existing AI Overview feature, which creates a short snippet with aggregated information about the topic you are searching for. After a period in the Google AI Labs program, the AI Overview feature will be generally available on Tuesday.

Google has been testing AI-powered overviews through its Search Generative Experience (SGE) since last year. Now, the feature will be rolled out to "hundreds of millions of users" in the United States this week, with the goal of serving it to more than 1 billion people by the end of this year.

Reid also said that during the testing of its AI Overview feature, Google observed that people clicked on more diverse websites. Users will not see the AI Overview when traditional search is sufficient to provide results, and the feature is more useful for more complex queries with scattered information.

In addition, Google also hopes to use Gemini as an intelligent agent to complete tasks such as meal or travel planning. Users can enter queries such as "plan three days of meals for a family of four" and get links and recipes for these three days.

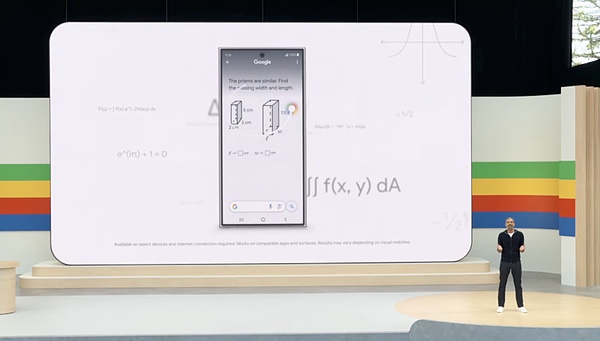

2) Circle to Search

The AI-driven "Circle to Search" feature, which lets Android users get answers immediately using gestures such as circling, will now be able to solve more complex physics and math word problems.

It is designed to allow users to use Google Search more naturally through actions such as circling, highlighting, scribbling, or tapping anywhere on their phones.

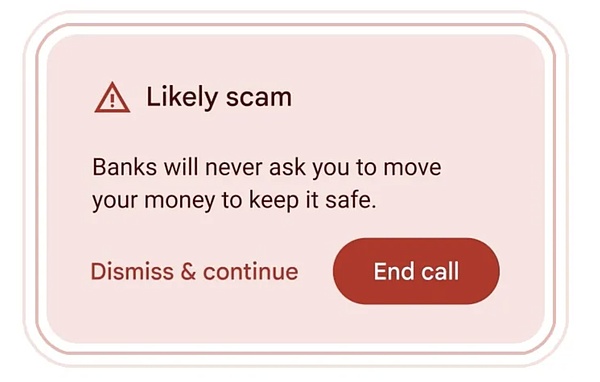

3) Detecting scams during calls

Google has previewed a feature that it believes will alert users to potential scams during calls.

The feature, which will be built into a future version of Android, leverages Gemini Nano, the smallest version of Google’s generative AI product that can run entirely on-device. The system effectively listens in real time for “conversation patterns that are commonly associated with scams.”

Google gave the example of someone pretending to be a “bank representative.” Common scam tactics like password requests and gift cards will also trigger the system. These are well-known ways to extract money from you, but there are still many people in the world who are vulnerable to such scams. Once launched, it will pop up a notification that the user may have fallen prey to an unsavory character.

4) Ask Photos

Google Photos is getting an injection of AI with the launch of an experimental feature, Ask Photos, powered by Google’s Gemini AI model. The new feature, launching later this summer, will allow users to search their Google Photos collection using natural language queries that leverage the AI’s understanding of their photo content and other metadata.

While before users could search for specific people, places, or things in their photos, with the help of natural language processing, the AI upgrades will make finding the right content more intuitive and reduce the manual search process.

5) Firebase Genkit

The Firebase platform has added a new feature called Firebase Genkit, which aims to make it easier for developers to build AI-driven applications using JavaScript/TypeScript, and support for Go is coming soon.

It is an open source framework that uses the Apache 2.0 license and enables developers to quickly build AI into new and existing applications.

In addition, Google highlights some use cases for Genkit, including many standard GenAI use cases: content generation and summarization, text translation, and generating images.

6) Google Play

Google Play got some attention with new app discovery features, new ways to acquire users, updates to Play Points, and other enhancements to developer tools like the Google Play SDK Console and the Play Integrity API.

Of particular interest to developers is something called the Engage SDK, which will introduce a way for app makers to present their content to users in a full-screen, immersive experience personalized for individual users. Google says this surface isn’t currently visible to users, though.
