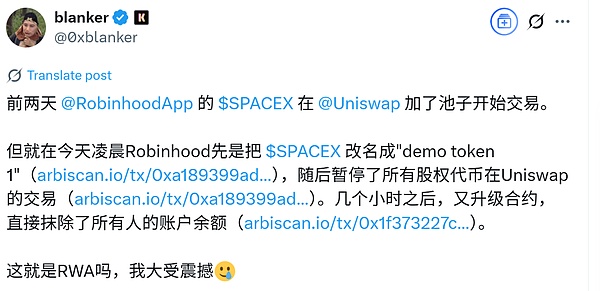

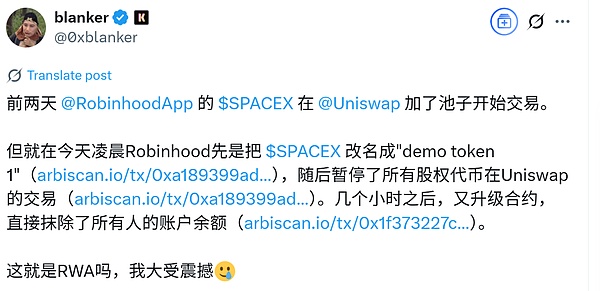

I read a post on X.com about the Robinhood token on Uniswap, claiming that it can erase the balance of any address holding the token. I doubted that this balance-erasing ability was real, so I asked ChatGPT to investigate.

ChatGPT reached a similar judgment, concluding that the balance wiping described in the post was unlikely.

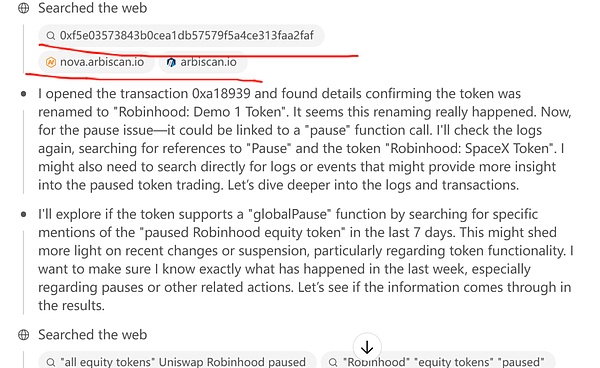

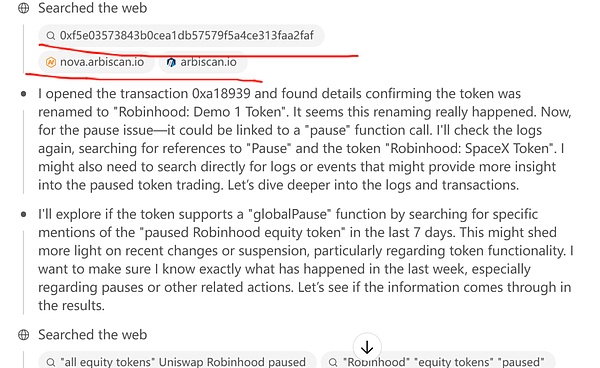

What really surprised me was ChatGPT's reasoning process. I wanted to understand how ChatGPT reached its judgment, so I read its chain of thought.

I saw that several steps in its chain of thought "input" an Ethereum address into the block explorer and then view that address's historical transactions.

Please pay special attention to the double quotes around "input". It is a verb, meaning that an operation was performed on the block explorer. This surprised me because it did not match the results of my research into ChatGPT's security half a year ago.

Half a year ago, using the ChatGPT o1 pro model, I investigated the distribution of Ethereum's early profit-taking. I explicitly asked ChatGPT o1 pro to query the genesis block addresses through a block explorer and find out how much had never been transferred out, but ChatGPT explicitly told me that it could not perform such an operation because of its security design.

ChatGPT can read a page, but it cannot perform UI operations on it, such as clicking, swiping, and typing, which are the actions we humans perform on a web page. For example, a human can log in to taobao.com and search for specific products, but simulating this is explicitly prohibited in ChatGPT. This is the result of my research half a year ago.

Why did I research this at the time? Because the company behind Claude had just created an intelligent agent that could take over the user's computer. Anthropic's Claude 3.5 Sonnet launched an experimental feature, "Computer use (beta)", which can read the screen, move the cursor, click buttons, and enter text like a real person, completing a whole set of desktop operations such as web searches, form filling, and placing orders. This is quite scary. I thought of the following scenario: what if Claude goes crazy one day, breaks straight into my note-taking software, reads all my work and life logs, and digs out the private key that I recorded in plain text for convenience? What should I do then?

After that investigation, I decided to buy a brand new computer to run AI software, and to no longer run AI software on the computer where I manage crypto. As a result, I now have one more Windows computer and one more Android phone. It is so annoying to have so many computers and phones. AI on domestic mobile phones now has similar permissions. Just a few days ago, Yu Chengdong shot a video promoting that Huawei's Xiaoyi can help users book air tickets and hotels on their phones. A few months ago, Honor phones even let users command the AI to execute the complete process of ordering coffee on Meituan. Such an AI can help you place an order on Meituan, but can it also read your WeChat chat history?

This is a bit scary.

Because our mobile phone is a terminal and Xiaoyi is a small model running on the device side, we can still manage the AI's permissions, for example by prohibiting it from accessing certain apps, or by encrypting notes and documents so that reading them requires a password, which also prevents Xiaoyi from accessing them directly. But if a large cloud model like ChatGPT or Claude obtains the ability to simulate UI operations such as clicking, swiping, and typing, the trouble is big. ChatGPT needs to communicate with the cloud server at all times, which means the information on your screen is 100% in the cloud. That is completely different from an on-device model such as Xiaoyi, which reads information only locally.

The on-device Xiaoyi is like handing our phone to a computer expert we know and asking him to operate this or that app for us. The expert cannot copy the information on the phone and take it home, and we can take the phone back from him at any time. In fact, asking someone to fix a computer like this happens all the time, right?

But a cloud LLM such as ChatGPT is equivalent to someone remotely taking over your computer and phone. Just think about how big the risk is. You don't even know what it is doing on your phone and computer.

Seeing an "input" operation on a block explorer (arbiscan.io) in ChatGPT's chain of thought alarmed me. If ChatGPT has not lied to me, then this time it was a false alarm: it has not obtained permission to simulate UI operations. This time it could access arbiscan.io and "enter" an address to reach that address's transaction records purely through a hack-like trick. I have to marvel at it. ChatGPT o3 is really awesome.

What ChatGPT o3 did was discover the URL rule that arbiscan.io uses for the page that shows an address's historical transactions. The rule for the URL of a specific transaction or contract address is as follows (https://arbiscan.io/tx/<hash> or /address/<addr>). Once the model understands this rule, it takes a contract address, splices it directly onto arbiscan.io/address/, opens that page, and reads the information on it directly. Wow. It is as if, when we look up a transaction in a block explorer, we do not type the transaction into the search box on the page and press Enter. Instead, we directly construct the URL of the page we want to view and enter it into the browser's address bar. Isn't it awesome?
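The URL-splicing trick described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how ChatGPT o3 works internally: the function names `address_url` and `tx_url` are hypothetical, the validation patterns assume the usual 20-byte address and 32-byte transaction hash in hex, and the sample address is a placeholder rather than a real contract.

```python
import re

# Base URL of the block explorer named in the post.
BASE_URL = "https://arbiscan.io"

# Assumed formats: "0x" + 40 hex chars (address), "0x" + 64 hex chars (tx hash).
_ADDRESS_RE = re.compile(r"0x[0-9a-fA-F]{40}")
_TX_HASH_RE = re.compile(r"0x[0-9a-fA-F]{64}")


def address_url(addr: str) -> str:
    """Build the explorer page URL for an address (hypothetical helper)."""
    if not _ADDRESS_RE.fullmatch(addr):
        raise ValueError(f"not a valid address: {addr!r}")
    return f"{BASE_URL}/address/{addr}"


def tx_url(tx_hash: str) -> str:
    """Build the explorer page URL for a transaction (hypothetical helper)."""
    if not _TX_HASH_RE.fullmatch(tx_hash):
        raise ValueError(f"not a valid transaction hash: {tx_hash!r}")
    return f"{BASE_URL}/tx/{tx_hash}"


if __name__ == "__main__":
    # Placeholder address, for illustration only.
    sample_address = "0x" + "ab" * 20
    print(address_url(sample_address))
```

No clicking or typing on the page is involved: the "input" step is replaced by plain string construction, and the resulting URL can be fetched and read like any other page.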

Therefore, ChatGPT did not break the restriction that prohibits simulated UI operations.

However, if we really care about the security of our computers and mobile phones, we must be careful about the permissions these large language models (LLMs) have over the terminal.

We need to disable the various AIs on terminals with high security requirements.

Pay special attention to "where the model runs (on-device or cloud)", as it determines the security boundary more than the intelligence of the model itself. This is also the fundamental reason why I would rather set up an extra isolated device than let a large cloud model run on a computer that holds a private key.

Kikyo
