Understanding Shadow Drive, a decentralized storage platform on the Solana chain

Which decentralized cloud computing platform is the best? New projects may bring new experiences to users.

JinseFinance

Decentralized storage means that individuals or groups contribute their idle storage space as units of a storage network, bypassing the absolute control over data held by centralized organizations such as AWS and Google Cloud.

Low storage costs, redundant data backups, and token economics are also characteristics of decentralized storage, and a large number of Web3 applications have been built on this infrastructure.

As of June 2023, the total capacity of decentralized storage networks exceeded 22,000 petabytes (PB), while network utilization was only about 20%. This suggests there is plenty of room for future growth.

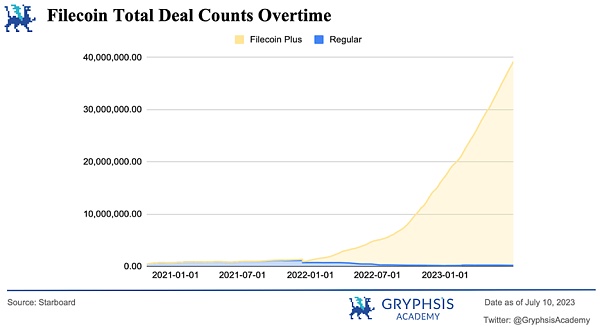

More than 80% of existing storage capacity is provided by Filecoin, which is undoubtedly the bellwether of this field. Filecoin has also launched initiatives such as Filecoin Plus and the FVM to incentivize developers and promote ecosystem growth.

With the rise of fields such as artificial intelligence and fully on-chain games, decentralized computing and storage are expected to see exciting growth opportunities.

Cloud storage services like Dropbox and Google Cloud have changed the way we store and share large files like videos and photos online. They allow anyone to store terabytes of data at a much lower cost than buying a new hard drive and access the files from any device when needed.

However, there is a problem: users must rely on the management systems of centralized entities, which may revoke access to their accounts at any time, share their files with government agencies, or even delete files without reason.

This storage model makes the ownership of data assets unclear and effectively gives large Internet companies such as Amazon and Google a monopoly on data. Additionally, downtime for centralized services can often have disastrous consequences.

Storage is a field naturally suited to decentralization. First, decentralization addresses issues such as user data privacy and ownership. Files stored on decentralized file services are not subject to the influence of any centralized authority, such as government agencies that may wish to control and censor content. It also prevents private companies from taking actions such as censoring services or sharing files with law enforcement.

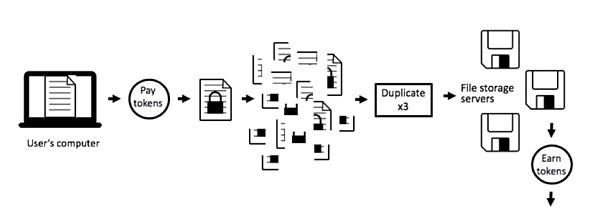

Second, storing massive amounts of data inherently requires a distributed system. Existing centralized cloud services also use distributed solutions, such as Spanner and TiDB. In other words, distributed does not mean decentralized, but decentralized must be distributed. Unlike centralized storage architectures, existing decentralized solutions encrypt data, divide it into small pieces, and store them on nodes around the world. This process creates multiple copies of the data and improves resilience against data loss.

Third, it addresses the resource waste of unproductive mining. The heavy electricity consumption of Bitcoin's PoW mechanism has long been criticized, whereas decentralized storage lets users become nodes and earn mining rewards with their idle storage resources. A large number of storage nodes also means lower costs. It is foreseeable that decentralized cloud storage services could even capture part of the Web2 cloud service market.

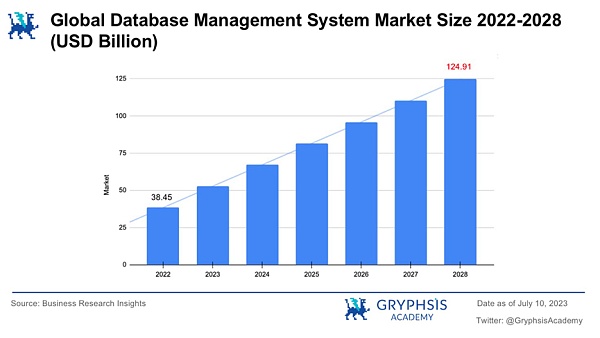

Today, as network bandwidth and hardware continue to improve, this is an extremely large market. According to Business Research, the global database market will exceed $120 billion by 2028.

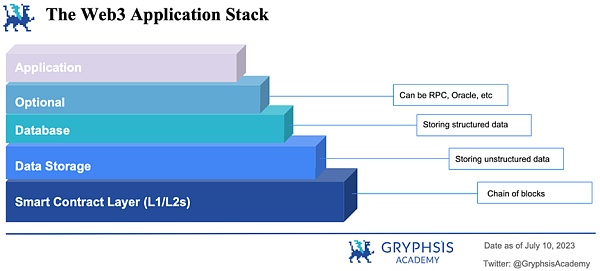

To create truly decentralized applications, decentralized databases should also be included in the Web3 application architecture. This architecture can be divided into four main components: the smart contract layer, file storage, databases, and the general infrastructure layer.

The smart contract layer corresponds to the Layer 1 chain, while the general infrastructure layer includes, but is not limited to, oracles, RPC, access control, identity, off-chain computing, and indexing networks.

Although not obvious to users, both the file storage and database layers play a vital role in the development of Web3 applications. They provide the necessary infrastructure for storing structured and unstructured data, which is a requirement for various applications. Due to the nature of this report, these two components are described in further detail below.

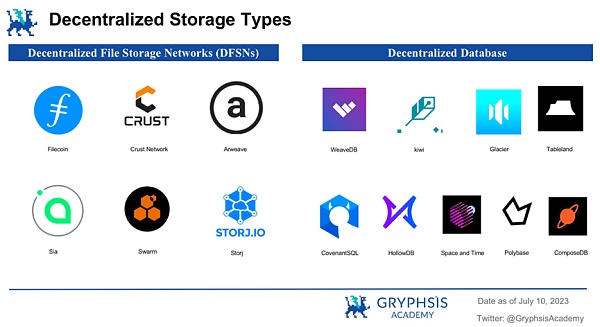

Decentralized file storage networks (DFSNs) such as Filecoin, Arweave, and Crust are mainly used for persistent storage of unstructured data, which does not follow a predefined format and does not require frequent updates or retrieval. DFSNs are therefore commonly used to store various types of static data, such as text documents, images, audio files, and videos.

One advantage of this type of data in a distributed storage architecture is the ability to move data storage closer to the endpoint using edge storage devices or edge data centers. This storage method provides lower network communication costs, lower interaction latency, and lower bandwidth overhead. It also provides greater adaptability and scalability.

For example, 1 TB of storage on Storj costs $4.00 per month, while the same amount of data on Amazon S3, the market-leading enterprise cloud storage solution, costs approximately $23.00 per month.
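As a rough illustration of what these quoted prices mean over time, the sketch below simply multiplies them out. It uses only the two per-TB monthly figures above and ignores egress, request, and tiering fees, which real bills include; the helper name `storage_cost` is hypothetical.

```python
# Per-TB monthly storage prices quoted in the text (USD). Real bills also
# include egress, request and tiering charges, which this sketch ignores.
PRICE_PER_TB_MONTH = {"Storj": 4.00, "Amazon S3": 23.00}


def storage_cost(provider: str, terabytes: float, months: int) -> float:
    """Flat storage cost of holding `terabytes` for `months` months."""
    return PRICE_PER_TB_MONTH[provider] * terabytes * months


if __name__ == "__main__":
    for name in PRICE_PER_TB_MONTH:
        print(f"{name}: ${storage_cost(name, 10, 12):,.2f} for 10 TB over a year")
```

For 10 TB held for one year this gives $480 on Storj versus $2,760 on S3. Because both prices are flat per TB, the 5.75x ratio is the same at any volume.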

Users benefit from more cost-effective storage options compared to traditional centralized cloud storage solutions. The decentralized nature of DFSNs also provides greater data security, privacy, and control, as data is distributed among multiple nodes or miners rather than stored in a single centralized server.

The limitations of storing unstructured files in DFSNs are obvious, especially for efficient data retrieval and updates. These architectures are not ideal for data that needs to be updated frequently.

In such cases, traditional databases such as MySQL and Redis are more suitable options for developers, having been extensively optimized and battle-tested during the Web2 era.

Especially in applications such as blockchain games and social networks, storing structured data is an unavoidable requirement. Traditional databases provide an efficient way to manage large amounts of dynamic data and control access to it. They provide capabilities such as indexing, querying, and data manipulation that are critical to applications that rely on structured data.

Therefore, whether built on DFSNs or on self-developed underlying storage, high-performance, high-availability decentralized databases are a very important branch of the storage field.

Among current Web3 projects, decentralized file storage networks (DFSNs) can be broadly divided into two categories. The first includes projects built on IPFS, such as Filecoin and Crust. The second includes projects such as Arweave (AR), Sia, and Storj, which have their own underlying protocols or storage systems. Although their implementations differ, they all face the same challenge: ensuring truly decentralized storage while enabling efficient data storage and retrieval.

Blockchains themselves are not suited to storing large amounts of data on-chain; the associated costs and the impact on block space make this approach impractical. An ideal decentralized storage network must therefore be able to store, retrieve, and maintain data while ensuring that all participants in the network are incentivized to do their work and abide by the trust mechanisms of the decentralized system.

We will evaluate the technical characteristics, advantages and disadvantages of several mainstream projects from the following aspects:

Data storage format: The storage protocol layer needs to determine how the data should be stored, such as whether the data should be encrypted and whether the data should be stored as a whole or divided into small hashed chunks.

Data replication and backup: The protocol must decide where to store the data, e.g. how many nodes should hold it, whether all data should be replicated to all nodes, or whether each node should receive a different fragment to further protect data privacy. The storage format and the way data is propagated determine the probability that data remains available on the network, i.e. its durability as devices fail over time.

Long-term data availability: The network must ensure that data is available when and where it is needed. This means designing incentives that prevent storage nodes from deleting old data over time.

Proof of stored data: The network needs to know not only where data is stored; storage nodes must also be able to prove that they actually store the data they committed to store, so that their share of incentives can be determined.

Storage price discovery: Nodes expect to be paid for storing files persistently, so the network needs a way to determine the price of storage.

As just mentioned, Filecoin and Crust use IPFS as the network protocol and communication layer for transferring files between peers and storing them on nodes.

The difference is that Filecoin uses erasure coding (EC) to achieve scalability of data storage. Erasure coding (EC) is a data protection method that divides data into fragments, expands and encodes redundant data blocks, and stores them in different locations, such as disks, storage nodes, or other geographical locations.

EC creates a mathematical function to describe a set of numbers, allowing them to be checked for accuracy and restored if one of the numbers is missing.

Source: usenix

The basic equation is n = k + m: the total number of blocks n equals the number of original data blocks k plus the number of parity (check) blocks m.

The m parity blocks are computed from the k original data blocks. By storing these k + m blocks on k + m hard disks, the system can tolerate the failure of any m disks: when disks fail, all of the original data blocks can be recomputed from any k surviving blocks. Likewise, if the k + m blocks are spread across different storage nodes, the system can tolerate the failure of any m nodes.
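To make n = k + m concrete, here is a minimal Reed-Solomon-style sketch over the prime field GF(257), chosen only for readability. Production systems typically use GF(2^8) arithmetic and optimized libraries, and nothing here reflects the actual encoders used by Filecoin, Sia, or Storj; all function names are hypothetical. Each byte column of the k data blocks is treated as values of a polynomial of degree < k, and the m parity blocks are that polynomial evaluated at further points, so any k blocks determine the rest.

```python
P = 257  # prime modulus: every byte value 0..255 is a field element


def _lagrange_eval(points, x):
    """Evaluate, at x, the unique polynomial of degree < len(points)
    that passes through `points` [(xi, yi), ...], working modulo P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # pow(den, P - 2, P) is den's modular inverse (Fermat's little theorem)
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def encode(blocks, m):
    """Turn k equal-length data blocks into n = k + m shares.
    Shares 0..k-1 are the data itself (a systematic code); shares
    k..k+m-1 are parity, computed byte column by byte column."""
    k, size = len(blocks), len(blocks[0])
    if k + m > P:
        raise ValueError("k + m must not exceed the field size")
    shares = [(i, list(b)) for i, b in enumerate(blocks)]
    for x in range(k, k + m):
        parity = [_lagrange_eval([(i, blocks[i][c]) for i in range(k)], x)
                  for c in range(size)]
        shares.append((x, parity))  # parity symbols may equal 256
    return shares


def decode(shares, k):
    """Rebuild the k original blocks from ANY k shares with distinct indices."""
    if len(shares) < k:
        raise ValueError("need at least k surviving shares")
    chosen = shares[:k]
    size = len(chosen[0][1])
    return [bytes(_lagrange_eval([(x, vals[c]) for x, vals in chosen], i)
                  for c in range(size))
            for i in range(k)]
```

With k = 3 and m = 2, any two of the five shares can be lost: `decode([shares[1], shares[3], shares[4]], 3)` still returns the original three blocks.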

When new data is to be stored on the Filecoin network, the user must connect to a storage provider through the Filecoin storage market, negotiate storage terms, and then place a storage order. The user must also decide which type of erasure coding to use and the replication factor. With erasure coding, the data is broken into fixed-size fragments, and each fragment is expanded and encoded with redundant data, so only a subset of the fragments is needed to reconstruct the original file. The replication factor is the number of times the data should be copied into additional storage sectors of a storage miner. Once the storage miner and the user agree on the terms, the data is transferred to the storage miner and stored in its storage sectors.

Crust stores data differently: it copies the data to a fixed number of nodes. When a storage order is submitted, the data is encrypted and sent to at least 20 Crust IPFS nodes (the number of nodes is adjustable). At each node, the data is divided into many smaller fragments, which are hashed into a Merkle tree. Each node retains all of the fragments that make up the complete file.
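A minimal sketch of the fragment-and-hash step, assuming SHA-256, a hypothetical fixed fragment size, and the common convention of pairing an unpaired node with itself. Crust's actual parameters are not given here, and encryption and the TEE are omitted. Because every replica holds all fragments, each of the (at least) 20 nodes should derive the same root for the same file.

```python
import hashlib


def split_into_fragments(data: bytes, fragment_size: int):
    """Cut a file into fixed-size fragments; the last may be shorter."""
    return [data[i:i + fragment_size] for i in range(0, len(data), fragment_size)]


def merkle_root(fragments) -> bytes:
    """Hash every fragment, then hash adjacent pairs level by level until a
    single root remains. An unpaired node is paired with itself."""
    if not fragments:
        raise ValueError("a Merkle tree needs at least one fragment")
    level = [hashlib.sha256(f).digest() for f in fragments]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [hashlib.sha256(level[i] + level[i + 1]).digest()
                 for i in range(0, len(level), 2)]
    return level[0]
```

Changing a single byte anywhere in the file changes its fragment hash and therefore the root, which is what makes the root usable as a compact fingerprint in work reports and later checks.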

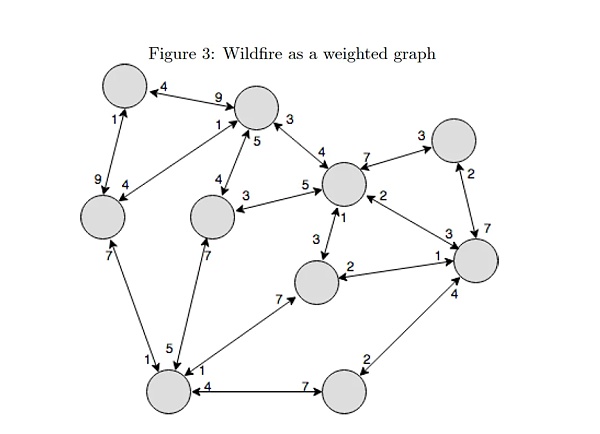

Arweave also replicates complete files, but takes a slightly different approach. After a transaction is submitted to the Arweave network, the first node stores the data as blocks on the blockweave (Arweave's blockchain structure). From there, a very aggressive algorithm called Wildfire ensures that data is replicated quickly across the network, because for any node to mine the next block, it must prove that it has access to a previous block.

Sia and Storj also use EC to store files. By contrast, Crust's approach of storing 20 complete copies of the data on 20 nodes is highly redundant, which makes the data very durable, but it is very inefficient in terms of bandwidth. Erasure coding achieves redundancy more efficiently, improving data durability without a large bandwidth cost. Sia and Storj distribute EC shards directly to a specific number of nodes to meet given durability requirements.

We explain the data storage format first because the choice of technical path directly determines how each protocol's proof and incentive layers differ: that is, how to verify that data meant to be stored on a specific node is actually stored there. Only after this verification can the network use additional mechanisms to ensure that data remains stored over time (i.e., that storage nodes do not delete data after the initial storage operation).

Such mechanisms include algorithms that prove data was stored for a specific period of time, financial incentives for successfully fulfilling storage requests for their full duration, and penalties for failing to fulfill them. This section introduces the storage and incentive mechanisms of each network.

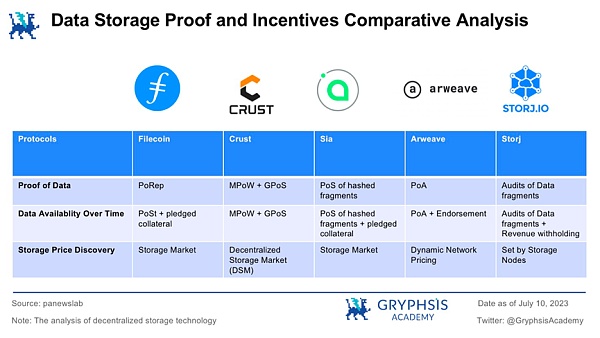

On Filecoin, before accepting any storage request, storage miners must deposit collateral into the network as a commitment to provide storage. Once this is done, miners can offer storage and price their services on the storage market. Filecoin also introduced PoRep and PoSt to verify miners' storage.

Source: Filecoin

Proof of Replication (PoRep): Miners must prove that they store a unique copy of the data. The unique encoding ensures that two storage deals for the same data cannot reuse the same disk space.

Proof of Spacetime (PoSt): Throughout the life of a storage deal, storage miners must prove every 24 hours that they are still dedicating storage space to this data.

After submitting the proofs, the storage provider receives FIL in return. If it fails to keep its commitment, its pledged tokens are slashed.

Over time, storage miners must keep proving that they still hold the stored data by running the proof algorithm regularly. However, such repeated checks require a lot of bandwidth.

Filecoin's novelty is that, to prove data storage over time while reducing bandwidth usage, miners generate proofs of replication sequentially, using the output of the previous proof as the input to the current one. This is performed over multiple iterations corresponding to the duration for which the data is to be stored.
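The chaining idea can be illustrated with a toy hash chain. This is not Filecoin's PoRep or PoSt, which rely on sector sealing and succinct (zk-SNARK) proofs so that verifiers never need the data; it only shows why feeding each proof's output into the next forces the steps to be done in order and to touch the data every time. All names are hypothetical.

```python
import hashlib


def chained_proofs(data: bytes, seed: bytes, iterations: int):
    """Toy sequential proof chain: step i hashes the previous output, the
    step number and the stored data, so step i cannot be computed before
    step i - 1, and every step needs the data itself."""
    if iterations < 1:
        raise ValueError("need at least one iteration")
    proofs, prev = [], seed
    for i in range(iterations):
        prev = hashlib.sha256(prev + i.to_bytes(8, "big") + data).digest()
        proofs.append(prev)
    return proofs


def verify_final(data: bytes, seed: bytes, iterations: int, claimed: bytes) -> bool:
    """Toy check by recomputation (a real verifier would not hold the data)."""
    return chained_proofs(data, seed, iterations)[-1] == claimed
```

Only the final digest needs to be transmitted, which is the bandwidth saving the text describes; a miner that discarded the data partway through could not produce it.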

As with Filecoin, Crust relates to IPFS as an incentive layer to a storage layer. In Crust Network, nodes must also deposit collateral before they can accept storage orders. The amount of storage space a node provides to the network determines the maximum amount of collateral it can pledge, which in turn allows the node to participate in block production. This algorithm is called Guaranteed Proof of Stake (GPoS), and it guarantees that only nodes with a stake in the network can provide storage space.

Source: Crust Wiki

Unlike Filecoin, Crust's storage price discovery relies on the decentralized storage market (DSM): nodes and users are automatically connected to the DSM, which selects which nodes will store the user's data. Storage prices are determined by user demand (such as storage duration, storage space, and replication factor) and network factors (such as congestion).

When a user submits a storage order, the data is sent to multiple nodes on the network, each of which uses the machine's Trusted Execution Environment (TEE) to split the data and hash the fragments. Because the TEE is a closed hardware component that is inaccessible even to the hardware owner, node owners cannot rebuild the files themselves.

After the file is stored on the node, a work report containing the file hash is published to the Crust blockchain along with the node's remaining storage. To ensure that the data stays stored over time, the network periodically requests random data checks: inside the TEE, a random Merkle tree hash is retrieved together with the associated file fragment, which is decrypted and rehashed. The new hash is then compared with the expected hash. This proof-of-storage implementation is called Meaningful Proof of Work (MPoW).

GPoS is a PoS consensus algorithm that uses storage resources to define staking quotas. From the workload reports produced by the first-layer MPoW mechanism, the storage workload of every node is known on the Crust chain, and the second-layer GPoS algorithm computes a staking quota for each node based on that workload. PoS consensus is then carried out on this basis: the block reward is proportional to each node's staked amount, and the upper limit of each node's stake is bounded by the amount of storage it provides.
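The two-layer rule can be sketched as a capped proportional split. The linear quota `stake_per_tb` is a hypothetical parameter chosen for illustration; Crust's actual quota formula may differ, and in a real PoS chain rewards are earned block by block, so the function returns expected shares.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    storage_tb: float  # storage workload known from MPoW work reports
    staked: float      # tokens staked on the node


def effective_stakes(nodes, stake_per_tb):
    """Cap each node's stake at a quota proportional to its storage."""
    return {n.name: min(n.staked, n.storage_tb * stake_per_tb) for n in nodes}


def expected_rewards(nodes, stake_per_tb, block_reward):
    """Expected reward per node, proportional to its capped stake."""
    eff = effective_stakes(nodes, stake_per_tb)
    total = sum(eff.values())
    if total == 0:
        return {name: 0.0 for name in eff}
    return {name: block_reward * s / total for name, s in eff.items()}
```

For example, if node `a` provides 10 TB and node `b` 2 TB, and both stake 500 tokens with `stake_per_tb = 50`, then `b`'s stake is capped at 100 and it expects one fifth of `a`'s reward, despite staking the same amount.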

Arweave uses a pricing model very different from the first two. Its core idea is that all data stored on Arweave is permanent, so its storage price is based on the cost of storing the data on the network for 200 years.
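If the cost of storage is assumed to fall by a constant fraction each year, the 200-year price is a finite geometric series. Both the constant-decline assumption and the parameter values are placeholders; Arweave's published pricing uses its own parameters and may include further terms, such as the number of replicas. The function names are hypothetical.

```python
def perpetual_price(cost_per_gb_year: float, annual_decline: float, years: int = 200) -> float:
    """Total cost of keeping 1 GB for `years` years when the yearly cost of
    storage falls by the fraction `annual_decline` each year."""
    return sum(cost_per_gb_year * (1 - annual_decline) ** t for t in range(years))


def perpetual_price_closed_form(cost_per_gb_year, annual_decline, years=200):
    """Same value via the geometric-series formula c * (1 - q**n) / (1 - q)."""
    q = 1 - annual_decline
    if q == 1:
        return cost_per_gb_year * years
    return cost_per_gb_year * (1 - q ** years) / (1 - q)
```

Even a modest decline rate bounds the total: at 5% per year, 200 years of storage costs less than 20 times the first year's price, which is what makes a one-time fee for "permanent" storage economically plausible.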

The underlying Arweave data network is built on the blockweave block-generation model. A typical blockchain, such as Bitcoin, is a single-chain structure: each block links to the previous block in the chain. In the blockweave, each block links not only to the previous block but also to a randomly chosen recall block from earlier in the chain's history. The recall block is determined by the hash and the height of the previous block, which makes the choice deterministic but unpredictable. To mine or validate a new block, a miner needs access to the recall block's data.

Arweave's Proof of Access (PoA) uses the RandomX hash algorithm. A miner's probability of producing a block = the probability of holding the randomly chosen recall block × the probability of being the first to find a valid hash. Miners must find a suitable hash through the PoW mechanism to produce a new block, but the nonce search depends on both the previous block and the recall block's data. The randomness of recall blocks encourages miners to store more blocks, giving them a higher chance of success and more block rewards. PoA also incentivizes miners to store "scarce blocks", i.e. blocks that others have not stored, to gain a greater block-production probability and rewards.
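A simplified sketch of recall selection and its effect on eligibility. It uses SHA-256 in place of RandomX and treats each round's recall as a uniform draw over past heights; Arweave's real recall logic has changed across protocol versions, and all names here are hypothetical. The point it illustrates is that a miner holding a fraction f of the history can take part in roughly a fraction f of rounds.

```python
import hashlib


def recall_block_index(prev_block_hash: bytes, prev_height: int) -> int:
    """Choose a recall block among heights 0..prev_height-1 from the previous
    block's hash and height: deterministic, but unknown until that block exists."""
    if prev_height < 1:
        raise ValueError("need at least one earlier block")
    seed = hashlib.sha256(prev_block_hash + prev_height.to_bytes(8, "big")).digest()
    return int.from_bytes(seed, "big") % prev_height


def eligible_fraction(stored_heights, chain_height, trials):
    """Share of simulated rounds in which a miner holds the recall block.
    Simplification: every round uses the same chain height."""
    hits = 0
    for t in range(trials):
        fake_prev_hash = hashlib.sha256(f"block-{t}".encode()).digest()
        if recall_block_index(fake_prev_hash, chain_height) in stored_heights:
            hits += 1
    return hits / trials


def block_probability(recall_prob: float, hash_share: float) -> float:
    """P(block) = P(holds recall block) * P(first to find a valid hash)."""
    return recall_prob * hash_share
```

A miner storing half of a 100-block history is eligible in about half of the simulated rounds, and with 10% of the network's hash power its chance per block would be about 0.5 × 0.1 = 0.05.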

Source: Arweave Yellow Paper

Since only a one-time fee is charged and subsequent data reads are free, service sustainability requires that users can access the data at any time. So how can miners be motivated, over the long term, to serve data reads for zero income?

Source: Arweave Yellow Paper

Arweave draws on the game-theoretic strategy of BitTorrent, whose optimistic tit-for-tat algorithm makes nodes cooperate optimistically with other nodes while punishing uncooperative behavior. Building on this, Arweave designed Wildfire, a node-scoring system with implicit incentives.

Each node in the Arweave network scores its neighbors based on the amount of data received from them and their response speed, and sends requests preferentially to higher-ranked peers. The higher a node's ranking, the higher its credibility, the greater its probability of producing blocks, and the more likely it is to obtain scarce blocks.
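One way such local scoring could work, as a sketch: the throughput-style formula below (bytes delivered per second of latency) is a hypothetical stand-in, since the text does not give Wildfire's actual weighting. Each node keeps these statistics privately, consistent with the point that there is no network-wide consensus on rankings.

```python
from dataclasses import dataclass


@dataclass
class PeerStats:
    bytes_received: int = 0
    total_latency_s: float = 0.0
    responses: int = 0

    def record(self, nbytes: int, latency_s: float) -> None:
        """Log one response: how much data arrived and how long it took."""
        self.bytes_received += nbytes
        self.total_latency_s += latency_s
        self.responses += 1

    def score(self) -> float:
        """Bytes delivered per second of waiting; silent peers score 0."""
        if self.responses == 0:
            return 0.0
        return self.bytes_received / max(self.total_latency_s, 1e-9)


def rank_peers(stats):
    """Peer ids ordered best-first; new requests go to the top of the list."""
    return sorted(stats, key=lambda peer: stats[peer].score(), reverse=True)
```

A peer that serves the same data four times faster ranks higher, receives more requests, and so gets more chances to exchange scarce blocks, which is the implicit reward the text describes.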

Wildfire is in effect a game, and a highly scalable one. There is no consensus on "rankings" among nodes, and nodes are not obliged to report how they generate and determine rankings. Good and bad behavior between nodes is regulated by an adaptive mechanism that decides rewards and penalties for new behavior.

As on Filecoin and Crust, storage nodes on Sia must deposit collateral to provide storage services. On Sia, nodes decide how much collateral to post: collateral directly affects the price of storage for users, while posting low collateral means a node has little to lose if it disappears from the network. These opposing forces push nodes toward a balanced level of collateral.

Users connect to storage nodes through the automated storage market, which functions similarly to Filecoin: the node sets the storage price, and the user sets the expected price based on the target price and expected storage duration. Users and nodes are then automatically connected to each other.

Source: Crypto Exchange

Among these projects, Sia's consensus protocol takes the simplest approach: storage contracts are stored on-chain. After the user and node agree on a storage contract, funds are locked in the contract, and erasure coding is used to split the data into fragments. Each fragment is individually encrypted with a different key and hashed, and each fragment is then copied to several different nodes. The storage contract recorded on the Sia blockchain holds the terms of the agreement as well as the Merkle root hash of the data.

To ensure that data is stored for the expected duration, storage proofs are periodically submitted to the network. These proofs are created from a randomly selected portion of the original stored file together with a list of hashes from the file's Merkle tree, whose root is recorded on the blockchain. Nodes are rewarded for each storage proof they submit over the contract period, with a final payment when the contract completes.
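A compact sketch of this challenge-response pattern, assuming SHA-256 and self-pairing of unpaired nodes; Sia's real hash function, segment size, and tree rules may differ, and all names are hypothetical. The chain keeps only the Merkle root; the host proves possession of a randomly chosen segment by sending it along with the sibling hashes on its path to the root.

```python
import hashlib


def _h(b: bytes) -> bytes:
    return hashlib.sha256(b).digest()


def build_levels(segments):
    """All tree levels, leaves first; an unpaired node is paired with itself."""
    level = [_h(s) for s in segments]
    levels = [level]
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level
        level = [_h(padded[i] + padded[i + 1]) for i in range(0, len(padded), 2)]
        levels.append(level)
    return levels


def challenge_index(seed: bytes, n_segments: int) -> int:
    """Derive the challenged segment from an unpredictable seed (e.g. a block hash)."""
    return int.from_bytes(_h(seed), "big") % n_segments


def prove(segments, index):
    """Host side: return the challenged segment and its sibling hashes."""
    proof, i = [], index
    for level in build_levels(segments)[:-1]:
        sibling = i ^ 1
        proof.append(level[sibling] if sibling < len(level) else level[i])
        i //= 2
    return segments[index], proof


def verify(root, segment, index, proof):
    """Chain side: needs only the Merkle root recorded in the contract."""
    node = _h(segment)
    for sibling in proof:
        node = _h(node + sibling) if index % 2 == 0 else _h(sibling + node)
        index //= 2
    return node == root
```

The proof grows only logarithmically with file size, so the chain can check possession of one random segment without ever storing or transferring the whole file.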

On Sia, storage contracts last at most 90 days. To store files beyond 90 days, users must manually connect to the network with the Sia client software to extend the contract for another 90 days. Skynet, another layer on top of Sia similar to Filecoin's Web3.Storage or NFT.Storage platforms, automates this process by having Skynet's own client software instance renew contracts on users' behalf. While this is a workaround, it is not a protocol-level solution in Sia.
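The renewal bookkeeping that Skynet automates can be sketched as a simple schedule. Only the 90-day limit comes from the text; the seven-day safety margin and the function name are hypothetical.

```python
from datetime import date, timedelta

MAX_CONTRACT_DAYS = 90  # Sia contract limit described in the text


def renewal_schedule(start: date, total_days: int, margin_days: int = 7):
    """Dates on which a client (or a service such as Skynet) must renew
    contracts to keep a file stored for `total_days`, renewing each
    contract `margin_days` before it expires."""
    if not 0 <= margin_days < MAX_CONTRACT_DAYS:
        raise ValueError("margin must be shorter than a contract")
    end = start + timedelta(days=total_days)
    expiry = start + timedelta(days=MAX_CONTRACT_DAYS)
    renewals = []
    while expiry < end:
        renew_on = expiry - timedelta(days=margin_days)
        renewals.append(renew_on)
        expiry = renew_on + timedelta(days=MAX_CONTRACT_DAYS)
    return renewals
```

Keeping a file for one year from 1 January 2023 requires four renewals; a user who misses one loses the guarantee, which is why automating this outside the protocol matters.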

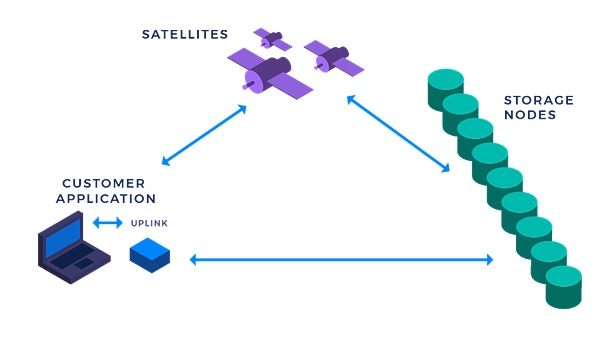

In the Storj decentralized storage network, there is no blockchain or blockchain-like structure. No blockchain also means the network has no network-wide consensus about its state. Instead, tracking data storage locations is handled by satellite nodes, and data storage is handled by storage nodes. Satellite nodes can decide which storage nodes to use to store data, and storage nodes can decide which satellite nodes to accept storage requests from.

In addition to tracking data locations across storage nodes, satellites are also responsible for billing and for paying storage nodes for storage and bandwidth usage. Under this arrangement, storage nodes set their own prices, and satellites connect them with users as long as users are willing to pay those prices.

Source: Storj GitHub

When a user wants to store data on Storj, the user must select a satellite node to connect to and share their specific storage requirements. The satellite then selects storage nodes that meet these requirements and connects them to the user. The user transfers the file directly to the storage nodes while paying the satellite. The satellite then pays the storage nodes monthly for the files stored and the bandwidth used.
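The satellite's two roles described here, matching and paying, can be sketched as below, following the article's description that nodes set their own prices. All names, the cheapest-first selection rule, and the separate egress price are assumptions; Storj's production node-selection and payout rules may differ.

```python
from dataclasses import dataclass


@dataclass
class StorageNode:
    node_id: str
    price_per_tb_month: float  # set by the node operator
    free_tb: float


def select_nodes(nodes, needed_tb_per_node: float, count: int, max_price: float):
    """Satellite-side selection: keep nodes with enough free space whose
    price the user accepts, cheapest first, and take `count` of them."""
    candidates = [n for n in nodes
                  if n.free_tb >= needed_tb_per_node and n.price_per_tb_month <= max_price]
    candidates.sort(key=lambda n: n.price_per_tb_month)
    if len(candidates) < count:
        raise RuntimeError("not enough nodes satisfy the request")
    return candidates[:count]


def monthly_payout(node: StorageNode, stored_tb: float, egress_tb: float,
                   egress_price_per_tb: float) -> float:
    """What the satellite owes one node for a month: storage plus bandwidth."""
    return node.price_per_tb_month * stored_tb + egress_price_per_tb * egress_tb
```

Because both functions run on the satellite, the operator of the satellite effectively controls matching and payment, which is the centralization concern raised next.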

Such a technical design is in fact highly centralized: the development of satellite nodes is controlled entirely by the project team, which also means the project team holds pricing power. Although the centralized architecture gives Storj efficient, high-performance services, as mentioned at the beginning, distributed storage does not necessarily mean decentralized storage. STORJ, the ERC-20 token Storj issued on Ethereum, does not use any smart contract functionality; it essentially just provides an additional payment method.

This is closely tied to Storj's business model. Storj focuses on enterprise-level storage services, competing directly with Amazon's S3, and has formed a partnership with Microsoft Azure, through which it hopes to offer enterprises services that match or even exceed Amazon's storage on various performance metrics. Although performance data are not available, its storage costs are indeed much lower than Amazon's, which to some extent shows that the decentralized storage business model can work.

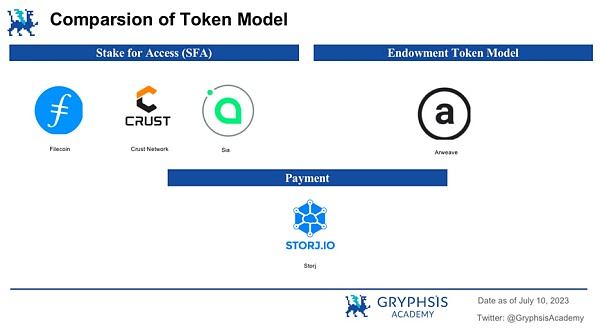

The choice of technical path also shapes, to some extent, the design of the token model. Each of the major decentralized storage networks has its own economic model.

Filecoin, Crust, and Sia all use the Stake for Access (SFA) token model. In this model, storage providers must lock the network’s native assets in order to accept storage transactions. The amount locked is proportional to the amount of data the storage provider can store. This creates a situation where storage providers must increase their collateral as they store more data, thereby increasing the demand for network-native assets. In theory, the price of an asset should increase as the amount of data stored on the network increases.
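The linear relationship described here can be sketched as follows, assuming a single flat collateral rate per TB. That rate is a hypothetical parameter; Filecoin, Crust, and Sia each compute collateral differently, and the function names are illustrative only.

```python
def required_collateral(stored_tb: float, collateral_per_tb: float) -> float:
    """Collateral a provider must lock for the data it stores."""
    return stored_tb * collateral_per_tb


def top_up_needed(posted: float, stored_tb: float, new_deal_tb: float,
                  collateral_per_tb: float) -> float:
    """Extra tokens to lock before accepting a new deal of `new_deal_tb`."""
    required = required_collateral(stored_tb + new_deal_tb, collateral_per_tb)
    return max(0.0, required - posted)


def network_locked(providers_tb, collateral_per_tb: float) -> float:
    """Total native tokens locked across providers: grows with stored data."""
    return sum(required_collateral(tb, collateral_per_tb) for tb in providers_tb)
```

A provider holding 8 TB with 100 tokens posted must lock 20 more tokens, at 10 tokens per TB, before accepting a 4 TB deal; summed across providers, every new TB stored removes more native tokens from circulation.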

Arweave uses a unique endowment token model, in which a significant portion of each transaction's one-time storage fee is added to an endowment pool. Over time, the tokens in the endowment accrue interest in the form of storage purchasing power (as the cost of storage falls), and they are gradually distributed to miners to ensure that data persists on the network. This endowment model effectively locks tokens up for the long term: as storage demand on Arweave grows, more tokens are removed from circulation.

Compared with the other networks, Storj's token model is the simplest. Its token, $STORJ, is the means of payment for storage services on the network, for both end users and storage providers, as well as for all other network payments. The price of $STORJ is therefore a direct function of demand for Storj's services.

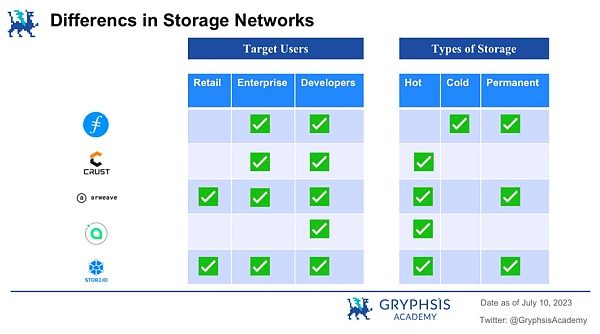

It's hard to say that one storage network is objectively better than another. When designing a decentralized storage network, there is no single best solution. Depending on the purpose of the network and the problem it is trying to solve, there must be tradeoffs in technical design, token economics, community building, and more.

Filecoin mainly provides cold storage solutions for enterprises and application development. Its competitive pricing and accessibility make it an attractive alternative for Web2 entities seeking cost-effective storage for large amounts of archived data.

Crust ensures high redundancy and fast retrieval, making it suitable for high-traffic dApps and for efficient retrieval of popular NFT data. However, because it has no mechanism for maintaining redundancy over time, its ability to provide permanent storage is severely limited.

Arweave stands out from other decentralized storage networks with its concept of permanent storage, which makes it especially popular for storing Web3 data such as blockchain state data and NFTs. The other networks are primarily optimized for hot or cold storage.

Sia targets the hot storage market, primarily focusing on developers looking for a fully decentralized and private storage solution with fast retrieval times. While it currently lacks native AWS S3 compatibility, access layers like Filebase provide such a service.

Storj appears more comprehensive but sacrifices some decentralization. It significantly lowers the barrier to entry for AWS users and is optimized for its key target audience, enterprise hot storage, providing Amazon S3-compatible cloud storage.

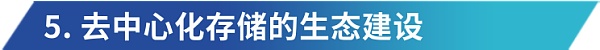

In terms of ecosystem building, we can discuss two types of projects: the first is upper-layer dApps built entirely on a storage network, which aim to enhance that network's functionality and ecosystem; the second is existing decentralized applications and protocols, such as OpenSea and AAVE, that choose to integrate with a specific storage network to become more decentralized.

In this section, we will focus on Filecoin, Arweave, and Crust, since Sia and Storj do not have outstanding performance in terms of ecosystem.

Source: Filecoin

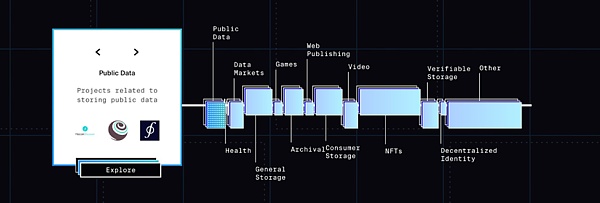

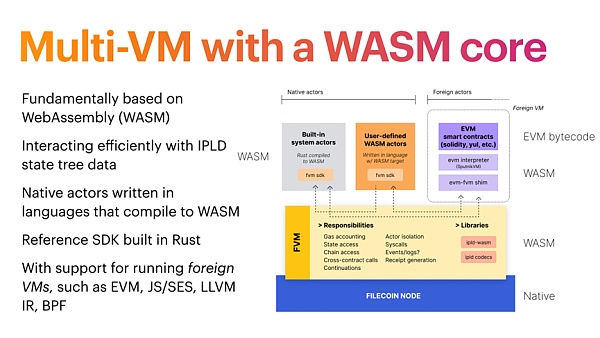

Filecoin's ecosystem map already shows 115 projects in the first category above, all built entirely on Filecoin's underlying infrastructure. Most of them focus on general storage, NFTs, and consumer storage. Another important milestone in the Filecoin ecosystem is the Filecoin Virtual Machine (FVM), which, like the Ethereum Virtual Machine (EVM), provides the environment needed to deploy and execute smart contract code.

Source: Filecoin

With FVM, the Filecoin network gained the ability to execute smart contracts on top of its existing storage network. In FVM, developers do not program the users' stored data itself; instead, they use smart contracts to define, in a trustless manner, which operations should be performed automatically or conditionally on that data once it is stored in the network. Conceivable scenarios include:

Distributed computing on data stored on Filecoin - computing on the data where it is stored, without moving it first

Crowdfunded dataset preservation - for example, anyone can fund the storage of socially important data, such as crime data or climate-related data

Smart storage markets - for example, dynamically adjusting storage rates based on time of day, replication level, and availability within a given region

Centuries-long storage and perpetual hosting - for example, storing data so that it can be used for generations

Co-located NFTs - for example, storing NFT content together with the NFT's registration records and tracking them

Time-locked data retrieval - for example, unlocking a dataset only after a company's records are made public

Collateralized lending - for example, issuing loans to storage providers for specific purposes, such as accepting FIL+ deal proposals from specific users or adding capacity within a certain time window

Source: Filecoin

At its core, the FVM is based on WebAssembly (WASM). This choice allows developers to write native upper-layer applications in any programming language that compiles to WASM, making it easier for Web3 developers to get started: they can use knowledge they already have and bypass the learning curve of a specific language.

Developers can also port existing Ethereum smart contracts with minimal (or no) changes to the source code. The ability to reuse audited and battle-tested smart contracts on the Ethereum network allows developers to save development costs and time, while users can enjoy their utility with minimal risk.

Also worth mentioning is Filecoin Plus, a program designed to subsidize users to store large, valuable data sets at a discount. Customers who want to upload data to the network can apply to a select group of members of the community called notaries, who review and allocate resources called DataCaps (data quotas) to customers. Customers can then use DataCap to subsidize their deals with storage providers.

The Filecoin Plus program brings several benefits: the Filecoin network becomes more active, as storing valuable data continuously generates demand for block space; customers get better service at highly competitive prices; and storage providers benefit from increased block rewards. Following the launch of Filecoin Plus, 18 times more data was stored in 2022 than in 2021.

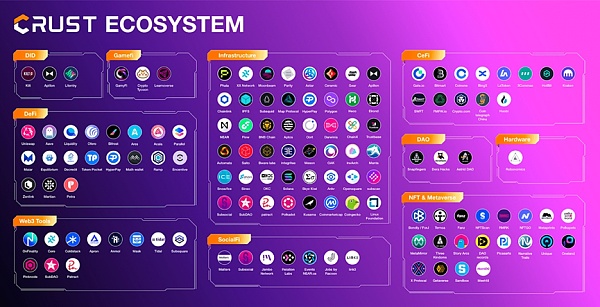

Compared with Filecoin and Arweave, Crust has a different path in ecosystem construction. It prefers to work directly with and serve existing Web3 applications rather than incentivizing third-party developers to build their own ecosystem applications on top of Crust. The main reason is that Crust is built on Polkadot. Although the Ethereum and Cosmos ecosystems were options considered by the Crust project team in the early stages, they are not sufficiently compatible with their technical paths. Crust prefers Polkadot’s Substrate framework for the highly customizable development space, on-chain upgrades, and on-chain governance it provides.

Source: Crust Network

Crust does a great job with developer support. It offers the Crust Development Kit, which includes a JS SDK, GitHub Actions, shell scripts, and IPFS Scan to suit the integration preferences of different Web3 projects. The toolkit has already been integrated into various Web3 projects such as Uniswap, AAVE, Polkadot Apps, Liquidity, XX Messenger, and RMRK.

According to data provided on the official website, there are currently more than 150 projects integrated with Crust Network. A large portion of these applications (more than 34%) are DeFi projects. This is because DeFi projects often have high performance requirements for data retrieval.

As mentioned previously, Crust Network replicates data to at least 20 nodes and, in many cases, to more than 100. This requires more bandwidth up front, but retrieving data from multiple nodes simultaneously speeds up file retrieval and provides strong redundancy if a node fails or leaves the network. Crust Network relies on this high level of redundancy because, unlike other networks, it has no data replenishment or repair mechanism. Of these decentralized storage networks, Crust Network is the youngest.
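The redundancy argument can be illustrated with a simple model. The Python sketch below assumes node failures are independent and equally likely, which real networks do not guarantee, and it simulates network fetches with `time.sleep`. `fetch_from_replica`, `fetch_fastest`, and the node names are hypothetical stand-ins, not Crust APIs. The sketch shows why 20 replicas make total loss extremely unlikely, and why querying every replica at once returns data as soon as the quickest node answers.

```python
import time
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


def loss_probability(replicas: int, node_failure_prob: float) -> float:
    """Chance that every replica is lost, assuming independent, identical node failures."""
    if replicas < 1:
        raise ValueError("need at least one replica")
    if not 0.0 <= node_failure_prob <= 1.0:
        raise ValueError("probability must be in [0, 1]")
    return node_failure_prob ** replicas


def fetch_from_replica(node: str, latency_s: float, payload: bytes) -> tuple:
    """Hypothetical stand-in for a network request; latency is simulated with sleep."""
    time.sleep(latency_s)
    return node, payload


def fetch_fastest(replicas: dict, payload: bytes) -> tuple:
    """Query every replica in parallel and return the first response that arrives."""
    with ThreadPoolExecutor(max_workers=len(replicas)) as pool:
        futures = [
            pool.submit(fetch_from_replica, node, latency, payload)
            for node, latency in replicas.items()
        ]
        done, _ = wait(futures, return_when=FIRST_COMPLETED)
        # Exiting the `with` block still waits for the slower requests;
        # a real client would cancel or ignore them.
        return next(iter(done)).result()


for n in (1, 20, 100):
    print(n, loss_probability(n, 0.1))
print(fetch_fastest({"node-a": 0.3, "node-b": 0.01, "node-c": 0.2}, b"file"))
```

With a hypothetical 10% per-node failure rate, one replica loses data 10% of the time, while 20 independent replicas push the loss probability to about 1e-20. Correlated failures (for example, many nodes in one data center) would make the real figure worse.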

Source: Arweave, latest ecosystem landscape

As the picture above shows, Arweave also has a strong ecosystem. About 30 highlighted applications are built entirely on Arweave. Although that is fewer than Filecoin's 115 applications, they still meet users' basic needs and cover a wide range of areas, including infrastructure, exchanges, social, and NFTs.

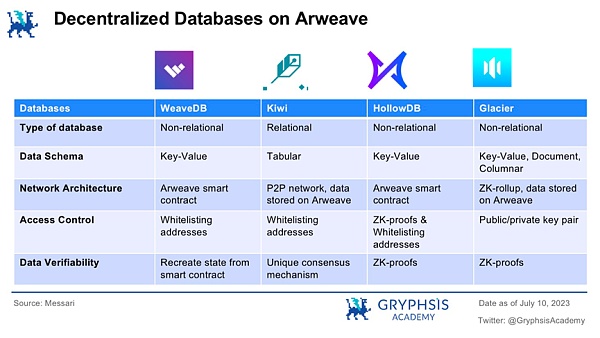

Of particular note are the decentralized databases built on Arweave. Arweave uses its blockweave primarily for data storage, while computation is performed off-chain on the user side. As a result, the cost of using Arweave depends solely on the amount of data stored on-chain.

This separation of computation from the chain, known as the storage-based consensus paradigm (SCP), addresses blockchain's scalability challenges. SCP works on Arweave because the data inputs are stored on-chain, so off-chain computations can be trusted to produce the same state that on-chain computation would.
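The core idea of SCP is that anyone who replays the same on-chain inputs with the same deterministic logic reaches the same state. A toy Python sketch can show this. `ONCHAIN_LOG` is a hypothetical list standing in for interactions read back from Arweave in block order, and the balance-transfer logic is illustrative only, not taken from any particular SCP contract.

```python
import hashlib
import json

# Hypothetical on-chain log: ordered inputs an SCP-style contract would read
# back from Arweave. Only the inputs are stored on-chain, never the state.
ONCHAIN_LOG = [
    {"op": "put", "key": "alice", "value": 10},
    {"op": "put", "key": "bob", "value": 5},
    {"op": "transfer", "src": "alice", "dst": "bob", "amount": 3},
    {"op": "transfer", "src": "bob", "dst": "carol", "amount": 100},  # rejected: insufficient balance
]


def apply_tx(state: dict, tx: dict) -> dict:
    """Deterministic transition: the same state and input always give the same result."""
    new_state = dict(state)
    if tx["op"] == "put":
        new_state[tx["key"]] = tx["value"]
    elif tx["op"] == "transfer" and new_state.get(tx["src"], 0) >= tx["amount"]:
        new_state[tx["src"]] = new_state.get(tx["src"], 0) - tx["amount"]
        new_state[tx["dst"]] = new_state.get(tx["dst"], 0) + tx["amount"]
    return new_state


def evaluate(log: list) -> dict:
    """Replay the whole log off-chain, as each user's client would."""
    state: dict = {}
    for tx in log:
        state = apply_tx(state, tx)
    return state


def state_digest(state: dict) -> str:
    """Canonical hash so two clients can check they reached the same state."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


client_a = evaluate(ONCHAIN_LOG)
client_b = evaluate(ONCHAIN_LOG)
print(client_a)  # {'alice': 7, 'bob': 8}
print(state_digest(client_a) == state_digest(client_b))  # True
```

The chain only has to store and order the inputs. Every client pays the computation cost locally, which is why the text can say that Arweave's cost depends only on the amount of data stored.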

The successful implementation of SCP opened the door to numerous databases on Arweave. Four examples:

WeaveDB: A key-value database built as a smart contract on Arweave. It uses whitelisted addresses for access control logic.

HollowDB: A key-value database built as a smart contract on Arweave. It uses whitelisted addresses for access control and ZK proofs to ensure data verifiability.

Kwil: A SQL database that runs its own P2P node network but uses Arweave as its storage layer. It uses public/private key pairs for access control logic and its own consensus mechanism for data verification.

Glacier: A NoSQL database with a ZK-Rollup architecture that uses Arweave as its data availability layer. It uses public/private key pairs for access control logic and ZK proofs for data verifiability.
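WeaveDB and HollowDB are described above as key-value stores that gate access with whitelisted addresses. The toy Python class below sketches that access-control pattern only. `WhitelistedKV`, its methods, and the address strings are hypothetical and do not reflect either project's API. A real deployment would derive the caller from a verified signature rather than trust a `caller` argument.

```python
class WhitelistedKV:
    """Toy key-value store: anyone can read, only whitelisted addresses can write."""

    def __init__(self, owner: str) -> None:
        self.owner = owner
        self.whitelist = {owner}
        self._data: dict = {}

    def add_writer(self, caller: str, address: str) -> None:
        # Only the owner may change the whitelist.
        if caller != self.owner:
            raise PermissionError(f"{caller} cannot modify the whitelist")
        self.whitelist.add(address)

    def put(self, caller: str, key: str, value) -> None:
        # In a real system, `caller` would come from a verified signature.
        if caller not in self.whitelist:
            raise PermissionError(f"{caller} is not whitelisted")
        self._data[key] = value

    def get(self, key: str):
        return self._data.get(key)


db = WhitelistedKV(owner="addr-owner")
db.add_writer("addr-owner", "addr-app")
db.put("addr-app", "profile:42", {"name": "alice"})
try:
    db.put("addr-stranger", "profile:42", {"name": "mallory"})
except PermissionError as err:
    print("rejected:", err)
print(db.get("profile:42"))  # {'name': 'alice'}
```

Kwil and Glacier, by contrast, are described as keying access on public/private key pairs. In this sketch that would mean replacing the whitelist check with signature verification.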

The growth of decentralized storage depends on several core factors, which fall into three major categories: overall market prospects, technology, and public awareness. These factors are interrelated and complementary, and each can be broken down into more granular subcategories. The following paragraphs examine each factor in more detail.

As the Internet pervades contemporary life, cloud storage services matter to almost everyone. The global cloud storage market reached a staggering $78.6 billion in 2022, and its growth shows no signs of slowing. One market study suggests the industry could be worth $183.75 billion by 2027.
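The two market figures above imply a growth rate that the text does not state. A quick Python calculation of the compound annual growth rate (CAGR) from $78.6 billion in 2022 to $183.75 billion in 2027 puts it at roughly 18.5% per year. The `cagr` helper is simply the standard formula, and the result is only as reliable as the forecast it is derived from.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the text, in billions of US dollars.
implied_rate = cagr(78.6, 183.75, years=2027 - 2022)
print(f"Implied CAGR 2022-2027: {implied_rate:.1%}")  # Implied CAGR 2022-2027: 18.5%
```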

Meanwhile, IDC predicts that the cloud storage market will be valued at US$376 billion by 2029. IDC's forecast that the global datasphere will expand to 175 zettabytes by 2025 further illustrates the growing demand for data storage. Given these promising prospects, decentralized storage, as an alternative to its Web2 counterparts, should benefit from overall market growth, which would push it onto an upward trajectory.

As one of the key pieces of Web3 infrastructure, decentralized storage is intrinsically linked to the expansion of the overall cryptocurrency market. Even setting aside the surge in storage demand, the decentralized storage market is likely to grow steadily if adoption of digital assets keeps rising. True decentralization cannot be achieved without decentralized infrastructure, and rising cryptocurrency adoption may signal a greater public understanding of why decentralization matters, driving the use of decentralized storage.

The value of data often lies in the insights that analysis extracts from it, and that analysis requires computation. However, the clear lack of mature computing products in the current decentralized storage market is a significant obstacle to large-scale data applications. Projects like Bacalhau and Shale are tackling this challenge with a focus on Filecoin. Other notable projects include Fluence and Space and Time, which are building artificial intelligence query systems and computing markets.

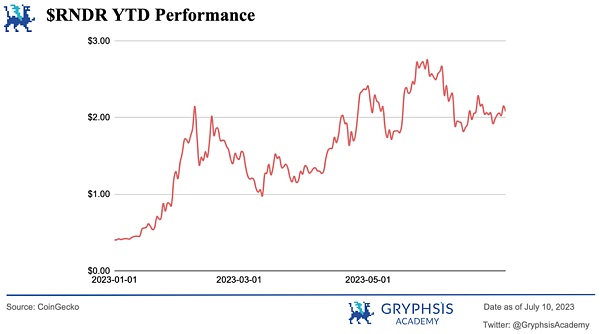

As computing-based products flourish, the demand for computing resources will also grow. A glimpse of this demand is visible in the price trajectory of $RNDR, the token of Render Network, a peer-to-peer GPU computing network for users who need additional computing power. The token is up a staggering 500% year-to-date, reflecting investor expectations of rising demand. As these industries mature and their ecosystems become more complete, the influx of users should significantly increase adoption of decentralized storage.

A Decentralized Physical Infrastructure Network (DePIN) is a blockchain-based network that brings real-world digital infrastructure into the Web3 ecosystem. Key DePIN sectors include storage, compute, content delivery networks (CDNs), and virtual private networks (VPNs). These networks aim to increase efficiency and scalability through cryptoeconomic incentives and blockchain technology.

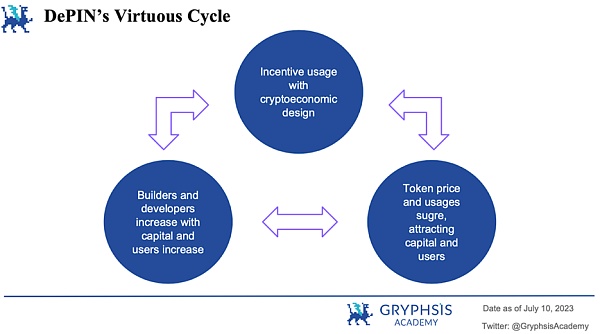

The strength of DePIN lies in its potential to create a virtuous cycle with three key components. First, the protocol uses a token-economic design to incentivize participants, often by tying tokens to real application and network usage. Second, as the economic model solidifies, rising token prices and protocol usage quickly attract attention, drawing in users and capital. Third, the growing capital pool and user base bring more ecosystem builders and developers into the industry, perpetuating the cycle. As a core DePIN sector, storage will be one of the main beneficiaries of DePIN's expansion.

The rapid development of artificial intelligence is expected to catalyze the growth of the crypto ecosystem and accelerate development across many digital asset sectors. Artificial intelligence creates incentives for decentralized storage in two main ways: by stimulating storage demand and by increasing the importance of decentralized physical infrastructure networks (DePIN).

As the number of products based on generative AI grows exponentially, so does the data they generate. The proliferation of data has spurred the demand for storage solutions, thereby driving the growth of the decentralized storage market.

Generative AI has already grown significantly and is expected to sustain this momentum over the long term. According to EnterpriseAppsToday, generative AI will account for 10% of all data generated globally by 2025. One forecast further projects that the generative AI market will grow at a compound annual growth rate (CAGR) of 36.10% to reach $188.62 billion by 2032, indicating its huge potential.

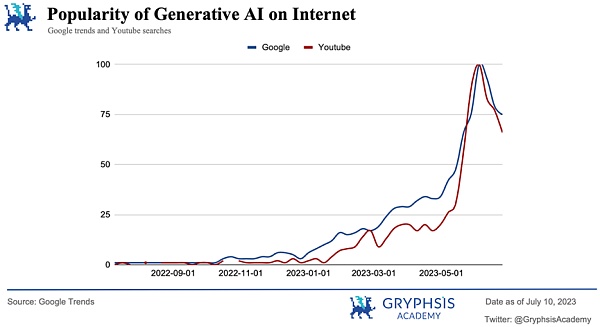

Generative AI has grown in popularity significantly over the past year, as evidenced by Google Trends and YouTube searches. This growth further highlights the positive impact of artificial intelligence on the demand for decentralized storage solutions.

The surge in storage and computing resources required by artificial intelligence highlights the value of DePIN. With the Web 2.0 infrastructure market dominated by a few centralized entities, DePIN is an attractive alternative for users looking for cost-effective infrastructure and services. By democratizing access to resources, DePIN offers significantly lower costs, which drives adoption. As artificial intelligence continues its upward trajectory, its demand will further stimulate DePIN's growth, which in turn helps the decentralized storage industry expand.

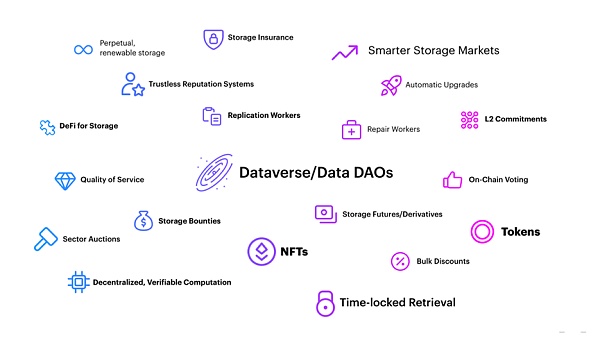

Filecoin Virtual Machine (FVM) not only unleashes the potential of Filecoin itself, but also revolutionizes the entire decentralized storage market. Since Filecoin is the largest decentralized storage provider and captures a large portion of the market, its growth has essentially paralleled the expansion of the industry as a whole. The emergence of FVM transforms Filecoin from a data storage network into a comprehensive decentralized data economy. In addition to enabling permanent storage, FVM integrates DeFi into the ecosystem, resulting in greater revenue opportunities and attracting a larger user base and capital inflow into the industry.
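The text does not explain how FVM enables permanent storage. One commonly described FVM pattern is a contract that keeps renewing storage deals before they expire for as long as it holds funds. The Python sketch below models only that idea. `PerpetualStorage`, `Deal`, the epoch counts, the prices, and the provider ID are all hypothetical and do not reflect real FVM contract code or Filecoin deal parameters.

```python
from dataclasses import dataclass, field


@dataclass
class Deal:
    provider: str
    start_epoch: int
    end_epoch: int  # exclusive


@dataclass
class PerpetualStorage:
    """Toy model: renew a deal shortly before expiry while the balance covers it."""
    provider: str
    balance: int          # hypothetical token units
    price_per_term: int
    term_epochs: int
    renew_window: int     # renew when this many epochs or fewer remain
    deals: list = field(default_factory=list)

    def tick(self, epoch: int) -> None:
        current = self.deals[-1] if self.deals else None
        due = current is None or current.end_epoch - epoch <= self.renew_window
        if due and self.balance >= self.price_per_term:
            # Chain the new deal onto the old one so renewals leave no gap.
            start = epoch if current is None else max(epoch, current.end_epoch)
            self.deals.append(Deal(self.provider, start, start + self.term_epochs))
            self.balance -= self.price_per_term

    def covered(self, epoch: int) -> bool:
        return any(d.start_epoch <= epoch < d.end_epoch for d in self.deals)


store = PerpetualStorage(provider="f0-hypothetical", balance=30,
                         price_per_term=10, term_epochs=100, renew_window=10)
for epoch in range(400):
    store.tick(epoch)
print(len(store.deals), store.balance, store.covered(250), store.covered(350))
# 3 0 True False
```

Here the balance pays for three 100-epoch terms, so the data stays covered through epoch 299 and then lapses. "Permanent" in this model means "for as long as someone keeps the contract funded".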

As of June 22, FVM’s 100th day online, more than 1,100 unique smart contracts supporting dApps have been deployed on the Filecoin network. Additionally, more than 80,000 wallets have been created to enable interaction with these FVM-powered dApps. The total balance of FVM accounts and contracts has exceeded 2.8 million FIL.

Currently, the protocols within the FVM ecosystem are all related to DeFi, enhancing the utility of $FIL. As this upward trend continues, we expect a large number of applications to emerge, which may trigger another wave of growth in the storage market.

In addition, we expect other storage networks to introduce virtual machine mechanisms similar to FVM, sparking ecosystem booms of their own. For example, Crust Network officially launched EVM storage on July 17, combining the Crust mainnet, Polkadot, and EVM contracts into a new Crust protocol that seamlessly provides storage services to any EVM public chain.

Games and social applications alike need decentralized database services that resist censorship and support high-speed reads and writes. Decentralized databases can enhance current Web3 applications and enable new applications and experiences across different areas.

Decentralized social: Storing large amounts of social data in decentralized databases gives users greater control over their data, allowing them to move between platforms and unlock content monetization opportunities.

Gaming: Managing and storing player data, in-game assets, user settings, and other game-related information is an important part of blockchain-based games. A decentralized database ensures that this data can be seamlessly exchanged and combined by other applications and games. A hot topic in GameFi right now is full-chain gaming, which means deploying all core modules, including static resource storage, game logic computation, and asset management, on the blockchain. A decentralized database with high-speed read and write capabilities is essential infrastructure for realizing this vision.

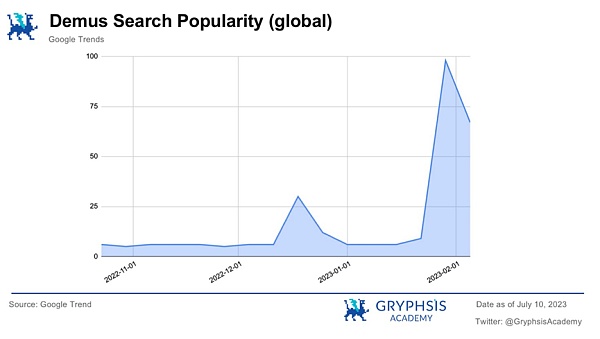

Games and social applications have the largest numbers of Internet users, and they are also the sectors most likely to produce killer applications, such as Damus, which took off in February this year. We believe the explosion of Web3 games and social applications will also create huge demand for decentralized databases.

Apart from market prospects and technology, public awareness is a key component driving the growth of the decentralized storage market. A comparison of centralized and decentralized storage clearly highlights the many advantages of the latter.

However, attracting more users depends on more people becoming aware of these benefits. This can be a lengthy process that requires the entire industry to work together.

From content output to brand exposure marketing, industry practitioners must work hard to convey how decentralized storage can revolutionize the cloud storage field. This effort complements other growth factors, amplifying the impact of market expansion and technological evolution.

Overall, decentralized storage is a technology infrastructure industry that faces major challenges and has a long investment cycle, but it also has huge growth potential.

The long investment cycle is mainly due to the long iteration cycle of distributed technology itself. Project developers need to find a delicate balance between decentralization and efficiency. Providing efficient, highly available data storage and retrieval services while ensuring data privacy and ownership will undoubtedly require extensive exploration. Even IPFS often experiences unstable access, and other projects like Storj are not decentralized enough.

The market's potential for growth is also highly anticipated. In 2012, AWS S3 alone stored 1 trillion objects. Assuming an object is between 10 and 100 MB, AWS S3 alone would use 10,000 to 100,000 PB of storage space.
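The S3 estimate is simple multiplication, and it can be checked in Python. The sketch below assumes decimal units (1 MB = 10^6 bytes, 1 PB = 10^15 bytes), which the text leaves implicit, and uses integer arithmetic so the result is exact.

```python
BYTES_PER_MB = 10**6      # decimal megabyte (assumed)
BYTES_PER_PB = 10**15     # decimal petabyte (assumed)
S3_OBJECTS_2012 = 10**12  # 1 trillion objects, from the text


def total_storage_pb(objects: int, avg_object_mb: int) -> int:
    """Total storage in PB for `objects` items of `avg_object_mb` MB each."""
    return objects * avg_object_mb * BYTES_PER_MB // BYTES_PER_PB


for size_mb in (10, 100):
    print(f"{size_mb} MB per object -> {total_storage_pb(S3_OBJECTS_2012, size_mb):,} PB")
# 10 MB per object -> 10,000 PB
# 100 MB per object -> 100,000 PB
```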

As of the end of 2022, the storage utilization rate of Filecoin, the largest provider, was only about 3%, according to Messari data. This means that only about 600 petabytes of storage space on Filecoin is actively utilized. Obviously, the decentralized storage market still has a lot of room for development.
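Working backward from the two Messari figures above gives Filecoin's implied total capacity. The Python sketch below treats 3% and 600 PB as exact, although both are approximations in the source, and takes the utilization rate as an integer percentage to avoid floating-point noise.

```python
def implied_capacity_pb(utilized_pb: int, utilization_percent: int) -> float:
    """Total capacity implied by the utilized amount and the utilization rate."""
    if utilization_percent <= 0:
        raise ValueError("utilization_percent must be positive")
    return utilized_pb * 100 / utilization_percent


total = implied_capacity_pb(600, 3)
idle = total - 600
print(f"Implied total: {total:,.0f} PB, idle: {idle:,.0f} PB")
# Implied total: 20,000 PB, idle: 19,400 PB
```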

With the rise of artificial intelligence and DePIN, we maintain a bright outlook for the future of decentralized storage, as several key growth drivers will push the market to expand.

