Author: Lu Shiming

The emergence of Manus has sent a double shock through the technology and capital markets. For a time, AI Agent concept stocks soared across the board, and technology giants such as Alibaba, Google, and Microsoft rushed to announce intelligent agent research and development plans...

Behind this craze is the paradigm shift of AI technology from "passive response" to "active execution".

Although market opinion is mixed, there is no denying that Manus is a breakthrough: it is the first to verify the commercial feasibility of a general-purpose AI Agent in complex scenarios.

Traditional large language models can generate text, but they struggle to carry a task through to completion in a closed loop. Manus turns the cognitive ability of large AI models into a productivity tool through its "planning-verification-execution" architecture.
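The article does not describe how Manus implements this architecture internally. Purely as an illustration, the generic loop below plans a list of steps, executes each one, and verifies the result before moving on, retrying a bounded number of times. The `Step` and `Agent` classes, the `max_retries` parameter, and the stub tool function are hypothetical stand-ins for LLM and tool calls, not Manus's API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    description: str
    action: Callable[[], str]     # hypothetical tool call that produces a result
    check: Callable[[str], bool]  # hypothetical verifier for that result


@dataclass
class Agent:
    max_retries: int = 2          # extra attempts allowed after a failed check
    log: list[str] = field(default_factory=list)

    def plan(self, goal: str, steps: list[Step]) -> list[Step]:
        # A real agent would ask an LLM to decompose the goal; here the plan is given.
        self.log.append(f"plan: {goal} -> {len(steps)} steps")
        return steps

    def run(self, goal: str, steps: list[Step]) -> list[str]:
        results = []
        for step in self.plan(goal, steps):
            for attempt in range(1, self.max_retries + 2):
                output = step.action()        # execute
                if step.check(output):        # verify before moving on
                    self.log.append(f"ok: {step.description} (attempt {attempt})")
                    results.append(output)
                    break
                self.log.append(f"retry: {step.description} (attempt {attempt})")
            else:
                # for/else: reached only when no attempt passed verification
                raise RuntimeError(f"step failed verification: {step.description}")
        return results


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky_fetch() -> str:
        # Simulates a data interface that fails once, then recovers.
        calls["n"] += 1
        return "" if calls["n"] == 1 else "price=42"

    agent = Agent()
    print(agent.run("summarize price", [Step("fetch price", flaky_fetch, lambda r: r.startswith("price="))]))
    print(agent.log)
```

The verification step is what distinguishes this from a plain text generator: a failed check triggers another attempt instead of passing a bad result downstream.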

According to several authoritative reports, including McKinsey's, the AI Agent market is growing explosively, driven by increasingly diverse demand. The global AI Agent market was worth approximately US$5.1 billion in 2024 and is expected to reach US$47.1 billion by 2030, a compound annual growth rate of 44.8%.
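The quoted growth rate can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1, over the six years from 2024 to 2030. A minimal check using only the figures above:

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


# Market size in US$ billions, as quoted: 5.1 (2024) -> 47.1 (2030).
rate = cagr(5.1, 47.1, 2030 - 2024)
print(f"{rate:.1%}")  # prints 44.8%
```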

However, this "intelligent agent wave" is not a smooth road. The collision of technical bottlenecks and business ambitions makes the competition of AI Agents both imaginative and risky.

Breakthrough battle

In essence, an AI Agent is a digital workforce that follows a human-like thinking paradigm.

If chatbots are still at the "dialogue" stage, Agents have already begun to "act". Put simply, an Agent is a smarter, more autonomous AI application that can not only answer questions but also perform tasks and complete transactions.

They can be applied to various scenarios, such as customer service, financial analysis, software development, etc., greatly improving productivity and efficiency.

With a large language model as its "brain", an AI Agent can understand not only the surface meaning of an instruction but also the implicit need behind it. For example, if a user asks to "find a hotel with good value for money", Manus will infer from context, such as the season and local events, whether the user is "budget-sensitive" or "experience-first".

As the multimodal capabilities of large models keep advancing, especially through iterative upgrades to multimodal fusion, AI Agents can be expected to interpret and respond to user needs more accurately and gradually acquire human-like audio-visual perception and interaction.

This will enable AI Agent to be applied to a wider range of fields, such as medical diagnosis, autonomous driving, and smart security.

Beyond the continuous improvement of individual agents, future AI Agents may also move past standalone operation and restructure complex task processing and decision-making chains through collaboration.

In such a multi-agent system (MAS), a role-assignment mechanism lets agents work like a human team with a specialized division of labor.

For example, in the scenario of software development, each AI Agent has its own expertise. Some are good at programming, some are good at design, and some are specialized in quality inspection. As long as they can collaborate well, they can complete a high-quality software project together.
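The division of labor described above can be sketched as a simple pipeline in which each role-specific agent reads and extends a shared project state. This is a toy illustration: the `RoleAgent` class and the `design`, `program`, and `quality_check` functions are hypothetical stand-ins for what would, in a real system, be LLM calls with role-specific prompts.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RoleAgent:
    role: str
    work: Callable[[dict], dict]  # hypothetical: a role-prompted LLM call in practice


def design(state: dict) -> dict:
    return {**state, "spec": f"UI spec for {state['task']}"}


def program(state: dict) -> dict:
    return {**state, "code": f"# code implementing {state['spec']}"}


def quality_check(state: dict) -> dict:
    # Passes only if both artifacts exist and the code traces back to the spec.
    passed = "spec" in state and "code" in state and state["spec"] in state["code"]
    return {**state, "qa_passed": passed}


def run_team(task: str, team: list[RoleAgent]) -> dict:
    state = {"task": task}
    for agent in team:
        state = agent.work(state)  # each specialist hands its output to the next
    return state


team = [RoleAgent("designer", design), RoleAgent("programmer", program), RoleAgent("qa", quality_check)]
result = run_team("login page", team)
print(result["qa_passed"])  # prints True
```

Keeping the state as an immutable-style dict that each role copies and extends makes it easy to see which specialist contributed which artifact.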

In addition, a MAS can simulate the human decision-making process. Just as people consult others when they run into problems, multiple agents can simulate collective decision-making and give users better information support, especially in complex situations.

For example, in an emergency, these AI agents can help users simulate a range of possible scenarios and provide useful information promptly, so that users can make better decisions faster.
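One very simple way to model collective decision-making is a majority vote over the recommendations of several specialized agents, reporting how strong the consensus is. The sketch below assumes that form; the agent names and options are hypothetical, and real systems may use richer aggregation schemes.

```python
from collections import Counter


def collective_decision(opinions: dict[str, str]) -> tuple[str, float]:
    """Majority vote over agents' recommendations.

    Returns the most common recommendation and the share of agents backing it.
    On a tie, Counter.most_common keeps the option that was seen first.
    """
    if not opinions:
        raise ValueError("need at least one agent opinion")
    counts = Counter(opinions.values())
    choice, votes = counts.most_common(1)[0]
    return choice, votes / len(opinions)


# Hypothetical emergency scenario with three specialist agents.
opinions = {
    "risk_agent": "evacuate",
    "logistics_agent": "evacuate",
    "medical_agent": "shelter in place",
}
choice, share = collective_decision(opinions)
print(choice, f"{share:.0%}")  # prints evacuate 67%
```

Returning the vote share alongside the choice lets the user see when the agents disagree, which matters most in the complex situations the text describes.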

It can be said that this "human-like" intelligent paradigm is reshaping the cost structure of life and work. With Manus, AI Agents appear to have moved from the proof-of-concept stage to the threshold of large-scale deployment.

The race among giants

The craze of AI Agent is not accidental, but an inevitable product of technological evolution.

As early as the 2024 Sequoia AI Summit, Professor Andrew Ng predicted that "AI Agent is the next key stage in the development of AI". Indeed, many technology giants made moves into AI Agents during 2024.

For example, in December 2024 Google released its Gemini 2.0 series of large models and introduced several agent applications, such as Project Astra. In October and November 2024, Microsoft released multiple AI agents for sales, operations, and other scenarios, and extended its Copilot Studio platform so that users can build autonomous agents.

Entering 2025, the popularity of Manus fully ignited market sentiment.

Overseas, OpenAI's recently reported commercialization plan further confirms the B2B potential of AI Agents. Its "PhD-level" Agent targets scientific research and software development, with fees of up to US$20,000 per month, covering needs from basic analysis to complex tasks.

In China, Alibaba's Qwen (Tongyi Qianwen) QwQ-32B model also integrates agent-related capabilities, enabling it to think critically while using tools and to adjust its reasoning based on environmental feedback.

Meanwhile, new products such as OpenManus and OWL have emerged in the open-source community. Reproductions of and innovations on Manus are expected to drive a flourishing of Agent products.

The enthusiasm of the capital market further confirms this trend.

On the day Manus was released, more than 150 AI Agent concept stocks on the A-share market hit their daily limit-up, and stocks such as Cube Holdings and Coolte Intelligence rose by more than 20%.

Source: Oriental Fortune

In addition, after Manus's release, brokerages quickly put out slide decks and analyst roadshows. By incomplete count, dozens of brokerage research institutes, including CICC, Huatai, China Merchants, and Zhongtai, held roadshows, with some analysts running several. The roadshows covered everything from technical principles and AI applications to beneficiary sectors, deployment scenarios, and industry-chain analysis.

Of course, there are also dissenting voices amid the craze. Many industry insiders believe Manus is only a rudimentary AI Agent application and that the market has overreacted.

That view has merit. AI will further narrow information gaps, and much of the work of collecting information and organizing data can be handed over to AI, but there is clearly still a long way to go before it can generate real investment decisions.

The biggest challenge is that the specter of AI hallucination still lingers.

Technical bottleneck

In the AI Agent race, whoever controls the entry point wins.

Vendors with more user traffic are expected to achieve a virtuous "traffic, data, user experience" cycle. And as open-source models improve and narrow the technical gap between large and small companies, AI product engineering capability may become what widens the gap in user experience.

But despite its great potential, the AI Agent boom still faces multiple obstacles. From business models to technical bottlenecks, from missing regulations to user awareness, every link is testing the industry's patience.

The first obstacle is that existing technology cannot yet effectively solve the problem of AI hallucination.

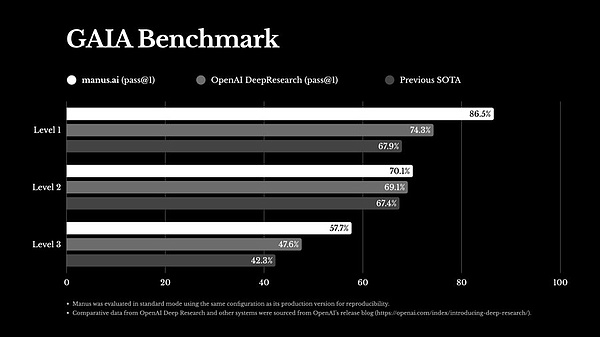

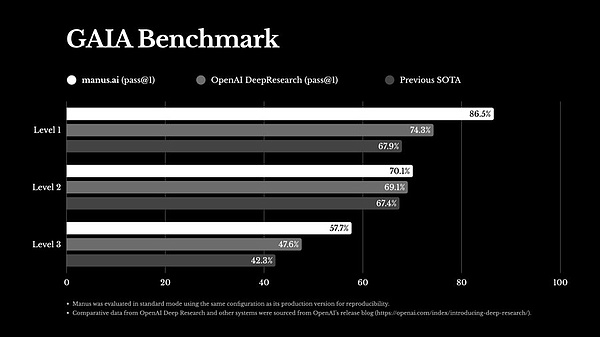

Although the much-hyped Manus achieved excellent results on the GAIA benchmark, it still behaves unstably in real-world use.

GAIA benchmark ranking. Source: Manus AI (X platform)

According to user feedback, Manus occasionally fails to complete complex tasks or produces inaccurate results. When analyzing stock data, for instance, its results can drift because of a temporary failure in a data interface or a slight change in data format.

Take OpenAI's GPT-4.5 as an example. It is arguably the strongest large language model available today, yet on the SimpleQA benchmark it scored an accuracy of 62.5% with a hallucination rate of 37.1%. Although that is much better than models such as GPT-4o, OpenAI o1, and o3-mini, the hallucination rate is still very high.

In high-risk fields such as finance and healthcare, any error caused by such hallucinations may trigger systemic risk.

Suppose a medical diagnosis agent misjudges rare-disease cases with a 3% probability. Applied to a user base in the tens of millions, that would mean 300,000 or more potential misdiagnoses (3% of 10 million alone is 300,000).
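The estimate above is a plain expected-value calculation: users multiplied by the error rate. The sketch assumes, as the example implicitly does, that each user contributes one rare-disease case, and takes 10 million as the lower end of "tens of millions"; `expected_misdiagnoses` is a hypothetical helper.

```python
def expected_misdiagnoses(users: int, error_rate_percent: int) -> int:
    # Assumes one case per user; integer math avoids float rounding for whole percents.
    return users * error_rate_percent // 100


for users in (10_000_000, 30_000_000):
    print(f"{users:,} users -> {expected_misdiagnoses(users, 3):,} potential misdiagnoses")
# 10,000,000 users -> 300,000 potential misdiagnoses
# 30,000,000 users -> 900,000 potential misdiagnoses
```

The count scales linearly with the user base, which is why even a small error rate becomes a large absolute number at national scale.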

Beyond hallucination, there is the tension between data silos and general-purpose capability.

The effectiveness of an AI Agent depends heavily on scenario-specific data. Financial risk control needs real-time transaction data, for example, while medical diagnosis relies on patients' medical-history databases. This data fragmentation makes it hard for general-purpose Agents to transfer across domains.

Finally, ethics and regulation are lagging behind. Autonomous decision-making by AI Agents raises ethical issues such as privacy leakage and attribution of responsibility, for instance when an agent accesses user health data or when an autonomous vehicle causes an accident, and the global regulatory framework is not yet mature.

Clearly, breaking through with AI Agents requires coordinated progress on three fronts: technology, ecosystem, and regulation. Whoever first overcomes the technical bottlenecks and builds a compliant ecosystem will undoubtedly win the "Normandy landing" of the agent era.

Weatherly
