“Everyone ignores that MegaETH actually almost eliminates the EVM’s gas limit” -@0x_ultra

This has drawn some attention on the X timeline, so let’s break down how it works and what its impact is.

Typical Blockchain Network

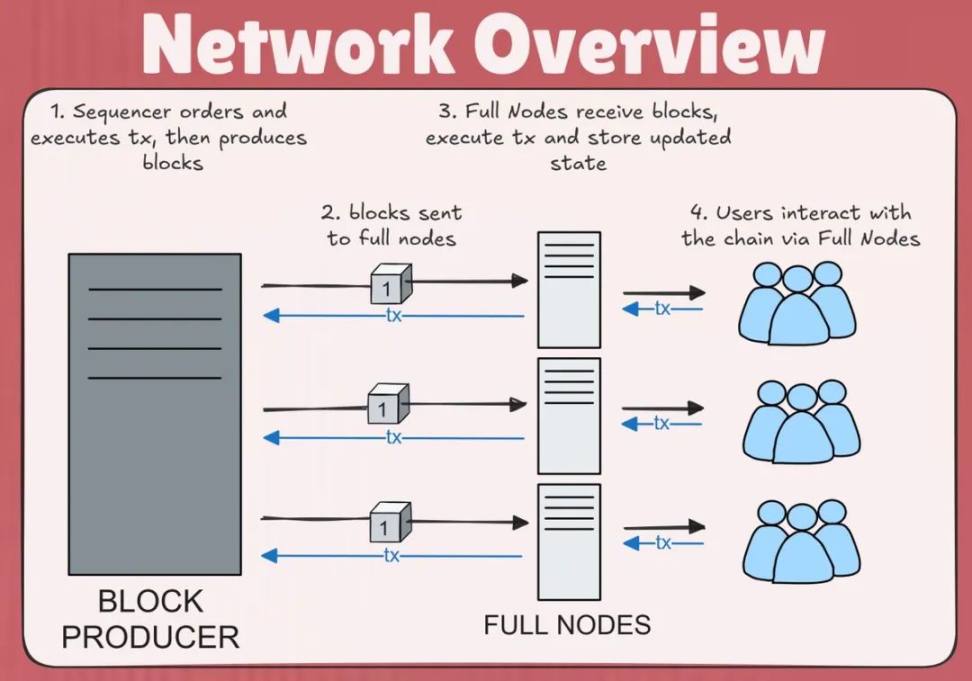

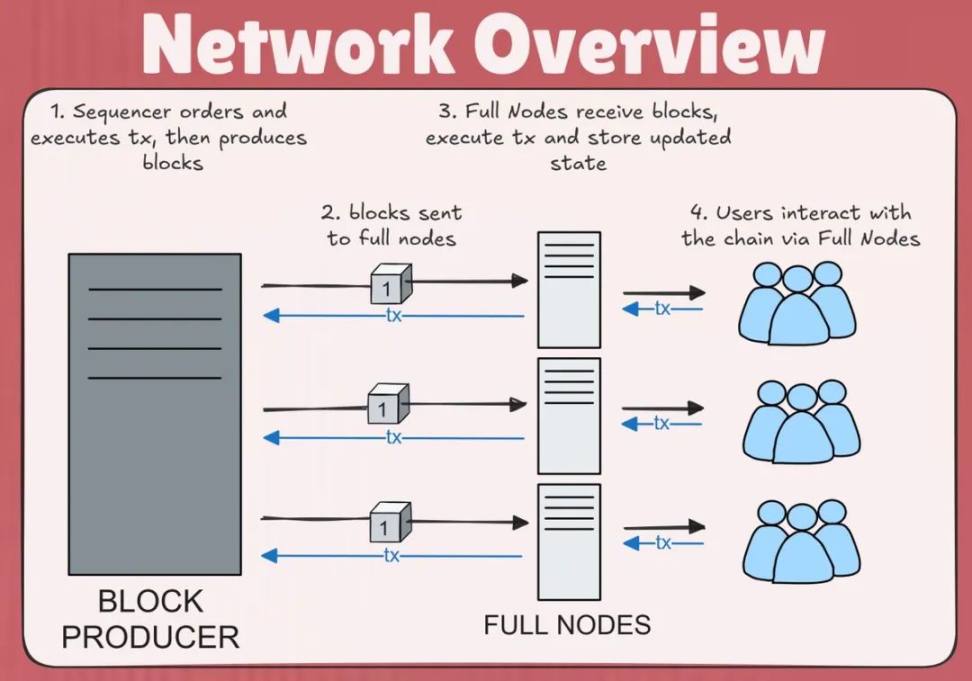

First, an overview of what a traditional network consists of so that we can highlight the differences.

I'll simplify the explanation with an image (if you are already familiar with this, you can skip this part):

Common roles in a blockchain network: block producers, node networks, and users.

Now let's analyze what these roles represent.

Common Network Roles

Block producers: the entities responsible for creating blocks that can be appended to the chain.

On an L1, this is a diverse, distributed set of validators that are randomly selected to hold the role, while on an L2 a common construction gives the role to a single machine: the sequencer.

The key difference between the two is that sequencers typically have much larger hardware requirements and either never relinquish the role or do so very rarely, whereas validators rotate constantly (e.g., Solana’s leaders rotate after ~1.2 seconds).

Full nodes: these machines receive the blocks produced by block producers (whether validators or sequencers), execute the blocks themselves to verify that they are valid against the existing chain history, and then update their local "truth" to stay in sync with the chain.

Once in sync, they can provide this information to application users, developers who want to obtain chain information, etc. This is the "network" of the blockchain.

It is important to note that your network is only as fast as its slowest entity.

This means that if the entities providing chain information cannot keep up with the blocks produced by the validators/sequencers and verify their correctness, then your network will operate at that slower speed.
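The slowest-entity point can be written as a single minimum. This is a toy sketch: the function name and the rates are made up for illustration and do not describe any real network.

```python
def effective_blocks_per_second(producer_rate: float, node_rates: list[float]) -> float:
    """The usable speed of the network is capped by its slowest participant."""
    return min([producer_rate, *node_rates])


# Hypothetical rates: the producer emits 10 blocks/s, but one full node
# can only verify 2 blocks/s, so users served by it see 2 blocks/s.
rate = effective_blocks_per_second(10.0, [8.0, 2.0, 5.0])
print(rate)
```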

Users: that's you. When you read information from your application or submit transactions to the chain, it's all routed through full nodes that are in sync with the block producers. This one is pretty self-explanatory.

Hardware Protocol

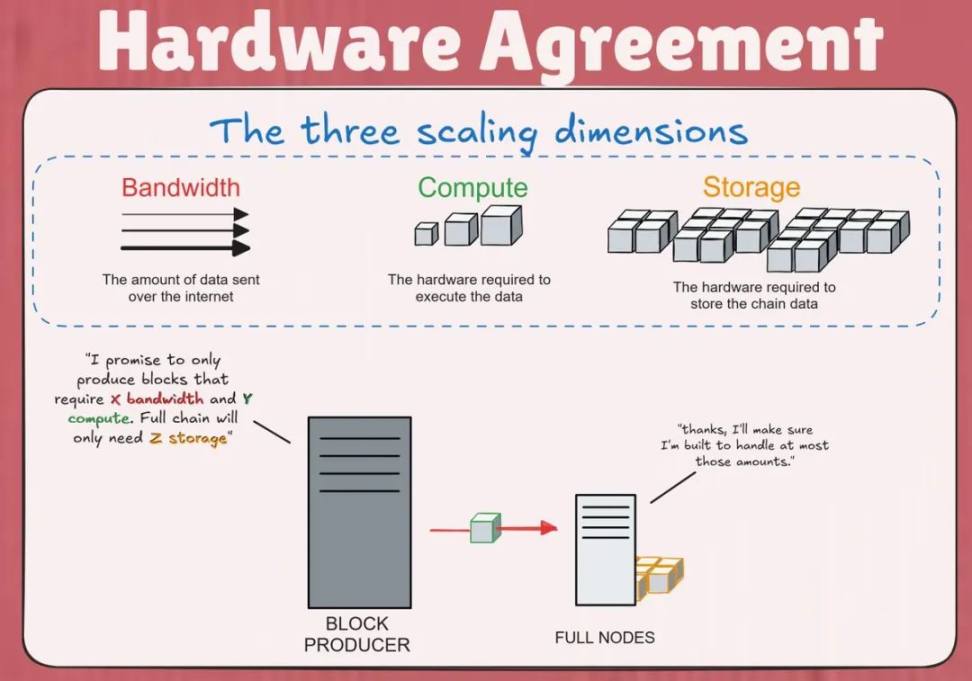

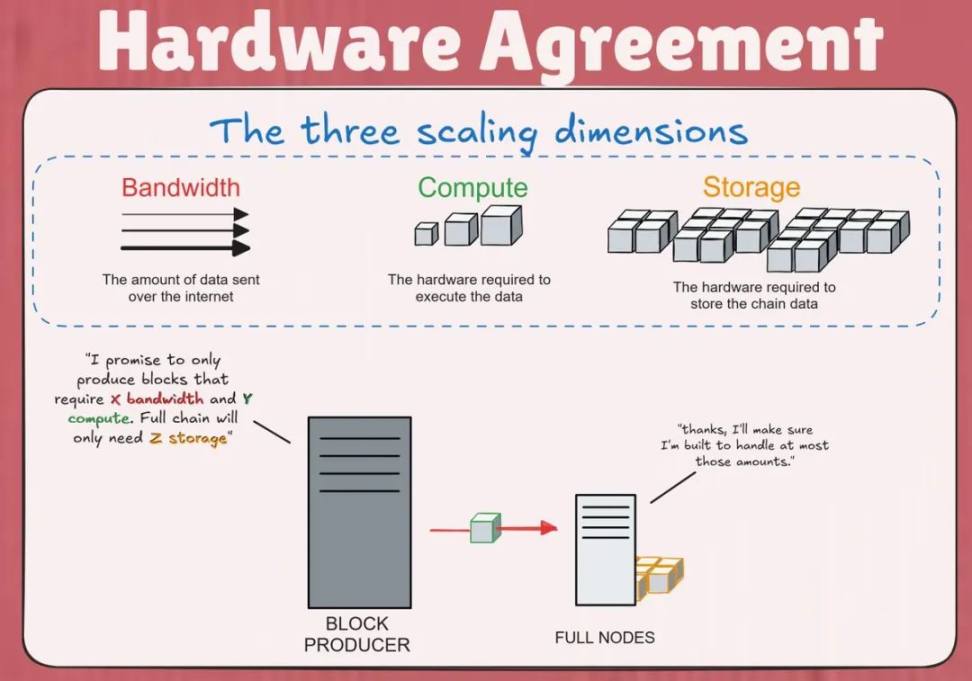

So, these are the parties. Great. But what does this have to do with gas limits? To understand this, we have to talk about what gas, along with the other two scaling dimensions, represents in a distributed network.

In short, the gas limit caps the computational complexity of a block, and it is a promise made by the network to its nodes: you only need X amount of hardware to process the blocks being produced without falling behind. This is essentially a method of throttling.
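One way to read that promise is as a worst-case execution rate that a node has to sustain. Here is a minimal sketch, assuming a fixed gas limit and block time; the class and function names are hypothetical, and the 30M gas / 12 s figures are only illustrative inputs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChainParams:
    gas_limit: int       # maximum gas allowed in one block
    block_time_s: float  # seconds between blocks


def required_gas_per_second(chain: ChainParams) -> float:
    """Worst-case execution rate a node must sustain to keep up."""
    return chain.gas_limit / chain.block_time_s


def can_keep_up(node_gas_per_second: float, chain: ChainParams) -> bool:
    """True if the node executes at least as fast as blocks can demand."""
    return node_gas_per_second >= required_gas_per_second(chain)


# Illustrative numbers only: 30M gas per block, one block every 12 seconds.
example = ChainParams(gas_limit=30_000_000, block_time_s=12.0)
print(required_gas_per_second(example))  # 2500000.0
```

Raising the gas limit or shortening the block time raises this required rate, which is why the limit works as a throttle on node hardware.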

It's not the only dimension that determines a chain's throughput, though.

Two other factors are:

Bandwidth - the upload/download speed of a node, enabling it to communicate with the rest of the network

Storage - the hardware requirements for a node to store chain information. The more history is processed, the more information needs to be stored.

Along with computation, these constitute the implicit “hardware protocol” of the network:

Three-dimensional scaling that affects network throughput

In the traditional blockchain setup, it is common for a single machine (a full node) to run in isolation and handle the maximum possible load in all three dimensions.

A full node must have:

Bandwidth to download/upload all blocks

Computational power to re-execute all transactions in all blocks

Storage capacity to store the entire chain state
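The three requirements above can be sketched as a headroom check: for each dimension, divide what the node has by what the chain demands, and the smallest ratio is the dimension that gives out first. The field names, units, and numbers below are assumptions for illustration, not real node specs.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Resources:
    bandwidth_mbps: float
    compute_mgas_per_s: float
    storage_gb: float


def headroom(node: Resources, required: Resources) -> dict[str, float]:
    """Ratio of available to required capacity per dimension (>= 1 means OK)."""
    need = asdict(required)
    return {name: have / need[name] for name, have in asdict(node).items()}


def tightest_dimension(node: Resources, required: Resources) -> str:
    """The dimension with the least headroom, i.e. the first bottleneck."""
    ratios = headroom(node, required)
    return min(ratios, key=ratios.get)


# Hypothetical node and chain requirements.
node = Resources(bandwidth_mbps=1000.0, compute_mgas_per_s=100.0, storage_gb=2000.0)
required = Resources(bandwidth_mbps=100.0, compute_mgas_per_s=80.0, storage_gb=1000.0)
print(tightest_dimension(node, required))  # compute_mgas_per_s
```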

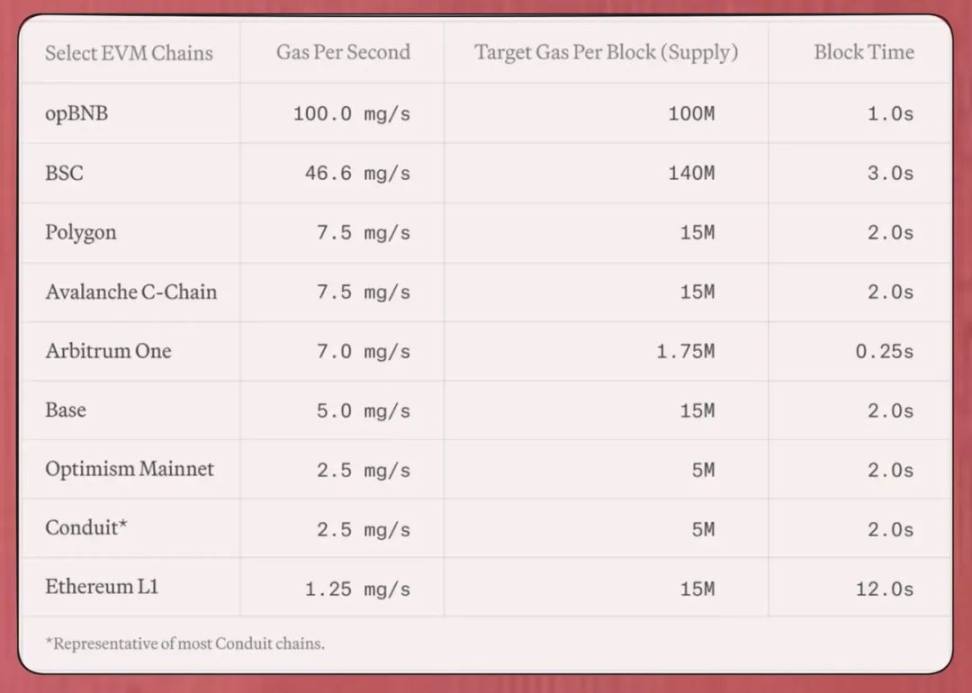

Of the above, compute is generally the most limiting in the average EVM network, which is why gas limits are roughly similar across well-distributed networks:

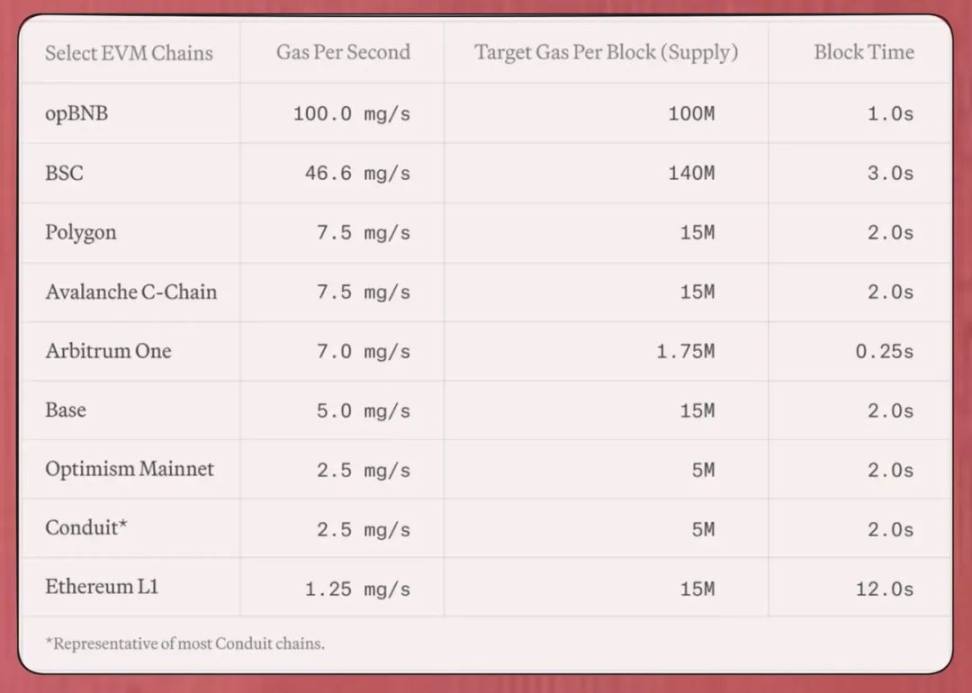

Table: Comparison of EVM on-chain gas parameters in 2024 (Source: Paradigm, https://www.paradigm.xyz/2024/04/reth-perf)

So the problem is identified as the computational power required by a single machine to keep up with the block producers on the chain.

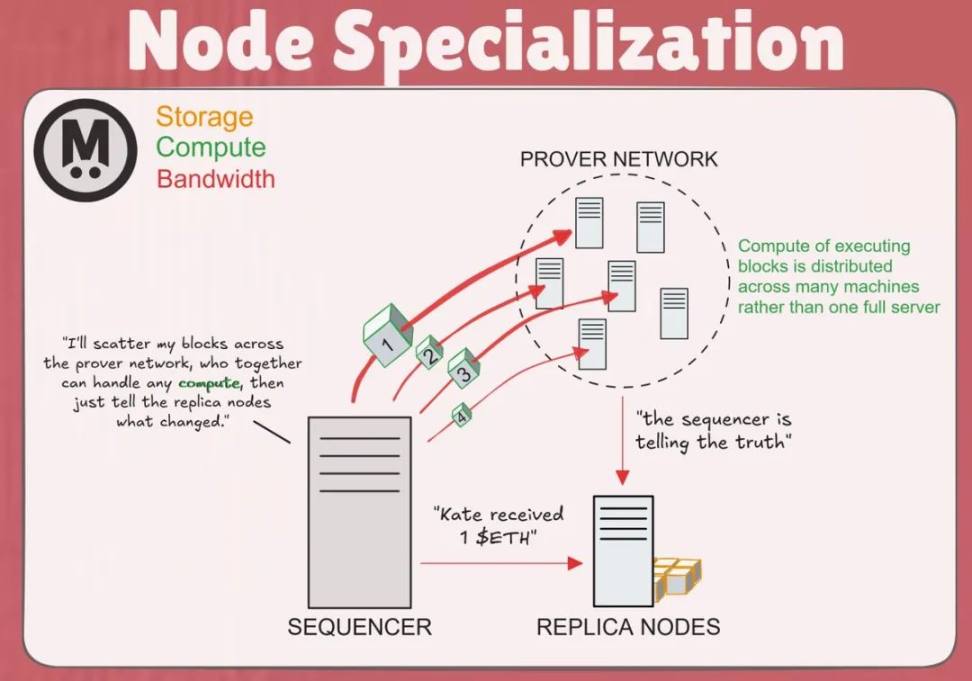

How to solve this problem? Node specialization.

Node specialization: MEGAETH's answer

What the hell is node specialization?

It just means that we take the approach of splitting this traditional single entity (full node) into a group of specialized machines that serve specific functions.

Then: full nodes must handle the maximum bandwidth, computation, and storage load generated by block producers.

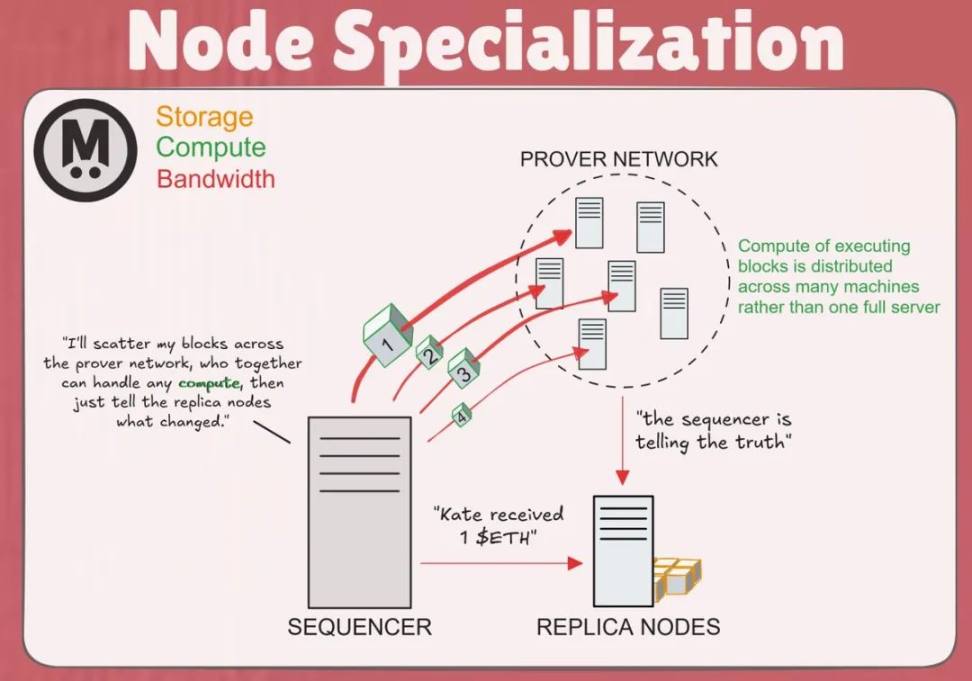

Now: the full node is replaced with a replica node, which receives only state differences instead of full blocks. Full blocks are distributed across a network of prover nodes, which execute them independently and then report proofs that each block is valid to the replica nodes.

Visualization:

Visualization of the proof network and replica node relationship
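The difference between re-executing a block and applying a state difference can be shown with a toy key-value state. This is only a sketch under simplifying assumptions: balances are plain integers, transactions are simple transfers, key deletion is ignored, and the proof that a replica would wait for before trusting a diff is not modeled. All function names are hypothetical.

```python
State = dict[str, int]
Transfer = tuple[str, str, int]  # (sender, receiver, amount)


def execute_block(state: State, txs: list[Transfer]) -> State:
    """Full-node path: re-execute every transaction in the block."""
    new_state = dict(state)
    for sender, receiver, amount in txs:
        if new_state.get(sender, 0) < amount:
            continue  # toy rule: skip transfers the sender cannot afford
        new_state[sender] = new_state.get(sender, 0) - amount
        new_state[receiver] = new_state.get(receiver, 0) + amount
    return new_state


def state_diff(before: State, after: State) -> State:
    """Only the keys whose values changed, with their new values."""
    return {key: value for key, value in after.items() if before.get(key) != value}


def apply_diff(state: State, diff: State) -> State:
    """Replica-node path: overwrite changed keys without re-executing anything."""
    return {**state, **diff}


genesis = {"alice": 10, "bob": 0}
block = [("alice", "bob", 3), ("bob", "carol", 1)]

executed = execute_block(genesis, block)           # work done by an executing node
diff = state_diff(genesis, executed)               # what gets shipped to replicas
assert apply_diff(genesis, diff) == executed       # replica reaches the same state
```

The replica's work scales with the number of changed keys rather than with how much computation the transactions required, which is the point of the split.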

The impact of the above is:

Since computation (i.e. transaction complexity) is no longer processed by a single entity for each block, but is instead spread across a set of machines in the proof network, it is no longer the most pressing limiting dimension for scaling, which virtually eliminates it as a constraint.

This shifts the problem to bandwidth and storage, with storage size being our current focus due to state growth. To address this, we are iterating on a pricing model based on the number of key-value pairs (KVs) updated rather than on transaction complexity (gas).

Splitting a single machine into a cluster of machines injects some trust assumptions into this particular setup.

On this last point, it’s important to note that MegaETH will also offer a full node option for those who wish to validate 100% of the chain state themselves.
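To make the pricing idea above concrete, here is a purely hypothetical comparison. The article only says a KV-based model is being iterated on, so the linear formula, the names, and every number below are assumptions, not MegaETH's actual pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TxProfile:
    gas_used: int     # computational work performed
    kvs_updated: int  # distinct storage entries written


def gas_fee(tx: TxProfile, gas_price: int) -> int:
    """Classic model: the fee grows with computation."""
    return tx.gas_used * gas_price


def kv_fee(tx: TxProfile, price_per_kv: int) -> int:
    """Assumed model: the fee grows with the number of KVs updated."""
    return tx.kvs_updated * price_per_kv


# Hypothetical transactions: one is compute-heavy, one is storage-heavy.
heavy_compute = TxProfile(gas_used=5_000_000, kvs_updated=1)
heavy_storage = TxProfile(gas_used=100_000, kvs_updated=200)

for tx in (heavy_compute, heavy_storage):
    print(gas_fee(tx, gas_price=1), kv_fee(tx, price_per_kv=10))
```

Under the assumed KV model the compute-heavy transaction becomes the cheap one, because it barely grows the state, while the storage-heavy one pays for the state growth it causes.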

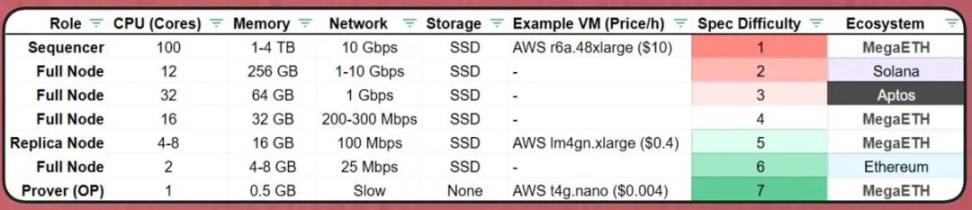

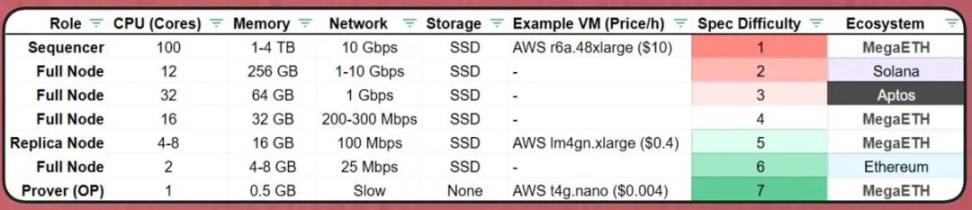

Latest node spec provided by MegaETH

Great, compute/gas limits are gone — what does this mean for me?

Impact of no gas limit

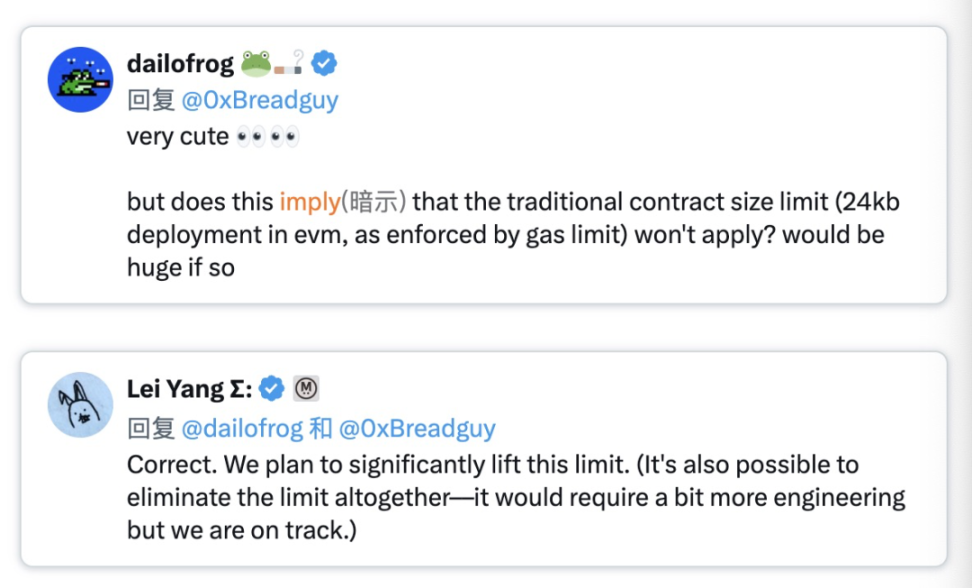

At the highest level, this simply means "people can do more complex things on-chain". Today, the gas limit often manifests itself as strict size limits on contracts and transactions.

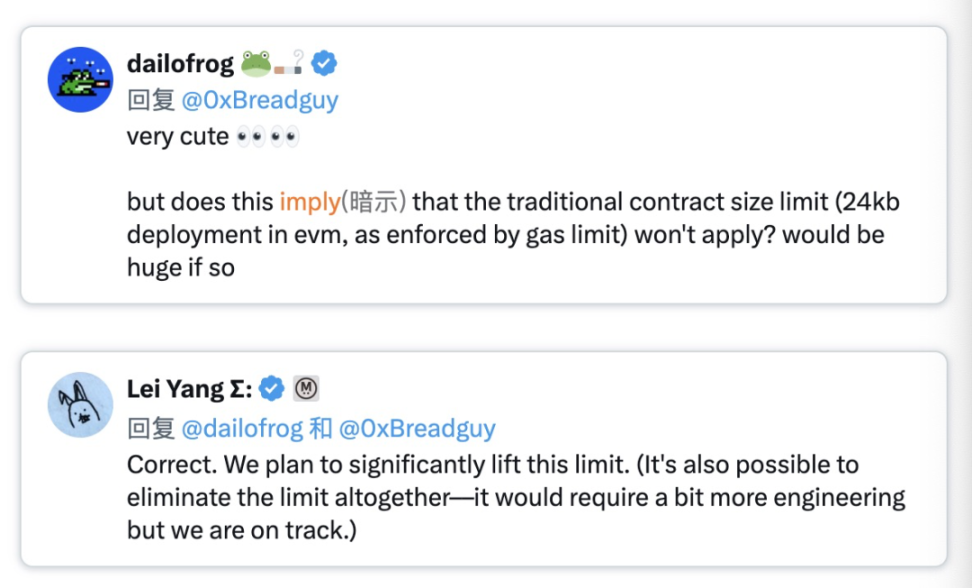

@yangl1996's direct answer to @dailofrog (an avid on-chain artist):

In addition, there are some example categories:

Complex on-chain calculations

Running machine learning models directly in smart contracts

Real-time price calculations

Full sort of large arrays without loop restrictions

Graph algorithms that can traverse entire networks/relationships

Storage and state management

Maintain larger in-contract data structures

Keep more historical data accessible in contract storage

Process batches of operations in a single transaction

Protocol design

Real-time automated market makers with complex formulas

Ultimately, this is about on-chain creativity. It’s a mindset shift away from scarcity, gas optimization, and contract optimization toward an EVM paradigm of abundance.

We’ll see how teams ultimately leverage this, but I think it’ll be something the ecosystem quietly celebrates for a long time.

Kikyo
