Author: Doug Petkanics, Livepeer CEO; Translation: 0xxz@金财经

Introduction

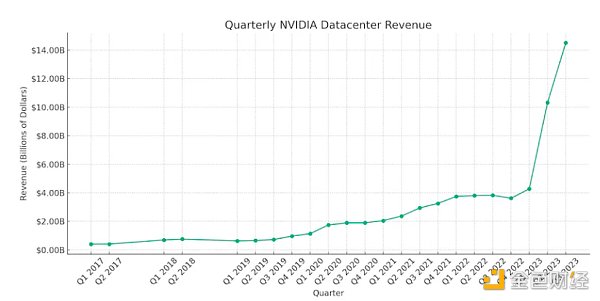

When people discover that the Livepeer network has thousands of GPUs actively transcoding millions of minutes of video per week, one of the most common questions is whether these GPUs can be used to perform other types of computation. In particular, with the rise of artificial intelligence in 2023 and the related increase in demand for GPUs (the hardware used for AI training and inference), one would naturally think that the Livepeer network could use its computing power to move into AI infrastructure, a market worth billions of dollars. NVidia's data center business, which provides GPUs for AI computing, reported revenue of $14 billion last quarter alone, up from $4 billion a year earlier.

Source: NVidia Quarterly Report via @Thomas_Woodside on X

Those who make these assumptions are correct: the Livepeer network can certainly be used by those looking for disruptively cost-effective AI processing. With the growth in Livepeer video usage through Livepeer Studio in recent months and the launch of the new community-governed Livepeer treasury laying the groundwork, now is the time to bring AI video computing capabilities to Livepeer.

The following sections of this article will explain how to introduce artificial intelligence video computing on the Livepeer network, as well as the plans, strategies and timelines to make it a reality.

Mission Alignment - The Video Lens

Background on Livepeer’s Mission and Commitment

Livepeer remains committed to its mission: to build the world’s open video infrastructure. Other computing platforms attempt to become a general-purpose "AWS on blockchain" or a "run any type of computing task" marketplace, but this creates go-to-market challenges because they lack industry-specific solutions. Instead, Livepeer focuses on video computing through transcoding, which lets it build targeted products and go-to-market strategies for a specific industry (the $100 billion+ video streaming market), address real use cases, and tap into existing demand, rather than marketing a general, abstract solution that no one wants.

The focus on video means Livepeer avoids overreacting and pivoting to the latest hot trends like ICOs, NFTs or DeFi, instead always asking how these innovations can be applied to video. The highs weren’t that high, but more importantly, the lows weren’t that low either. This also attracts a mission-focused team and community with deep video expertise who are excited about what we’re doing over the long term, rather than a community that leaves when this month’s trend loses steam.

Currently, no trend is hotter than the rapid rise of artificial intelligence. But unlike many cryptocurrency teams and projects, Livepeer isn’t abandoning its mission and “pivoting to artificial intelligence.” Instead, we asked the question: How will AI impact the future of video? Artificial intelligence has lowered the barriers to entry for video creators in many ways. Two important factors are reducing the time and cost of creating in the first place, and reducing the time, cost and expertise of producing and outputting high-quality videos.

On the creative side, generative AI can be used to create video clips based on text or image prompts. Where in the past setting up a scene required a crew, sets, cameras, scripts, actors, editors and so on, now it may simply require the user to type a text prompt and then wait a few minutes for a GPU to generate a sample of the potential results. Generative video won’t replace high-quality productions, but it can provide significant cost savings at various stages of the process.

On the production side, whether a video was created by artificial intelligence or submitted by a creator, functions such as upscaling, frame interpolation, and subtitle generation can quickly improve the quality and accessibility of video content. Advanced features such as interactivity in video can be enabled through automatic object detection, masking and scene type classification.

The timing for Livepeer to leverage this AI feature set is exciting because of the recent release of open source base models, including Stable Video Diffusion, ESRGAN, FAST, and more, all keeping pace with closed source proprietary models. The goal is for the world's open video infrastructure to support running open source models that are accessible to everyone. These models already exist today and are rapidly getting better thanks to innovation from the open source AI community.

AI Background - Where Livepeer Fits in

Training, Fine-tuning, Inference

There are many stages in the AI life cycle, but the three that usually require a lot of computing power are training, fine-tuning, and inference. In short:

Training creates a model by running computations over very large data sets. Training a base model, such as those trained by OpenAI or Google, sometimes requires tens or hundreds of millions of dollars worth of computation.

Fine-tuning is more cost-effective: it takes an existing base model and adjusts its weights based on a specific set of inputs for a specific task.

Inference is the act of taking a trained and tuned model and letting it produce output or make predictions based on a set of inputs. A single inference job is often computationally cheap relative to the first two stages, but inference is performed over and over, millions of times, so the aggregate cost of inference can exceed the cost of training, which is what justifies the investment in training.
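The point about cumulative inference cost overtaking training cost comes down to simple arithmetic. The sketch below is illustrative only: the function name and the dollar figures in the usage line are hypothetical and do not come from the article. It computes how many inference requests it takes for total inference spend to reach a one-time training cost.

```python
import math
from decimal import Decimal


def break_even_requests(training_cost_usd: Decimal,
                        cost_per_inference_usd: Decimal) -> int:
    """Smallest number of inference requests whose combined cost
    reaches the one-time training cost (rounded up)."""
    if training_cost_usd < 0 or cost_per_inference_usd <= 0:
        raise ValueError("training cost must be >= 0 and per-request cost > 0")
    # Decimal keeps the division exact for typical currency inputs.
    return math.ceil(training_cost_usd / cost_per_inference_usd)


# Hypothetical figures: a $50M training run and $0.002 per inference request.
requests_needed = break_even_requests(Decimal("50000000"), Decimal("0.002"))
print(f"Inference spend matches training spend after {requests_needed:,} requests")
```

Past that request count, every further request adds to the inference side of the ledger, which is why the per-request price matters so much for widely used models.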

Training and fine-tuning require access to large data sets and densely networked GPUs that can communicate with each other and share information quickly. Networks like Livepeer are not well suited to training out of the box and would require significant updates to do the job. While decentralized networks are attractive for training as an alternative to proprietary big-tech training clouds, their competitiveness from a cost perspective is questionable, given the network overhead and inefficiencies involved in training base models.

Inference, on the other hand, is where decentralized networks like Livepeer can come into play. Each node operator can choose to load a given model onto its GPU and can compete on cost to execute inference jobs based on user input. Just like in the Livepeer transcoding network, users can submit jobs to the Livepeer network to perform AI inference and should reap the benefits of open market competitive pricing, taking advantage of currently idle GPU power and thus seeing cost benefits.
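A minimal sketch of the price competition described above follows. All names here (`Offer`, `cheapest_offer`, the node IDs and prices) are hypothetical and are not part of the Livepeer node software. The article does not describe how nodes are actually selected, and a real selection algorithm would likely weigh more than price alone.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Offer:
    """A hypothetical advertisement from one node operator."""
    node_id: str
    models: frozenset      # names of the models loaded on the node's GPU
    price_per_job: int     # assumed unit: smallest payment unit per job


def cheapest_offer(offers: Iterable[Offer], model: str) -> Optional[Offer]:
    """Return the lowest-priced offer from a node that has `model` loaded,
    or None if no node serves it. Ties are broken by node_id so the
    result is deterministic."""
    eligible = [o for o in offers if model in o.models]
    if not eligible:
        return None
    return min(eligible, key=lambda o: (o.price_per_job, o.node_id))


offers = [
    Offer("node-a", frozenset({"text-to-video"}), 30),
    Offer("node-b", frozenset({"text-to-video", "upscale"}), 20),
    Offer("node-c", frozenset({"upscale"}), 5),
]
best = cheapest_offer(offers, "text-to-video")
print(best.node_id if best else "no node serves this model")
```

Because only nodes that have already loaded the requested model are eligible, an operator's choice of which models to keep on the GPU determines which jobs it can bid for at all.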

GPUs are the lifeblood of the artificial intelligence boom. NVidia's data center business, built on GPU demand, has grown exponentially over the past year. Elon Musk joked that GPUs are harder to buy than drugs. However, DePIN networks like Livepeer have shown that through their open market dynamics, and by channeling incentives through inflationary token rewards, they can attract global GPU supply ahead of demand, thereby elastically supporting the growth of new users and applications with near-unlimited pay-as-you-go capacity. Developers no longer need to reserve hardware in advance at a high price and leave it idle when not in use; instead they can pay the lowest possible market price. This is a huge opportunity for decentralized networks to drive a boom in artificial intelligence.

Livepeer opportunity - submit AI inference tasks to the network instead of the GPU

Let the thousands of GPUs connected to Livepeer work

Cloud providers such as GCP or AWS allow you to "reserve GPU servers" on their enterprise clouds. Open networks like Akash go a step further and allow you to rent servers on demand from one of many decentralized providers around the world. But regardless of the above options, you will have to manage the rented server to run the model and perform tasks. If you want to build an application that can perform multiple tasks simultaneously, you have to scale it. You have to link workflows together.

Livepeer abstracts things into "jobs" that you can submit to the network and trust will be completed. Livepeer already does this with video transcoding, which works by submitting 2-second video segments for transcoding. You simply send a job to the network and can be confident that your broadcaster node will complete it reliably, taking care of worker node selection, failover, and redundancy.

For artificial intelligence video computing tasks, it can work in the same way. There might be a "generate video from text" job. You can trust that your node will do the job, and you can scale this to any number of jobs submitted simultaneously through a single node that can leverage a network of thousands of GPUs to perform the actual computation. Taking a step further (this is still in the design phase), you could submit an entire workflow of chained tasks rather than a single job.

The network can do this for you without you having to deploy separate models to separate machines, manage IO, shared storage, etc. No more managing servers, scaling servers, doing failovers, etc. Livepeer is a scalable infrastructure that is maximally cost-effective and highly reliable. If the network can deliver on these promises for AI video computing, as it has done for video transcoding, it will deliver a new level of developer experience and cost reduction not yet seen in the open AI world.
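The job abstraction described above, where the caller submits work and the node handles worker selection and failover, could look roughly like the sketch below. It is a toy model under stated assumptions: `Worker`, `submit_job`, `run_workflow` and `AllWorkersFailed` are hypothetical names, workers are plain in-process functions rather than remote GPUs, and payments, discovery and verification are left out entirely. The workflow part reflects an idea the article says is still in the design phase.

```python
from typing import Callable, Iterable, List, Sequence

# Hypothetical worker interface: takes a job payload and returns a result.
Worker = Callable[[str], str]


class AllWorkersFailed(RuntimeError):
    """Raised when every candidate worker failed to complete a job."""


def submit_job(payload: str, workers: Iterable[Worker]) -> str:
    """Try each worker in order and return the first successful result.
    A failing worker is skipped, which is the failover step."""
    errors: List[Exception] = []
    for worker in workers:
        try:
            return worker(payload)
        except Exception as exc:  # any worker failure triggers failover
            errors.append(exc)
    raise AllWorkersFailed(f"{len(errors)} worker(s) failed for this job")


def run_workflow(payload: str, stages: Sequence[Sequence[Worker]]) -> str:
    """Chain jobs: the output of each stage is the input of the next.
    Each stage has its own list of candidate workers."""
    result = payload
    for stage_workers in stages:
        result = submit_job(result, stage_workers)
    return result


def offline_worker(payload: str) -> str:
    raise ConnectionError("worker unreachable")


def generate(payload: str) -> str:
    return f"video({payload})"


def upscale(payload: str) -> str:
    return f"upscaled({payload})"


# The first generation worker is down, so the job fails over to the second.
output = run_workflow("a sunset over the sea",
                      [[offline_worker, generate], [upscale]])
print(output)
```

The caller never sees the failed worker; it only learns about a problem if every candidate for a stage fails, which is the property that lets an application treat the network as a single endpoint.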

Plan to quickly introduce AI video computing and verify the cost-effectiveness of the network

AI video subnet

In line with Livepeer’s journey over the past 7 years, this project will first demonstrate real, usable, functional open source software and network capabilities, and only then promote that "Livepeer has this". Here is a short version of the plan to achieve this:

Choose specific initial use cases for job types beyond video transcoding: AI-based generative video, supported by AI upscaling and frame interpolation. Great open models, such as Stable Video Diffusion, are improving every day in this space.

Move quickly by building within a fork/spike of the node software to add these features to both orchestrator (supply-side) and broadcaster (demand-side) nodes. Catalyst, Livepeer's open media server, should support an interface for requesting and consuming these generative video tasks.

Users running this spike will form some kind of sub-network on Livepeer, but they will use the Livepeer protocol to discover and pay nodes running this new feature through the Livepeer mainnet.

Work with consumer-facing front-end applications to leverage Livepeer’s cost-effective open computing network, and capture and present data that validates Livepeer’s cost-effectiveness relative to public clouds.

As we validate this, merge into the core Livepeer client, add additional job types, and grow the ecosystem around leveraging other forms of AI-based video computing.

The AI video sub-network node runs together with the transcoding node, while using the Livepeer mainnet for payment.

AI Video SPE

Livepeer recently introduced a community-governed on-chain treasury to the protocol with its Delta upgrade and has been using LPT for several months to fund public goods programs. There is already a pre-proposal under discussion and approaching a vote to fund a special purpose entity (SPE) dedicated to making the promise of AI video computing a reality. The first proposal aims to fund the core development needed to complete the first 4 tasks listed above, including:

A fork of the Livepeer node software that develops these AI capabilities.

The ability for node operators to form a sub-network and perform these tasks, with payments made on the Livepeer mainnet.

A front-end application that exposes these capabilities to consumers.

A collection of benchmarks and data showing the cost-effectiveness of the Livepeer network for performing artificial intelligence inference at scale.

It also raises potential future funding milestones to provide infrastructure credits from the treasury to cover the initial cost of consumer use during this data collection period.

The #ai-video channel in the Livepeer Discord has become a hot spot for discussion and collaboration around the initiative, and anyone who believes in open AI infrastructure and the future of video AI computing should stop by, say hello, and get involved. Node operators have begun benchmarking different hardware, becoming familiar with running these open video models, and addressing the challenges of moving from video transcoding expertise to other video-specific job types. It's a fun time to be part of a rapidly growing project team.

The future

While this initial milestone can show that Livepeer is cost-effective for the specific forms of AI video computing it supports, the real ultimate power lies in the ability of AI developers to bring their own (BYO) models, BYO weights, BYO fine-tunes, or deploy custom LoRAs built on the network's existing base models.

Supporting these initial capabilities across a diverse set of models and forms of compute will result in rapid learning about node operations, model loading/unloading on the GPU, node discovery and negotiation, failover, payments, validation, and more as they apply to AI video computing. From there, we can evaluate future milestones for producing and supporting arbitrary AI video computing job types on the Livepeer network.

Early on, video-specific platforms such as Livepeer Studio can build APIs and products that let video developers take advantage of the supported models. Consumer applications, such as those proposed in the AI Video SPE, can use these capabilities directly on the Livepeer network through Catalyst nodes. But as these capabilities expand, new creator-focused AI businesses can form and leverage Livepeer’s global GPU network to cost-effectively build customized experiences without relying on expensive big-tech clouds and their proprietary models as the backbone of their business.

It is an exciting road ahead, and it will take work to get there. There is no doubt that artificial intelligence will change the world of video faster than we can imagine in the coming years, and we expect the world's open video infrastructure to become the most cost-effective, accessible, scalable, and reliable backbone for all the computing required to enable this bold new future.
