Author: Vitalik, co-founder of Ethereum; Translation: 0xjs@金财经

In the past week, an article was widely circulated about a company that lost $25 million when a finance employee was persuaded to send a bank wire to a scammer posing as the CFO... over what appeared to be a very convincing deepfake video call.

Deepfakes (that is, fake audio and video generated by artificial intelligence) are appearing with increasing frequency in the cryptocurrency space and elsewhere. Over the past few months, deepfakes of me have been used to promote various scams, as well as Dogecoin. The quality of deepfakes is improving rapidly: while the deepfakes of 2020 were obvious and embarrassingly bad, those of the past few months have become increasingly difficult to distinguish from the real thing. People who know me well can still identify the recent deepfake video of me as fake, because it has me saying "let's f***ing go" whereas I only ever use "LFG" to mean "looking for group", but people who have heard my voice only a few times could easily be persuaded.

Security experts consulted about the aforementioned $25 million theft unanimously confirmed that it was a rare and embarrassing failure of corporate operational security on multiple levels: standard practice is to require multiple levels of sign-off before any transfer approaching that size can be approved. But even so, the fact remains that as of 2024, a person's audio or even video stream is no longer a secure way to verify their identity.

This raises the question: What is the safe way?

Cryptography alone doesn’t solve the problem

Being able to securely verify people’s identities is valuable to all kinds of people in all kinds of situations: individuals recovering their social recovery or multi-signature wallets, businesses approving commercial transactions, individuals approving large transactions for personal use (e.g. investing in a startup, purchasing a home, sending remittances), whether in cryptocurrency or fiat, and even family members who need to verify each other's identity in an emergency. It is therefore important to have a good solution that can survive the coming era of relatively easy deepfakes.

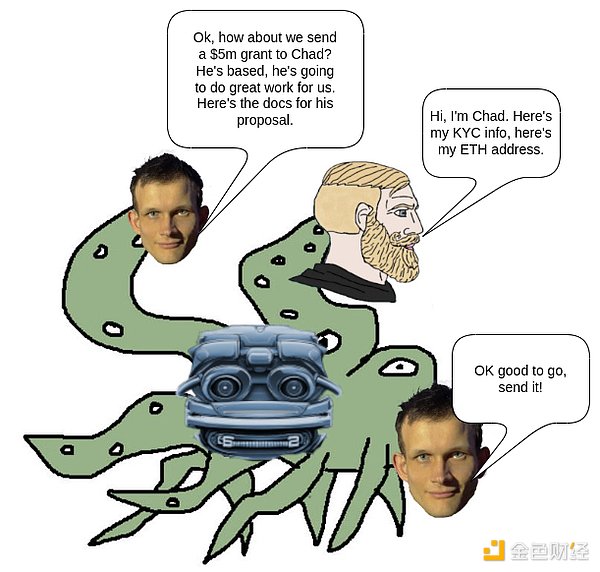

One answer to this question that I often hear in crypto circles is: "You can authenticate yourself by providing a cryptographic signature from an address attached to your ENS / Proof of Humanity profile / public PGP key". This is an attractive answer. However, it completely misses why it is useful to have other people involved in signing a transaction in the first place. Let's say you are an individual with a personal multi-signature wallet, and you are sending a transaction that you want some co-signers to approve. Under what circumstances should they approve it? Only if they are convinced that you are the one who actually wants to make the transfer. If it's a hacker who stole your key, or a kidnapper, they should not approve. In an enterprise environment, you typically have more layers of defense; but even then, an attacker could impersonate a manager not just for the final request, but also in the earlier stages of the approval process. They may even hijack a legitimate request in progress by supplying the wrong address.

So, in many cases, having the other signers accept that you are you simply because you signed with your key defeats the whole point: it turns the entire contract into a 1-of-1 multi-signature, where someone only needs to control your single key in order to steal the funds!
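The collapse into a 1-of-1 scheme can be illustrated with a small simulation. This is only a sketch under stated assumptions: the `Request` fields, the two co-signer policies, and the 3-of-3 threshold are hypothetical names and numbers chosen for illustration, not part of any real wallet.

```python
from dataclasses import dataclass

@dataclass
class Request:
    signed_by_owner_key: bool   # a valid signature from the owner's key is attached
    passed_human_check: bool    # hypothetical: requester passed an out-of-band identity check

def naive_cosigner(req: Request) -> bool:
    # Approves whenever the owner's key has signed: the key is the only thing checked.
    return req.signed_by_owner_key

def careful_cosigner(req: Request) -> bool:
    # Also requires evidence that a real, willing person is behind the request.
    return req.signed_by_owner_key and req.passed_human_check

def executes(req: Request, cosigners, threshold: int) -> bool:
    # The owner's own signature counts as one approval; co-signers add the rest.
    total = int(req.signed_by_owner_key) + sum(1 for c in cosigners if c(req))
    return total >= threshold

# An attacker who holds only the owner's stolen key.
stolen_key_request = Request(signed_by_owner_key=True, passed_human_check=False)

print(executes(stolen_key_request, [naive_cosigner, naive_cosigner], threshold=3))
print(executes(stolen_key_request, [careful_cosigner, careful_cosigner], threshold=3))
```

With the naive policy, one stolen key is enough to reach the threshold, so the extra signers add nothing. With the careful policy, the same stolen key gets nowhere.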

This is where we get to an answer that actually makes sense: security questions.

Security questions

Suppose someone sends you a text message claiming to be your friend. They text from an account you've never seen before and claim to have lost all their devices. How do you determine if they are who they say they are?

There’s an obvious answer: ask them things about their life that only they would know. These should be things that:

You know

You expect them to remember

The internet does not know

Are hard to guess

Ideally, even people who have hacked into corporate and government databases don't know

The natural question to ask them is about shared experiences. Possible examples include:

When the two of us last met, at which restaurant did we have dinner, and what food did you have?

Which of our friends made that joke about an ancient statesman? And which politician was it?

What movie did we watch recently that you didn’t like?

Last week you suggested that I talk to ____ about the possibility of them helping us with our ____ study?

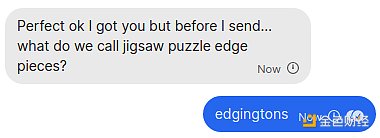

A real example of a security question that someone recently used to verify my identity.

The more unique your question, the better. Questions right on the edge, where someone has to think for a few seconds and might even have forgotten the answer, are good; but if the person you're asking does claim to have forgotten, make sure to ask them three more questions. Asking about "micro" details (what someone liked or disliked, specific jokes, etc.) is generally better than asking about "macro" details, because the former are usually much harder for a third party to accidentally dig up (e.g. if even one person posted a photo of the dinner on Instagram, a modern LLM may well be fast enough to catch it and provide the location in real time). If your question is potentially guessable (in the sense that only a few potential options make sense), increase the entropy by adding another question.
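The effect of adding another question can be estimated with a little arithmetic. The sketch below assumes, purely for illustration, that each question has some number of equally plausible answers and that the questions are independent; the function names and the example counts (4 and 8) are hypothetical.

```python
import math

def entropy_bits(option_counts):
    """Total entropy in bits for a set of independent questions,
    assuming each question's plausible answers are equally likely."""
    return sum(math.log2(n) for n in option_counts)

def blind_guess_probability(option_counts):
    """Chance that an impostor guesses every answer correctly at random."""
    return 1 / math.prod(option_counts)

one_question = [4]        # e.g. only four restaurants would make sense
two_questions = [4, 8]    # add a second question with eight plausible answers

print(entropy_bits(one_question), blind_guess_probability(one_question))
print(entropy_bits(two_questions), blind_guess_probability(two_questions))
```

Under these assumptions, a single question with four plausible answers gives 2 bits and a 1-in-4 chance of a lucky guess; adding a second question with eight plausible answers raises that to 5 bits and a 1-in-32 chance.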

People often stop participating in security practices if they are boring, so it’s healthy to make security questions fun! They can be a way to remember positive shared experiences. They can even be motivation to actually have those experiences.

Complementing security questions

No single security strategy is perfect, so it is best to stack multiple techniques together.

Pre-agreed password: When you are together, intentionally agree on a shared password that you can use to authenticate each other in the future.

It may even be worth agreeing on a duress word: a word that you can casually insert into a sentence to quietly signal to the other person that you are being coerced or threatened. The word should be common enough that it feels natural when you use it, but rare enough that you won't accidentally insert it into your speech.

When someone sends you an ETH address, ask them to confirm it through multiple channels (such as Signal and Twitter DMs, the company website, or even mutual acquaintances).

Guard against man-in-the-middle attacks: Signal "safety numbers", Telegram emojis and similar features are all worth understanding and watching out for.

Daily limits and delays: Simply impose delays on actions with severe and irreversible consequences. This can be done at the policy level (agreeing in advance with signers that they will wait N hours or days before signing) or at the code level (imposing limits and delays in the smart contract code).

A potentially sophisticated attack in which an attacker impersonates executives and assignees at multiple steps in the approval process. Security questions and delays can both guard against this; using both is probably better.
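The code-level version of limits and delays can be sketched as follows. This is a plain Python simulation of the idea, not smart contract code; the class name `DelayedVault`, its methods, and the example numbers are hypothetical, and time is passed in explicitly as seconds so the behavior is easy to follow.

```python
from dataclasses import dataclass, field

SECONDS_PER_DAY = 86400

@dataclass
class DelayedVault:
    delay_seconds: int                                  # wait enforced before any transfer executes
    daily_limit: int                                    # maximum total amount executable per day
    pending: dict = field(default_factory=dict)         # request id -> (amount, earliest execution time)
    spent_by_day: dict = field(default_factory=dict)    # day index -> amount already executed
    next_id: int = 0

    def request(self, amount: int, now: int) -> int:
        """Queue a transfer; it cannot execute until the delay has passed."""
        rid = self.next_id
        self.next_id += 1
        self.pending[rid] = (amount, now + self.delay_seconds)
        return rid

    def cancel(self, rid: int) -> None:
        """Drop a queued transfer, e.g. after a signer notices something is wrong."""
        self.pending.pop(rid, None)

    def execute(self, rid: int, now: int) -> int:
        if rid not in self.pending:
            raise KeyError("unknown or cancelled request")
        amount, ready_at = self.pending[rid]
        if now < ready_at:
            raise PermissionError("delay has not elapsed")
        day = now // SECONDS_PER_DAY
        spent = self.spent_by_day.get(day, 0)
        if spent + amount > self.daily_limit:
            raise PermissionError("daily limit exceeded")
        self.spent_by_day[day] = spent + amount
        del self.pending[rid]
        return amount

vault = DelayedVault(delay_seconds=3600, daily_limit=100)
rid = vault.request(60, now=0)
print(vault.execute(rid, now=3600))   # allowed: the one-hour delay has passed
```

The delay gives the other signers a window in which to ask a security question and call `cancel` if the answer is wrong, while the daily limit caps the damage if an impersonation does slip through.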

Security questions are good because, unlike many other techniques that fail because they are not human-friendly, they are built on information that humans are inherently good at remembering. I've been using security questions for years, and it's a habit that actually feels very natural and not awkward, and it is worth incorporating into your workflow in addition to other layers of protection.

Please note that the "person-to-person" security questions described above are a very different use case from "business-to-person" security questions, such as when you call your bank to reactivate your credit card after it has been deactivated for the 17th time because you traveled to another country, and, once you get through 40 minutes of annoying hold music, a bank employee appears and asks for your name, your birthday, and maybe your last three transactions. The kinds of questions that an individual knows the answers to are very different from the kinds that a business knows the answers to. It is therefore worth thinking about the two cases separately.

Everyone’s situation is unique, so the kinds of unique shared information you have with the people you may need to authenticate will differ from person to person. Generally speaking, it's better to adapt the technique to the people rather than the people to the technique. A technique doesn't need to be perfect to work: the ideal approach is to stack multiple techniques together and choose the ones that work best for you. In a post-deepfake world, we do need to adapt our strategies to the new reality of what is now easy to fake and what is still hard to fake, but as long as we do so, it is still entirely possible to stay safe.
