Source: Higress

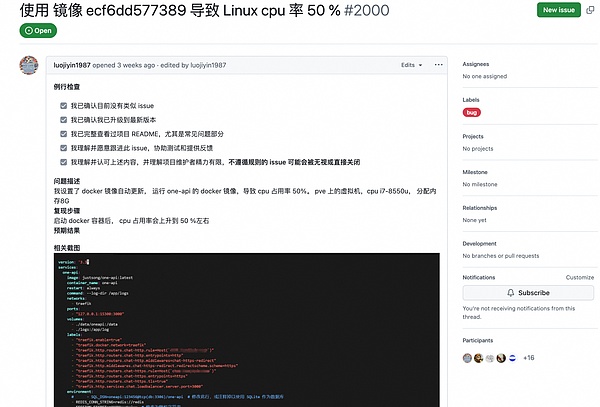

01 What happened

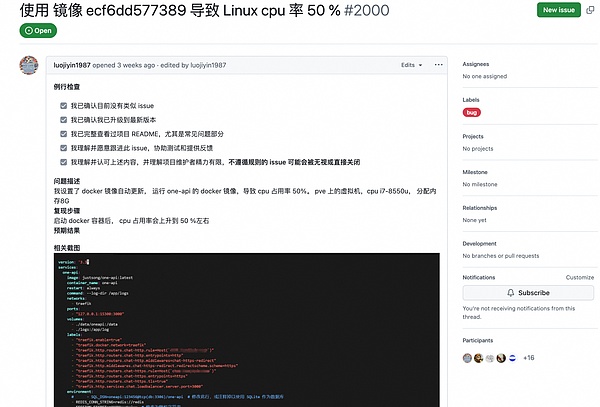

OneAPI is an open source AI gateway tool with 20,000 stars on GitHub, the open source code hosting platform. Users found that after installing the latest version of the image, the container kept consuming a certain share of CPU[1].

The investigation concluded that the DockerHub image had been poisoned. An XMR (Monero) mining script had been implanted in it, driving CPU usage up:

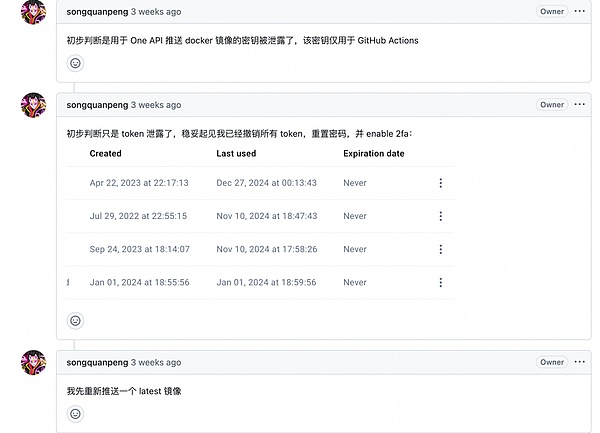

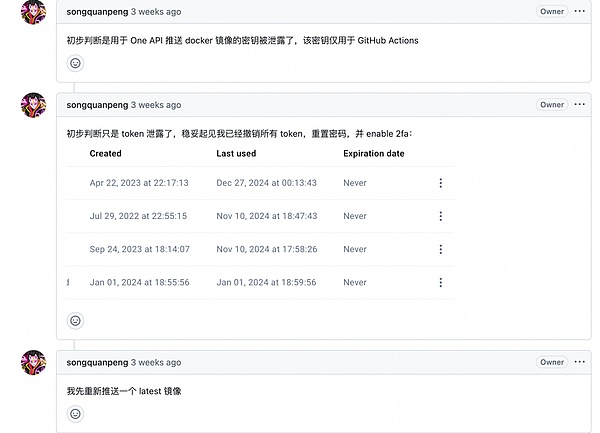

It has now been confirmed that the DockerHub push key was leaked, which allowed the attackers to implant mining scripts into multiple image versions.

With the cryptocurrency market running hot, security incidents involving mining scripts have been on the rise in recent years. Attackers look for the DockerHub repositories of popular open source projects to break into.

Aviv Sasson, a security researcher at Palo Alto Networks, once found 30 Docker images implanted with mining Trojans. Together these images had been downloaded 20 million times, and they are estimated to have helped the attackers mine cryptocurrency worth $200,000.

Docker images injected with mining scripts are not an isolated phenomenon, but a security issue that needs to be taken seriously.

02 Some background knowledge

2.1. DockerHub

DockerHub is the world's largest container image hosting service, with more than 100,000 container images from software vendors and open source projects.

A container image is a complete package of a piece of software and its runtime environment, so installing from an image spares you a complicated configuration process.

A container image hosting service is an online platform for storing and sharing container images. You can think of it as a "big store" for applications that stocks all kinds of software packages.

Because DockerHub provides free services, many open source software projects choose to publish their container images here. This makes it easy for users to obtain, install, and use the software.

2.2. AI Gateway

OneAPI, the project whose image was implanted with a Trojan, is an open source AI gateway tool.

As the number of AI vendors grows, the capabilities of their LLMs are gradually converging. AI gateway tools emerged to meet the varied needs that arise when using them: the gateway receives user requests in one place and forwards them to different LLMs for processing.

Common usage scenarios for an AI gateway include:

Improve the stability of the overall service: When a model has a problem, you can switch to another model.

Reduce costs: You can replace expensive models with cheaper models when appropriate, such as using DeepSeek instead of ChatGPT.

Moreover, the switch is imperceptible to users, so the user experience is not affected. Through an AI gateway, model resources can be used more flexibly and efficiently, which is why it is widely adopted by AI application developers.
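The switching behaviour described above can be illustrated with a minimal sketch. It is not how OneAPI or Higress implement routing; the `Backend` type, the function names, and the two stand-in models are all hypothetical and exist only to show the idea of trying a backup model when the primary one fails.

```python
from typing import Callable, Sequence

# Hypothetical backend type: takes a prompt, returns the model's reply,
# and raises an exception when the model is unavailable.
Backend = Callable[[str], str]


def ask_with_fallback(
    prompt: str, backends: Sequence[tuple[str, Backend]]
) -> tuple[str, str]:
    """Try each (name, backend) pair in order; return (name, reply) of the first success."""
    errors: list[str] = []
    for name, backend in backends:
        try:
            return name, backend(prompt)
        except Exception as exc:  # a real gateway would only retry on retryable errors
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(errors))


def flaky_model(prompt: str) -> str:
    # Stand-in for a model that is currently down.
    raise TimeoutError("upstream timed out")


def cheap_model(prompt: str) -> str:
    # Stand-in for a working backup model.
    return f"echo: {prompt}"


used, reply = ask_with_fallback(
    "hello", [("primary", flaky_model), ("backup", cheap_model)]
)
print(used, reply)
```

The caller only sees one function and one reply, which is the sense in which the switch is imperceptible to users.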

03 How to prevent similar risks

The author of this article is one of the maintainers of Higress, another open source AI gateway project. I came across this problem while following OneAPI, so I would like to share Higress's experience in preventing such risks.

Higress is an open source gateway developed by Alibaba Cloud[1]. Unlike OneAPI, which is only an AI gateway, Higress builds its AI gateway capabilities on top of API gateway capabilities. It is not a personal project: it is jointly maintained by the R&D team behind the commercial product Alibaba Cloud API Gateway.

Higress has always used Alibaba Cloud Container Registry for image storage and has its own official Helm repository (package management for installing into K8s environments).

There are at least two benefits to using Alibaba Cloud Container Registry:

It is not affected by network blocking of DockerHub, so it is friendlier to users in mainland China and pulls images faster.

Image security scans can be run to automatically intercept risky image pushes.

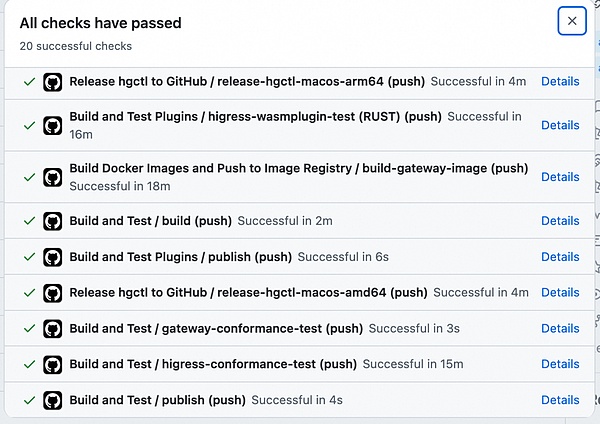

The second point is also the core of preventing open source image poisoning, as shown in the following screenshot:

Based on the cloud native delivery chain feature of Alibaba Cloud Container Registry, a malicious script scan runs as soon as an image is pushed. If a risk is found, the image can be deleted immediately.

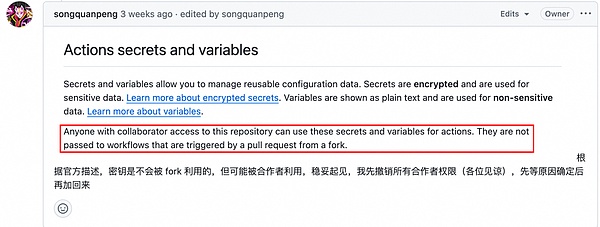

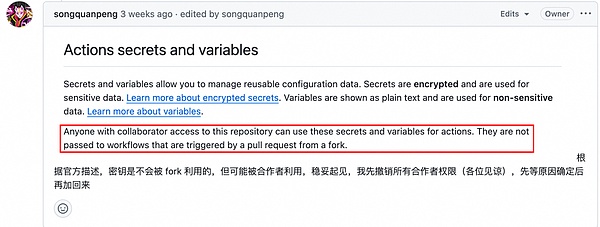

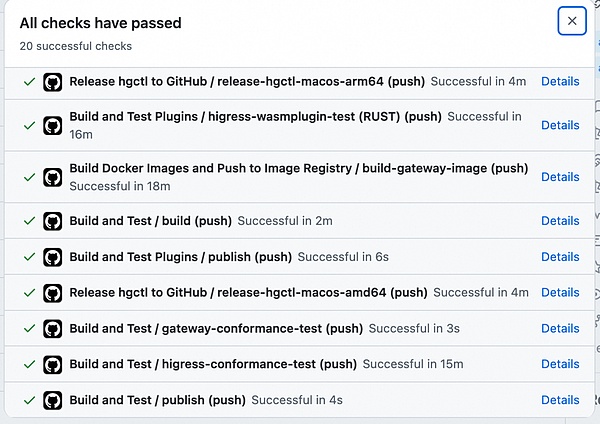

It is equally important that each new version is released by an automated program rather than by hand. For every release, the Higress community builds the container images and installation packages automatically with GitHub Actions, and the image repository key is stored in GitHub Secrets. Permission to publish a release can be granted to other collaborators in the community without giving them the image repository password.
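As a rough illustration of keeping the key out of human hands, the sketch below shows a release helper that takes registry credentials only from environment variables, which is how CI systems typically expose stored secrets to a job. This is not the Higress release script: the variable names `REGISTRY`, `REGISTRY_USERNAME`, and `REGISTRY_PASSWORD` and the function name are assumptions made for the example. The only external interface it relies on is the `docker login --password-stdin` option, which reads the password from standard input instead of the command line.

```python
import os
from typing import Mapping

# Hypothetical variable names; a CI workflow would map its stored secrets to them.
REQUIRED_VARS = ("REGISTRY", "REGISTRY_USERNAME", "REGISTRY_PASSWORD")


def registry_login_command(
    env: Mapping[str, str] = os.environ,
) -> tuple[list[str], str]:
    """Return the `docker login` argv plus the password to feed on stdin."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError("missing CI secrets: " + ", ".join(missing))
    argv = [
        "docker", "login",
        "--username", env["REGISTRY_USERNAME"],
        "--password-stdin",  # the password never appears in argv or process listings
        env["REGISTRY"],
    ]
    return argv, env["REGISTRY_PASSWORD"]


# Demonstration with a fake environment; nothing is executed here.
demo_env = {
    "REGISTRY": "registry.example.com",
    "REGISTRY_USERNAME": "ci-bot",
    "REGISTRY_PASSWORD": "s3cret",
}
argv, password = registry_login_command(demo_env)
print(" ".join(argv))
```

In a real job the pair would be passed to something like `subprocess.run(argv, input=password, text=True, check=True)`. Collaborators who trigger the release never see the password, and a run without the secrets fails early instead of prompting a person to type one.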

04 Quickly experience Higress AI Gateway

Higress AI Gateway supports one-line command installation:

```bash
curl -sS https://higress.cn/ai-gateway/install.sh | bash
```

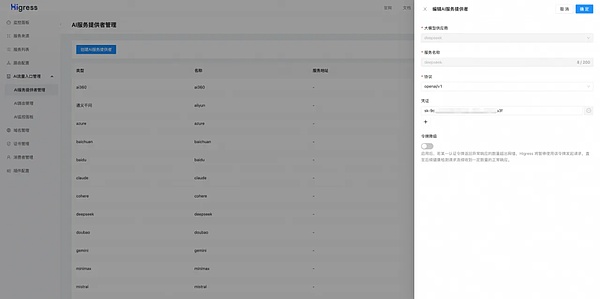

After running the command, you can initialize the configuration from the command line. As you can see, Higress's AI gateway supports all mainstream LLM suppliers inside and outside China:

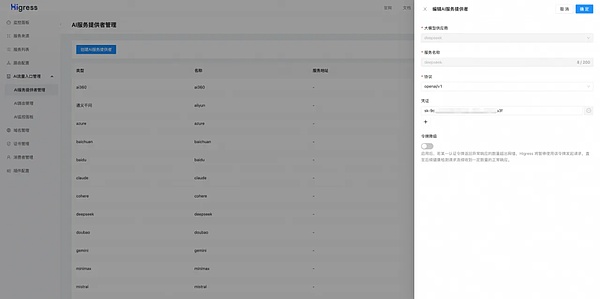

You can also choose to skip this step and go to the Higress console to configure the API Key of the corresponding supplier:

Once configured, it can be used directly, for example with OpenAI's SDK:

```python
from openai import OpenAI

client = OpenAI(
    # You can use a consumer key generated by Higress here, so the API key
    # can be sub-leased without handing out the real supplier key.
    api_key="xxxxx",
    base_url="http://127.0.0.1:8080/v1",
)

completion = client.chat.completions.create(
    # model="qwen-max",
    # model="gemini-1.5-pro",
    # Any model name can be used; Higress routes to the corresponding
    # supplier based on the model name.
    model="deepseek-chat",
    messages=[
        {"role": "user", "content": "你好"}  # "Hello"
    ],
    stream=True,
)

# Print each streamed delta as it arrives.
for chunk in completion:
    print(chunk.choices[0].delta)
```
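The loop above prints every delta object as it arrives. If you want the reply as a single string instead, the fragments can be joined, as in the following sketch. The helper name `collect_text` is hypothetical, and `fake_chunk` is only a stand-in with the same attribute shape as a streamed chunk, so the example runs without a gateway or the SDK installed.

```python
from types import SimpleNamespace
from typing import Any, Iterable


def collect_text(chunks: Iterable[Any]) -> str:
    """Join the text fragments of a streamed chat completion into one string."""
    parts: list[str] = []
    for chunk in chunks:
        if not chunk.choices:  # some chunks carry no choices at all
            continue
        content = chunk.choices[0].delta.content
        if content:  # skip deltas whose content is None or empty
            parts.append(content)
    return "".join(parts)


def fake_chunk(content):
    # Stand-in for a streamed chunk: exposes .choices[0].delta.content only.
    delta = SimpleNamespace(content=content)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


stream = [
    fake_chunk(None),
    fake_chunk("Hel"),
    fake_chunk("lo"),
    SimpleNamespace(choices=[]),
]
print(collect_text(stream))
```

With the real client, the same function would be called as `collect_text(completion)` in place of the printing loop.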

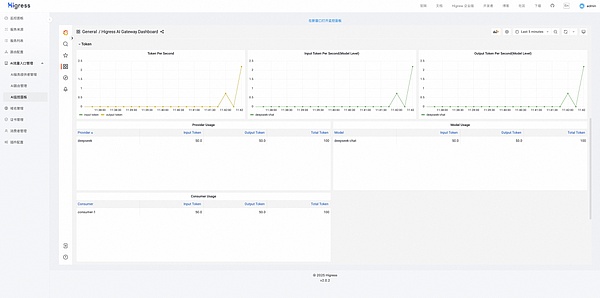

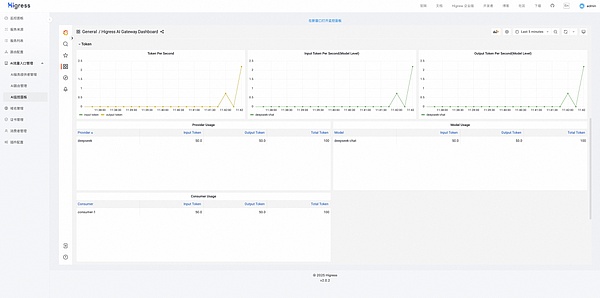

In the monitoring panel you can see the token consumption and call latency of each model and each consumer:

In addition, compared with OneAPI, Higress provides more practical functions, such as:

API key governance: Supports configuring an API key pool to balance load across multiple keys. A key that becomes unavailable, for example because it is throttled, is blocked automatically and restored automatically once it is usable again.

Consumer management: By creating consumers, you can sub-lease API keys without exposing the real supplier API key to the caller, and you can manage the call permissions and call quotas of each consumer at a fine granularity.

Fallback model: Supports configuring fallback models. For example, when a request to a DeepSeek model fails, it automatically falls back to an OpenAI model.

Model canary release: Supports smooth, proportion-based canary (grayscale) rollout between models. See "DeepSeek-R1 is here, how to smoothly migrate from OpenAI to DeepSeek".
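To make the API key governance item in the list above more concrete, here is a minimal sketch of a key pool that rotates through keys and temporarily blocks one that was throttled. It illustrates the general technique only and is not Higress's implementation; the class name `KeyPool`, its methods, and the cooldown policy are assumptions made for the example.

```python
import time
from typing import Callable


class KeyPool:
    """Round-robin over API keys, skipping keys that are cooling down."""

    def __init__(
        self,
        keys: list[str],
        cooldown_seconds: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        if not keys:
            raise ValueError("at least one key is required")
        self._keys = list(keys)
        self._cooldown = cooldown_seconds
        self._clock = clock  # injectable so the behaviour can be tested
        self._blocked_until: dict[str, float] = {}
        self._next = 0

    def acquire(self) -> str:
        """Return the next usable key, or raise if every key is blocked."""
        now = self._clock()
        for _ in range(len(self._keys)):
            key = self._keys[self._next]
            self._next = (self._next + 1) % len(self._keys)
            if self._blocked_until.get(key, 0.0) <= now:
                return key
        raise RuntimeError("all API keys are temporarily blocked")

    def report_throttled(self, key: str) -> None:
        """Block a key (for example after an HTTP 429) until the cooldown ends."""
        self._blocked_until[key] = self._clock() + self._cooldown


# Demonstration with a fake clock so no real waiting is needed.
now = [0.0]
pool = KeyPool(["key-a", "key-b"], cooldown_seconds=30.0, clock=lambda: now[0])
first = pool.acquire()
pool.report_throttled(first)        # first key is now blocked for 30 seconds
second = pool.acquire()
third = pool.acquire()              # the blocked key is skipped
now[0] = 31.0                       # cooldown has passed
fourth = pool.acquire()
print(first, second, third, fourth)
```

Restoring a key after a fixed cooldown is the simplest policy; a gateway could equally probe the key or honour a retry hint from the supplier.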

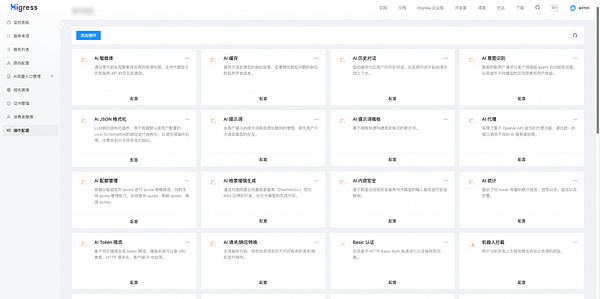

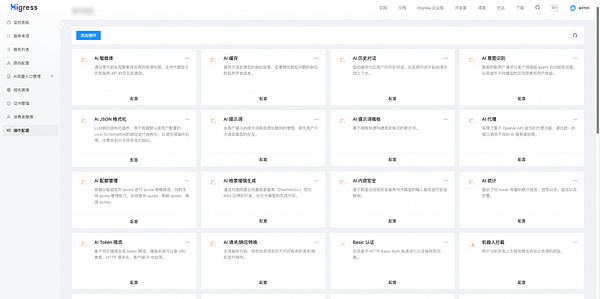

Higress's plug-in marketplace offers many out-of-the-box plug-ins, such as prompt templates, AI caching, data masking, and content security:

The plug-in code is also open source, and you can develop your own plug-ins. Plug-ins are hot-loaded on the gateway without disrupting traffic, which suits real-time session scenarios such as the RealTime API because long-lived connections are not dropped.

Weiliang
