Author: Shlok Khemani, Oliver Jaros Source: Decentralised.co Translation: Shan Ouba, Golden Finance

Today's post is an explanation of agent frameworks, and our assessment of how far they have come. This is also a call for proposals, targeting founders working at the intersection of Internet money rails (crypto) and agents.

Over the past year, Decentralised.co has delved deeply into the intersection of crypto and AI. We even built a product used by over 70,000 people that tracks AI agents and agent infrastructure. Although the frenzy around the space has subsided in recent weeks, the impact of AI on technology and society is something we have not seen since the internet. If cryptocurrency is to become the financial rail of the future, as we predict, then its intertwining with AI will be a recurring theme, not a one-off.

One of the more interesting categories of projects emerging from this wave is crypto-native AI agent frameworks. They are a fascinating experiment that brings the core principles of blockchain — permissionless value transfer, transparency, and aligned incentives — to AI development. Their open-source nature provides us with a rare opportunity to peek into their inner workings and analyze not only their promises, but how they actually work.

In this post, we first dissect what agent frameworks actually are and why they’re important. We then tackle the obvious question: why do we need crypto-native frameworks when mature options like LangChain exist? To do this, we analyze the leading crypto-native frameworks and their strengths and limitations for different use cases. Finally, if you’re building an AI agent, we’ll help you decide which framework might be right for your needs. Or, if you should build with a framework at all.

Let’s dive in.

"The progress of civilization consists in extending the number of important operations which we can perform without thinking." - Alfred North Whitehead

Think about how our ancestors lived. Each family had to grow its own food, make its own clothes, and build its own shelter. They spent countless hours on basic survival tasks, leaving little time for anything else. Even two centuries ago, nearly 90% of people worked in agriculture. Today, we buy our food from supermarkets, live in houses built by experts, and wear clothes produced in faraway factories. Tasks that once consumed the efforts of generations have become simple transactions. Today, only 27% of the world's population is engaged in agriculture (it falls to less than 5% in developed countries).

When we begin to master a new technology, familiar patterns emerge. We start by understanding the fundamentals—what works, what doesn’t, and which patterns keep emerging. Once those patterns become clear, we package them into abstractions that are easier, faster, and more reliable. These abstractions free up time and resources for more diverse and meaningful challenges. The same is true for building software.

Take web development as an example. In the early days, developers needed to write everything from scratch—handling HTTP requests, managing state, and creating UIs—tasks that were complex and time-consuming. Then frameworks like React emerged that greatly simplified these challenges by providing useful abstractions. Mobile development has followed a similar path. Initially, developers needed deep, platform-specific knowledge until tools like React Native and Flutter emerged, allowing them to write code once and deploy anywhere.

A similar pattern of abstraction is happening in machine learning. In the early 2000s, researchers discovered the potential of GPUs for ML workloads. At first, developers had to wrestle with graphics primitives and languages like OpenGL’s GLSL — tools that weren’t built for general-purpose computing. That all changed in 2006 when NVIDIA introduced CUDA, making GPU programming more accessible and bringing ML training to a wider group of developers.

As ML development gained momentum, specialized frameworks emerged to abstract the complexity of GPU programming. TensorFlow and PyTorch enable developers to focus on model architectures rather than getting bogged down in low-level GPU code or implementation details. This has accelerated iterations of model architectures and the rapid advances in AI/ML we’ve seen over the past few years.

We are now seeing a similar evolution with AI agents: software programs that can make decisions and take actions to achieve a goal, much like a human assistant or employee. An agent uses a large language model as its "brain" and can leverage different tools, such as searching the web, making API calls, or accessing a database, to complete tasks.

To build an agent from scratch, developers must write complex code to handle each aspect: how the agent thinks about a problem, how it decides what tools to use and when, how it interacts with those tools, how it remembers the context of earlier interactions, and how to break large tasks into manageable steps. Each pattern must be solved separately, leading to duplicated work and inconsistent results.
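The pieces listed above (deciding, choosing a tool, calling it, remembering the result) can be sketched as a single loop. This is a minimal illustration, not any framework's actual API: `stub_llm`, `run_agent`, and the `tool:argument` reply format are all hypothetical, and the stub stands in for a real model call.

```python
from typing import Callable, Dict, List

# Hypothetical tool registry: tool name -> function taking a string argument.
Tool = Callable[[str], str]


def stub_llm(goal: str, history: List[str]) -> str:
    """Stand-in for a real LLM call; replies 'tool:arg' or 'final:answer'."""
    if not history:
        return "search:" + goal
    return "final:" + history[-1]


def run_agent(goal: str, tools: Dict[str, Tool],
              llm: Callable[[str, List[str]], str] = stub_llm,
              max_steps: int = 5) -> str:
    history: List[str] = []  # memory of earlier tool results
    for _ in range(max_steps):
        decision = llm(goal, history)            # think: decide the next step
        kind, _, payload = decision.partition(":")
        if kind == "final":
            return payload                       # the model decided it is done
        if kind not in tools:
            history.append(f"unknown tool {kind}")
            continue
        history.append(tools[kind](payload))     # act: call the chosen tool
    return "gave up after max_steps"


tools = {"search": lambda q: f"top result for '{q}'"}
answer = run_agent("ETH price", tools)
print(answer)
```

Even in this toy form, the loop has to handle unknown tools, step limits, and history, which is the kind of repeated plumbing the next paragraph says frameworks take over.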

This is where AI agent frameworks come in. Just as React simplified web development by handling the tricky parts of UI updates and state management, these frameworks solve common challenges in building AI agents. They provide ready-made components for the patterns we have found to be effective, such as how to structure an agent's decision-making process, integrate different tools, and maintain context across multiple interactions.

With a framework, developers can focus on what makes their agent unique—its specific capabilities and use cases—rather than rebuilding these basic components. They can create sophisticated AI agents in days or weeks rather than months, more easily experimenting with different approaches and learning from best practices discovered by other developers and the community.

To better understand the importance of a framework, consider a developer building an agent that helps doctors review medical reports. Without a framework, they would need to code all of this from scratch: handling email attachments, extracting text from PDFs, entering text into LLMs in the correct format, managing conversation history to keep track of what has been discussed, and ensuring the agent responds appropriately. That’s a lot of complex code for tasks that aren’t unique to their specific use case.

With an agent framework, many of these building blocks are available right out of the box. The framework handles reading emails and PDFs, provides patterns for building medical knowledge prompts, manages the flow of conversations, and even helps keep track of important details across multiple exchanges. Developers can focus on what makes their agents unique, such as fine-tuning medical analysis prompts or adding specific safety checks for diagnostics, rather than reinventing common patterns. What might have taken months to build from scratch can now be prototyped in days.

LangChain has become the Swiss Army Knife of AI development, providing a flexible toolkit for building LLM-based applications. While not strictly an agent framework, it provides the basic building blocks on which most agent frameworks are built, from chains for ordering LLM calls to memory systems for maintaining context. Its broad ecosystem of integrations and rich documentation make it a top starting point for developers looking to build practical AI applications.

Then there are multi-agent frameworks like CrewAI and AutoGen, which enable developers to build systems where multiple AI agents work together, each with its own unique roles and capabilities. Rather than simply performing tasks sequentially, these frameworks emphasize agent collaboration through dialogue to solve problems together.

For example, when assigned a research report, one agent might outline its structure, another might collect relevant information, and a third might comment on and refine the final draft. It's like forming a virtual team where AI agents can discuss, debate, and work together to improve solutions. Multi-agent systems that work together in this way to achieve high-level goals are often referred to as AI agent "clusters."

Although not a traditional framework, AutoGPT pioneered the concept of autonomous AI agents. It shows how AI can take a high-level goal, break it down into subtasks, and complete them independently with minimal human input. Despite its limitations, AutoGPT has sparked a wave of innovation in autonomous agents and influenced the design of subsequent, more structured frameworks.

All of this background ultimately brings us to the rise of crypto-native AI agent frameworks. At this point, you might be wondering, why does Web3 need its own framework when we already have relatively mature frameworks like LangChain and CrewAI in Web2? Surely developers can use these existing frameworks to build whatever agents they want? This skepticism is reasonable given the industry’s penchant for forcing Web3 into any and all narratives.

We believe there are three good reasons for Web3-specific agent frameworks to exist.

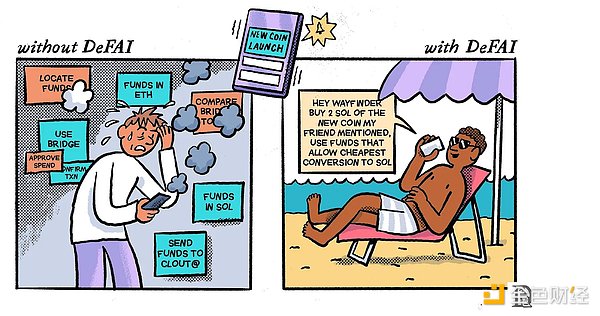

We believe that the majority of financial transactions in the future will take place on blockchain rails. This has accelerated the need for a class of AI agents that can parse on-chain data, execute blockchain transactions, and manage digital assets across multiple protocols and networks. From automated trading bots that can detect arbitrage opportunities to portfolio managers that execute yield strategies, these agents rely on deep integration of blockchain capabilities in their core workflows.

Traditional Web2 frameworks do not provide native components for these tasks. You must cobble together third-party libraries to interact with smart contracts, parse raw on-chain events, and handle private key management - introducing complexity and potential vulnerabilities. In contrast, dedicated Web3 frameworks handle these functions out of the box, allowing developers to focus on their agents’ logic and policies rather than wrestling with low-level blockchain plumbing.

Blockchains are about more than just digital currencies. They provide a global, trust-minimized system of record, with built-in financial tools that enhance multi-agent coordination. Developers can use on-chain primitives like staking, escrow, and incentive pools to coordinate the interests of multiple AI agents rather than relying on off-chain reputation or siloed databases.

Imagine a group of agents collaborating on a complex task (e.g., data labeling for training a new model). Each agent’s performance can be tracked on-chain, with rewards automatically distributed based on contribution. The transparency and immutability of blockchain-based systems allow for fair compensation, more robust reputation tracking, and incentive schemes that evolve in real time.
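As a rough illustration of contribution-based payouts, the sketch below splits a reward pool pro rata. It is plain Python rather than a smart contract, and the function name, agent names, and the rule of giving rounding dust to the largest contributor are assumptions made for the example. Integer amounts are used because on-chain balances are typically tracked in whole base units.

```python
from typing import Dict


def split_rewards(pool: int, contributions: Dict[str, int]) -> Dict[str, int]:
    """Split an integer reward pool pro rata to each agent's contribution."""
    total = sum(contributions.values())
    if total <= 0:
        raise ValueError("no contributions recorded")
    payouts = {agent: pool * units // total
               for agent, units in contributions.items()}
    # Integer division can leave a small remainder; assign it to the
    # largest contributor so the payouts always sum to the pool.
    remainder = pool - sum(payouts.values())
    top = max(contributions, key=contributions.get)
    payouts[top] += remainder
    return payouts


payouts = split_rewards(1_000, {"labeler_a": 50, "labeler_b": 30, "labeler_c": 20})
print(payouts)
```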

Crypto-native frameworks can embed these capabilities explicitly, letting developers design incentive structures using smart contracts without having to reinvent the wheel every time an agent needs to trust or pay another agent.

While frameworks like LangChain already have mindshare and network effects, the AI agent space is still in its infancy. It’s unclear what the end state of these systems will look like, and there’s no single way to lock in the market.

Cryptoeconomic incentives open up new possibilities for how frameworks are built, governed, and monetized that don’t map neatly onto traditional SaaS or Web2 economics. Experimentation at this early stage can unlock new monetization strategies for the frameworks themselves, not just the agents built on top of them.

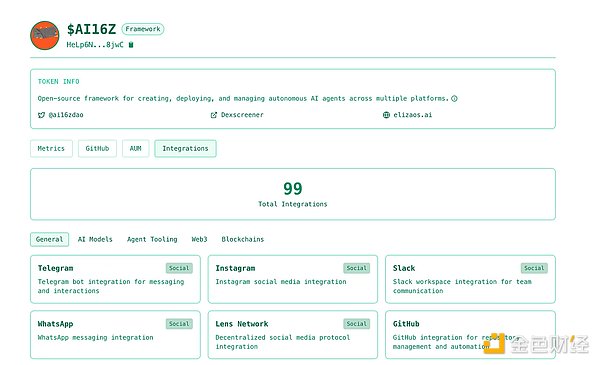

ElizaOS, associated with the popular project AI16Z, is a TypeScript-based framework for creating, deploying, and managing AI agents. It is designed to be a Web3-friendly AI agent operating system that lets developers build agents with unique personalities, use flexible tools for blockchain interaction, and scale easily to multi-agent systems.

Rig is an open source AI agent framework developed by Playgrounds Analytics Inc., built with the Rust programming language, for creating modular, extensible AI agents. It is associated with the AI Rig Complex (ARC) project.

Daydreams is a generative agent framework that was originally built to create autonomous agents for on-chain games, but was later expanded to perform on-chain tasks.

Pippin is an AI agent framework developed by Yohei Nakajima, the founder of BabyAGI, to help developers create modular and autonomous digital assistants. Yohei first built a standalone agent and then expanded it into a general-purpose framework.

ZerePy is an open-source Python framework designed to deploy autonomous agents across multiple platforms and blockchains, with a focus on creative AI and social media integration. Like Pippin, ZerePy started as a standalone agent, Zerebro, and later expanded into a framework.

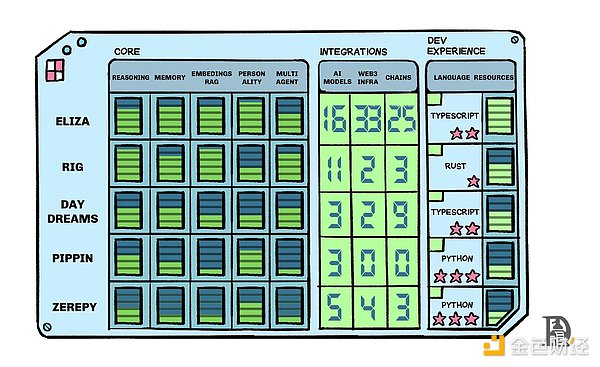

To evaluate the strength of each framework, we put ourselves in the shoes of a developer who wants to build an AI agent. What would they care about? We thought it was useful to break the evaluation into three main categories: core, features, and developer experience.

You can think of the core of a framework as the foundation upon which all agents are built. If the core is weak, slow, or not evolving, agents created using that framework will be similarly limited. The core can be evaluated on the following criteria:

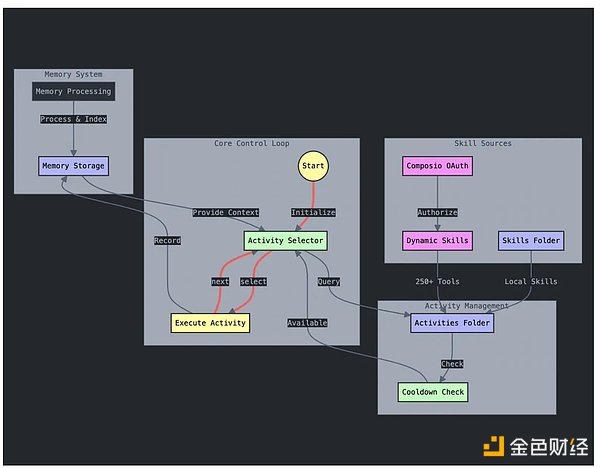

Core Reasoning Loop: The brains of any agent framework; how it solves problems. Strong frameworks support everything from basic input-output flows to complex patterns like chain-of-thought reasoning. Without strong reasoning capabilities, agents can’t effectively break down complex tasks or evaluate multiple options, reducing them to fancy chatbots.

Memory Mechanisms: Agents need both short-term memory for ongoing conversations and long-term storage for persistent knowledge. Good frameworks do more than just remember: they understand the relationships between different pieces of information and can prioritize which ones are worth keeping and which ones are worth forgetting.

Embedding and RAG Support: Modern agents must use external knowledge, like documents and market data. A strong framework can easily embed this information and retrieve it contextually through retrieval-augmented generation (RAG), grounding responses in specific knowledge rather than relying solely on base model training.

Personality Configuration: The ability to shape the way an agent communicates (tone, etiquette, and personality) is critical to user engagement. A good framework makes these characteristics easy to configure, recognizing that the personality of an agent can significantly impact user trust.

Multi-agent Coordination: A strong framework provides built-in patterns for agent collaboration, whether through structured conversations, task delegation, or shared memory systems. This can create specialized teams in which each agent brings unique capabilities to a shared problem.
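To make the embedding and RAG criterion concrete, here is a toy retrieval step. It is a sketch under strong simplifications: word counts stand in for a real embedding model, the document list is made up, and `embed`, `retrieve`, and `build_prompt` are hypothetical names rather than any framework's API.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: List[str]) -> str:
    """Put the retrieved context in front of the question for the model."""
    context = "\n".join(retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Staking rewards are paid out every epoch.",
    "The bridge supports transfers between two chains.",
    "Governance votes last seven days.",
]
print(build_prompt("How long do governance votes last?", docs))
```

The model then answers from the retrieved passage instead of from its training data alone, which is the behavior the criterion above describes.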

Beyond the core functionality, the actual utility of a framework depends heavily on its capabilities and integrations. Tools greatly expand the actual capabilities of an agent. An agent with only LLM access can participate in a conversation, but grant it access to a web browser and it can retrieve real-time information. Connect it to your calendar API and it can schedule meetings. Each new tool increases the capabilities of an agent exponentially. From a developer’s perspective, the greater the number of tools, the greater the optionality and scope for experimentation.

We evaluate the capabilities of crypto-native frameworks along three dimensions:

AI model support and capabilities: Strong frameworks offer native integration with a wide range of language models, from OpenAI’s GPT family to open source alternatives like Llama and Mistral. But it’s not just about LLMs. Support for additional AI features like text-to-speech, browser usage, image generation, and local model inference can greatly expand the capabilities of an agent. Strong model support is becoming a must-have for many of these frameworks.

Web3 infrastructure support: Building crypto agents requires deep integration with blockchain infrastructure. This means supporting necessary Web3 components, such as wallets for transaction signing, RPCs for chain communication, and indexers for data access. A strong framework should integrate with essential tools and services across the ecosystem, from NFT marketplaces and DeFi protocols to identity solutions and data availability layers.

Chain Coverage: While Web3 infrastructure support determines what agents can do, chain coverage determines where they can do it. The crypto ecosystem is evolving into a decentralized, multi-chain behemoth, highlighting the importance of broad chain coverage.

In the end, even the most powerful framework is only as good as the developer experience. A framework can have best-in-class features, but if developers struggle to use it effectively, it will never be widely adopted.

The language a framework is written in directly impacts who can build with it. Python dominates both AI and data science, making it a natural choice for AI frameworks. Frameworks written in niche languages may have unique advantages, but may isolate themselves from the broader developer ecosystem. JavaScript's ubiquity in web development makes it another strong contender, especially for frameworks targeting web integration.

Clear, comprehensive documentation is the lifeline for developers adopting a new framework. This goes beyond just API references, although those are critical, too. Strong documentation includes conceptual overviews that explain core principles, step-by-step tutorials, well-commented sample code, troubleshooting guides, and established design patterns.

The following table summarizes how each framework performed across the parameters we just defined (ranked 1-5).

While discussing the reasons behind each data point is beyond the scope of this article, here are some of the standout impressions that each framework left on us.

Eliza is by far the most mature framework on this list. The Eliza framework became the Schelling point for the crypto ecosystem to engage with AI during the recent agent wave, and one of its standout qualities is the sheer number of features and integrations it supports.

Due to the popularity it has generated, every blockchain and development tool has rushed to integrate itself into the framework (it currently has nearly 100 integrations!). At the same time, Eliza has also attracted more developer activity than most frameworks. Eliza benefits from some very clear network effects, at least in the short term. The framework is written in TypeScript, a mature language used by both beginners and experienced developers, which further drives its growth.

Eliza also stands out for the wealth of educational content and tutorials it provides for developers to use the framework.

We’ve seen a range of agents using the Eliza framework, including Spore, Eliza (agent), and Pillzumi. A new version of the Eliza framework is expected to be released in the coming weeks.

Rig’s approach is fundamentally different from Eliza’s. It stands out for having a powerful, lightweight, and high-performance core. It supports a variety of reasoning patterns, including prompt chaining (applying prompts sequentially), orchestration (coordinating multiple agents), conditional logic, and parallelism (concurrent execution of operations).
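The reasoning patterns named above can be illustrated in a few lines. Rig itself is written in Rust, so this Python sketch is not its API: `fake_llm`, `chain`, `route`, and `fan_out` are hypothetical helpers, and the length-based routing rule is an arbitrary stand-in for real conditional logic.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def fake_llm(prompt: str) -> str:
    """Stand-in for a model call; echoes the prompt in brackets."""
    return f"[{prompt}]"


def chain(text: str, templates: List[str],
          llm: Callable[[str], str] = fake_llm) -> str:
    """Prompt chaining: each step's output becomes the next step's input."""
    for template in templates:
        text = llm(template.format(input=text))
    return text


def route(text: str, llm: Callable[[str], str] = fake_llm) -> str:
    """Conditional logic: pick a prompt based on a property of the input."""
    template = "summarize: {input}" if len(text) > 20 else "expand: {input}"
    return llm(template.format(input=text))


def fan_out(texts: List[str], llm: Callable[[str], str] = fake_llm) -> List[str]:
    """Parallelism: run independent calls concurrently, keeping input order."""
    with ThreadPoolExecutor(max_workers=4) as pool:
        return list(pool.map(llm, texts))


print(chain("raw notes", ["extract facts from {input}", "summarize {input}"]))
print(route("hi"))
print(fan_out(["a", "b", "c"]))
```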

However, Rig itself does not have that rich integration. Instead, it takes a different approach, which the team calls the “Arc Handshake.” Here, the Arc team is working with different high-quality teams in Web2 and Web3 to extend Rig’s capabilities. Some of these collaborations include working with Soulgraph on agent personality, and with Listen and Solana Agent Kit on blockchain capabilities.

Nevertheless, Rig has two drawbacks. First, it is written in Rust, which is extremely performant but familiar to relatively few developers. Second, we have only seen a limited number of Rig-powered agents in real-world applications (AskJimmy is an exception), which makes it difficult to assess true developer adoption.

Before starting Daydreams, founder lordOfAFew was a major contributor to the Eliza framework. This exposed him to that framework's growth and, more importantly, some of its shortcomings. Daydreams differs from other frameworks in that it focuses on chain-of-thought reasoning to help agents achieve long-term goals. This means that, when given a high-level and complex goal, the agent engages in multi-step reasoning, proposes various actions, accepts or discards them based on whether they help achieve the goal, and continues this process to make progress. This is what lets agents created with Daydreams operate with a high degree of autonomy.
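The propose, evaluate, and repeat cycle described above can be sketched with a toy numeric goal. This is not Daydreams' implementation: in a real agent both the proposals and the evaluation would come from model calls, while here the "state" is an integer, the actions are fixed functions, and "helps achieve the goal" simply means "moves closer to the target".

```python
from typing import Callable, Dict, List, Tuple

Action = Callable[[int], int]


def pursue_goal(start: int, goal: int, actions: Dict[str, Action],
                max_steps: int = 20) -> Tuple[int, List[str]]:
    state, trace = start, []
    for _ in range(max_steps):
        if state == goal:
            break
        # Propose: every available action is a candidate next step.
        candidates = [(name, act(state)) for name, act in actions.items()]
        # Evaluate: discard candidates that do not move closer to the goal.
        useful = [(n, s) for n, s in candidates
                  if abs(goal - s) < abs(goal - state)]
        if not useful:
            break  # nothing helps; a real agent might re-plan here
        # Accept the candidate that lands closest to the goal.
        name, state = min(useful, key=lambda c: abs(goal - c[1]))
        trace.append(name)
    return state, trace


actions = {
    "add_one": lambda x: x + 1,
    "double": lambda x: x * 2,
    "sub_three": lambda x: x - 3,
}
final_state, steps = pursue_goal(3, 14, actions)
print(final_state, steps)
```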

The founder's background in building game projects influenced this approach. Games, especially on-chain games, are an ideal breeding ground for training agents and testing their capabilities. Not surprisingly, some of the early use cases for Daydreams agents were in games like Pistols, Istarai, and PonziLand.

The framework also features a powerful implementation of multi-agent collaboration and orchestration workflows.

Similar to Daydreams, Pippin is also a latecomer to the framework game. We covered its launch in detail in this post. At the heart of Yohei’s vision is the idea of an agent as a “digital being” that, with access to the right tools, can operate intelligently and autonomously. This vision is embodied in Pippin’s simple yet elegant core. With just a few lines of code, it’s possible to create a sophisticated agent that can operate autonomously and even write code for itself.

The downside of this framework is that it lacks even basic features like support for vector embeddings and RAG workflows. It also encourages developers to use a third-party library, Composio, for most integrations. It is simply not mature enough compared to the other frameworks discussed so far.

Some of the agents built using Pippin include Ditto and Telemafia.

ZerePy has a relatively simple core implementation. It efficiently selects a task from a set of configured tasks and executes it when needed. However, it lacks complex reasoning patterns like goal-driven or chain-of-thought planning.

While it supports reasoning calls to multiple LLMs, it lacks any embedding or RAG implementation. It also lacks any primitives for memory or multi-agent coordination.

This lack of core functionality and integration is reflected in ZerePy's adoption. We have yet to see any actual agents go live using the framework.

If all of this sounds technical and theoretical, we don't blame you. A simpler question is: "What kind of agents can I build using these frameworks without having to write a bunch of code myself?"

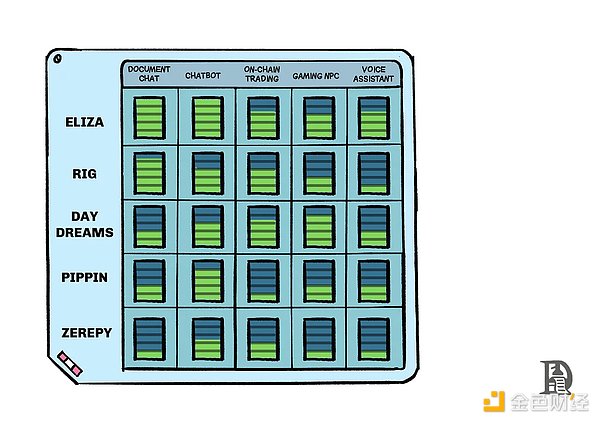

To evaluate these frameworks in practice, we identified five common agent types that developers often want to build. They represent different levels of complexity and test various aspects of each framework's functionality.

Document Chat Agent: Tests core RAG functionality including document handling, context maintenance, reference accuracy, and memory management. This test reveals the framework's ability to navigate the gap between true document understanding and simple pattern matching.

Chatbot: Evaluates memory systems and behavioral consistency. The framework must maintain a consistent personality, remember key information across conversations, and allow for personality configuration, essentially turning a stateless chatbot into a persistent digital entity.

On-Chain Trading Bot: Stress tests external integrations by processing real-time market data, performing cross-chain trades, analyzing social sentiment, and implementing trading strategies. This reveals how the framework handles complex blockchain infrastructure and API connections.

Game NPCs: Although the world has only started paying attention to agents in the past year, agents have played a vital role as non-player characters (NPCs) in games for decades. Game agents are transitioning from rule-based agents to intelligent agents driven by LLMs and remain a primary use case for frameworks. Here we test the ability of agents to understand their environment, reason about scenarios autonomously, and achieve long-term goals.

Voice Assistants: Real-time processing and user experience are evaluated through speech processing, fast response times, and messaging platform integration. This tests whether the framework can support truly interactive applications, not just simple request-response patterns.

We gave each framework a score out of 5 for each agent type. Here’s how they perform:

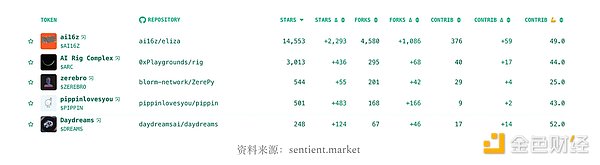

When evaluating these frameworks, most analyses place great emphasis on GitHub metrics, such as stars and forks. Here, we'll quickly cover what these metrics are, and to what extent they indicate the quality of a framework.

Stars act as the most obvious popularity signal. They are essentially bookmarks that developers give to projects they find interesting or want to follow. While a high star count indicates widespread awareness and interest, it can be misleading. Projects sometimes accumulate stars through marketing rather than technical merit. Think of stars as social proof rather than a measure of quality.

The number of forks tells you how many developers have created their own copies of the codebase to build on top of it. More forks generally indicate that developers are actively using and extending the project. That said, many forks are eventually abandoned, so the raw fork count needs context.

The number of contributors reveals how many different developers have actually committed code to the project. This is often more meaningful than stars or forks. A healthy number of regular contributors indicates that the project has an active community maintaining and improving it.

We went a step further and designed our own metric - Contributor Score. We evaluate each developer's public history, including their past contributions to other projects, frequency of activity, and popularity of their account, to assign a score to each contributor. We then average this across all contributors to a project and weight it by the number of contributions they made.
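One way to read that description is as a contribution-weighted mean. The article does not publish the exact formula, so the sketch below is an assumption: each contributor is a hypothetical `(developer_score, contribution_count)` pair, and the numbers are made up for illustration.

```python
from typing import List, Tuple


def contributor_score(contributors: List[Tuple[float, int]]) -> float:
    """Contribution-weighted mean of per-developer scores.

    Each entry is (developer_score, contribution_count).
    """
    total = sum(count for _, count in contributors)
    if total == 0:
        return 0.0
    return sum(score * count for score, count in contributors) / total


# A high-scoring developer with 10 contributions and a lower-scoring
# developer with 30: the result is pulled toward the more active one.
project = [(90.0, 10), (40.0, 30)]
print(contributor_score(project))
```

Under this reading, a project whose most active contributors have strong track records scores higher than one where experienced developers only made drive-by commits.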

What do these numbers mean for our frameworks?

In most cases, star counts are negligible and are not a meaningful indicator of adoption. The exception is Eliza, which at one point became the number one trending repository on GitHub, consistent with its role as the Schelling point for crypto AI. In addition, well-known developers like 0xCygaar have contributed to the project. This is also reflected in the number of contributors Eliza attracts: 10 times more than other projects.

Besides this, Daydreams is interesting to us simply because it attracts high-quality developers. As a latecomer that launched after the peak of hype, it did not benefit from Eliza’s network effects.

If you are a developer, we hope we’ve at least given you a starting point for choosing which framework to build on (if you need one). Beyond that, you still have to work on testing whether the core reasoning and integrations of each framework are suitable for your use case. There’s no getting around it.

From an observer’s perspective, it’s important to remember that all of these AI agent frameworks are less than three months old. (Yes, it feels longer.) In that time, they’ve gone from extremely hyped to being called “castles in the air.” That’s the nature of technology. Despite this volatility, we believe this space is an interesting and enduring new experiment in crypto.

What’s important next is how these frameworks mature in terms of both technology and monetization.

In terms of technology, the biggest advantage a framework can create for itself is enabling agents to interact seamlessly on-chain. This is the number one reason developers choose crypto-native frameworks over general-purpose frameworks. Additionally, agents and agent-building technology are cutting-edge technical problems around the world, with new developments happening every day. Frameworks must also evolve and adapt to these developments.

How frameworks monetize is even more interesting. In these early days, creating a launchpad inspired by Virtuals is low-hanging fruit for these projects. But we think there’s a lot of room for experimentation here. We’re heading toward a future with millions of agents specializing in every imaginable niche. Tools that help them coordinate efficiently can capture a huge amount of value from transaction fees. As a gateway for builders, frameworks are certainly best suited to capture that value.

At the same time, framework monetization is the broader problem of open source monetization in disguise: how to reward contributors, who have historically done free, thankless work. If a team can crack the code on how to create a sustainable open source economy while keeping its fundamental ethos, the impact will extend far beyond agent frameworks.

These are themes we hope to explore in the coming months.
