Author: ArweaveOasis, Source: @Arweave Oasis Twitter

This is the keynote given by Sam at the first AO Developer Conference in South Carolina, focusing on AO and the Erlang language. The full text follows. Enjoy!

This is a particularly exciting speech for me. First, I plan to take everyone on a journey to explain how AO was formed, how Erlang fits into it, and how this fits into the broad distributed computing experiments we are conducting.

My first exposure to computers was a machine my parents bought me, with a 550 MHz CPU and 32 megabytes of RAM. It was probably in the mid-nineties.

The moment I started using it, I was hooked, and that feeling has never stopped. That was when the Internet was starting to take off. Not only could I do all the fun stuff on my own computer, I could connect to other people's computers and access the information on them. I thought that was so cool and exciting, and it really shaped my journey as an individual: this idea that we could have a shared information space.

As time went on, and all of us as a species were pulled more and more into cyberspace, it became less of a place to browse information and more of a place to interact and get to know each other. There are a lot of people in this room that I know well, but we probably only spend about 2% or 3% of our time together face-to-face, offline.

So anyway, when I encountered the Internet, I was fascinated by it. But I thought the coolest thing in the world was supercomputers.

They look amazing, and you can run enormous calculations and have a machine solve your problem. This one is a supercomputer that was built in the 90s, I believe, by the Japanese government to do some climate science experiments. My guess is that, over the entire life of the machine, only about three dozen people ever got to run their own programs on it.

That got me thinking: we have this infrastructure for sharing information, so why can't we use it to share one huge computer with everyone?

If we have the Internet, why can't we let everyone share the application space and build programs that can interact with each other?

It seems like a good idea. There were actually some implementations of these ideas back then, like you could send packets between servers, but it was so clunky and so slow that it never really took off in a constructive way.

It wasn't until I was just about to go to college that I encountered Erlang. That was the first time I had found a programming language or an operating system that was able to intuitively express this idea that processes of computation, like small units of computation, could be run by anyone and interact with each other naturally in this parallel environment. That's exactly what Erlang tried to provide. You could spin up a machine and run this environment in it. It was almost like an operating system that ran many different applications in the same environment.

Then I went to college and I had this experience where we had a large shared Unix box, which was the same idea again, but expressed on a small scale, that when you have a lot of different people sharing the same computer, their applications can interact with each other very easily.

This was at a scale of about 80 to 100 people, but you could still see some of the fun and composability. I remember we would write shell scripts that had one user poke another user in the system. When they were poked, they would go and poke another, forming a chain reaction. It was a pleasant platform, but it was still far from the dream of a distributed, open supercomputer.

Then after that, I first came across Ethereum, which was called the world's computer at the time.

I thought, ah! Maybe this is what I'm looking for. This environment not only has distributed computing, it also has what computer science calls a single system image (SSI): we take many different computers and make them look like one computer, allowing you to operate across them seamlessly.

So it has an SSI, which is cool, and it's also trustless, which is a pretty remarkable feature. Once a program is executed on this computer, you don't need to trust anyone. This is different from the structure of the Internet today. With almost all the web services we use, you need to trust the person providing the service.

Ethereum offers a different view of the world. We can have a supercomputer distributed all over the world, unifying all of humanity's computation, and no program in it is controlled by any one person or group. "Code is law" was the idea at the time, but it later became a controversial phrase when they deliberately changed the protocol in a way that broke the "code is law" creed.

Anyway, I thought it was cool. I naively spent $15 to participate in their ICO at the time because I thought, I want these tokens so I can run my computations.

When they built this thing, the original vision of a distributed world computer did not happen. Instead, they built a huge financial economy on it. It turns out that when you have true trustless state changes, you can give birth to a whole decentralized financial ecosystem. We're looking at over $100 billion of value being stored in these machines. So that's really an amazing innovation. It's profound.

But where's my supercomputer, man? It's not a supercomputer. What happened, what went wrong? When you try to use Ethereum now, it gets, frankly, ridiculous.

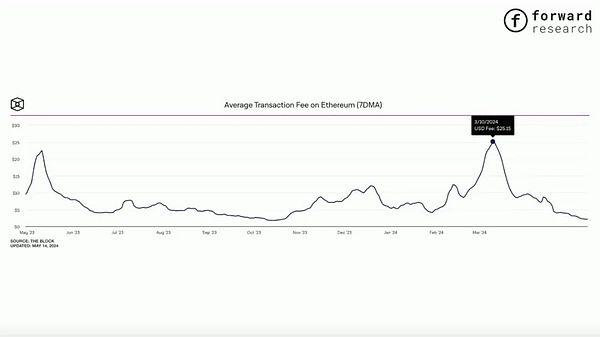

We can't deny that they've done some really incredible work. But it's a far cry from the world computer it started out as. The average Ethereum transaction fee often soars to around $25, just to do a little bit of computation to update the state record of who owns what.

Basically, it's like an IBM mainframe from the seventies. How did that happen?

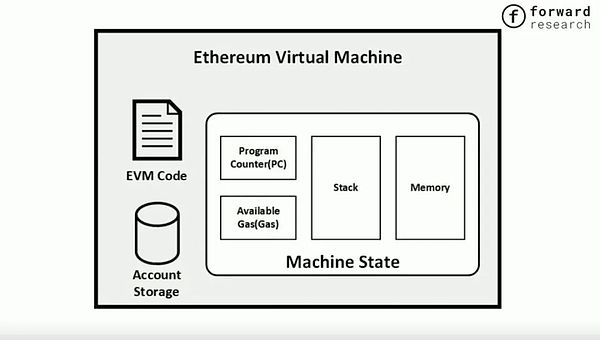

Well, the answer is a shared memory system. So in Ethereum, they have a global shared memory between every user of the network. It has somewhere between 10,000 and 60,000 network nodes that are all maintaining trust in those program states.

So everybody is verifying, hey, this state transition is valid. Nobody's making programs do things they shouldn't do, which is great, but the memory for those programs is shared across a single thread of execution for every program and every user. So when you want to do a computation on Ethereum, there's a single thread, a single queue, and everybody adds programs to that queue and queues up to do it.

In normal distributed system design, we would call this stop-the-world state updates. When a user comes along, we stop the world, nobody else can use it, and only then can the next user go. It's a pure single-threaded queue, which is obviously the opposite of a supercomputer. This is even less computation than you can do on a calculator.
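The pattern described above can be sketched in a few lines of Python. This is a toy model, not Ethereum's actual implementation, and `GlobalQueueComputer`, `submit`, and `run` are hypothetical names used only for illustration. All state lives in one shared dictionary, and every transaction waits its turn in a single queue, whichever program it touches.

```python
from collections import deque


class GlobalQueueComputer:
    """Toy model: one shared memory, one queue, one thread of execution."""

    def __init__(self):
        self.state = {}        # global memory shared by every program
        self.queue = deque()   # the single queue every user waits in
        self.order = []        # order in which transactions were applied

    def submit(self, user, key, amount):
        # Submitting never runs anything; it only joins the back of the queue.
        self.queue.append((user, key, amount))

    def run(self):
        # Transactions are applied strictly one at a time, even when they
        # touch unrelated keys and could safely have run in parallel.
        while self.queue:
            user, key, amount = self.queue.popleft()
            self.state[key] = self.state.get(key, 0) + amount
            self.order.append(user)


computer = GlobalQueueComputer()
computer.submit("alice", "token_a", 5)
computer.submit("bob", "game_score", 1)   # unrelated to alice's update
computer.submit("carol", "token_a", 2)
computer.run()
print(computer.state)   # {'token_a': 7, 'game_score': 1}
print(computer.order)   # ['alice', 'bob', 'carol']
```

Bob's update has nothing to do with Alice's or Carol's, yet in this model it still has to wait in line behind Alice.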

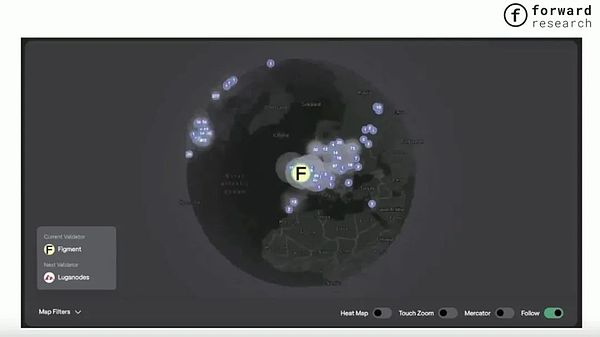

This is a map of Solana. It has many different nodes around the world, and it's much faster than Ethereum, but much faster isn't fast enough to be what we're talking about here.

So if we want to create a world computer, a distributed computer, queuing blocks one by one obviously doesn't work. Ethereum was the first to take the shared memory approach, and it has become a fetish now. Everyone uses it as the solution, and everything is built in this mindset.

But in reality, shared memory is just one of the two main paradigms for trying to achieve parallelism. So when I was thinking about how to explain all of this, I remembered a quote: "Shared memory is evil."

This quote comes from Joe Armstrong, one of the co-inventors of Erlang. I think it summarizes the problem very accurately. And it's an interesting question, because if you're in the crypto space you'll find that no one is talking about it, but in computer science it has been debated for 60 years.

Joe wrote that threads that share data cannot run independently in parallel. On a single-core machine, this doesn't matter, but on a multi-core CPU, it matters.

At the point in execution where they share data, their execution becomes serial instead of parallel. This is exactly what is happening with Ethereum, which has only one thread of execution. With Solana, they have a number of parallel threads, but only within the same machine. Every time you want to transfer information or interact with the same agent, you need to lock access to that memory again, which makes it go from parallel to serial. Critical regions in threads introduce serial bottlenecks that limit scalability.

If we really want high performance, we have to make sure that applications don't share anything. This way we can replicate the solution across many independent CPU cores, where you can swap CPU cores for threads, which works in a decentralized environment.
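One standard way to put numbers on this argument is Amdahl's law, which the speech does not name but which expresses the same point: the serial portion of a workload caps the speedup no matter how many cores are added. The sketch below is illustrative only. The function name `amdahl_speedup` and the serial fractions are assumptions chosen for the example, not figures from the talk.

```python
def amdahl_speedup(serial_fraction, workers):
    """Upper bound on speedup when `serial_fraction` of the work cannot overlap."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / workers)


# Speedup on 1, 10 and 1000 workers for three illustrative serial fractions.
for serial_fraction in (0.0, 0.05, 0.5):
    row = [round(amdahl_speedup(serial_fraction, n), 1) for n in (1, 10, 1000)]
    print(serial_fraction, row)
# 0.0  [1.0, 10.0, 1000.0]   shared-nothing: scales with the worker count
# 0.05 [1.0, 6.9, 19.6]      5% spent in critical regions: capped below 20x
# 0.5  [1.0, 1.8, 2.0]       half the work serialized: never reaches 2x
```

Only the shared-nothing case keeps scaling, which is why the argument above insists that applications share nothing.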

I think this is an accurate explanation of why current decentralized computing mechanisms don't scale.

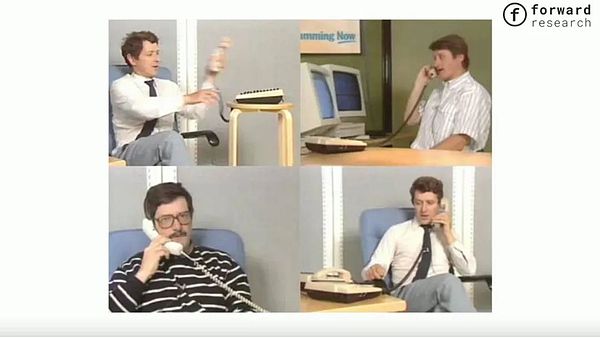

Fortunately, we are not the first in history to have this problem. This image shows the work of Robert Virding, one of the creators of Erlang, in 1985. Interestingly, the first use case was how to have many different phone calls all managed by different threads of execution.

Obviously, they didn't solve the problem by waiting on the same locks and sharing memory. They tried a different solution back in 1985, and I think it is the best example of how this can be done. It's surprising that no one has applied it to crypto yet.

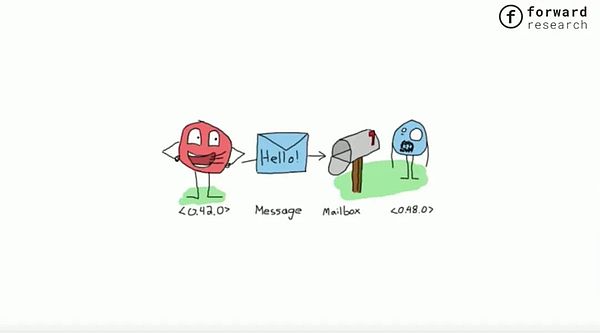

The basic idea of this solution is that, instead of having two threads lock and wait on each other to access a piece of state (one updates it while the other waits, then the other updates it, and only then can the next one in that giant queue access it), we just send the pieces of state we need. We only share the information we need and don't wait for each other. Everything runs asynchronously and in parallel.

For example, Tom is running a computational process in AO. He sends me a message, and I might reply, but I don't wait for Tom's reply, I just go on to do something else. He doesn't wait for me either. Fundamentally, everything is asynchronous, and this starts to look like an architecture that can really create a global computer. So, when you connect it up, you start to see this picture where information only flows to the right people. You have local shared state instead of global shared state, and only the information that needs to be passed is passed, and everything runs more efficiently.
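The exchange between Tom and Sam can be sketched with Python's `asyncio`. This is a minimal illustration of mailbox-style message passing, not AO's implementation. The `Process` class and its `send` and `run` methods are hypothetical names. Each process owns its state privately, and the only way to affect another process is to drop a message in its mailbox and move on.

```python
import asyncio


class Process:
    """Toy actor: private state plus a mailbox; nothing is shared."""

    def __init__(self, name):
        self.name = name
        self.inbox = asyncio.Queue()
        self.received = []     # private state, only this process touches it

    def send(self, target, body):
        # Fire and forget: put the message in the target's mailbox and
        # carry on without waiting for a reply.
        target.inbox.put_nowait((self, body))

    async def run(self):
        while True:
            sender, body = await self.inbox.get()
            if body == "stop":
                break
            self.received.append((sender.name, body))
            if body == "ping":
                self.send(sender, "pong")


async def main():
    tom, sam = Process("tom"), Process("sam")
    tasks = [asyncio.create_task(p.run()) for p in (tom, sam)]
    tom.send(sam, "ping")          # tom does not block on the reply
    await asyncio.sleep(0.05)      # give the messages time to flow
    for p in (tom, sam):
        p.inbox.put_nowait((p, "stop"))
    await asyncio.gather(*tasks)
    return tom.received, sam.received


tom_log, sam_log = asyncio.run(main())
print(sam_log)   # [('tom', 'ping')]
print(tom_log)   # [('sam', 'pong')]
```

No locks appear anywhere in the sketch, because no two processes ever touch the same piece of state.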

So, with this model, you can have many different threads processing different messages at the same time, as many as you like. There's no limit. This looks a little complicated, but it holds up in an academic sense.

There was a great paper written about this by a guy named Andy, who was on the Barrelfish team that built a distributed multi-core operating system in 2008. They did a simulation in their research, and when they took a shared memory machine and scaled it up to thousands of cores, they concluded that it would spend about 92% of its computation time contending for locks. But if you have a system where everything is asynchronous and only shares the information that others need to compute, at the right point in time, then everything runs much more smoothly, and you can scale it arbitrarily.

So that's how we came up with this solution called AO.

AO stands for "actor oriented". The previous name of AO was actually Hyperbeam. BEAM is the virtual machine that Erlang runs on.

So Erlang is deeply intertwined with AO and everything we build, not just architecturally, but also philosophically and almost aesthetically. We're not doing that eighties phone call thing anymore, but it's still pretty close. And we even designed a logo for it called hyperbeam.

So when you're working with AO, you start to see this asynchronous communication, this power of parallel execution. The approach that Erlang pioneered, we've now applied to a decentralized environment. The community is already making great use of this.

This is an app called Gather Chat. It's built on top of AO, and every individual character on the screen can be a process, and they can all do any number of computations in parallel, but they can interact with each other, and they only share memory as needed. They send parts of their state, and they don't wait for each other, which allows you to create really rich experiences. We intuitively felt that the first thing to build on top of AO should be a chatroom.

You can't imagine a chatroom being built on Ethereum. Because if it costs $25 to send a message, it won't be that interesting for people.

This is a game called The Grid made on top of AO by a team from India. It's a process on top of AO that only sends the right messages to the right people, and builds this experience of having a trustless, verifiably neutral cyberspace. In this game, every bot is an AI agent running on a process. It can do any number of computations, and then it interacts with others through a messaging system.

We launched the AO testnet about three months ago, and there's already a pretty large and exciting ecosystem developing around it. It seems to really unlock people's imagination and creativity in the decentralized computing space. We've even been able to run Llama 3 on top of AO, implementing a fully decentralized LLM (Large Language Model). Even so, you won't notice it: because it runs asynchronously, such a large-scale computation will not affect the operation of your process.

So the core principle of this parallel, asynchronous computing model is that my calculation should not affect your calculation unless we really want to talk. And that's just a matter of you sending me a message and then I figure out how to handle this message. So it starts to look like a global shared computer for humanity. We really think this is the ultimate form of decentralized computing.

Our Arweave permanent storage network is a very important part of this giant global computer, and we will allow you to access data in Arweave just like you access your local hard drive. Just imagine: the existing 5 billion pieces of information in the network suddenly become part of your local hard drive. So now you can build anything you want, and people can trust that your application will not be modified after it is released. You can do all the decentralized finance stuff we talked about.

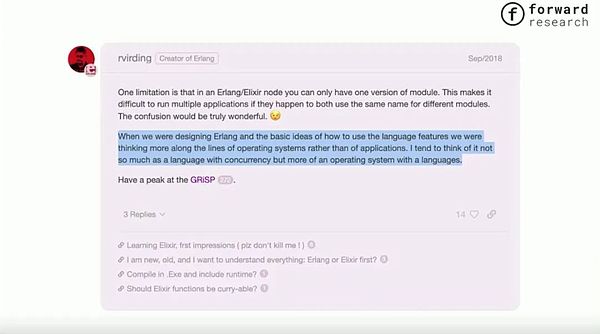

Last night, when I was working on the slides for this talk, I stumbled across this post from Robert, a co-creator of Erlang, on some forum. This paragraph outlines something that I have intuitively known in my heart for a long time but never expressed in words so elegantly.

When we were designing Erlang and thinking about how to use its language features, we thought more along the lines of an operating system rather than an application. I tend to think of it less as a language with concurrency and more like an operating system with a language.

If you've used Erlang, you'll feel the same way. It's not so important what language you use, it's actually the semantics underneath this idea that all transactions in the system are done by sending messages. And it also points to this idea of an operating system.

So when we were building AO, we realized that in order for people to use it more conveniently, there needs to be a user-friendly environment in which they can operate. So that's why we built AOS.

If you've worked in the crypto space, you'll find that deploying a smart contract on Ethereum involves incredible waste. It costs thousands or even tens of thousands of dollars to get your code into the machine, because it has to be written through this stop-the-world state update mechanism.

Whereas in AOS, you just type in AOS and it starts a new process for you that you can use for specific things. The interaction feels like a normal command line interface, and one of the biggest pieces of feedback we got from early users of the system was that it felt natural and fun to use. For example, when you get a message and you don't have a handler installed yet (a snippet of code that responds to that message), it's printed out directly to the console, so you can watch what's happening to your process in real time. It's like you've plugged your terminal into this giant distributed supercomputer.
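The behavior just described, where a message with no matching handler is simply shown on the console, can be modeled in a few lines of Python. This is a toy model of the idea only, not the AOS API: `ToyProcess`, `add_handler`, `receive`, and the message fields `From`, `Data`, and `Target` are all assumptions made for illustration.

```python
class ToyProcess:
    """Toy model of a process whose unhandled messages go to the console."""

    def __init__(self):
        self.handlers = []   # (name, matches, handle) in registration order
        self.console = []    # stands in for the terminal output

    def add_handler(self, name, matches, handle):
        self.handlers.append((name, matches, handle))

    def receive(self, msg):
        for name, matches, handle in self.handlers:
            if matches(msg):
                return handle(msg)
        # No handler claimed the message, so just show it to the user.
        self.console.append(f"New message from {msg['From']}: {msg['Data']}")
        return None


proc = ToyProcess()
proc.receive({"From": "tom", "Data": "hello"})   # printed, not handled

proc.add_handler(
    "ping",
    lambda msg: msg["Data"] == "ping",                     # pattern
    lambda msg: {"Target": msg["From"], "Data": "pong"},   # reply
)
reply = proc.receive({"From": "tom", "Data": "ping"})

print(proc.console)   # ['New message from tom: hello']
print(reply)          # {'Target': 'tom', 'Data': 'pong'}
```

Once a handler is installed, matching messages are processed by code instead of being echoed, which is the step from watching a process to programming it.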

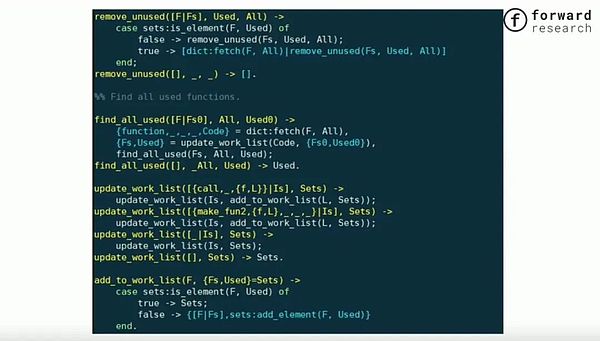

What about the Erlang language itself? Well, frankly, it looks like this, and it's not very user-friendly by modern standards. Probably because it's based on a system from the seventies called Prolog.

So we actually ended up going with Lua, which is a very simple and easy-to-learn language. It was built in the early nineties and is very stable. It is what JavaScript should have been, but unfortunately wasn't. JavaScript has a lot of crazy stuff piled on top, a growing pile of disasters that just got bloated over time.

But Lua is the core essence of that language. It's simple and easy to understand, and it doesn't have all the crazy stuff that's been added over time. It's just simple and pure and easy to learn. A few days ago on X, a guy called @DeFi_Dad contacted me. He's not a developer, but he really likes this system. He can use it, and he can actually start programming with it.

Yeah, Lua looks intuitive and friendly, making it possible for anyone to start building applications with it. I really think that even if you're a non-technical person, you have a good chance here of building your own first application on top of this distributed supercomputer. Interestingly, we're not the only ones who thought of combining Lua and Erlang.

In fact, Robert built this thing called Luerl, and I'll let him tell you more about it.
