Source: Quantum Bit

On the first day of DeepSeek's open source week, the cost reduction method was made public -

FlashMLA, which directly broke through the H800 computing limit.

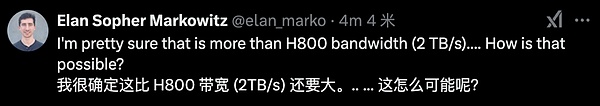

Netizens: How is this possible? ?

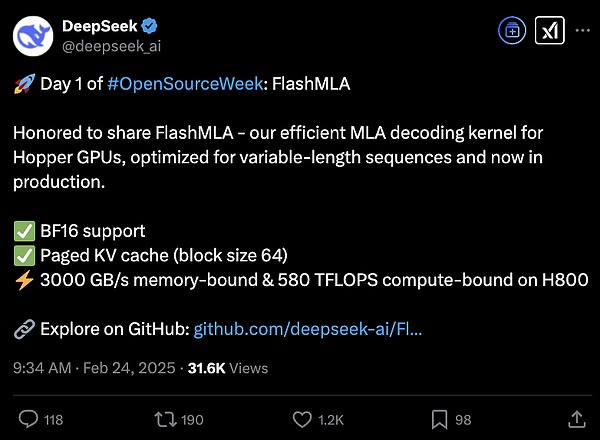

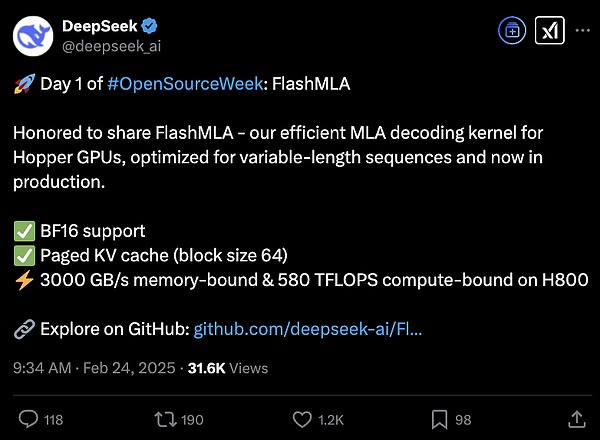

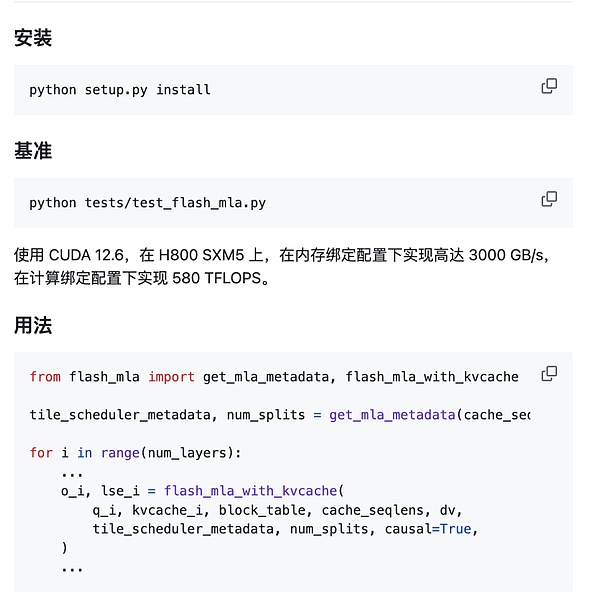

It is an efficient MLA decoding kernel developed for Hopper GPU, which is specially optimized for variable-length sequences and has been put into production.

MLA is the innovative attention architecture proposed by DeepSeek. Starting from V2, MLA has enabled DeepSeek to achieve a significant reduction in cost in a series of models, but the computing and reasoning performance can still be on par with the top models.

According to the official introduction, after using FlashMLA, H800 can reach 3000GB/s memory and achieve 580TFLOPS computing performance.

Netizens gave thumbs up: High respect to the engineering team for squeezing every FLOP out of Hopper's tensor core. This is how we push LLM services to a new frontier!

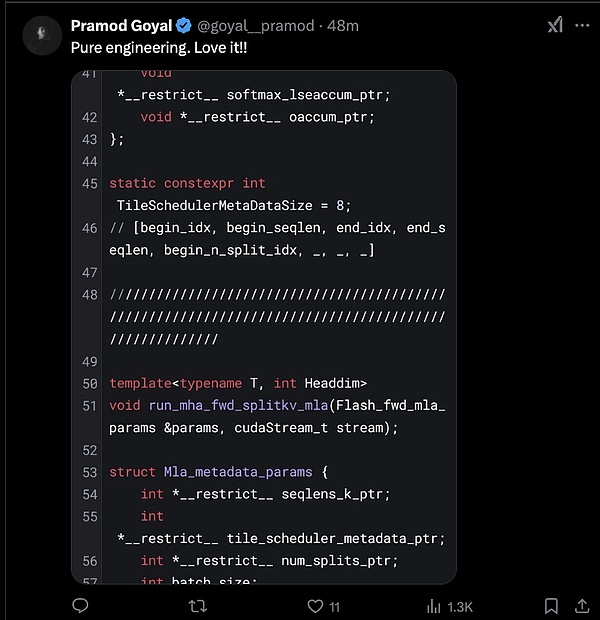

Some netizens have already used it.

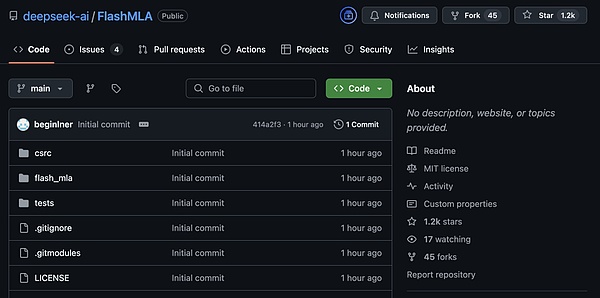

Open Source Day 1: FlashMLA

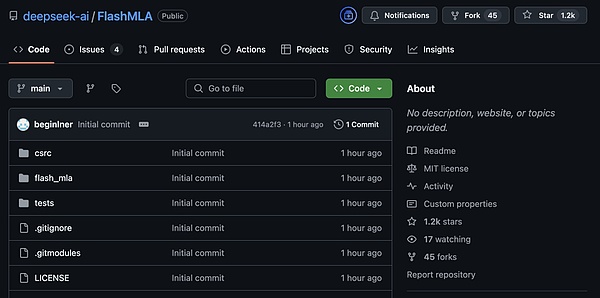

The GitHub page has been updated. In just one hour, the number of stars has exceeded 1.2k.

This time, the following has been released:

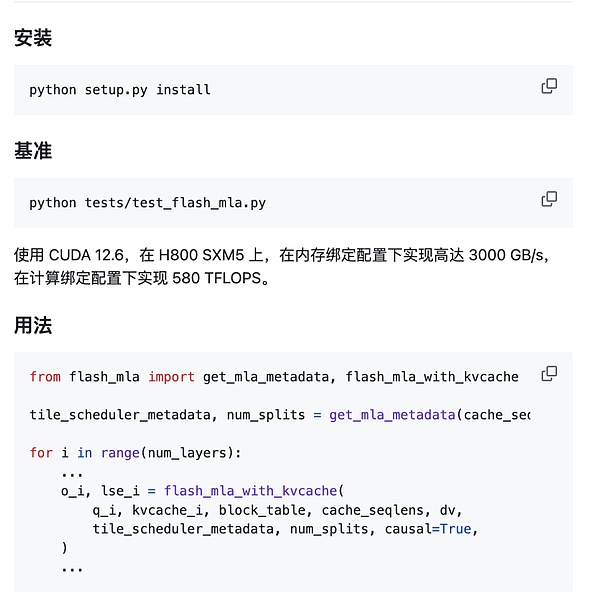

Quick start:

Environment requirements:

Hopper GPU

CUDA 12.3 and above

PyTorch 2.0 and above

At the end of the project, it also stated that this was influenced by FlashAttention 2&3 and NVIDIA's CUTLASS project.

FlashAttention is a fast and memory-efficient precise attention, used by mainstream large models. The latest third generation can make H100 utilization soar to 75%. Training speed increased by 1.5-2 times, computing throughput up to 740TFLOPs/s under FP16, reaching 75% of the theoretical maximum throughput, making better use of computing resources, which could only be achieved at 35% before.

The core author is Tri Dao, a Princeton expert and chief scientist of Together AI.

NVIDIA CUTLASS is a collection of CUDA C++ template abstractions for implementing high-performance matrix-matrix multiplication (GEMM) and related calculations of all levels and scales within CUDA.

MLA, DeepSeek basic architecture

Finally, MLA, the multi-head potential attention mechanism, is the basic architecture of the DeepSeek series of models, which aims to optimize the inference efficiency and memory usage of the Transformer model while maintaining model performance.

It projects the key and value matrices in the multi-head attention into a low-dimensional latent space through the low-rank joint compression technology, thereby significantly reducing the storage requirements of the key-value cache (KV Cache). This method is particularly important in long sequence processing, because traditional methods need to store the complete KV matrix, while MLA retains only key information through compression.

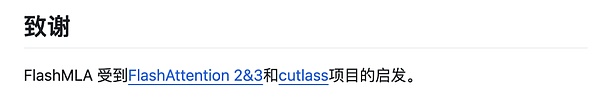

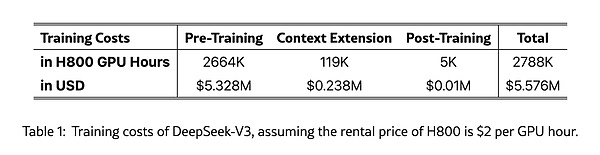

In the V2 version, this innovative architecture reduces the memory usage to 5%-13% of the most commonly used MHA architecture in the past, achieving a significant cost reduction. Its inference cost is only 1/7 of Llama 370B and 1/70 of GPT-4 Turbo.

In V3, this cost reduction and speed increase are even more obvious, which directly attracts global attention to DeepSeek.

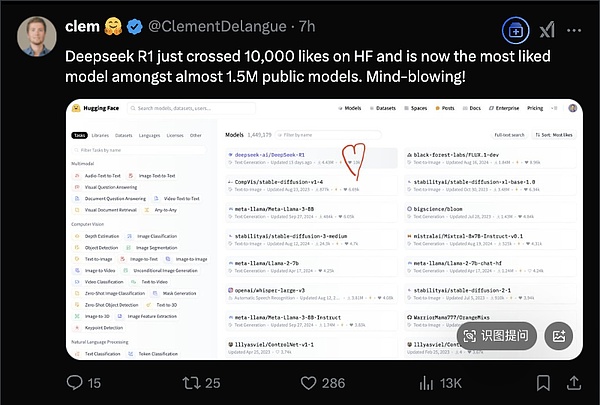

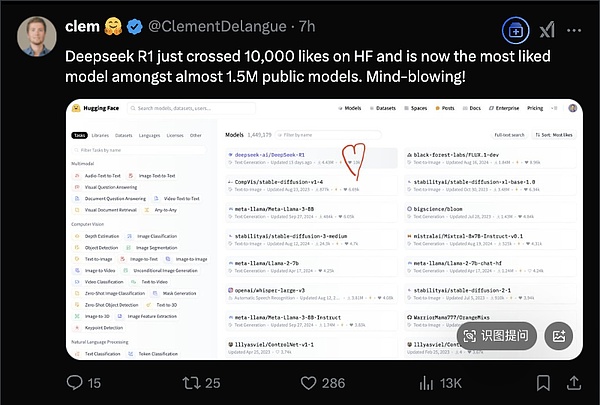

Just today, DeepSeek-R1 received more than 10,000 likes on HuggingFace, becoming the most popular large model among the nearly 1.5 million models on the platform.

HuggingFace CEO published a post announcing the good news.

The whale is making waves! The whale is making waves!

Okay, let's look forward to what will be released in the next four days?

Catherine

Catherine