Author: Kevin, BlockBooster

TLDR:

The emergence of DeepSeek has shattered the computing power moat, and computing power optimization led by open source models has become the new direction;

DeepSeek benefits the model layer and application layer of the industry but hurts the computing power protocols in the infrastructure layer;

DeepSeek's positive news inadvertently burst the last bubble in the Agent track, and DeFAI is the most likely place for new life to emerge;

The zero-sum game of project financing is expected to come to an end, and a new financing model of community launch plus a small amount of VC money may become the norm.

DeepSeek will profoundly affect the upstream and downstream of the AI industry this year. It enabled consumer graphics cards to complete large model training tasks that previously required large numbers of high-end GPUs. Computing power, the first moat around AI development, has begun to collapse. When algorithmic efficiency improves at a rate of 68% per year while hardware performance follows the steady climb of Moore's Law, the valuation model that took root over the past three years no longer applies. The next chapter of AI will be opened by open source models.
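To make the gap between the two curves concrete, here is a minimal sketch of the compounding arithmetic. It assumes that "a rate of 68% per year" means efficiency multiplies by 1.68 each year, and it models Moore's Law as a doubling of hardware performance every two years. Both readings are illustrative assumptions; only the 68% figure and the three-year window come from the text.

```python
def compound_gain(annual_rate: float, years: float) -> float:
    """Multiplicative gain after `years` of compounding at `annual_rate`."""
    return (1.0 + annual_rate) ** years


def moores_law_gain(years: float, doubling_period_years: float = 2.0) -> float:
    """Hardware gain if performance doubles every `doubling_period_years` (assumed)."""
    return 2.0 ** (years / doubling_period_years)


ALGO_RATE = 0.68  # from the text: 68% per year
YEARS = 3         # the "past three years" mentioned in the text

algo = compound_gain(ALGO_RATE, YEARS)
hardware = moores_law_gain(YEARS)

print(f"algorithmic gain over {YEARS} years: {algo:.2f}x")
print(f"hardware gain over {YEARS} years:    {hardware:.2f}x")
print(f"combined effective gain:            {algo * hardware:.2f}x")
```

Under these assumptions the algorithmic gain (roughly 4.7x) exceeds the hardware gain (roughly 2.8x) over three years, which is the sense in which a valuation built on hardware scarcity stops holding.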

Although Web3 AI protocols are completely different from their Web2 counterparts, they are inevitably affected by DeepSeek. This impact will give rise to new use cases across the upstream and downstream of Web3 AI: the infrastructure layer, middleware layer, model layer and application layer.

I. Sorting out the collaborative relationship between upstream and downstream protocols

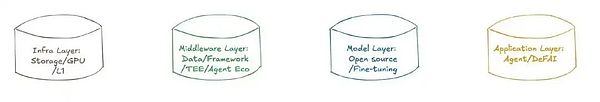

Based on an analysis of technical architecture, functional positioning and actual use cases, I divide the entire ecosystem into an infrastructure layer, a middleware layer, a model layer and an application layer, and sort out their dependencies:

1. Infrastructure layer

The infrastructure layer provides decentralized underlying resources (computing power, storage, L1), among which the computing power protocols include: Render, Akash, io.net, etc.; the storage protocols include: Arweave, Filecoin, Storj, etc.; the L1s include: NEAR, Olas, Fetch.ai, etc.

Computing power protocols support model training, inference and framework operation; storage protocols hold training data, model parameters and on-chain interaction records; L1s optimize data transmission efficiency and reduce latency through dedicated nodes.

2. Middleware layer

The middleware layer is a bridge connecting the infrastructure and upper-layer applications, providing framework development tools, data services and privacy protection. The data annotation protocols include: Grass, Masa, Vana, etc.; the development framework protocols include: Eliza, ARC, Swarms, etc.; the privacy computing protocols include: Phala, etc.

The data service layer provides the fuel for model training, the development frameworks rely on the computing power and storage of the infrastructure layer, and the privacy computing layer protects data during training and inference.

3. Model layer

The model layer covers model development, training and distribution, and includes the open source model training platform Bittensor.

The model layer relies on the computing power of the infrastructure layer and the data of the middleware layer; models are deployed on-chain through the development frameworks; the model market delivers training results to the application layer.

4. Application layer

The application layer consists of AI products for end users, including Agents such as GOAT and AIXBT, and DeFAI protocols such as Griffain and Buzz.

The application layer calls the pre-trained models of the model layer and relies on the privacy computing of the middleware layer; complex applications also require real-time computing power from the infrastructure layer.
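The dependencies between the four layers can be written down as a small graph. The sketch below is only an illustration of the relationships described above: the dictionary layout and the helper names `build_order` and `downstream_of` are assumptions made for this example, not part of any protocol.

```python
from graphlib import TopologicalSorter

# Each layer maps to the set of layers it depends on, as described in the text.
LAYER_DEPENDENCIES = {
    "infrastructure": set(),
    "middleware": {"infrastructure"},
    "model": {"infrastructure", "middleware"},
    "application": {"model", "middleware", "infrastructure"},
}


def build_order(deps: dict) -> list:
    """Return the layers ordered so that every layer follows its dependencies."""
    return list(TopologicalSorter(deps).static_order())


def downstream_of(deps: dict, changed: str) -> set:
    """Return every layer that depends on `changed`, directly or transitively."""
    affected = set()
    frontier = [changed]
    while frontier:
        current = frontier.pop()
        for layer, needs in deps.items():
            if current in needs and layer not in affected:
                affected.add(layer)
                frontier.append(layer)
    return affected


print(build_order(LAYER_DEPENDENCIES))
print(sorted(downstream_of(LAYER_DEPENDENCIES, "infrastructure")))
print(sorted(downstream_of(LAYER_DEPENDENCIES, "model")))
```

Read this way, a shock to the infrastructure layer reaches every other layer, while a change in the model layer only propagates to the application layer.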

II. Negative impact on decentralized computing power

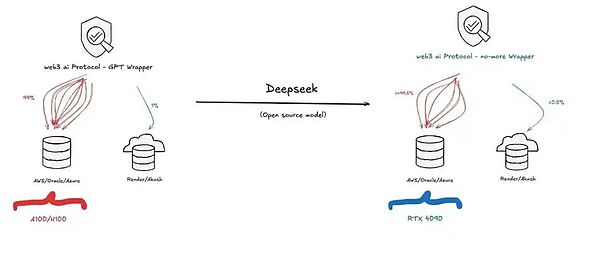

According to a sample survey, about 70% of Web3 AI projects actually call OpenAI or centralized cloud platforms, only 15% use decentralized GPUs (such as Bittensor subnet models), and the remaining 15% use hybrid architectures (sensitive data is processed locally, and general tasks run in the cloud).

The actual utilization rate of decentralized computing power protocols is far lower than expected and does not match their market value. There are three reasons for the low utilization: Web2 developers keep their original toolchain when migrating to Web3; decentralized GPU platforms have not yet achieved a price advantage; and some projects evade data compliance reviews in the name of "decentralization" while their actual computing power still relies on centralized clouds.

AWS/GCP account for more than 90% of the AI computing power market, while Akash's equivalent computing power is only 0.2% of AWS's. The moats of centralized cloud platforms include cluster management, RDMA high-speed networking, and elastic scaling. Decentralized cloud platforms have improved Web3 versions of these technologies, but two defects cannot be fixed. Latency: communication latency between distributed nodes is 6 times that of centralized clouds. Toolchain fragmentation: PyTorch/TensorFlow do not natively support decentralized scheduling.

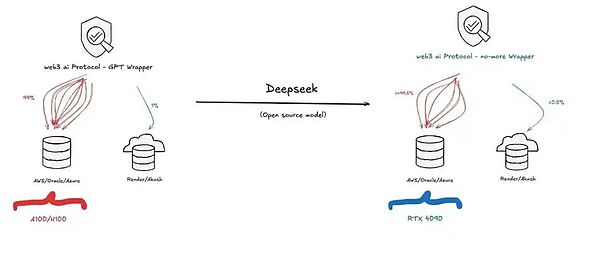

DeepSeek reduces computing power consumption by 50% through sparse training, and dynamic model pruning enables models with tens of billions of parameters to be trained on consumer-grade GPUs. Market expectations for short-term high-end GPU demand have been lowered significantly, and the market potential of edge computing has been revalued. As shown in the figure above, before DeepSeek emerged, the vast majority of protocols and applications in the industry used platforms such as AWS, and only a few use cases were deployed on decentralized GPU networks. Those use cases valued the latter's price advantage in consumer-grade computing power and paid little attention to latency.

This situation may worsen with the emergence of DeepSeek. DeepSeek has lifted the constraints on long-tail developers, and low-cost, efficient inference models will spread at an unprecedented rate. In fact, the centralized cloud platforms mentioned above and many countries have already begun to deploy DeepSeek. The sharp drop in inference costs will give rise to a large number of front-end applications, which have a huge demand for consumer-grade GPUs. Facing this huge upcoming market, centralized cloud platforms will launch a new round of competition for users, competing not only with the leading platforms but also with countless small centralized cloud platforms. The most direct way to compete is to cut prices. It is foreseeable that the price of the 4090 on centralized platforms will fall, which is a disaster for Web3 computing power platforms. Once price stops being a moat for the latter, and computing power platforms in the industry are forced to cut prices as well, the result will be unbearable for io.net, Render and Akash. The price war will destroy their remaining valuation ceiling, and the death spiral of declining revenue and user loss may force decentralized computing power protocols to transform in a new direction.

III. Significance to upstream and downstream protocols

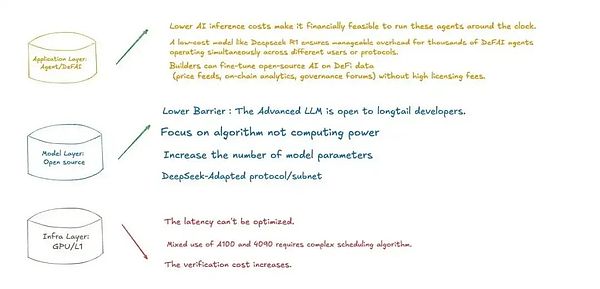

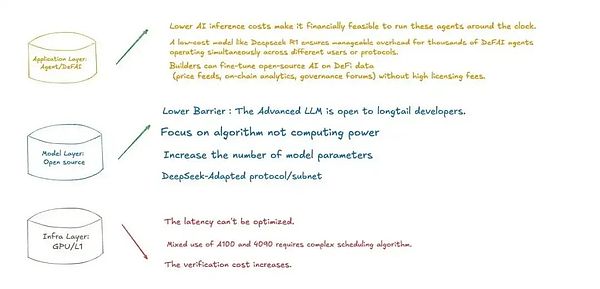

As shown in the figure, I think DeepSeek will affect the infrastructure layer, model layer and application layer differently. On the positive side:

The application layer will benefit from the significant reduction in inference costs. At low cost, more Agent applications can stay online for long periods and complete tasks in real time;

At the same time, DeepSeek's low model overhead allows DeFAI protocols to form more complex Swarms. Thousands of Agents can serve a single use case, and the division of labor among them will be fine-grained and clear, which can greatly improve the user experience and prevent user input from being incorrectly decomposed and executed by the model;

Developers at the application layer can fine-tune models and feed DeFi-related AI applications with prices, on-chain data and analysis, and protocol governance data, without having to pay high license fees.

With the birth of DeepSeek, the significance of the open source model layer has been proven. Opening high-end models to long-tail developers can stimulate a broad development boom;

The high wall of computing power built around high-end GPUs over the past three years has been completely broken. Developers have more choices, and the direction of open source models has been established. In the future, AI models will compete on algorithms rather than computing power, and this change of belief will become the cornerstone of confidence for open source model developers;

Subnets built specifically around DeepSeek will keep emerging, the number of model parameters supported by the same computing power will increase, and more developers will join the open source community.

On the negative side:

The inherent latency of computing power protocols in the infrastructure layer cannot be optimized away;

A hybrid network composed of A100s and 4090s places higher demands on coordination algorithms, which is not a strength of decentralized platforms.

IV. The Agent bubble bursts, and DeFAI breeds new life

Agents are the industry's last hope for AI. The emergence of DeepSeek lifted the computing power constraint and painted a picture of a coming explosion of applications. This should have been a huge boon for the Agent track, but because the industry is strongly correlated with the US stock market and Federal Reserve policy, the remaining bubble was punctured and the track's market capitalization fell to the bottom.

As AI and the industry converge, technological breakthroughs and market maneuvering always go hand in hand. The chain reaction set off by the shock to NVIDIA's market value works like a magic mirror, reflecting the deep dilemma of the industry's AI narrative: from on-chain Agents to DeFAI engines, the seemingly complete ecosystem map conceals a harsh reality of weak technical infrastructure, hollow value logic and capital dominance. The seemingly prosperous on-chain ecosystem has hidden ailments of its own: a large number of high-FDV tokens compete for limited liquidity, old assets survive on FOMO, and developers are trapped in PvP involution that drains their capacity to innovate. When incremental capital and user growth hit a ceiling, the entire industry falls into an "innovator's dilemma": it craves a breakthrough narrative but struggles to shake off path dependence. This torn state provides a historic opportunity for AI Agents, which represent not only an upgrade of the technical toolbox but also a reconstruction of the value creation paradigm.

Over the past year, more and more teams in the industry have found that the traditional financing model is failing: the routine of giving VCs a small share, keeping tight control of the market, and waiting for an exchange listing to pump the price is no longer sustainable. Under the triple pressure of VCs tightening their purse strings, retail investors refusing to take over, and the high threshold for listing on large exchanges, a new playbook better suited to the bear market is emerging: partnering with top KOLs plus a small number of VCs, a large-scale community launch, and a cold start at a low market capitalization.

Innovators represented by Soon and Pump Fun are opening up a new path through "community launch": with the joint endorsement of top KOLs, 40%-60% of tokens are distributed directly to the community, and the project launches at a valuation as low as 10 million US dollars FDV while raising millions of dollars. This model builds consensus FOMO through KOL influence, allowing the team to lock in profits early while trading high liquidity for market depth. Although it gives up the short-term advantage of market control, the team can buy back tokens at low prices in a bear market through a compliant market-making mechanism. In essence, this is a paradigm shift in the power structure: from a VC-led game of pass the parcel (institutions take over, exchanges sell, retail investors pay) to a transparent game of community consensus pricing, in which the project team and the community form a new symbiotic relationship around the liquidity premium. As the industry enters a cycle of transparency revolution, projects that cling to the traditional logic of market control may become afterimages of an era swept away by the migration of power.

The short-term pain of the market only proves that the long tide of technology is irreversible. When AI Agents reduce the cost of on-chain interaction by two orders of magnitude, and when adaptive models keep optimizing the capital efficiency of DeFi protocols, the industry can expect its long-awaited mass adoption. This change does not rely on concept hype or capital acceleration; it is rooted in technology meeting real needs. Just as the electricity revolution never stalled because light bulb companies went bankrupt, Agents will eventually become a truly golden track after the bubble bursts. DeFAI may be the fertile ground where new life is born. When low-cost inference becomes routine, we may soon see use cases in which hundreds of Agents are combined into a Swarm. With the same computing power, a significant increase in model parameters means that Agents in the open source model era can be fine-tuned more thoroughly. Even complex user instructions can be split into task pipelines in which each step can be fully executed by a single Agent. As each Agent optimizes its on-chain operations, overall DeFi protocol activity and liquidity may increase. More complex DeFi products, led by DeFAI, will appear, and this is where new opportunities emerge after the last round of bubbles has burst.
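The idea of splitting a complex instruction into a pipeline whose steps are each handled by one Agent can be sketched as follows. Everything here is hypothetical and for illustration only: the three Agent functions, the `SWARM` registry and the keyword-based splitter stand in for what would, in practice, be model-driven planning and real on-chain execution.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Step:
    skill: str   # which kind of Agent should handle this step
    detail: str  # the sub-instruction text


# Hypothetical single-purpose Agents; real ones would call a model and sign transactions.
def swap_agent(detail: str) -> str:
    return f"[swap] prepared: {detail}"


def stake_agent(detail: str) -> str:
    return f"[stake] prepared: {detail}"


def bridge_agent(detail: str) -> str:
    return f"[bridge] prepared: {detail}"


SWARM: dict[str, Callable[[str], str]] = {
    "swap": swap_agent,
    "stake": stake_agent,
    "bridge": bridge_agent,
}


def split_instruction(instruction: str) -> list[Step]:
    """Naive splitter: break on ' then ' and route each part by keyword match."""
    steps = []
    for part in instruction.lower().split(" then "):
        part = part.strip()
        skill = next((name for name in SWARM if name in part), None)
        if skill is None:
            # Refuse to guess rather than execute a step no Agent understands.
            raise ValueError(f"no Agent can handle: {part!r}")
        steps.append(Step(skill, part))
    return steps


def run_pipeline(instruction: str) -> list[str]:
    """Execute each step with the single Agent responsible for its skill."""
    return [SWARM[step.skill](step.detail) for step in split_instruction(instruction)]


results = run_pipeline("Swap 100 USDC for ETH then bridge the ETH to Base then stake it")
for line in results:
    print(line)
```

Rejecting any step that no Agent can handle, instead of guessing, is one simple way to keep user input from being decomposed and executed incorrectly.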

Catherine