Most of the material in this article is drawn from "Exploring the Design Space and Challenges for Oracle Implementations in DeFi Protocols", with substantial changes made on that basis.

Abstract: Oracles have always played an indispensable role in the DeFi ecosystem. Since smart contracts can only access on-chain data and cannot directly obtain information from outside the chain, oracles are needed to act as a medium to introduce off-chain data to the chain so that smart contracts can automatically process transactions based on off-chain data. Most DeFi protocols rely on oracle price feeds to process derivative contracts, liquidate non-performing assets, etc.

Currently, the DeFi ecosystem holds more than US$80 billion in funds, most of which depends on oracles. However, traditional oracles update prices with some latency, which has given rise to an oracle-specific form of MEV: OEV (oracle extractable value). Common OEV scenarios include oracle front-running, arbitrage, and liquidation profits. A growing number of solutions have been proposed to mitigate the negative impact of OEV.

This article introduces the existing OEV solutions, discusses their advantages and disadvantages, and proposes two new ideas, explaining their value, the problems still to be solved, and their limitations.

MEV (OEV) generated by oracles

To make the rest of this article easier to follow, we first briefly introduce push oracles and pull oracles. With a push oracle, the oracle proactively sends data to the smart contract on chain; Chainlink, for example, is mainly push-based. With a pull oracle, the DApp actively requests the data, and the oracle provides it after receiving the request.

The difference between the two modes is that push oracles deliver fresher data, which suits scenarios that are sensitive to real-time data, but the oracle frequently submits data to the chain, which consumes more gas. Pull oracles are more flexible and only provide new data when the DApp needs it. This consumes less gas, but the data arrives with some delay.

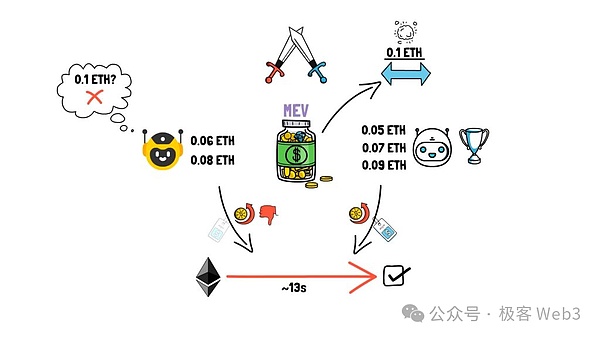

Since DeFi platforms require oracles to provide price feed data, a lagging price feed update leaves MEV that arbitrage bots can capture. MEV that arises from this reliance on oracles is called OEV. The main profit scenarios related to OEV are front-running, arbitrage, and liquidation. In the following discussion, we outline the profit scenarios that OEV creates and explore different OEV solutions, along with their advantages and disadvantages.

OEV generation and capture methods

According to the results observed in practice, there are three main ways to achieve OEV:

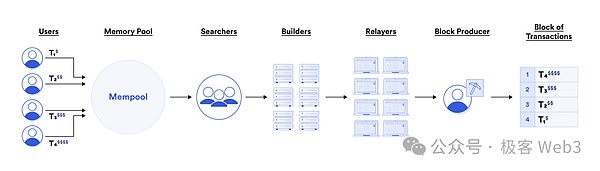

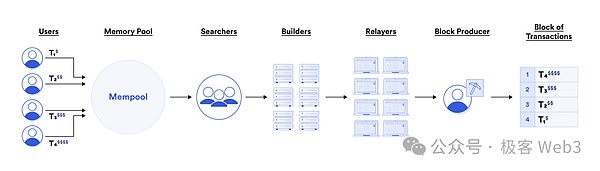

1. Front-running transactions. For example, an MEV Searcher on the Ethereum network monitors pending transactions in real time to find MEV opportunities. When the oracle updates the price feed, it submits the data to the chain, and this data sits in the transaction pool before it is included in a block. The Searcher monitors these pending transactions, predicts the upcoming on-chain price change, and places buy or sell orders ahead of the price update.

Many derivatives platforms have suffered from front-running. For example, GMX lost about 10% of its profits to frequent front-running. Only after a protocol update, in which GMX handed its oracle updates over to KeeperDAO (later rebranded as Rook) for unified scheduling, was the OEV capture problem alleviated. We briefly explain the solution adopted by GMX later.

2. Arbitrage: Use the delay in oracle data updates to conduct risk-free arbitrage between different markets. For example, suppose the asset prices on an on-chain derivatives platform lag by 10 seconds. If the ETH spot price on Binance suddenly rises while the on-chain ETH price has not yet changed, an arbitrage bot can immediately open a long position on chain and close it for a profit once the Chainlink price feed is updated.

The above example simplifies the actual situation, but it illustrates the arbitrage opportunities that price update delays can create. Arbitrageurs can capture OEV from DeFi platforms, but the OEV they capture ultimately comes out of the pockets of LPs.

Oracle front-running and arbitrage are often referred to as "toxic flow" in derivatives protocols because these transactions rest on information asymmetry. Arbitrageurs capture risk-free profits at the expense of the liquidity providers (LPs) in DeFi protocols. Since 2018, older DeFi protocols such as Synthetix have been plagued by such OEV attacks and have tried many ways to mitigate their negative effects. We briefly explain these countermeasures later.
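To make the arithmetic of this example concrete, here is a minimal sketch of the trade. All numbers (prices, position size, fee rate) are hypothetical, and the function name `stale_price_long_pnl` is invented for illustration; a real perpetual exchange would also apply funding, price impact, and margin rules.

```python
def stale_price_long_pnl(stale_price: float, fresh_price: float,
                         size_eth: float, fee_rate: float) -> float:
    """PnL of opening a long at the stale on-chain price and closing it
    after the feed catches up. A proportional fee is charged on both legs."""
    gross = (fresh_price - stale_price) * size_eth
    fees = fee_rate * size_eth * (stale_price + fresh_price)
    return gross - fees


# Hypothetical numbers: the on-chain feed still shows 3000 USD while the
# spot market has already moved to 3030 USD.
pnl = stale_price_long_pnl(3000.0, 3030.0, size_eth=10.0, fee_rate=0.0005)
print(f"arbitrageur PnL, paid for by LPs: {pnl:.2f} USD")
```

Under these assumptions the trade only pays off when the price move during the delay exceeds the round-trip fee, so the profitable trades are exactly the ones where LPs sit on the losing side.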

3. Liquidation: For lending protocols, if asset price updates are delayed, fast-reacting liquidators can profit from the inefficient liquidations that untimely price updates cause and obtain additional benefits. This behavior weakens market efficiency and harms the fairness of DeFi platforms.

The liquidation component is the core of any DeFi protocol involving leverage, and the granularity of price feed updates plays a key role in liquidation efficiency. If the push oracle is threshold-based, that is, the price feed is updated only when the price has moved by a certain amount, the liquidation process can be affected. Suppose the off-chain ETH price falls and a position on a lending protocol has reached its liquidation line, but the price move is smaller than the oracle's update threshold. The oracle does not update the data, so the liquidation is not executed on time, which may cause further negative effects.

To give a simple example, a collateral position faces liquidation because of a sharp price drop, but the on-chain price has not changed because the oracle has not yet updated. During this window, the Searcher sends a liquidation transaction in advance and pays higher gas to get priority inclusion on chain. When the on-chain price is updated, the Searcher immediately becomes the liquidator and makes a profit. Meanwhile, because the price update lagged, the original collateral holder has no time to top up the position and suffers additional losses.
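The threshold scenario can be sketched as follows. This is an illustrative model only: the class `ThresholdPushOracle`, the helper `is_liquidatable`, the 0.5% deviation threshold, and the position numbers are all assumptions, and real push oracles typically combine a deviation threshold with a time-based heartbeat that is omitted here.

```python
from dataclasses import dataclass


@dataclass
class ThresholdPushOracle:
    onchain_price: float
    deviation_threshold: float  # e.g. 0.005 = 0.5 %

    def observe(self, offchain_price: float) -> bool:
        """Push an update only if the move since the last on-chain price
        reaches the threshold. Returns True when an update is pushed."""
        move = abs(offchain_price - self.onchain_price) / self.onchain_price
        if move >= self.deviation_threshold:
            self.onchain_price = offchain_price
            return True
        return False


def is_liquidatable(collateral_eth: float, debt_usd: float,
                    price: float, liquidation_ltv: float) -> bool:
    """A position is liquidatable once its debt exceeds the borrowing
    power of its collateral at the given price."""
    return debt_usd > collateral_eth * price * liquidation_ltv


oracle = ThresholdPushOracle(onchain_price=2000.0, deviation_threshold=0.005)
pushed = oracle.observe(1992.0)  # a 0.4 % drop stays below the threshold

# Hypothetical position: 10 ETH collateral, 15,950 USD debt, 80 % liquidation LTV
print(pushed)                                                    # False
print(is_liquidatable(10, 15_950, 1992.0, 0.8))                  # True at the real price
print(is_liquidatable(10, 15_950, oracle.onchain_price, 0.8))    # False at the on-chain price
```

The position is underwater at the off-chain price but still looks healthy on chain, which is the gap a fast liquidator exploits once the next update arrives.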

Usually, DeFi protocols give part of the liquidated collateral to the liquidator as a reward. Large DeFi protocols such as Aave distributed more than $38 million in liquidation incentives on Ethereum alone in 2022, which not only overcompensated third-party liquidators but also harmed users. In addition, gas wars push the value of an MEV opportunity away from where it originated and out to the rest of the MEV supply chain.

OEV captured through front-running and arbitrage harms DeFi liquidity providers. OEV captured in liquidations hurts both sides of a loan: borrowers lose considerable funds in the liquidation process, and lenders, because oracle quotes are delayed, receive collateral worth less than expected.

In short, however OEV is captured, it causes losses to other market participants. In the end only the party capturing the OEV benefits, which harms the fairness and UX of DeFi.

Current OEV Solutions

Below, we will discuss push, pull, and other oracle models in the above context, as well as the OEV solutions currently available on the market that are built on them, analyze their effectiveness, and delve into the trade-offs these solutions make to solve OEV problems, including increasing centralization or trust assumptions, or sacrificing UX.

What if we only use pull oracles?

We mentioned pull oracles earlier; their defining characteristic is that DApps must actively request data from the oracle. Pyth is a representative pull oracle. One of its advantages is that it uses the high TPS and low latency of the Solana architecture to run Pythnet, a network that collects, aggregates, and distributes data. On Pythnet, publishers update price information every 300ms, and DApps that need it can query the latest data through the API and publish it to the chain.

It should be noted here that the publisher updates the price information every 300ms, which sounds like the logic of a push oracle. However, the logic of Pyth is "push update, pull query", that is, although the data enters Pythnet through push update, the on-chain application or other blockchain network can "pull" the latest data through the Pyth API or the cross-chain bridge Wormhole's messaging layer.

However, using only pull oracles cannot completely solve front-running and arbitrage. Users can still choose to trade at a price that meets specific conditions, which leads to the "adverse selection" problem. In the oracle setting, because the oracle updates the price with a delay, a searcher can watch the timing of on-chain price updates and deliberately pick a moment that is favorable for trading. The price at that moment is often inaccurate: it is already stale but has not yet been updated. This behavior leads to unfair market prices and lets searchers exploit information asymmetry for risk-free profit at the expense of other market participants.

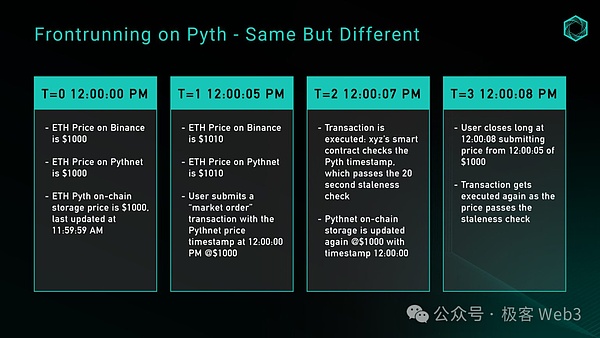

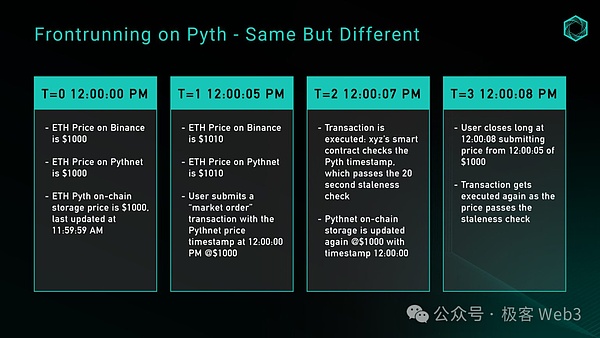

That is to say, arbitrage attacks caused by price delays still exist with pull oracles. Pyth's documentation proposes preventing this attack with a "staleness check", a mechanism that ensures the data or price information used in a transaction is fresh.

Specifically, staleness check verifies whether the price data used is generated within a reasonable time window to prevent traders from trading with outdated price information, thereby reducing arbitrage and unfair trading practices.

In practice, however, it is difficult to determine the best time threshold. We can revisit the previous example to understand the staleness check. Assume that a perpetual contract exchange uses Pyth's ETH/USD price feed and sets a 20-second staleness threshold, which means that the timestamp of the Pyth price may differ from the timestamp of the block that executes the downstream transaction by at most 20 seconds. If this range is exceeded, the price is considered outdated and cannot be used. This design is intended to prevent arbitrage with expired prices.

Shortening the staleness threshold seems like a good solution, but it may cause transactions to revert on networks with unpredictable block times, which hurts the user experience. Pyth's price feeds rely on a cross-chain bridge. Taking Wormhole as an example, its Guardian nodes are referred to here as "Wormhole Keepers". The oracle must leave enough buffer time for the Wormhole Keepers to confirm the price and for the target chain to process the transaction and include it in a block.
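A staleness check reduces to a single timestamp comparison. The sketch below is a generic illustration, not Pyth's actual contract code; the function name `passes_staleness_check` and the timestamps are assumptions.

```python
def passes_staleness_check(price_timestamp: int, block_timestamp: int,
                           max_age_seconds: int) -> bool:
    """Accept a price only if it was published at most `max_age_seconds`
    before the block that consumes it. Future-dated prices are rejected
    here, which is a simplifying assumption of this sketch."""
    age = block_timestamp - price_timestamp
    return 0 <= age <= max_age_seconds


# A price published at t=100 reaches the chain in a block stamped t=112.
print(passes_staleness_check(100, 112, max_age_seconds=20))  # True
print(passes_staleness_check(100, 112, max_age_seconds=5))   # False
```

The second call shows the trade-off: a tight threshold leaves less room for stale-price arbitrage, but an honest transaction that takes 12 seconds to land would revert.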

Oracle Order Flow Auction (OFA)

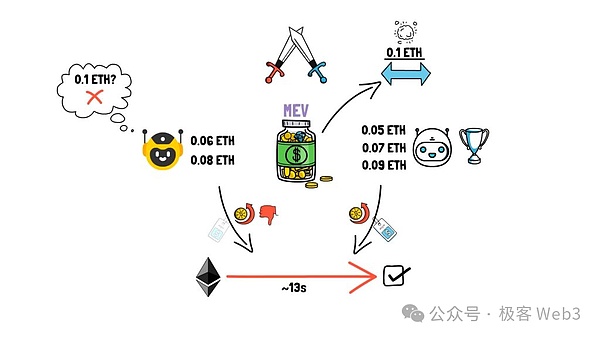

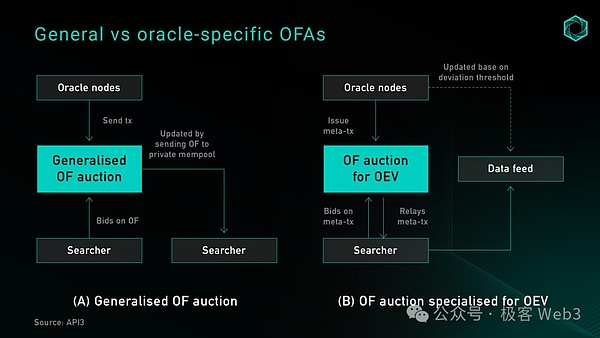

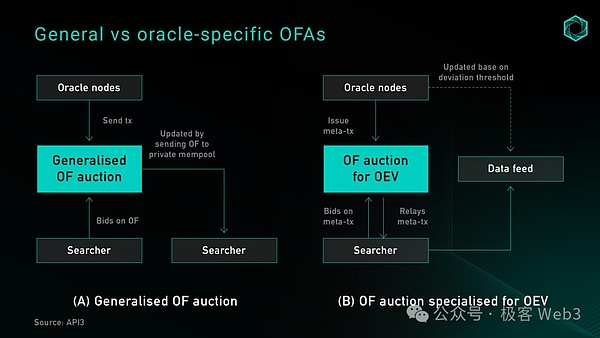

To deal with the negative impact of MEV, a new solution, the oracle-specific Order Flow Auction (OFA), has gradually emerged and achieved notable results. OFA is a general third-party auction service: the oracle signs its latest price feed data and sends it to an off-chain auction platform, and another party submits that data to the chain on the oracle's behalf. Because a price feed update creates MEV opportunities as soon as it lands on chain, MEV Searchers monitor the price feed data that oracles submit to the auction platform and try to exploit these opportunities.

Searchers are often willing to bid for the right to push the price feed update to the chain in place of the oracle. The winning Searcher then uses this position to construct MEV transactions and become the biggest beneficiary. To do so, the Searcher has to take part in the auction and pay for the win, and the auction platform distributes this payment to the oracle or to other parties. In effect, part of the MEV extractor's profit is redistributed to others, which alleviates the OEV problem.

The specific process of OFA is as follows:

1. Transaction submission

All pending transaction flows will be routed to a private OFA transaction pool instead of being sent directly to the chain. To ensure fairness, the transaction pool remains private and is only accessible to auction participants.

2. Auction Bidding

The private transaction pool is where OFA conducts its auctions. Searchers take part in the auction here to obtain the right to execute orders. Bids are based on the value a Searcher expects to extract from the order, taking into account factors such as transaction type, current gas price, and expected MEV profit.

3. Selection and Execution

The winning Searcher pays the auction amount and obtains the right to execute the transaction. Acting in their own interest, they order the transactions so as to extract the maximum MEV and submit them to the chain.

4. Profit Distribution

This step is the core of OFA. In order to obtain MEV opportunities, Searchers will pay an additional auction amount, which will be deposited in the smart contract and distributed according to a certain proportion to compensate the protocol and users for the value lost in OFA.
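The four steps above can be sketched as a sealed-bid auction followed by a payment split. This is a simplified illustration: the names `Bid` and `settle_auction`, the first-price rule, and the 80/20 split are assumptions, not the mechanics of any specific OFA product.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Bid:
    searcher: str
    amount_wei: int


def settle_auction(bids: list[Bid], protocol_share_bps: int) -> Optional[dict]:
    """Pick the highest sealed bid and split the winner's payment.

    `protocol_share_bps` is the share (in basis points) returned to the
    protocol and its users; the remainder goes to the other recipients
    (oracle, auction operator), lumped together in this sketch."""
    if not bids:
        return None  # nobody bid, so this update carried no auctioned OEV
    winner = max(bids, key=lambda b: b.amount_wei)
    to_protocol = winner.amount_wei * protocol_share_bps // 10_000
    return {
        "winner": winner.searcher,
        "paid": winner.amount_wei,
        "to_protocol": to_protocol,
        "to_others": winner.amount_wei - to_protocol,
    }


result = settle_auction(
    [Bid("searcher_a", 40), Bid("searcher_b", 55), Bid("searcher_c", 30)],
    protocol_share_bps=8_000,
)
print(result)
```

Integer amounts and basis points are used instead of floats so that the two shares always add up to exactly what the winner paid.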

Judging from the data, OFA has significantly alleviated the MEV and OEV problems. Adoption of such solutions is rising rapidly: more than 10% of Ethereum transactions currently go through private channels (including private RPCs and OFAs). OFA can be expected to have great potential for further development.

However, a general OFA solution has one problem: the oracle cannot foresee whether an update will generate OEV. If it does not, OFA only adds latency, because the oracle has to take the extra step of sending the transaction to the auction platform. On the other hand, the simplest way to optimize OEV and reduce latency is to hand all oracle order flow to a single dominant searcher, but doing so obviously brings significant centralization risk, encourages rent extraction and covert censorship, and ultimately damages the user experience.

The price updates auctioned through OFA are separate from the existing rule-based updates, which still go through the public mempool. This mechanism ensures that oracle price updates, and any additional value they generate, are retained within the application layer. It also improves data granularity: searchers can request price feed updates without making oracle nodes bear the extra cost of updating more frequently.

OFA is particularly well suited to liquidations because it provides finer-grained price updates, maximizes the capital returned to liquidated borrowers, reduces the rewards the protocol pays to liquidators, and returns the liquidators' surplus, captured through the auction, to users.

However, although OFA mitigates front-running and arbitrage to a certain extent, some problems remain unsolved. Under perfect competition and a first-price sealed-bid auction, bidding should drive the extra revenue a searcher keeps from front-running down toward the cost of the block space needed to perform the MEV operation. At the same time, the finer granularity of price updates also reduces the number of arbitrage opportunities.

Currently, there are two ways to implement an oracle-specific OFA: integrate with a third-party auction service (such as OEV-Share), or have the DeFi application build the auction service itself.
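The competitive-bidding argument can be written compactly. The symbols are introduced here for illustration and do not come from the original: $V$ is the value a searcher can extract from one oracle update, $b$ is the winning bid, and $c$ is the block space (gas) cost of executing the bundle. This is an idealized zero-profit condition, not a full auction-theoretic derivation.

```latex
\pi = V - b - c,
\qquad
\pi \to 0 \;\Longrightarrow\; b^{*} \approx V - c
\;\Longleftrightarrow\; V - b^{*} \approx c
```

Read this way, the searcher's retained revenue $V - b^{*}$ shrinks to roughly the execution cost $c$, and the rest of the extracted value flows to whoever receives the auction proceeds.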

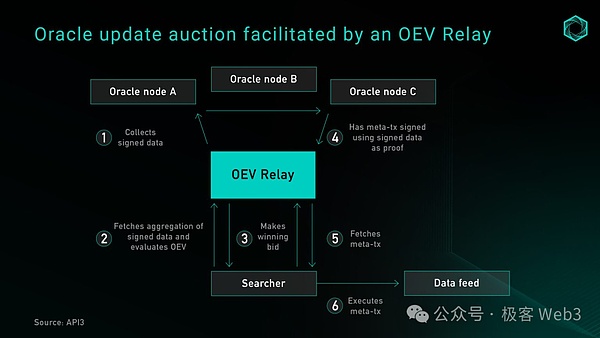

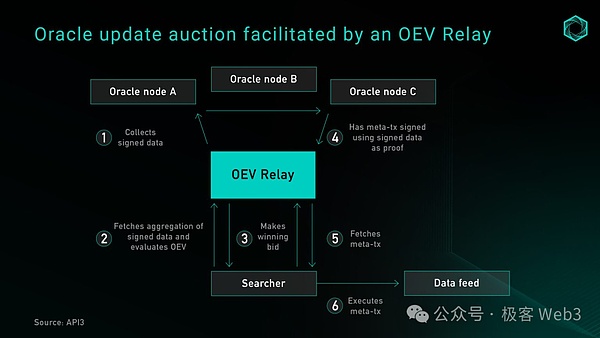

API3 uses an OEV relay, based on the Flashbots concept, as an API that provides DoS protection during auctions. The relay is responsible for collecting meta-transactions from the oracle, screening and aggregating the searchers' bids, and distributing the proceeds in a trustless manner. The winning bidder must transfer the bid amount to a proxy contract controlled by the protocol, after which the signed data provided by the relay updates the price feed.

Another option is for the protocol to build its own native auction service and capture all the extra revenue from OEV without relying on intermediaries. The BBOX project plans to embed auctions into its liquidation mechanism to capture OEV and return it to applications and users. In this way, the protocol can distribute value better and reduce its dependence on third-party services, which strengthens the autonomy of the system and improves returns for users.

Running a centralized node or Keeper

In the early days of Web3, oracle-driven perpetual contract exchanges proposed the idea of running a centralized Keeper (node or entity dedicated to trading) network to deal with the OEV problem. The core idea is to aggregate prices from third-party sources such as centralized exchanges and use Chainlink data feeds as a backup. This model was promoted by GMX v1 and applied in many subsequent forks. Its main value lies in the Keeper network managed by a single operator, which completely prevents the problem of front-running.

Of course, this method has obvious centralization risks. The centralized Keeper system can determine the execution price without verifying the source of the price. The Keeper in GMX v1 is not an on-chain transparent mechanism, but a program run by its team address on a centralized server, and the authenticity and source of the execution price cannot be verified.

To extract OEV, the searcher monitors oracle update transactions in the mempool and, through the MEV infrastructure, bundles the oracle update with its own transaction and has both executed together for a profit. For arbitrage and liquidation, the OEV searcher only needs to monitor the deviation between the on-chain and off-chain prices and then use the MEV infrastructure to make sure its own transaction is executed on chain first.

Whichever process the searcher uses, the benefits of OEV go to the MEV infrastructure and the OEV searcher, while the protocol that generates the OEV does not receive its due share. (According to some data, the OEV problem previously drained almost 10% of the GMX platform's profits.) To solve this problem, GMX, an on-chain derivatives trading platform that has been the source of a great deal of OEV, adopted a simple approach: let parties it designates capture the OEV, and then have them return as much of it as possible to the GMX platform.

To this end, GMX introduced Rook and a whitelist. In short, GMX's oracle updates are executed through Rook, and Rook extracts the available OEV according to current market conditions. 80% of this OEV is returned to the GMX protocol.

To sum up, GMX uses the whitelist to give Rook the right to update the oracle, extracts OEV through Rook so that other searchers cannot, and returns 80% of the OEV to the GMX system. The approach is admittedly simple and blunt.

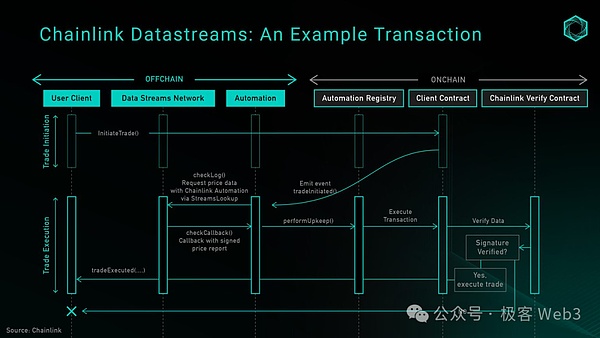

To address the centralization risk of the single-operator Keeper network described above, third-party service providers can be brought in to build a more decentralized automation network. The representative product is Chainlink Automation, which is used together with Chainlink Data Streams, Chainlink's new pull-based, low-latency oracle service. The service was announced as entering closed testing at the end of 2023, yet it is already used in production in GMX v2.

We can refer to the logic of the GMX v2 system to explore how to integrate the Chainlink Data Streams design into actual DeFi applications.

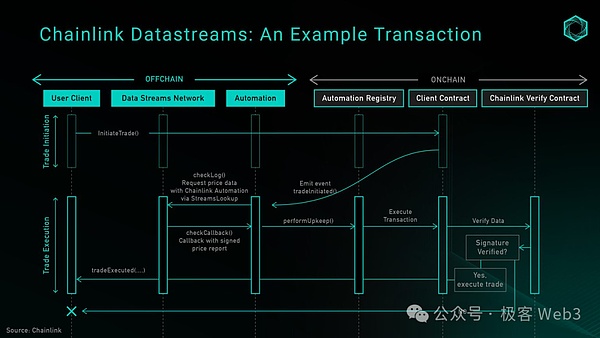

Overall, Chainlink Data Streams consists of three main components: the Data DON, the Automation DON, and the on-chain verification contract. The Data DON is an off-chain data network with a Pythnet-like architecture for data maintenance and aggregation. The Automation DON is a Keeper network maintained by the same node operators as the Data DON, and it pulls prices from the Data DON onto the chain. Finally, the on-chain verification contract checks that the off-chain signatures are correct.

The figure above shows the flow of a call to the open-trade function, where the Automation DON fetches prices from the Data DON and updates the on-chain storage. Currently, only whitelisted users may query the Data DON directly, so a protocol can either hand maintenance tasks over to the Automation DON or run a Keeper itself. As the product matures, however, it is expected to move gradually toward a permissionless structure.

In terms of security, relying on the Automation DON carries the same trust assumptions as using the Data DON alone, which is a significant improvement over the single-Keeper design. However, handing the right to update prices to the Automation DON also means that OEV becomes exclusive to the nodes in the Keeper network, a trust assumption similar to the one Ethereum makes about Lido node operators. Lido's node operators tend to be institutions with strong public reputations, and they hold a large share of the Ethereum staking market. Ethereum relies on the constraints of social consensus to keep Lido from colluding as a cartel and forming a monopoly.

Pull Oracle: Delayed Settlement

The decentralized perpetual contract exchange Synthetix introduced Pyth price data for settling contracts in its v2, which is a major improvement. A user's order can be filled at either the Chainlink or the Pyth price, as long as the price deviation does not exceed a predetermined threshold and the timestamp passes the staleness check. However, simply switching to a pull oracle cannot solve all OEV-related problems. To combat front-running, many DeFi protocols have introduced a "last look" pricing mechanism, a delayed order that splits a user's market order into two steps:

1. The user submits an "intent" to open a market order to the chain, along with order parameters such as size, leverage, collateral, and slippage tolerance, and pays an additional keeper fee.

2. The keeper receives the order, requests the latest Pyth price data, and calls the Synthetix execution contract in the transaction. The contract checks the predefined parameters, and if all pass, the order is executed, the on-chain price storage is updated, and the position is opened. The keeper collects the fees paid by the user to compensate for the gas fees and network maintenance costs it uses.
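The two steps can be sketched as a commit followed by a keeper execution. This is an illustrative model, not Synthetix's contract logic: `OrderIntent`, `execute_intent`, the `min_delay` parameter (which forces the execution price to be newer than the commitment), and all numbers are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderIntent:
    trader: str
    size: float             # positive = long, negative = short
    reference_price: float  # price the trader saw when committing
    max_slippage: float     # e.g. 0.01 = 1 %
    committed_at: int       # timestamp of the commit transaction
    keeper_fee: float       # paid up front by the trader


def execute_intent(intent: OrderIntent, oracle_price: float,
                   oracle_timestamp: int, now: int,
                   min_delay: int, max_price_age: int) -> dict:
    """Step 2: a keeper executes the intent with a price it pulled."""
    if oracle_timestamp < intent.committed_at + min_delay:
        raise ValueError("price is not newer than the commitment")
    if now - oracle_timestamp > max_price_age:
        raise ValueError("price failed the staleness check")
    slippage = abs(oracle_price - intent.reference_price) / intent.reference_price
    if slippage > intent.max_slippage:
        raise ValueError("slippage tolerance exceeded")
    return {"trader": intent.trader, "size": intent.size,
            "fill_price": oracle_price, "keeper_reward": intent.keeper_fee}


# Step 1: the trader commits an intent at t=100 and prepays the keeper fee.
intent = OrderIntent("alice", 1.0, 3000.0, 0.01, committed_at=100, keeper_fee=2.0)
# Step 2: a keeper executes at t=104 with a price published at t=103.
fill = execute_intent(intent, oracle_price=3006.0, oracle_timestamp=103,
                      now=104, min_delay=2, max_price_age=20)
print(fill["fill_price"], fill["keeper_reward"])
```

Because the fill price must postdate the commitment, the trader cannot know it when submitting the order, which is what removes the front-running edge.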

This approach prevents prices that are unfavorable to users from being submitted to the chain and effectively solves front-running and arbitrage in the protocol. However, the design makes certain trade-offs in user experience: executing such a market order requires two transactions, and users have to pay the gas fee as well as the cost of updating the oracle's on-chain storage.

Previously, the fee for updating the oracle's on-chain storage was a fixed $2, but it was recently changed to a dynamic fee based on the Optimism gas oracle plus a premium, which varies with Layer 2 activity. In short, this solution sacrifices some user experience for traders in exchange for higher profits for liquidity providers.

Outlook: Future OEV Solution Ideas

Pull Oracle: Optimistic Settlement Mechanism

Because delayed orders introduce additional fees for users, and these fees grow in proportion to the L2's DA fees, an alternative order settlement model called "optimistic settlement" has been proposed. It aims to reduce user costs while maintaining decentralization and protocol security. As the name suggests, the optimistic settlement mechanism lets traders execute market orders atomically: the system optimistically accepts all prices and provides a time window in which searchers can submit proofs showing that an order is malicious.

This article outlines several iterations of this idea, walks through the reasoning behind them, and briefly describes the problems that remain to be solved.

The original idea was that when a user opens a market order, they submit a price through parsePriceFeedUpdates; the user or any third party can then submit a settlement transaction, which uses price data to confirm the trade. At settlement, if there is a negative difference between the two prices, the difference is applied as slippage to the user's profit and loss.

The advantage of this approach is that it reduces the cost burden on users and mitigates the risk of front-running. However, it also introduces a two-step settlement process, the same disadvantage we identified in the Synthetix delayed settlement model. The additional settlement transaction is likely redundant in most cases, especially when the price movement between order placement and settlement does not exceed the system-defined front-running threshold.

Another way around this problem is to let the system accept orders optimistically and then open a permissionless challenge period. During this period, anyone can submit evidence that the price moved between the price timestamp and the block timestamp by enough to create a profitable front-running opportunity. By introducing a challenge period, the optimistic mechanism effectively reduces potential arbitrage in the system and makes the trading process more transparent and fair.

The specific process is as follows:

1. The user creates a market order at the current market price and sends this price along with the embedded Pyth price data as an order creation transaction.

2. The smart contract optimistically verifies and stores this information.

3. After the order is confirmed on the chain, there will be a challenge period during which the Searcher can submit proof that the trader has malicious intent. This proof must include evidence that the trader used past prices with the intention of arbitrage. If the system accepts the proof, the price difference will be applied to the trader's execution price as slippage, and the original OEV earnings will be given to the Keeper as a reward.

4. After the challenge period ends, all prices will be considered valid by the system.
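The process above can be sketched as follows. Everything here is a hypothetical model of the idea rather than an existing implementation: `OptimisticOrder`, `challenge`, the threshold, and the assumption that the challenger can supply a verified price for the block timestamp are all introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class OptimisticOrder:
    size: float            # positive = long, negative = short
    fill_price: float      # price the trader submitted with the order
    block_timestamp: int   # when the order was confirmed on chain
    challenged: bool = False


def challenge(order: OptimisticOrder, price_at_block: float, now: int,
              challenge_period: int, front_run_threshold: float) -> float:
    """Return the reward paid to the challenger (0.0 if the challenge fails).

    `price_at_block` stands for a verified oracle price whose timestamp
    matches the block timestamp; how it is proven is out of scope here."""
    if order.challenged or now > order.block_timestamp + challenge_period:
        return 0.0  # already adjusted, or the price has become final
    # The trader's unearned edge: positive when the older price favoured them.
    edge = (price_at_block - order.fill_price) * order.size
    notional = order.fill_price * abs(order.size)
    if edge <= 0 or edge / notional < front_run_threshold:
        return 0.0  # honest order, or deviation too small to count
    order.fill_price = price_at_block  # apply the difference as slippage
    order.challenged = True
    return edge


order = OptimisticOrder(size=2.0, fill_price=3000.0, block_timestamp=1_000)
reward = challenge(order, price_at_block=3030.0, now=1_005,
                   challenge_period=30, front_run_threshold=0.002)
print(reward, order.fill_price)
```

An order that is never challenged costs the user nothing extra, which is the main difference from the two-transaction delayed order.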

This optimistic model has two advantages. First, it reduces the cost burden on users: they only pay the gas for order creation and the oracle update in the same transaction, with no additional settlement fee. Second, it deters front-running and protects the integrity of the liquidity pool by giving keepers an economic incentive to submit proofs of front-running, which also keeps the keeper network healthy.

This idea certainly has great potential, but there are still some open problems that need to be solved if it is to be implemented:

Define the "adverse selection" problem: That is, how does the system distinguish between users who submit expired prices because of network delays and users who deliberately exploit delays for arbitrage? One initial idea is to measure volatility within the staleness check window (such as 15 seconds). If the volatility exceeds the net execution fee, the order may be flagged as potential arbitrage.

Set a suitable challenge period: Given that the window in which malicious order flow is exposed may be very short, the keeper should have a reasonable time window to challenge the price. Although batch verification may save gas, the unpredictability of order flow makes it difficult to ensure that all price data can be verified or challenged in time.

Keeper's economic incentives: The gas cost of submitting a verification is not low. For keepers to have a positive effect on the system, the reward for submitting a verification must exceed its cost. However, differences in order size mean that this assumption does not necessarily hold in all cases.

Is it necessary to establish a similar mechanism for closing orders? If so, what impact may it have on user experience?

Ensure that users are not affected by "unreasonable" slippage: In a market flash crash, there may be a huge price difference between order creation and on-chain confirmation, so some kind of stop-loss measure or circuit breaker is needed. Here we consider using the EMA price provided by Pyth to keep the price source stable.
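Two of the open questions above, flagging suspicious orders and keeper incentives, reduce to simple inequalities. The sketch below is only one possible reading: the function names, the use of the high-low range as the volatility measure, and the numbers are assumptions.

```python
def exceeds_fee(prices_in_window: list[float], net_fee_rate: float) -> bool:
    """Flag an order when the price range inside the staleness window is
    larger than the net execution fee, i.e. a stale price could pay off."""
    low, high = min(prices_in_window), max(prices_in_window)
    return (high - low) / low > net_fee_rate


def challenge_pays_off(price_edge_usd: float, gas_cost_usd: float) -> bool:
    """A keeper only challenges when the reward beats the gas it spends."""
    return price_edge_usd > gas_cost_usd


# Hypothetical 15-second window of ETH prices and a 0.1 % net fee
print(exceeds_fee([3000.0, 3004.5, 3002.0], net_fee_rate=0.001))  # True
print(exceeds_fee([3000.0, 3001.0], net_fee_rate=0.001))          # False
# A large order is worth challenging, a small one is not
print(challenge_pays_off(price_edge_usd=60.0, gas_cost_usd=5.0))  # True
print(challenge_pays_off(price_edge_usd=0.5, gas_cost_usd=5.0))   # False
```

The last line illustrates the incentive gap: small stale-price orders can slip through simply because nobody is paid enough to challenge them.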

ZK coprocessor - another form of data consumption

Another direction worth exploring is the use of ZK coprocessors. These processors are designed to handle complex calculations off-chain and have access to on-chain status, while providing proofs to ensure that the calculation results can be verified without permission. Projects like Axiom allow contracts to query historical blockchain data, perform calculations off-chain, and submit ZK proofs to ensure that the results are correctly calculated based on valid on-chain data. Coprocessors make it possible to build a manipulation-resistant custom TWAP oracle based on multiple DeFi native liquidity sources (such as Uniswap+Curve).
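As an illustration of the kind of computation a coprocessor could prove, here is a plain time-weighted average price over historical observations, combined across sources with a median. The proof generation itself is not shown, and the function names, the median rule, and the sample data are assumptions rather than the API of Axiom or any other project.

```python
from statistics import median


def twap(observations: list[tuple[int, float]]) -> float:
    """Time-weighted average price over [first_ts, last_ts].

    `observations` is a time-sorted list of (timestamp, price); each price
    is assumed to hold until the next observation."""
    if len(observations) < 2:
        raise ValueError("need at least two observations")
    weighted_sum = 0.0
    for (t0, p0), (t1, _) in zip(observations, observations[1:]):
        if t1 <= t0:
            raise ValueError("timestamps must be strictly increasing")
        weighted_sum += p0 * (t1 - t0)
    return weighted_sum / (observations[-1][0] - observations[0][0])


def multi_source_twap(sources: list[list[tuple[int, float]]]) -> float:
    """Combine per-pool TWAPs with a median to dampen a single outlier."""
    return median(twap(obs) for obs in sources)


# Pool A sees a one-second spike to 1000; pool B stays near 101.
pool_a = [(0, 100.0), (11, 1000.0), (12, 100.0), (60, 100.0)]
pool_b = [(0, 101.0), (60, 101.0)]
print(twap(pool_a))                         # 115.0
print(multi_source_twap([pool_a, pool_b]))  # 108.0
```

A tenfold spot spike moves the 60-second TWAP by only 15%, which is why averaging over history raises the cost of manipulation.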

Compared with traditional oracles, ZK coprocessors will expand the range of data that can be safely provided to dApps. Currently, traditional oracles mainly provide the latest asset price data (such as EMA prices provided by Pyth). With ZK coprocessors, applications can introduce more business logic based on historical blockchain data to improve protocol security or enhance user experience.

However, ZK coprocessors are still in the early stages of development and will face some bottlenecks, such as:

The proof process may be too long when processing large amounts of blockchain data.

Limited to blockchain data, it cannot solve the need for secure communication with non-Web3 applications.

Going oracle-free: the future of DeFi?

A newer line of thought holds that DeFi's oracle dependency can be solved by designing a primitive that fundamentally removes the need for external price data. Recently, designs have appeared that use various AMM LP tokens as pricing tools. They rest on one core concept: in a constant function market maker, an LP position represents preset weights of the two assets in the pool, and trades follow an automatic pricing formula (such as x * y = k). By using LP tokens, the protocol can directly obtain information that would usually require an oracle, which gives rise to oracle-free solutions. Such solutions reduce the reliance of DeFi protocols on oracles, and some projects are building applications in this direction.
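The pricing information embedded in a constant product pool can be read directly from its reserves. The sketch below ignores swap fees, and the function names and reserve numbers are hypothetical.

```python
def spot_price(reserve_x: float, reserve_y: float) -> float:
    """Marginal price of X in units of Y implied by x * y = k."""
    return reserve_y / reserve_x


def swap_x_for_y(reserve_x: float, reserve_y: float, dx: float) -> float:
    """Amount of Y received for `dx` of X while keeping k constant (no fee)."""
    k = reserve_x * reserve_y
    return reserve_y - k / (reserve_x + dx)


def lp_value_in_y(share: float, reserve_x: float, reserve_y: float) -> float:
    """Value of an LP position owning `share` of the pool, priced in Y."""
    return share * (reserve_x * spot_price(reserve_x, reserve_y) + reserve_y)


# Hypothetical pool: 100 ETH (X) against 300,000 USDC (Y)
print(spot_price(100.0, 300_000.0))           # 3000.0
print(swap_x_for_y(100.0, 300_000.0, 1.0))    # slightly under 3000 due to price impact
print(lp_value_in_y(0.01, 100.0, 300_000.0))  # about 6000
```

No external feed appears anywhere in these functions, which is the sense in which an LP position can stand in for an oracle. The trade-off is that the implied price is only as reliable as the pool's liquidity.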

Conclusion

Price data remains a core component of many decentralized applications today, and the total asset value secured by oracles is still increasing, which further demonstrates the importance of oracles in the market. This article aims to draw attention to the risks of oracle extractable value (OEV) as it exists today and to explore alternative designs, including push and pull oracles, AMM LP tokens, and off-chain coprocessors.

Catherine
