Source: Heart of the Metaverse

Global technology giant Google recently launched Gemma 3, the latest generation of its family of open AI models, which Google positions as a new benchmark for making AI technology widely accessible.

Built on the same research and technology as Gemini 2.0, Gemma 3 is lightweight, portable and highly adaptable, allowing developers to build AI applications that run on a wide range of devices.

By the first anniversary of the Gemma models, cumulative downloads had exceeded 100 million, and community developers had built more than 60,000 variants on top of them. This ecosystem, dubbed the "Gemmaverse", is becoming a key driver of the democratization of AI technology.

01. Core Highlights of Gemma 3

Gemma 3 comes in four sizes, with 1 billion, 4 billion, 12 billion and 27 billion parameters, so developers can choose the one that best matches their hardware and performance requirements. According to Google, the models run quickly even on ordinary computing devices without sacrificing functionality or accuracy.
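Choosing a size "according to hardware conditions" mostly comes down to memory. A rough first check is parameter count times bytes per parameter. The sketch below assumes nominal round parameter counts, standard storage costs for bf16, int8 and int4 weights, and an arbitrary 80% headroom factor; it ignores activations, the KV cache and runtime overhead, so treat the numbers as a lower bound rather than a deployment guide.

```python
from typing import Optional

# Nominal parameter counts for the four Gemma 3 sizes named in the article.
# Real checkpoints differ slightly from these round numbers.
GEMMA3_SIZES = {
    "1B": 1_000_000_000,
    "4B": 4_000_000_000,
    "12B": 12_000_000_000,
    "27B": 27_000_000_000,
}

# Storage cost per parameter for common weight formats.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_gb(num_params: int, precision: str = "bf16") -> float:
    """Approximate size of the weights alone in GB (1 GB = 1e9 bytes).

    Ignores activations, the KV cache and framework overhead, so actual
    memory use at inference time is higher.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def largest_fitting_size(available_gb: float, precision: str = "bf16",
                         headroom: float = 0.8) -> Optional[str]:
    """Pick the largest size whose weights fit in headroom x available memory.

    The 0.8 headroom factor is an assumption that leaves room for the
    KV cache and runtime overhead.
    """
    budget = available_gb * headroom
    fitting = [name for name, n in GEMMA3_SIZES.items()
               if weight_gb(n, precision) <= budget]
    # Dicts preserve insertion order, so the last match is the largest size.
    return fitting[-1] if fitting else None


if __name__ == "__main__":
    for name, n in GEMMA3_SIZES.items():
        print(name, {p: weight_gb(n, p) for p in BYTES_PER_PARAM})
    print(largest_fitting_size(24.0))          # 24 GB of memory, bf16 weights
    print(largest_fitting_size(24.0, "int4"))  # same memory, 4-bit weights
```

Under these assumptions, a 24 GB card fits the 4B model at bf16 (the 12B model's roughly 24 GB of weights exceed the 19.2 GB budget), while 4-bit weights shrink even the 27B model to about 13.5 GB.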

Its core advantages include:

Support for more than 140 languages: To serve users worldwide, Gemma 3 is pretrained on more than 140 languages. Developers can build applications that communicate with users in their native languages, greatly extending the global reach of their projects.

Advanced text and visual analysis: Gemma 3 can reason over text, images and short videos, enabling developers to build highly interactive, intelligent applications for scenarios ranging from content analysis to creative workflows.

Large context window: Gemma 3 offers a context window of up to 128,000 tokens, enough to process and synthesize large amounts of information, which makes it well suited to applications that require deep content understanding.

Lightweight quantized models: Gemma 3 is available in official quantized versions that significantly reduce model size while largely preserving output accuracy. This is particularly useful for mobile devices and other resource-constrained environments.
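To make the size-versus-accuracy trade-off of quantization concrete, here is a minimal sketch of symmetric per-tensor int8 weight quantization. This is a generic textbook technique chosen purely for illustration; the article does not say how Google produced its official quantized Gemma 3 models, and the function names and sample weights below are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantization (illustrative, not Google's method).

    One shared scale maps the largest-magnitude weight to +/-127; every
    weight is then rounded to the nearest integer step.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map integers back to approximate float weights."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.42, -1.27, 0.003, 0.9, -0.5]  # hypothetical weights
    q, scale = quantize_int8(w)
    w_hat = dequantize_int8(q, scale)
    # Rounding error per weight is at most scale / 2.
    max_err = max(abs(a - b) for a, b in zip(w, w_hat))
    print("int8 values:", q)
    print("scale:", scale, "max error:", max_err)
    # Storage drops from 2 bytes per weight (bf16) to 1 byte plus one shared scale.
```

Each weight now takes one byte instead of two, at the cost of a bounded rounding error of at most half a quantization step. Production schemes typically refine this with per-channel or per-group scales, lower bit widths, or quantization-aware training.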

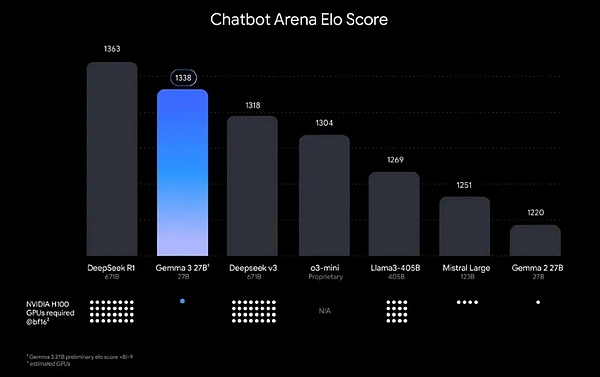

Gemma 3's performance advantage is evident on the Chatbot Arena Elo leaderboard. Running on a single NVIDIA H100 GPU, the flagship 27-billion-parameter model reaches an Elo score of 1338, placing it among the top chatbots, while some competing models require as many as 32 GPUs to achieve similar performance.
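Chatbot Arena ranks models from pairwise human preference votes and reports ratings on an Elo-style scale. As a rough guide to what a rating gap means, the sketch below assumes the standard Elo expected-score formula (the leaderboard's exact fitting procedure may differ). The 1338 figure comes from the article; the rival rating of 1300 is a made-up value for illustration.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo logistic curve.

    A 400-point gap corresponds to 10:1 odds in favor of the higher rating.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


if __name__ == "__main__":
    gemma3_27b = 1338.0          # score reported in the article
    hypothetical_rival = 1300.0  # made-up rating for illustration
    p = expected_score(gemma3_27b, hypothetical_rival)
    print(f"Expected preference rate vs. rival: {p:.3f}")
```

Under this model, a 38-point lead translates into being preferred in roughly 55% of head-to-head comparisons, so small rating differences near the top of the leaderboard correspond to modest preference margins.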

Another major advantage of Gemma 3 is that it fits smoothly into developers' existing workflows:

Compatible with multiple tools: Gemma 3 works with mainstream AI libraries and tools such as Hugging Face Transformers, JAX, PyTorch and Google AI Edge. For deployment, platforms such as Vertex AI and Google Colab let developers get started with almost no additional configuration.

NVIDIA performance optimization: Gemma 3 is optimized to run well on NVIDIA hardware, from the entry-level Jetson Nano to the latest Blackwell chips. Its availability in the NVIDIA API Catalog further simplifies the optimization process.

Wide hardware support: Beyond NVIDIA, Gemma 3 runs on AMD GPUs through the ROCm stack and can even run on CPUs using Gemma.cpp, making it highly adaptable.

Developers who want to try it right away can access Gemma 3 models directly on platforms such as Hugging Face or Kaggle, or experiment with them in the browser through Google AI Studio.

02. Promoting Responsible AI Development

Google said: "We believe that open models require careful risk assessment, and our approach is to find a balance between innovation and safety."

The Gemma 3 development team applied rigorous governance practices, using fine-tuning and robust benchmark evaluations to ensure the model meets ethical standards.

Because the model's capabilities in STEM (science, technology, engineering and mathematics) have improved substantially, the team conducted targeted evaluations of its potential for misuse, such as helping to create harmful substances.

Google has called on the industry to work together on risk-proportionate safety approaches as models become increasingly powerful.

As part of this commitment, Google also launched ShieldGemma 2, a 4-billion-parameter image safety checker built on the Gemma 3 architecture. It outputs safety labels for categories such as dangerous content, sexually explicit material and violence. Developers can use it as an out-of-the-box solution or customize it for their specific safety needs.
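One common way to tailor any per-category safety classifier to an application's own policy is to apply custom thresholds to its scores. The sketch below assumes the classifier has already produced a probability-like score per category; the category keys, thresholds and scores are all hypothetical and do not reflect ShieldGemma 2's actual output format, defaults or API.

```python
from typing import Dict, List, Optional

# Hypothetical application-specific thresholds; not ShieldGemma 2 defaults.
THRESHOLDS: Dict[str, float] = {
    "dangerous_content": 0.5,
    "sexually_explicit": 0.3,
    "violence": 0.6,
}


def flagged_categories(scores: Dict[str, float],
                       thresholds: Optional[Dict[str, float]] = None) -> List[str]:
    """Return categories whose score meets or exceeds the app's threshold.

    Categories missing from `scores` are treated as not flagged.
    """
    limits = THRESHOLDS if thresholds is None else thresholds
    return [cat for cat, limit in limits.items()
            if scores.get(cat, 0.0) >= limit]


if __name__ == "__main__":
    # Made-up scores standing in for a classifier's output on one image.
    example = {"dangerous_content": 0.12, "sexually_explicit": 0.41, "violence": 0.05}
    print(flagged_categories(example))
```

Keeping the policy in a separate threshold table lets a children's app and an adult-content moderation queue share one classifier while enforcing different limits.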

The "Gemmaverse" is not only a technical ecosystem but also a community-driven movement. Projects such as AI Singapore's SEA-LION v3, INSAIT's BgGPT and Nexa AI's OmniAudio demonstrate the power of collaboration within it.

To support academic research, Google has also launched the Gemma 3 Academic Program, under which researchers can apply for $10,000 in Google Cloud credits to accelerate their AI research. Applications opened at launch and remain open for four weeks.

With its ease of use, powerful features, and wide compatibility, Gemma 3 has the potential to become a cornerstone of the AI development community.
