Source: PermaDAO

AO is an asynchronous communication network designed for on-chain AI. Combined with Arweave, it enables high-performance off-chain computing and permanent data storage. This article walks through the steps to run an AI process on AO. Although AO currently supports only small models, it is expected to support more complex computation in the future, and on-chain AI has broad prospects.

What is AI on AO?

AO is designed for on-chain AI

2023 has been called the first year of AI, with large models and AI applications emerging in rapid succession. AI is also a key area of development in Web3. However, the blockchain trilemma (the "impossible triangle") has kept on-chain computing expensive and congested, hindering the development of AI in Web3. AO has begun to improve this situation and shows great potential.

AO is designed as a message-driven asynchronous communication network. Based on the Storage Consensus Paradigm (SCP), AO runs on Arweave and achieves seamless integration with Arweave. In this innovative paradigm, storage (consensus) and computing are effectively separated, making off-chain computing and on-chain consensus possible.

High-performance computing: Smart contract computation is performed off-chain and is no longer bound by the on-chain block consensus process, which greatly expands computing performance. Processes on different nodes can compute in parallel and verify locally, without waiting for every node to repeat the computation and reach global consistency as in the traditional EVM architecture. As AO's data availability layer and consensus layer, Arweave permanently stores all instructions, intermediate states, and computation results. This makes high-performance computing (including GPU computing) possible.

Permanent data: This is what Arweave has always been committed to. A key part of AI training is collecting training data, which happens to be Arweave's strength. Data permanence of at least 200 years gives the AO + Arweave ecosystem a rich data set.

In addition, Sam, the founder of AO and Arweave, demonstrated the first AI process, based on aos-llama, at a press conference in June this year. To ensure performance, it uses WASM compiled from C instead of the Lua used previously.

The model used is Llama 2, which is open source on Hugging Face. The model file, about 2.2 GB, can be downloaded from Arweave.

Llama Land

Llama Land is a massively multiplayer online (MMO) game built on AO with AI at its core. It is also the first AI application in the AO + Arweave ecosystem. Its most important feature is that the issuance of Llama Coins is 100% controlled by AI: users pray to the Llama King and receive Llama Coins as a reward from the Llama King. In addition, the Llama Joker and Llama Oracle on the map are NPCs built on AI processes.

Now let's see how to run an AI process on AO.

AI Demo

1. Overall introduction

We use the AI service that Sam has deployed on AO to build our own AI application. The service consists of two parts: llama-herd and multiple llama-workers. llama-herd is responsible for dispatching and pricing AI tasks, while llama-worker is the process that actually runs the large model. Our AI application gets its AI capabilities by sending requests to llama-herd, paying a certain amount of wAR with each request.

Note: You may wonder why we don't run llama-worker ourselves. The AI module requires 15 GB of memory when instantiated as a process, and instantiating it ourselves fails with an out-of-memory error.

2. Create a process and recharge wAR

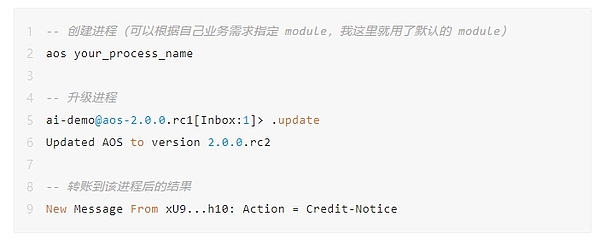

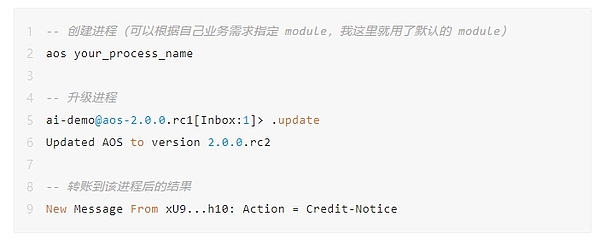

First, create a process and upgrade it to the latest version before performing subsequent operations. This avoids some errors and saves a lot of time.

Running the AI process requires a small amount of wAR. After the transfer succeeds through ArConnect, a message with Action = Credit-Notice appears in the process. Each AI execution consumes wAR, but not much. For demo purposes, transferring 0.001 wAR to the process is enough.

Note: wAR can be obtained through the AOX cross-chain bridge; the cross-chain transfer takes 3 to 30 minutes.

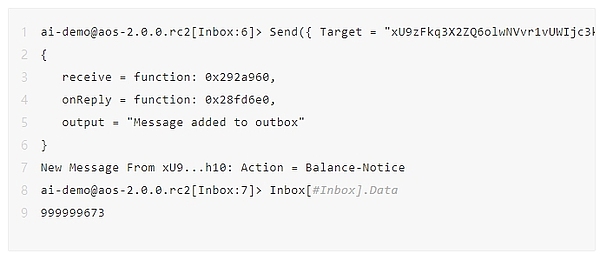

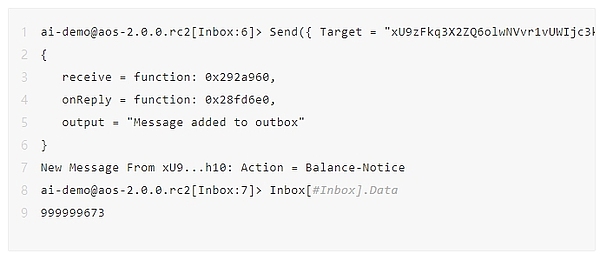

You can use the following command to view the wAR balance of the current process. Below is the wAR left after I executed the AI about 5 times. The amount consumed depends on the number of tokens and the real-time price of running the large model. If requests are congested, an additional fee is charged. (The appendix at the end of the article analyzes the fee calculation in detail based on the code.)

Note: The balance uses 12 decimal places, that is, 999999673 means 0.000999999673 wAR.
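
As a quick check of the conversion in the note, here is a minimal Python sketch. The helper name to_war and the constant WAR_DECIMALS are made up for illustration; only the 12-decimal denomination and the balance 999999673 come from the article. The "spent" figure assumes the process was funded with exactly 0.001 wAR, as suggested above.

```python
from decimal import Decimal

# 12 decimal places, as stated in the note above.
WAR_DECIMALS = 12

def to_war(base_units: int) -> Decimal:
    """Convert an integer balance in base units to wAR (hypothetical helper)."""
    return Decimal(base_units).scaleb(-WAR_DECIMALS)

remaining = to_war(999_999_673)
print(format(remaining, "f"))  # 0.000999999673

# Assumption: the process was funded with exactly 0.001 wAR (10**9 base units).
funded_units = 10**9
spent = to_war(funded_units - 999_999_673)
print(format(spent, "f"))  # 0.000000000327
```

Using Decimal rather than float keeps all 12 decimal places exact, which matters when the amounts are this small.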

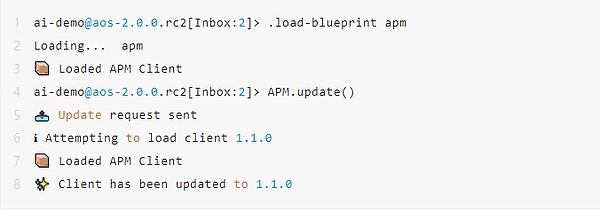

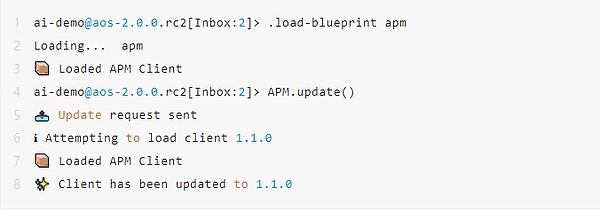

3. Install/update APM

APM stands for AO Package Manager. To build an AI process, you need to install the corresponding package through APM. Run the command shown; when the corresponding prompt appears, APM has been installed or updated successfully.

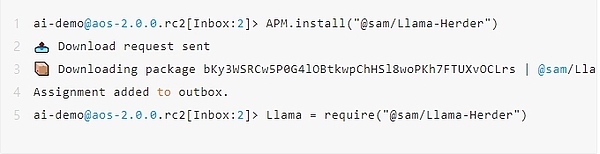

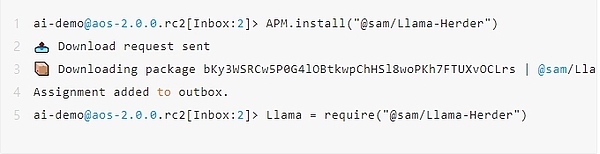

4. Install Llama Herder

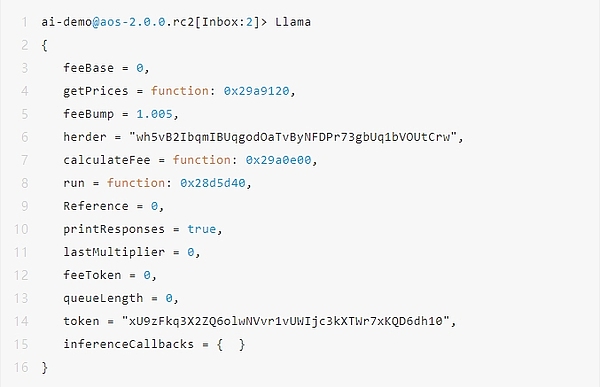

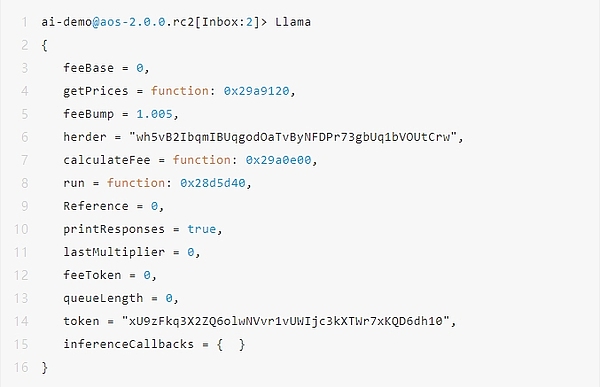

After execution, the process contains a Llama object, which you can inspect by entering Llama. If it is there, Llama Herder has been installed successfully.

Note: If the running process does not hold enough wAR, the Llama.run method cannot be executed and a Transfer Error appears. In that case, top up wAR as described in step 2.

5. Hello Llama

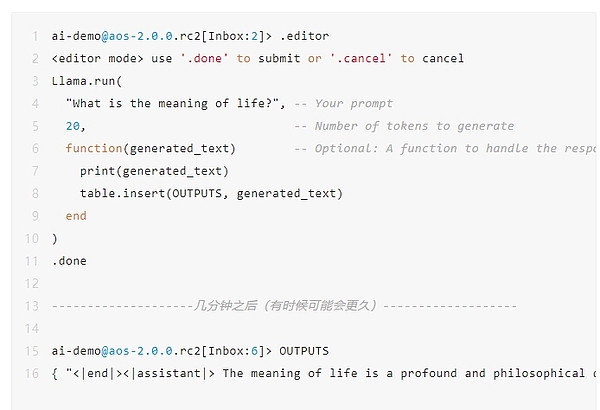

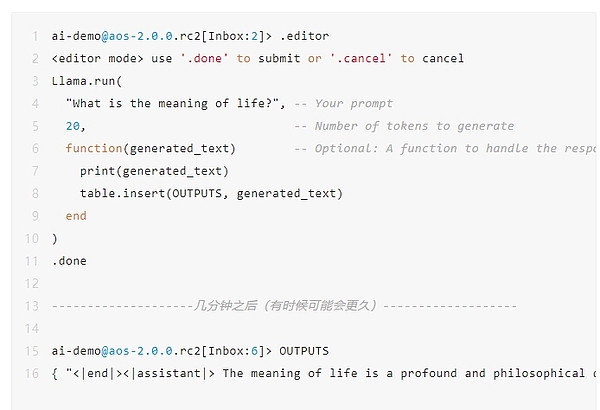

Next, let's try a simple interaction. Ask the AI process "What is the meaning of life?", limit generation to a maximum of 20 tokens, and store the result in OUTPUTS. The AI process takes several minutes to execute, and longer if other AI tasks are queued.

As shown in the returned output, the AI replies: "The meaning of life is a profound and philosophical question that has always fascinated humans."

6. Make a Llama Joker

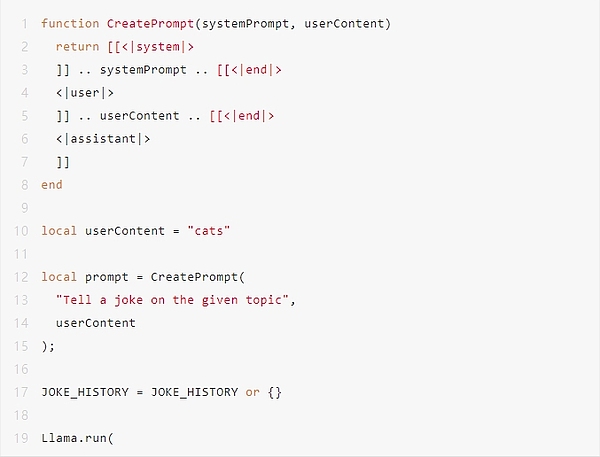

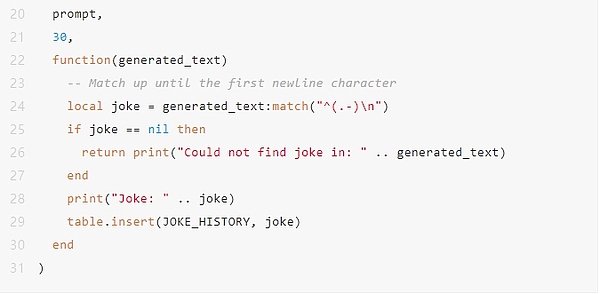

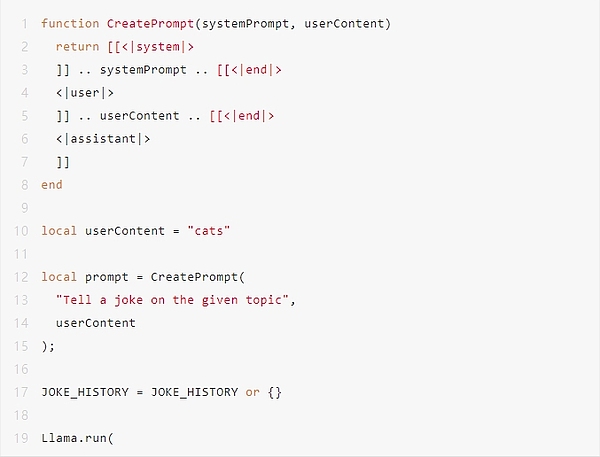

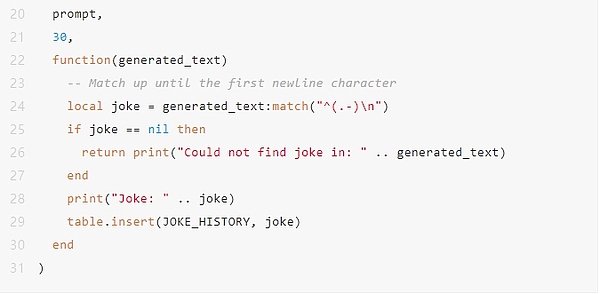

Going a step further, let's look at how Llama Joker is implemented. (Due to limited space, only the AI-related core code is shown.)

Building a Llama Joker is quite simple, similar to building a chatbot in a Web2 AI application.

First, use <|system|> / <|user|> / <|assistant|> to distinguish the roles in the constructed prompt.

Second, define the fixed part of the prompt. For Llama Joker, this is the <|system|> content: "Tell a joke on the given topic".

Finally, build the Llama Joker NPC to interact with the user. To save space, the variable local userContent = "cats" is defined directly here, meaning the user wants to hear a joke about cats.
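
The three steps can be sketched in Python as follows. This is only an illustration of the prompt layout, not the Llama Joker source: the function name build_prompt and the exact placement of newlines between role markers are assumptions, while the role markers, the system text, and the "cats" topic come from the article.

```python
# Fixed part of the prompt, taken from the article.
SYSTEM_PROMPT = "Tell a joke on the given topic"

def build_prompt(user_content: str) -> str:
    """Assemble a role-tagged prompt (hypothetical helper; layout is assumed)."""
    return (
        f"<|system|>\n{SYSTEM_PROMPT}\n"
        f"<|user|>\n{user_content}\n"
        "<|assistant|>\n"  # left open so the model continues as the assistant
    )

user_content = "cats"  # mirrors local userContent = "cats" in the article
prompt = build_prompt(user_content)
print(prompt)
```

Ending the prompt with the <|assistant|> marker is a common way to signal that the next generated tokens should be the assistant's reply, here the joke.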

Easy, isn't it?

More

On-chain AI was unimaginable before. Now it is possible to build highly polished AI-based applications on AO. The prospects are exciting and leave plenty of room for imagination.

For now, though, the limitations are also obvious. AO currently supports only "small language models" of about 2 GB and cannot yet use GPUs for computation. Fortunately, AO's architecture allows for solutions to these shortcomings, for example compiling a WASM virtual machine that can use GPUs.

I hope that AI will flourish on AO in the near future.

Appendix

As promised earlier, let's look at how the cost of Llama AI is calculated.

The following are the fields of the Llama object after initialization, with my understanding of the important ones.

M.herder: Stores the identifier or address of the Llama Herder service.

M.token: The token used to pay for AI services.

M.feeBase: Base fee, used to calculate the base value of the total fee.

M.feeToken: The fee corresponding to each token, used to calculate the additional fee based on the number of tokens in the request.

M.lastMultiplier: The multiplier factor of the last transaction fee, which may be used to adjust the current fee.

M.queueLength: The length of the current request queue, which affects the fee calculation.

M.feeBump: The fee increase factor, which is set to 1.005 by default, meaning a 0.5% increase each time.

The initial value of M.feeBase is 0.

The latest price information is requested from Llama Herder through the M.getPrices function.

M.feeBase, M.feeToken, M.lastMultiplier, and M.queueLength are all requested from M.herder and updated whenever an Info-Response message is received, so the price-related fields always hold the latest values.

Specific steps for calculating fees:

First, add M.feeBase to the product of M.feeToken and the number of tokens to get the initial fee.

Then multiply the initial fee by M.lastMultiplier.

Finally, if there is a request queue, multiply by M.feeBump (1.005) to get the final fee.
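
The steps above can be sketched in Python as follows. This is a reading of the steps as described here, not a port of the Llama Herder code: the function name estimate_fee and all example numbers are hypothetical, and the sketch assumes the bump is applied once whenever the queue is non-empty, since the description does not say whether it compounds per queued request. Any rounding the real code performs is not modelled.

```python
from decimal import Decimal

def estimate_fee(tokens, fee_base, fee_token, last_multiplier,
                 queue_length, fee_bump=Decimal("1.005")):
    """Estimate the fee in base units (hypothetical helper, see assumptions above)."""
    # Step 1: base fee plus the per-token fee times the number of tokens.
    fee = Decimal(fee_base) + Decimal(fee_token) * tokens
    # Step 2: scale by the multiplier last reported by the herder.
    fee *= Decimal(last_multiplier)
    # Step 3: apply the bump once if any requests are queued (assumption).
    if queue_length > 0:
        fee *= fee_bump
    return fee

# Illustrative values only; real values come from the Info-Response message.
print(estimate_fee(20, 100, 2, "1.5", queue_length=0))  # 210.0
print(estimate_fee(20, 100, 2, "1.5", queue_length=3))  # 211.0500
```

With these made-up inputs, a 20-token request costs 210 base units on an empty queue and 0.5% more when requests are waiting.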

Reference link

1. Model address on Arweave:

https://arweave.net/ISrbGzQot05rs_HKC08O_SmkipYQnqgB1yC3mjZZeEo

2. aos llama source code:

https://github.com/samcamwilliams/aos-llama

3. AOX cross-chain bridge:

https://aox.arweave.dev/

Weatherly
