AI's first step toward subverting humanity: reading the human heart

In the broad debate about AI, people cast it either as our most capable and efficient assistant or as the "machine army" that will subvert us. Friend or foe, AI must not only complete the tasks humans assign it but also be able to "read" people's hearts, and this mind-reading ability has become a major highlight of the AI field this year.

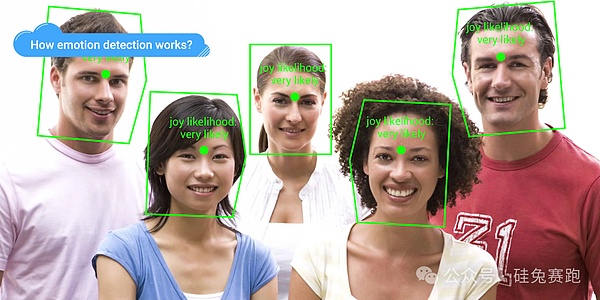

In the enterprise SaaS emerging-technology research report that PitchBook released this year, "emotion AI" stands out as a major technical highlight. The term refers to using affective computing and artificial intelligence to perceive, understand and interact with human emotions by analyzing text, facial expressions, voice and physiological signals. Put simply, emotion AI aims to let machines "read" emotions the way humans do, or even better than humans.

Its main technologies include:

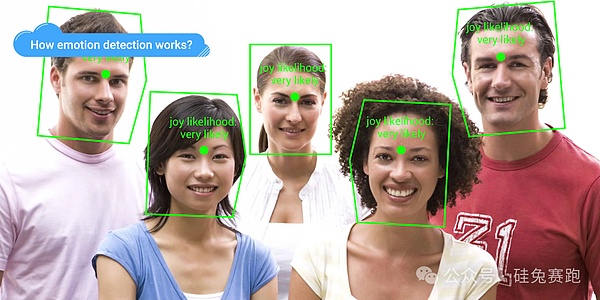

Facial expression analysis: Detect micro-expressions and facial muscle movements through cameras, computer vision and deep learning.

Voice analysis: Identify emotional states through voiceprints, intonation and rhythm.

Text analysis: Interpret the emotion expressed in sentences and their context with natural language processing (NLP).

Physiological signal monitoring: Use wearable devices to analyze heart rate, skin responses and similar signals, making interactions more personalized and emotionally rich.
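To make the text-analysis item concrete, here is a minimal sketch of the simplest kind of text sentiment scoring, a lexicon-based polarity classifier. The word list, scores and one-word negation rule are invented for illustration and are not taken from any vendor's product; production systems rely on large curated lexicons or trained NLP models.

```python
import re

# Tiny hypothetical polarity lexicon, for illustration only.
LEXICON = {
    "love": 2, "great": 2, "happy": 2, "thanks": 1, "good": 1,
    "bad": -1, "slow": -1, "angry": -2, "terrible": -2, "hate": -2,
}
# Hypothetical set of words that flip the polarity of the next word.
NEGATORS = {"not", "never", "no"}


def polarity(text: str) -> str:
    """Classify text as positive, negative or neutral by summing word scores."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        value = LEXICON.get(tok, 0)
        # Flip the sign when the previous word is a simple negator ("not good").
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    posts = [
        "I love this app, thanks!",
        "Support was not good and very slow.",
        "The update ships on Monday.",
    ]
    for post in posts:
        print(f"{post!r} -> {polarity(post)}")
```

The negation rule shows why even text-only analysis is harder than counting words: "not good" must score as negative, and sarcasm or context would defeat this sketch entirely.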

Emotion AI

The predecessor of emotion AI is sentiment analysis, which works mainly on text, for example extracting users' sentiment from their social media posts. With more advanced AI and the integration of multiple inputs such as vision and audio, emotion AI promises more accurate and complete emotion analysis.
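One common way to combine several input channels is late fusion: each modality produces its own emotion probabilities, and a weighted average yields the final estimate. The sketch below assumes that approach; the emotion labels, modality names, weights and probabilities are all hypothetical, and real emotion AI products may fuse signals quite differently (for example inside a single multimodal model).

```python
from typing import Dict, Mapping

# Hypothetical label set for this sketch.
EMOTIONS = ("joy", "anger", "sadness", "surprise", "neutral")


def late_fusion(per_modality: Mapping[str, Mapping[str, float]],
                weights: Mapping[str, float]) -> Dict[str, float]:
    """Combine per-modality emotion probabilities by weighted averaging.

    Absent modalities (e.g. no camera feed) simply do not appear in
    `per_modality`; the weights of the modalities present are renormalised,
    so the result sums to 1 whenever each input distribution does.
    """
    fused = {e: 0.0 for e in EMOTIONS}
    total_weight = 0.0
    for modality, probs in per_modality.items():
        w = weights.get(modality, 0.0)
        if w <= 0.0:
            continue  # ignore modalities without a positive weight
        total_weight += w
        for e in EMOTIONS:
            fused[e] += w * probs.get(e, 0.0)
    if total_weight == 0.0:
        raise ValueError("no modality with a positive weight")
    return {e: p / total_weight for e, p in fused.items()}


if __name__ == "__main__":
    weights = {"text": 0.3, "voice": 0.4, "face": 0.3}  # hypothetical weights
    readings = {  # hypothetical per-modality outputs
        "text": {"neutral": 0.6, "joy": 0.2, "anger": 0.2},
        "voice": {"anger": 0.7, "neutral": 0.2, "sadness": 0.1},
        "face": {"anger": 0.5, "neutral": 0.4, "surprise": 0.1},
    }
    fused = late_fusion(readings, weights)
    print(max(fused, key=fused.get), {e: round(p, 2) for e, p in fused.items()})
```

Run as a script, this reports anger as the dominant emotion: the text alone leans neutral, but voice and face both point toward anger, which is the kind of case where combining inputs is expected to help.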

VCs pour in money as startups land huge rounds

Silicon Rabbit has observed that the potential of emotion AI has caught the attention of many investors. Startups focused on the field, such as Uniphore and MorphCast, have already raised substantial funding.

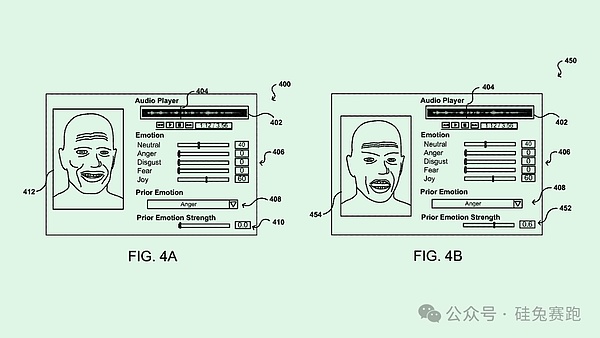

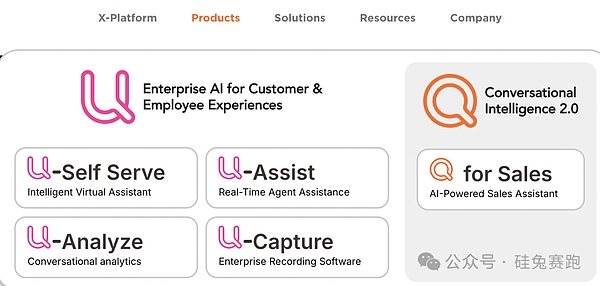

California-based Uniphore has been building automated conversational solutions for enterprises since 2008. Its product lines, including U-Self Serve, U-Assist, U-Capture and U-Analyze, combine voice, text, vision and emotion AI technologies to help customers hold more personalized and emotionally rich interactions. U-Self Serve focuses on accurately identifying emotions and tones in conversations, letting companies offer more personalized service and raise user engagement and satisfaction; U-Assist makes customer service agents more efficient through real-time guidance and workflow automation; U-Capture automates the collection and analysis of emotional data to give companies deep insight into customer needs and satisfaction; and U-Analyze helps customers spot key trends and emotional shifts across interactions, providing data-driven decision support that strengthens brand loyalty.

Uniphore's technology is not just about making machines understand language; the aim is for them to capture and interpret the emotions hidden behind tone and expression when they interact with people. This lets companies meet customers' emotional needs instead of merely responding mechanically. According to Uniphore, companies using its products can reach 87% user satisfaction and improve customer service performance by 30%.

Uniphore has raised more than $620 million to date. Its latest round, a $400 million Series E led by NEA in 2022, included existing investors such as March Capital and brought the company's post-money valuation to $2.5 billion.

Uniphore

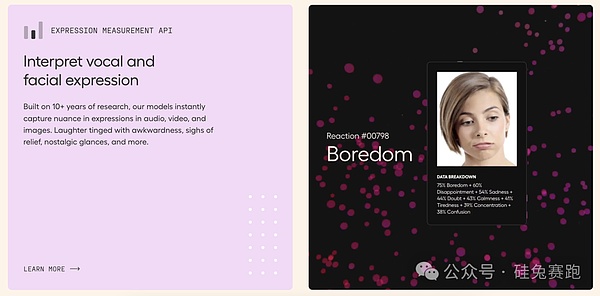

Hume AI has launched what it bills as the world's first empathic voice AI. The company was founded by former Google scientist Alan Cowen, known for pioneering semantic space theory, which seeks to understand emotional experience and expression by mapping the nuances of voice, face and gesture. Cowen's research has been published in journals such as Nature and Trends in Cognitive Sciences and draws on what are described as the most extensive and diverse emotional samples studied to date.

Building on this research, Hume developed the Empathic Voice Interface (EVI), a conversational voice API that combines large language models with empathy algorithms to understand and analyze human emotional states in depth. It can not only recognize emotions in speech but also respond to users in more nuanced and personalized ways. Hume says developers can add these capabilities to any application with just a few lines of code.

Hume AI

A major limitation of most current AI systems is that their instructions come mainly from humans, and these instructions and prompts are error-prone and fail to tap AI's full potential. Hume's empathic large language model (eLLM) adjusts its wording and tone to the context and to the user's emotional expression. By making human well-being the first principle for how it learns, adapts and interacts, it aims to give users a more natural and authentic experience in scenarios such as mental health, education and training, emergency calls and brand analysis.

Just this March, Hume AI closed a $50 million Series B led by EQT Ventures, with participation from Union Square Ventures, Nat Friedman & Daniel Gross, Metaplanet and Northwell Holdings.

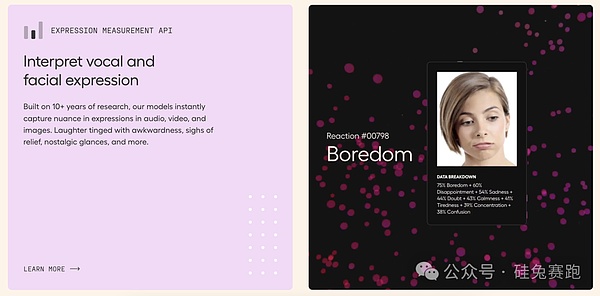

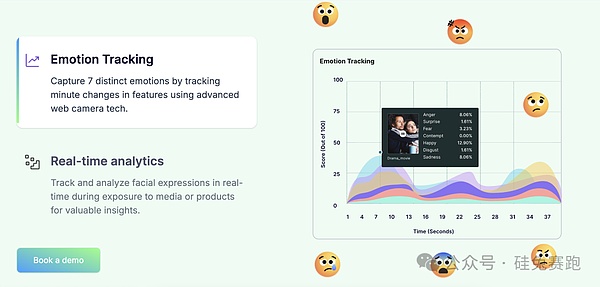

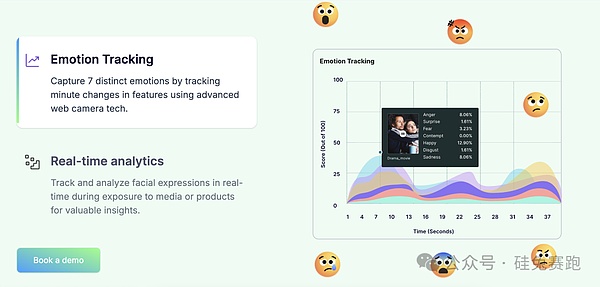

Also in this space is Entropik, which specializes in measuring consumers' cognitive and emotional responses. Its Decode product combines emotion AI, behavioral AI, generative AI and predictive AI to better understand consumer behavior and preferences and deliver more personalized marketing recommendations. Entropik completed a $25 million Series B in February 2023, with investors including SIG Venture Capital and Bessemer Venture Partners.

Entropik

Tech giants join the melee

Technology giants have also moved into emotion AI, each leveraging its own strengths.

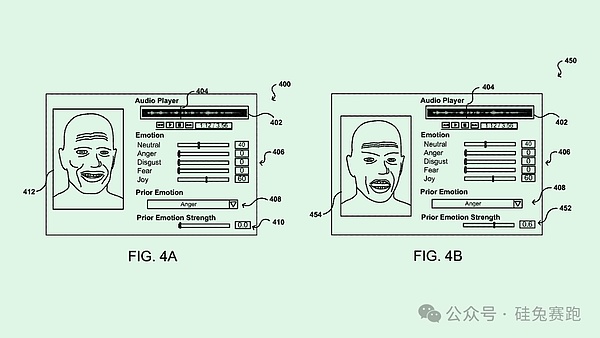

Microsoft Azure Cognitive Services offered an emotion API that analyzes facial expressions to identify joy, anger, sadness, surprise and other emotions in images and video (Microsoft has since retired emotion inference in its Azure Face service as part of its Responsible AI Standard);

IBM Watson Natural Language Understanding can process large volumes of text and identify the underlying sentiment (positive, negative or neutral) to interpret user intent more accurately;

Google Cloud's Cloud Vision API offers powerful image analysis: it can quickly detect emotional expressions on faces in images, and it also supports text recognition that can be combined with emotion analysis;

AWS's Rekognition can likewise detect emotions, identify facial features and track changes in expression, and it can be combined with other AWS services to build complete social media analysis or emotion-AI-driven marketing applications.
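As an illustration of how such cloud output might be consumed, the sketch below picks the dominant emotion for each face from a response shaped like Amazon Rekognition's DetectFaces output, in which each face carries an `Emotions` list of `Type`/`Confidence` pairs. The sample response is hand-written with invented values, and the `dominant_emotions` helper and its 50% threshold are hypothetical. AWS's own documentation notes that these labels describe a face's apparent expression, not the person's internal emotional state.

```python
from typing import Any, Dict, List, Optional, Tuple

# Hand-written example shaped like an Amazon Rekognition DetectFaces response
# requested with Attributes=["ALL"]. The confidence values are invented.
# With boto3 and AWS credentials, a real response would come from e.g.:
#   boto3.client("rekognition").detect_faces(
#       Image={"Bytes": image_bytes}, Attributes=["ALL"])
SAMPLE_RESPONSE: Dict[str, Any] = {
    "FaceDetails": [
        {"Emotions": [
            {"Type": "CALM", "Confidence": 12.5},
            {"Type": "HAPPY", "Confidence": 81.0},
            {"Type": "SURPRISED", "Confidence": 6.5},
        ]},
        {"Emotions": [
            {"Type": "SAD", "Confidence": 45.0},
            {"Type": "CALM", "Confidence": 40.0},
        ]},
    ]
}


def dominant_emotions(response: Dict[str, Any],
                      min_confidence: float = 50.0) -> List[Tuple[Optional[str], float]]:
    """Return (emotion, confidence) for each detected face.

    The emotion is None when the top label falls below `min_confidence`
    (a hypothetical threshold chosen for this sketch).
    """
    results: List[Tuple[Optional[str], float]] = []
    for face in response.get("FaceDetails", []):
        emotions = face.get("Emotions", [])
        if not emotions:
            results.append((None, 0.0))
            continue
        top = max(emotions, key=lambda e: e["Confidence"])
        label = top["Type"] if top["Confidence"] >= min_confidence else None
        results.append((label, top["Confidence"]))
    return results


if __name__ == "__main__":
    for i, (label, conf) in enumerate(dominant_emotions(SAMPLE_RESPONSE)):
        print(f"face {i}: {label or 'uncertain'} ({conf:.1f}%)")
```

Returning an explicit "uncertain" result instead of always reporting the top label is one simple way to avoid over-reading weak signals, such as the second face here.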

Cloud Vision API

Some startups are moving so fast in emotion AI that tech giants are trying to poach them. Unicorn Inflection AI, for example, won over its investor Microsoft with its AI team and models. After Microsoft, together with Bill Gates, Eric Schmidt, NVIDIA and others, invested $1.3 billion in Inflection AI, it extended an olive branch to Mustafa Suleyman, an AI leader and one of Inflection AI's co-founders. Suleyman then joined Microsoft along with more than 70 employees, and Microsoft paid nearly $650 million in the deal.

Inflection AI, however, quickly regrouped, forming a new team with backgrounds in Google Translate, AI consulting and AR, and kept working on its core product, Pi. Pi is a personal assistant designed to understand and respond to users' emotions. Unlike traditional AI assistants, Pi focuses on building an emotional connection with users, perceiving emotions from voice, text and other inputs and showing empathy in conversation. Inflection AI positions Pi as a coach, confidant, listener and creative partner rather than a simple AI assistant. Pi also has a strong memory function that retains a user's history across multiple conversations, making interactions more continuous and personalized.

Inflection AI Pi

The road ahead: attention and doubt coexist

Although emotion AI carries our hopes for a more humane way of interacting, its rollout, like that of every AI technology, draws both attention and doubt. First, can emotion AI really interpret human emotions accurately? In theory, the technology can enrich the experience of services, devices and technology; in practice, human emotions are inherently vague and subjective. As early as 2019, researchers questioned the technology, arguing that facial expressions do not reliably reflect people's true emotions. Relying solely on machines that mimic how humans read facial expressions, posture and tone of voice therefore has clear limitations.

Second, strict regulation has long been a stumbling block for AI. The EU AI Act, for example, prohibits computer vision emotion detection systems in settings such as education, which may limit the adoption of some emotion AI solutions; U.S. states such as Illinois have laws prohibiting the collection of biometric data without consent, which directly undercuts a precondition for some emotion AI technologies. Data privacy and protection is an even more important issue. Emotion AI is often used in fields such as education, health and insurance that impose especially strict data-privacy requirements, so keeping emotional data secure and using it lawfully is a problem every emotion AI company must address.

Finally, communicating and interpreting emotions across cultures and regions is hard even for people, and harder still for AI. Different regions understand and express emotions differently, which can affect the effectiveness and integrity of emotion AI systems. Emotion AI may also struggle considerably with racial, gender and gender-identity biases.

Emotion AI promises not only to cut labor costs and raise efficiency but also to offer the thoughtfulness of reading people's hearts. But can it really become a universal solution for human interaction, or will it end up like Siri, an intelligent assistant that performs mediocrely at tasks requiring genuine emotional understanding? Perhaps AI's "mind reading" will one day transform human-computer interaction, and even how people interact with each other, but for now, truly understanding and responding to human emotions may still require more human involvement and prudence.

References:

Uniphore Announces $400 Million Series E (Uniphore)

Hume AI Announces $50 Million Fundraise and Empathic Voice Interface (Yahoo Finance)

Introducing Pi, Your Personal AI (Inflection AI)

'Emotion AI' may be the next trend for business software, and that could be problematic (TechCrunch)

Emerging Tech Research: Enterprise SaaS Report (PitchBook)

Weatherly