Author: Wang Shu, Postdoctoral Fellow, Tencent Research Institute

As early as 2001, researchers pointed out that game artificial intelligence has great potential for achieving human-level artificial intelligence (human-level AI) [1]. As a starting point for AI research, games, with the complexity and diversity of their task scenarios, provide a foundation for artificial intelligence to approach human intelligence in breadth, depth, and flexibility.

Today, with the rapid development of generative AI and decision-making AI, games and artificial intelligence are increasingly evolving in resonance and symbiosis. At GDC 2024 (Game Developers Conference 2024), the industry's leading global conference, AI was a central focus: 64 talks took AI as their theme, accounting for 8% of all talks. In generative AI, 62% of game industry respondents report using AI tools to create game content [2]. In decision-making AI, the Google DeepMind team followed AlphaStar with SIMA (Scalable Instructable Multiworld Agent), a general-purpose game agent that can perform more than 600 tasks across various 3D game worlds by following human natural language instructions.

Technology Proving Ground: General AI Agent Practice Based on Game Environments

Games give decision-making AI clear yardsticks. Evaluating decision-making AI against a game's explicit, quantifiable rules addresses the shortage of suitable AI research scenarios and greatly speeds up technology iteration and testing. Most decision-making AI research teams today, including OpenAI and DeepMind, use games as training scenarios, aiming to build general-purpose agents across different types of games and, on that basis, general artificial intelligence.

On March 13, 2024, Google DeepMind released SIMA (Scalable Instructable Multiworld Agent), an AI agent that can understand a wide range of 3D game worlds and, like a human, follow natural language instructions to perform more than 600 tasks in them. Its strong natural language understanding and transfer learning capabilities have led many researchers to call SIMA the "ChatGPT moment" for agents.

In its technical report, DeepMind describes the basic principles and technical approach of SIMA, defining it as a scalable, instructable, general-purpose agent for multiple 3D virtual worlds. The team selected 9 popular 3D video games and 4 3D environments built with the Unity engine as SIMA's training environments, and collected a large amount of human gameplay and operation data from them to train the agent. During training, the agent continuously observes the image on the screen, associates it with the players' instructions and actions in the game, and produces output through the keyboard and mouse to control the in-game character [3].

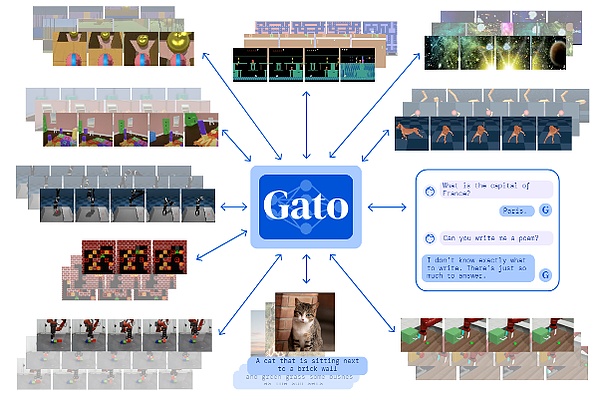

Figure 1 Overview of the SIMA Intelligent Agent Project

The SIMA project is an important milestone in DeepMind's research on artificial general intelligence (AGI). From AlphaGo and AlphaZero, which mastered Go, to AlphaStar for the game StarCraft II, to today's SIMA built on large language models, DeepMind has consistently used game environments to test and study general-purpose agents. In DeepMind's view, the decision-making and action capabilities that agents acquire in games may eventually transfer to real-world scenarios, offering new ideas and practices for developing general artificial intelligence.

Even before SIMA's release, the industry had several research projects on general-purpose game agents. Two of the most representative are Gato from DeepMind and MineDojo from NVIDIA.

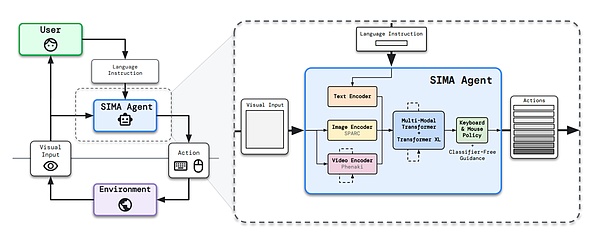

DeepMind released Gato in November 2022. It can play Atari games and control a real robot arm to stack blocks. Gato uses a GPT-like large-model architecture, and its training data span multimodal datasets including images, text, and robot-arm joint data [4]. In a study published in March 2023, Microsoft argued that large models such as Gato, which integrate multimodal information, are very likely to give rise to early forms of general intelligence [5].

Figure 2 Gato created by DeepMind

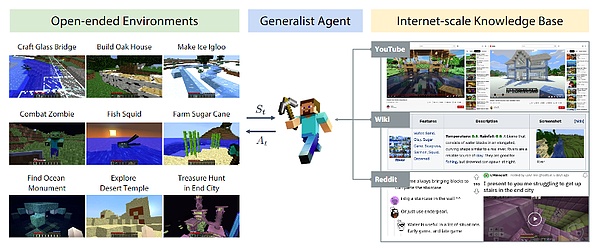

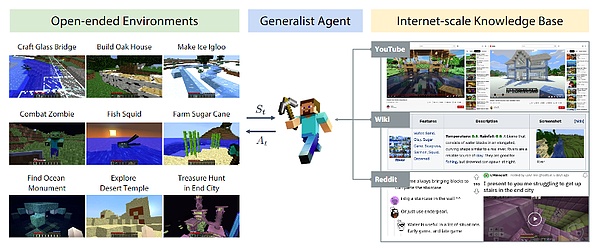

Similar in spirit to Gato is MineDojo, an agent built around the game Minecraft jointly by NVIDIA, Caltech, Stanford, and other research institutions. MineDojo uses Minecraft gameplay videos (YouTube), encyclopedia entries (Wiki), and community posts (Reddit) as training material to train a general agent that can complete a wide range of in-game tasks from text prompts. It can handle not only simple programmatic tasks but also creative tasks given only a short description, such as building a library [6].

Figure 3 MineDojo Capability Model

Gato and MineDojo represent two different approaches in AI research: solving enough tasks, or solving one sufficiently complex task. Both, however, have limitations. MineDojo is specialized for a single game: it can complete various tasks within Minecraft but cannot transfer what it learns to other games. Gato has some transfer learning ability, but it mainly operates in 2D games rather than 3D game environments, which differ substantially from real-world scenes.

Training general AI agents in game environments has now become an industry consensus. In his TED AI 2023 talk, NVIDIA senior scientist Jim Fan proposed the concept of a "foundation agent": in his view, the next frontier of AI research is an agent that generalizes across virtual and real worlds, masters a wide range of skills, controls many bodies, and adapts to multiple environments, and training such a model relies heavily on game environments [7]. In China, Tencent has taken the lead in building Kaiwu, an open research platform for multi-agent AI and complex decision-making. Drawing on the strengths of Tencent AI Lab and "Honor of Kings" in algorithms, computing power, and experimental scenarios, Kaiwu offers academic researchers and algorithm developers a leading platform in China for exploring applications.

New breakthrough in capabilities: SIMA achieves effective integration of large language models with AI agent training

The emergence of SIMA brings large language models together with agent training, marking a breakthrough in the decision-making ability and generalization of AI agents. SIMA can better understand a variety of 3D game environments and, like a human, carry out tasks in them according to natural language instructions. It also substantially outperforms other agents in decision-making efficiency and capability, and its decision-making resembles that of humans [8]. Demis Hassabis, DeepMind co-founder and CEO, said in an interview: "The field of combining large language models, AI agent training and game environments has great development prospects, and DeepMind will continue to increase its research investment in this field in the future." [9] Overall, SIMA's distinguishing features and breakthroughs compared with other agents lie mainly in three aspects.

First, SIMA is trained in game environments, but it focuses on whether the agent's behavior is consistent with the instructions it receives. In the DeepMind team's view, "games are an important testing ground for artificial intelligence (AI) systems. Like the real world, games are a rich learning environment with responsive real-time settings and ever-changing goals." Like earlier DeepMind game agents, SIMA learns from large amounts of human gameplay data during training. The difference is that SIMA is not trained to defeat human players or maximize game scores, but to follow natural language instructions issued by humans across game environments and act accordingly.

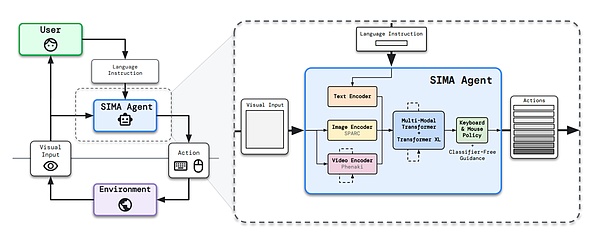

Second, SIMA combines a large language model with agent training and offers a unified, user-friendly interface. "Learning language and learning the environment complement each other. Learning natural language improves the agent's ability to understand general representations and abstract concepts, and improves learning efficiency." Unlike previous game-based agents, SIMA introduces a large language model into training. The whole training process follows a language-first principle, with every trained behavior driven directly by natural language. In other words, SIMA needs no access to the game's source code and no custom API. It needs only two inputs, the image on the screen and the user's natural language instruction, and then uses the keyboard and mouse to make the in-game character carry out that instruction. For interaction, SIMA exposes a unified, user-friendly interface through which humans can issue natural language instructions directly (as shown in Figure 4 below).
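To make the "screen pixels plus instruction in, keyboard and mouse out" interface concrete, here is a minimal Python sketch. It is not DeepMind's implementation: the `Observation` and `Action` schema, the key bindings (`"m"` for the map, `"w"` and `"space"` for climbing), and the keyword-matching `KeywordPolicy` are all hypothetical stand-ins for what, in SIMA, is a learned model. The point is only the shape of the interface: no game source code or game-specific API appears anywhere.

```python
from dataclasses import dataclass, field
from typing import List, Protocol


@dataclass
class Observation:
    """Hypothetical agent input: raw screen pixels plus a natural language instruction."""
    frame: List[List[int]]  # grayscale pixels, rows x columns
    instruction: str


@dataclass
class Action:
    """Hypothetical agent output: keyboard and mouse events only, no game API calls."""
    keys: List[str] = field(default_factory=list)
    mouse_dx: int = 0
    mouse_dy: int = 0
    click: bool = False


class Policy(Protocol):
    def act(self, obs: Observation) -> Action: ...


class KeywordPolicy:
    """Toy stand-in for a learned model: maps instruction keywords to input events.

    A real agent would also condition on obs.frame; this toy ignores the pixels.
    """

    def act(self, obs: Observation) -> Action:
        text = obs.instruction.lower()
        if "turn left" in text:
            return Action(mouse_dx=-50)         # pan the camera left
        if "map" in text:
            return Action(keys=["m"])           # assumed map hotkey
        if "climb" in text:
            return Action(keys=["w", "space"])  # assumed move-forward + jump
        return Action()                         # no-op when nothing matches


def run_episode(policy: Policy, frames: List[List[List[int]]], instruction: str) -> List[Action]:
    """Feed each screen frame, together with the same instruction, to the policy."""
    return [policy.act(Observation(frame, instruction)) for frame in frames]


if __name__ == "__main__":
    blank = [[0] * 4 for _ in range(3)]  # a tiny 3x4 placeholder frame
    for action in run_episode(KeywordPolicy(), [blank, blank], "Open the map"):
        print(action)
```

Keeping the policy behind a small `Policy` protocol means the toy keyword matcher could be swapped for a learned vision-language model without changing the episode loop or the input/output types.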

Figure 4 SIMA agent architecture

Third, SIMA generalizes well and maintains a high level of performance across different virtual scenes. According to data released so far by DeepMind, SIMA has been evaluated on 600 basic skills covering navigation (such as turning left), object interaction (such as climbing a ladder), and menu use (such as opening the map), and it performs better than comparable agents in multiple game environments. DeepMind researchers also assessed SIMA's ability to complete nearly 1,500 specific in-game tasks from instructions, some of them judged by human evaluators. The results show that SIMA substantially outperformed comparable agents in every game environment (as shown in Figure 5).
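Aggregating instruction-following evaluations like these largely comes down to counting successes per group. The Python sketch below computes success rates per environment or per skill category. The `TaskResult` record format, the environment names, and the toy values in the usage block are hypothetical illustrations, not SIMA's evaluation code or results.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class TaskResult:
    """One evaluated instruction (hypothetical record format)."""
    environment: str  # e.g. a game or research environment
    skill: str        # e.g. "navigation", "object interaction", "menu use"
    success: bool     # judged automatically or by a human rater


def success_rates(results: Iterable[TaskResult], by: str = "environment") -> Dict[str, float]:
    """Return the fraction of successful tasks for each value of the `by` field."""
    if by not in ("environment", "skill"):
        raise ValueError("by must be 'environment' or 'skill'")
    attempts: Dict[str, int] = defaultdict(int)
    successes: Dict[str, int] = defaultdict(int)
    for r in results:
        key = getattr(r, by)
        attempts[key] += 1
        successes[key] += int(r.success)
    return {key: successes[key] / attempts[key] for key in attempts}


if __name__ == "__main__":
    # Toy records for illustration only.
    toy = [
        TaskResult("env_a", "navigation", True),
        TaskResult("env_a", "menu use", False),
        TaskResult("env_b", "navigation", True),
        TaskResult("env_b", "object interaction", True),
    ]
    print(success_rates(toy))             # {'env_a': 0.5, 'env_b': 1.0}
    print(success_rates(toy, by="skill"))  # {'navigation': 1.0, 'menu use': 0.0, 'object interaction': 1.0}
```

Grouping the same records both ways shows whether an agent's weaknesses are tied to particular environments or to particular skill types.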

Figure 5 Performance comparison of multiple agents in different environments

New application scenarios: AI helps game creation and improves the efficiency of content creation

Games have become a testing ground and incubator for general AI agents and keep driving the iteration of decision-making AI. At the same time, as generative AI technologies such as Stable Diffusion and Transformer-based models mature, AI has begun to feed back into content creation for games and the broader cultural industry. More and more practitioners can generate digital assets such as images, text, audio, video, and NPCs at lower cost, improving development efficiency and further lowering the barrier to producing interactive content.

At the application level, generative AI models have become powerful assistants for game developers. According to the "2024 Unity Game Industry Report", 71% of game studios said that using AI improved their R&D and operational efficiency. These gains come not only from empowering individual content creators but also from reducing communication costs between workers at different stages of production.

On the production side, generative AI is already widely used in text generation, 2D art, code generation and checking, level design, and other stages. Before AI tools entered the game art workflow, a game artist needed about a week to finish a high-quality illustration; with generative AI tools such as Stable Diffusion, this can be shortened to about a day.

Figure 6 Illustration character drawing process based on AIGC tools

Generative AI also has great potential to reduce communication costs between different roles. During game production, especially when setting the tone and choosing an art style, communication between game designers and artists often takes a great deal of time. Generative AI tools help designers quickly visualize and present their ideas, greatly reducing these costs.

At the tool level, as generative AI raises development efficiency, game companies have begun integrating it into their own content production tools. In June 2023, chipmaker NVIDIA released NVIDIA ACE for Games, an AI tool platform that lets developers build and deploy customized AI models for speech, dialogue, and animation in games, greatly improving content production efficiency. At GDC 2024, NVIDIA and Inworld jointly unveiled Covert Protocol, a new digital human technology: NPCs built with it can interact with players in real time and generate gameplay on the fly based on those interactions [10].

Figure 7 NVIDIA released Covert Protocol technology demo

Game engine makers Unity and Unreal have also released new products based on generative AI. In July 2023, Unity released two AI-based products, Sentis and Muse, which reportedly can make traditional content creation up to ten times more efficient. Unreal has integrated a large number of AIGC tools into its engine, such as the digital human tool MetaHuman Creator, using AI to speed up high-quality character creation and large-scale scene generation.

Game developers have also fully embraced AI, building it into their content production tools to keep improving R&D efficiency. Tencent is one example: at GDC 2024, Tencent AI Lab launched "GiiNEX", its self-developed full-lifecycle game AI engine. Built on Tencent's in-house generative and decision-making AI models, the engine provides AIGC capabilities including 3D graphics, animation, cities, and music for AI-driven NPCs, scene production, content generation, and other areas. With GiiNEX, a city modeling task that originally took 5 days now takes only 25 minutes, a 100-fold increase in efficiency [11].

Figure 8 Architecture diagram of Tencent’s game AI engine GiiNEX

Conclusion

Since the Dartmouth Conference in 1956, early AI researchers have defined AI as "the intelligence that makes a machine react in the same way as a person acts" [12]. Nearly all subsequent AI research has followed the path of "simulating" human intelligence, trying to build systems that can hear, see, speak, think, learn, and act, and to strengthen their ability to perceive and understand the real world and make decisions.

AI research still follows this path and goal of simulating humans. If large generative AI models such as ChatGPT and Sora have improved AI's "perception" and "cognition" and completed the first step toward general artificial intelligence, then decision-making AI models, which learn to make appropriate "choices" in complex and diverse game environments, give AI the ability to "act" and to decide autonomously based on its own state and its environment, a crucial further step toward general artificial intelligence.

Although AI research still has a long way to go before achieving AGI, the combination of generative AI and decision-making AI has clearly opened up new possibilities, and games, as training grounds for AI, are playing an increasingly important role in AGI research. By combining large language models with AI agents, researchers have already built general-purpose game agents like SIMA, which can make effective decisions in a given environment, keep learning and adapting to unfamiliar environments, and complete complex tasks from natural language instructions, showing human-like intelligence. As training environments multiply, general-purpose game agents may come to understand more complex and sophisticated language instructions, and we can expect AI systems that are more flexible, more adaptable, and closer to human intelligence. We look forward to the day when general-purpose agents pass the test of the small world of games and move smoothly onto the broad stage of the real world, serving industries across human society.

Thanks to Cao Jianfeng, Liu Lin, Wang Peng and others for their guidance in the writing of this article!

JinseFinance
