Golden Web3.0 Daily | Trump nominates Fox host as Secretary of Defense

Golden Finance launches Golden Web3.0 Daily to provide you with the latest and fastest news on games, DeFi, DAO, NFT and Metaverse industries.

JinseFinance

Author: Mohit Pandit, IOSG Ventures

Abstract

The GPU shortage is real: supply is tight relative to demand, yet a large number of underutilized GPUs could be used to meet today's supply shortfall.

An incentive layer is needed to facilitate cloud computing participation and then ultimately coordinate the computing tasks for inference or training. The DePIN model fits exactly this purpose.

Because the supply side is incentivized, compute costs are lower, which makes the model attractive to the demand side.

Not everything is rosy: choosing a Web3 cloud means accepting certain trade-offs, such as latency. Compared with traditional GPU clouds, other trade-offs include insurance and service level agreements (SLAs).

The DePIN model has the potential to solve the GPU availability problem, but a fragmented market will not improve the situation. When demand is growing exponentially, fragmented supply is as good as no supply.

Given the number of new market players, market convergence is inevitable.

Introduction

We are on the edge of a new era of machine learning and artificial intelligence. While AI has been around in various forms for some time (in the broad sense, any computing device instructed to perform tasks that humans can do, such as a washing machine), we are now witnessing the emergence of sophisticated cognitive models capable of performing tasks that require intelligent human behavior. Notable examples include OpenAI’s GPT-4 and DALL-E 2, and Google’s Gemini.

In the rapidly growing field of artificial intelligence (AI), we must recognize the dual aspects of development: model training and inference. Inference includes the functions and outputs of an AI model, while training includes the complex process (including machine learning algorithms, data sets, and computing power) required to build intelligent models.

Taking GPT-4 as an example, all end users care about is inference: getting output from the model based on text input. However, the quality of this inference depends on model training. In order to train effective AI models, developers need access to comprehensive underlying data sets and huge computing power. These resources are mainly concentrated in the hands of industry giants including OpenAI, Google, Microsoft and AWS.

The formula is simple: better model training leads to stronger inference capabilities, which attract more users, which generate more revenue, which in turn funds further training.

These major players have access to large underlying data sets and, more critically, control large amounts of computing power, creating barriers to entry for emerging developers. As a result, new entrants often struggle to acquire sufficient data or leverage the necessary computing power at an economically feasible scale and cost. With this scenario in mind, we see that networks have great value in democratizing access to resources, primarily related to accessing computing resources at scale and reducing costs.

GPU supply issues

NVIDIA CEO Jensen Huang said at CES 2019 that "Moore's Law is over." Yet today's GPUs are extremely underutilized, even during deep learning training cycles.

Here are typical GPU utilization numbers for different workloads:

Idle (just booted into the Windows operating system): 0-2%

General productivity tasks (writing, simple browsing): 0-15%

Video playback: 15-35%

PC games: 25-95%

Graphic design/photo editing, active workload (Photoshop, Illustrator): 15-55%

Video editing (active): 15-55%

Video editing (rendering): 33-100%

3D rendering (CUDA/OptiX): 33-100% (often reported incorrectly by Windows Task Manager; measured with GPU-Z)

Most consumer devices with GPUs fall into the first three categories.

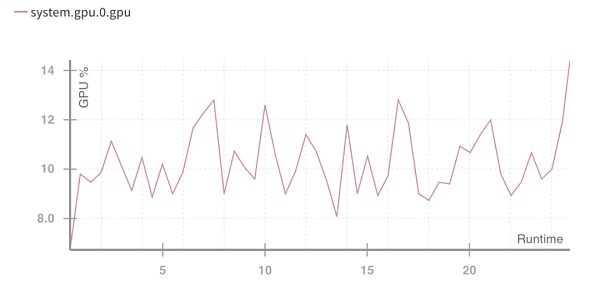

GPU runtime utilization %. Source: Weights and Biases

The above situation points to a problem: poor utilization of computing resources.

Consumer GPU capacity needs to be better utilized; even at its spikes, GPU utilization is sub-optimal. This points to two things to be done going forward:

Resource (GPU) aggregation

Parallelization of training tasks

In terms of the types of hardware that can be used, there are now 4 types for supply:

· Data center GPU (e.g., Nvidia A100s)

· Consumer GPU (e.g., Nvidia RTX3060)

· Custom ASICs (e.g., Coreweave IPU)

· Consumer SoCs (e.g., Apple M2)

Apart from ASICs (which are built for a specific purpose), the other hardware types can be pooled so that they are utilized most efficiently. With many of these chips in the hands of consumers and data centers, a converged supply-side DePIN model may be the way to go.

GPU production is a volume pyramid: consumer GPUs are produced in the largest volumes, while premium GPUs like NVIDIA A100s and H100s are produced in the smallest (but have higher performance). These advanced chips cost 15 times more to produce than consumer GPUs, but do not always deliver 15 times the performance.

The entire cloud computing market is worth approximately $483 billion today and is expected to grow at a compound annual growth rate of approximately 27% over the next few years. For 2023, ML compute demand is estimated at approximately 13 billion hours, which equates to approximately $56 billion in ML compute spending at current standard rates. This market is also growing rapidly, doubling every 3 months.
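As a quick sanity check on the figures above, the average price per compute hour they imply can be worked out directly. This is a minimal sketch; the function name `implied_hourly_rate` is an assumption for illustration, and the inputs are the approximate figures quoted in the text.

```python
# Approximate figures quoted in the text.
ML_COMPUTE_HOURS = 13e9       # ~13 billion hours of ML compute demand in 2023
ML_COMPUTE_SPEND_USD = 56e9   # ~$56 billion of spending at standard rates


def implied_hourly_rate(total_spend_usd: float, total_hours: float) -> float:
    """Average price per compute hour implied by total spend and total hours."""
    if total_hours <= 0:
        raise ValueError("total_hours must be positive")
    return total_spend_usd / total_hours


rate = implied_hourly_rate(ML_COMPUTE_SPEND_USD, ML_COMPUTE_HOURS)
print(f"Implied average rate: ${rate:.2f} per compute hour")
```

The two figures together imply a blended rate of roughly $4.31 per compute hour.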

GPU demand

Computing demand mainly comes from AI developers (researchers and engineers). Their main needs are: price (low-cost computing), scale (large amounts of GPU computing), and user experience (ease of access and use). Over the past two years, GPUs have been in huge demand due to the increased demand for AI-based applications and the development of ML models. Developing and running ML models requires:

A lot of computation (from access to multiple GPUs or data centers)

The ability to perform model training, fine-tuning and inference, with each task deployed on a large number of GPUs for parallel execution

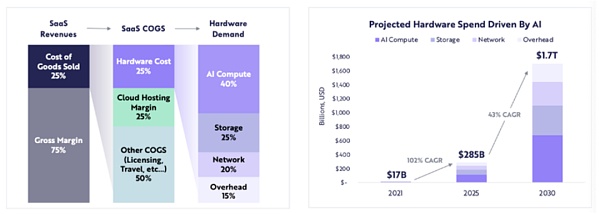

Computing-related hardware spending is expected to grow from $17 billion in 2021 to $285 billion in 2025 (about 102% compound annual growth rate), ARK expects computing-related hardware spending to reach $1.7 trillion by 2030 (43% compound annual growth rate).

ARK Research
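The growth rates quoted above can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes a 4-year span for 2021 to 2025 and a 5-year span for 2025 to 2030; the helper name `cagr` is illustrative.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text.
cagr_2021_2025 = cagr(17e9, 285e9, years=4)    # $17B -> $285B
cagr_2025_2030 = cagr(285e9, 1.7e12, years=5)  # $285B -> $1.7T

print(f"2021-2025: {cagr_2021_2025:.0%}")
print(f"2025-2030: {cagr_2025_2030:.0%}")
```

Both results are consistent with the roughly 102% and 43% figures in the text.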

With a large number of LLMs in the innovation stage, and with competition driving the computational need for more parameters as well as retraining, we can expect continued demand for high-quality compute over the next few years.

As the supply of new GPUs tightens, where does blockchain come into play?

When resources are insufficient, the DePIN model will provide help:

Bootstrap the supply side and create a large pool of supply

Coordinate and complete tasks

Ensure tasks are completed correctly

Properly reward providers for getting work done

Aggregating any type of GPU (consumer, enterprise, high performance, etc.) may cause problems with utilization. When computing tasks are divided, the A100 chip is not supposed to perform simple calculations. GPU networks need to decide what types of GPUs they think should be included in the network, based on their go-to-market strategy.

When the computing resources themselves are dispersed (sometimes globally), the user or the protocol itself must choose which computing framework to use. Providers like io.net let users choose from 3 options: Ray, Mega-Ray, or a Kubernetes cluster that performs computing tasks in containers. There are other distributed computing frameworks, such as Apache Spark, but Ray is the most commonly used. Once the selected GPUs complete the computational task, the outputs are reconstructed to give the trained model.
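The decompose, compute, and reconstruct flow described above can be sketched in a few lines. This is a minimal illustration that uses Python's standard-library thread pool as a stand-in for a distributed framework; it does not use Ray or Kubernetes, and the function names (`split_into_shards`, `worker_task`, `run_distributed`) and the toy workload are assumptions made for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_shards(items, num_shards):
    """Divide the workload into contiguous, roughly equal shards."""
    if not items:
        return []
    shard_size = -(-len(items) // num_shards)  # ceiling division
    return [items[i:i + shard_size] for i in range(0, len(items), shard_size)]


def worker_task(shard):
    """Hypothetical unit of work for one GPU: here, a partial sum of squares."""
    return sum(x * x for x in shard)


def run_distributed(items, num_workers):
    """Decompose the job, run shards in parallel, then reconstruct the output."""
    shards = split_into_shards(items, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_results = list(pool.map(worker_task, shards))
    # Reconstruction step: combine the partial outputs into the final result.
    return sum(partial_results)


data = list(range(1_000))
result = run_distributed(data, num_workers=4)
print(result)
```

In a real network the shards would travel over the wire to remote GPUs, which is where the latency discussed in this article comes from.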

A well-designed token model will subsidize computing costs for GPU providers, and many developers (demand parties) will find such a solution more attractive. Distributed computing systems inherently have latency. There is computational decomposition and output reconstruction. So developers need to make a trade-off between the cost-effectiveness of training a model and the time required.
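The cost-versus-time trade-off can be made concrete with a toy model. Everything numeric below is hypothetical: the hourly rates, the slowdown factor, and the job size are placeholders chosen only to show the shape of the comparison, and `TrainingOption` and `estimate` are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class TrainingOption:
    name: str
    hourly_rate_usd: float   # price per GPU-hour (hypothetical)
    slowdown_factor: float   # 1.0 = baseline wall-clock time; above 1.0 adds latency overhead


def estimate(option: TrainingOption, baseline_hours: float, num_gpus: int):
    """Return (wall_clock_hours, total_cost_usd) for one training run."""
    hours = baseline_hours * option.slowdown_factor
    cost = hours * num_gpus * option.hourly_rate_usd
    return hours, cost


# Hypothetical options, not quoted prices.
centralized = TrainingOption("centralized cloud", hourly_rate_usd=2.00, slowdown_factor=1.0)
distributed = TrainingOption("distributed network", hourly_rate_usd=0.80, slowdown_factor=1.5)

for option in (centralized, distributed):
    hours, cost = estimate(option, baseline_hours=100, num_gpus=8)
    print(f"{option.name}: {hours:.0f} h, ${cost:,.0f}")
```

Under these assumed numbers the distributed run takes 50% longer but costs less overall; whether that is acceptable depends on how much the developer values time to result.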

Does a distributed computing system need its own chain?

The network can operate in one of two ways:

Charged by task (or compute cycle)

Charged by time unit

The first method can be built as a proof-of-work chain, similar to what Gensyn is attempting, in which different GPUs share the "work" and get rewarded for it. For a more trustless model, they have the concept of verifiers and whistleblowers, who are rewarded for maintaining the integrity of the system, based on the proofs generated by the solvers.
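The general idea of checking a solver's work can be illustrated with a simple recomputation spot check. This is a deliberately simplified sketch and not Gensyn's actual protocol: the names `reference_computation` and `verify_by_spot_check`, the toy workload, and the sampling strategy are all assumptions for illustration.

```python
import random


def reference_computation(task_input: int) -> int:
    """Hypothetical deterministic task that a solver is paid to compute."""
    return task_input ** 2


def verify_by_spot_check(inputs, claimed_outputs, sample_size, rng):
    """Recompute a random sample of tasks and compare with the solver's claims."""
    if len(inputs) != len(claimed_outputs):
        return False
    sample_size = min(sample_size, len(inputs))
    indices = rng.sample(range(len(inputs)), k=sample_size)
    return all(reference_computation(inputs[i]) == claimed_outputs[i] for i in indices)


rng = random.Random(0)
inputs = list(range(100))

honest_outputs = [reference_computation(x) for x in inputs]
tampered_outputs = list(honest_outputs)
tampered_outputs[42] += 1  # a single incorrect result

print(verify_by_spot_check(inputs, honest_outputs, sample_size=10, rng=rng))
# Checking every task is guaranteed to catch the tampered result.
print(verify_by_spot_check(inputs, tampered_outputs, sample_size=len(inputs), rng=rng))
```

Sampling fewer tasks is cheaper but only catches cheating probabilistically, which is one reason such systems pair verification with economic incentives.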

Another proof-of-work system is Exabits, which instead of task partitioning treats its entire GPU network as a single supercomputer. This model seems to be more suitable for large LLMs.

Akash Network has added GPU support and begun to aggregate GPUs into this field. They have an underlying L1 to agree on status (showing the work done by the GPU provider), a marketplace layer, and a container orchestration system like Kubernetes or Docker Swarm to manage the deployment and scaling of user applications.

If a system needs to be trustless, the proof-of-work chain model will be most effective, because it ensures the coordination and integrity of the protocol.

On the other hand, systems like io.net do not structure themselves as a chain. They chose to address the core issue of GPU availability and charge customers per time unit (per hour). They don't need a verifiability layer because customers essentially "rent" the GPU and use it as they please for a specific lease period. The protocol itself does not divide tasks; that is done by developers using open source frameworks like Ray, Mega-Ray or Kubernetes.
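The two charging models discussed in this section can be compared with a small sketch. All prices and quantities are hypothetical, and the function names are illustrative. The third helper shows one consequence of time-based billing: the renter pays for the lease whether or not the GPUs are busy.

```python
def per_task_charge(tasks_completed: int, price_per_task_usd: float) -> float:
    """Task-based billing: pay only for completed (and verified) work."""
    return tasks_completed * price_per_task_usd


def per_time_charge(num_gpus: int, lease_hours: float, hourly_rate_usd: float) -> float:
    """Time-based billing: pay for the lease period regardless of usage."""
    return num_gpus * lease_hours * hourly_rate_usd


def cost_per_useful_gpu_hour(hourly_rate_usd: float, utilization: float) -> float:
    """Effective price of one busy GPU-hour when part of the lease sits idle."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_rate_usd / utilization


# Hypothetical numbers for illustration only.
print(per_task_charge(tasks_completed=200, price_per_task_usd=0.25))
print(per_time_charge(num_gpus=4, lease_hours=10, hourly_rate_usd=1.00))
print(cost_per_useful_gpu_hour(hourly_rate_usd=1.00, utilization=0.5))
```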

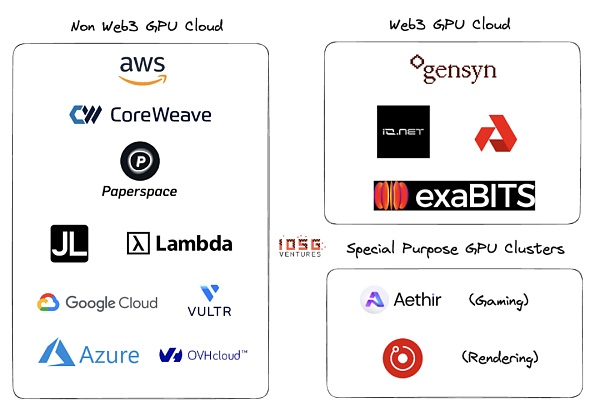

Web2 and Web3 GPU Cloud

There are many Web2 players in the GPU cloud, or GPU-as-a-service, space. Major players include AWS, CoreWeave, PaperSpace, Jarvis Labs, Lambda Labs, Google Cloud, Microsoft Azure and OVH Cloud.

This is a traditional cloud business model. Customers can rent a GPU (or multiple GPUs) by a unit of time (usually one hour) when they need computing. There are many different solutions for different use cases.

The main difference between Web2 and Web3 GPU clouds is the following parameters:

1. Cloud setup cost

Due to token incentives, the cost of setting up a GPU cloud is significantly reduced. OpenAI is raising $1 trillion for the production of computing chips. It appears that without token incentives, it would take at least $1 trillion to defeat the market leader.

2. Computation time

A non-Web3 GPU cloud will be faster because the rented GPU cluster is located within one geographical area, while the Web3 model may have a more widely distributed system, with latency arising from inefficient problem partitioning, load balancing, and most importantly bandwidth.

3. Computational cost

Due to token incentives, the cost of Web3 computing will be significantly lower than that of existing Web2 models.

Computational cost comparison:

These numbers may change as more supply and utilization clusters provide these GPUs. Gensyn claims to offer A100s (and their equivalents) for as low as $0.55 per hour, and Exabits promises a similar cost-savings structure.
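To see what a price like $0.55 per hour means in practice, it helps to compute the saving against a conventional rate and the slowdown at which the saving disappears. The $0.55 figure is the one quoted above; the Web2 rate of $2.00 is a hypothetical placeholder, not a quoted price, and the function names are illustrative.

```python
WEB3_A100_RATE_USD = 0.55  # claimed lower bound quoted in the text
WEB2_A100_RATE_USD = 2.00  # hypothetical placeholder, not a quoted price


def savings_fraction(web2_rate: float, web3_rate: float) -> float:
    """Fraction saved per GPU-hour by using the cheaper rate."""
    return 1 - web3_rate / web2_rate


def break_even_slowdown(web2_rate: float, web3_rate: float) -> float:
    """How many times slower the cheaper option can be before total cost is equal."""
    return web2_rate / web3_rate


saving = savings_fraction(WEB2_A100_RATE_USD, WEB3_A100_RATE_USD)
slowdown = break_even_slowdown(WEB2_A100_RATE_USD, WEB3_A100_RATE_USD)
print(f"Saving per GPU-hour: {saving:.1%}")
print(f"Break-even slowdown: {slowdown:.2f}x")
```

Under the assumed Web2 rate, a distributed job would need to run more than about 3.6 times slower before it cost as much as the centralized one.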

4. Compliance

In permissionless systems, compliance is not easy. However, Web3 systems like io.net and Gensyn do not position themselves as permissionless systems. Compliance requirements such as GDPR and HIPAA are handled during the GPU onboarding, data loading, data sharing and result sharing stages.

Ecosystem

Gensyn, io.net, Exabits, Akash

Risk

1. Demand risk

I think the top LLM players will either continue to accumulate GPUs or use GPU clusters like NVIDIA's Selene supercomputer, which has a peak performance of 2.8 exaFLOP/s. They won't rely on consumers or long-tail cloud providers to pool GPUs. Currently, top AI organizations compete on quality more than on cost.

Organizations with lighter ML models will seek cheaper computing resources, such as blockchain-based, token-incentivized GPU clusters that provide services while optimizing the use of existing GPUs. (This assumes that those organizations prefer to train their own models rather than use an existing LLM.)

2. Supply risk

With massive amounts of capital being poured into ASIC research, and inventions like the Tensor Processing Unit (TPU), this GPU supply issue may go away on its own. If these ASICs can offer good performance:cost trade-offs, then existing GPUs hoarded by large AI organizations may return to the market.

Do blockchain-based GPU clusters solve a long-term problem? Although blockchains can support any chip, not just GPUs, what the demand side does will completely determine the direction of projects in this field.

Conclusion

Fragmented networks with small GPU clusters won't solve the problem. There is no place for "long tail" GPU clusters. GPU providers (retail or smaller cloud players) will gravitate toward larger networks because the incentives there are better. Which networks win will be a function of a good token model and the ability of the supply side to support multiple computing types.

GPU clusters may see a similar convergence fate as CDNs. If the big players are to compete with existing leaders like AWS, they might start sharing resources to reduce network latency and improve the geographic proximity of nodes.

If the demand side grows larger (more models need to be trained, with more parameters), Web3 players must be very active in business development. If too many clusters compete for the same customer base, supply will be fragmented (which invalidates the entire concept) while demand (measured in TFLOPs) grows exponentially.

Io.net has stood out from many competitors and started with an aggregator model. They have aggregated GPUs from Render Network and Filecoin miners to provide capacity, while also bootstrapping supply on their own platform. This could be the winning direction for DePIN GPU clusters.

Part.2 IOSG post-investment project progress

EigenLayer TVL breaks $10 billion

* Restaking

According to the official website of the Ethereum restaking protocol EigenLayer, EigenLayer's TVL is approximately 2.93 million ETH, worth approximately US$10.05 billion.
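The two TVL figures above imply a particular ETH price, which is a useful consistency check when reading such reports. A minimal sketch, using only the approximate numbers quoted in the text (the function name is illustrative):

```python
TVL_ETH = 2.93e6    # ~2.93 million ETH, as quoted
TVL_USD = 10.05e9   # ~US$10.05 billion, as quoted


def implied_unit_price(total_value_usd: float, total_units: float) -> float:
    """Price per unit implied by a total USD value and a unit count."""
    if total_units <= 0:
        raise ValueError("total_units must be positive")
    return total_value_usd / total_units


eth_price = implied_unit_price(TVL_USD, TVL_ETH)
print(f"Implied ETH price: ${eth_price:,.0f}")
```

The result is about $3,430 per ETH at the time of the report.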

OKX Web3 wallet has become the official designated partner wallet for the Babylon testnet staking event

* Staking

OKX Web3 wallet has become the official designated partner wallet for the Babylon testnet staking event. From February 28 to March 6, 2024, the first 100,000 users who participate in the Babylon testnet staking event through the OKX Web3 wallet plug-in will be eligible for the Pioneer Pass whitelist for a limited time. Users who have obtained whitelist eligibility can mint in the Drops section of the OKX Web3 wallet NFT market from March 7, 2024.

It is reported that Babylon is the first trustless Bitcoin staking protocol, unlocking 21 million bitcoins to secure the decentralized economy. OKX Web3 Wallet is an industry-leading one-stop Web3 portal that now supports 85+ public chains. Its app, browser plug-in, and web versions are unified and cover 5 major sections: wallet, DEX, DeFi, NFT market, and DApp exploration. It also supports the Ordinals market, MPC and AA smart contract wallets, gas exchange, hardware wallet connections, and more.

StarkWare launches new cryptographic prover "Stwo" to increase transaction speed and reduce fees

* Layer2

Ethereum Layer2 developer StarkWare announced Thursday at ETHDenver that it is building a new cryptographic prover called Stwo. The StarkWare team says that with faster provers, the cost of processing transactions should be lower, which will actually lower fees for users and speed up transactions.

Last August, StarkWare open sourced its existing prover (called Stone). According to a press release, the new prover "Stwo" is named after the combination of Stone and Two, and anyone can run it and inspect its codebase. StarkWare said that the Starknet application chain currently using Stone will eventually benefit from the Stwo prover.

A week ago, StarkWare and Polygon announced Circle STARKs, a new type of cryptographic proof designed to make zero-knowledge rollup (ZK-rollup) transactions faster and cheaper.

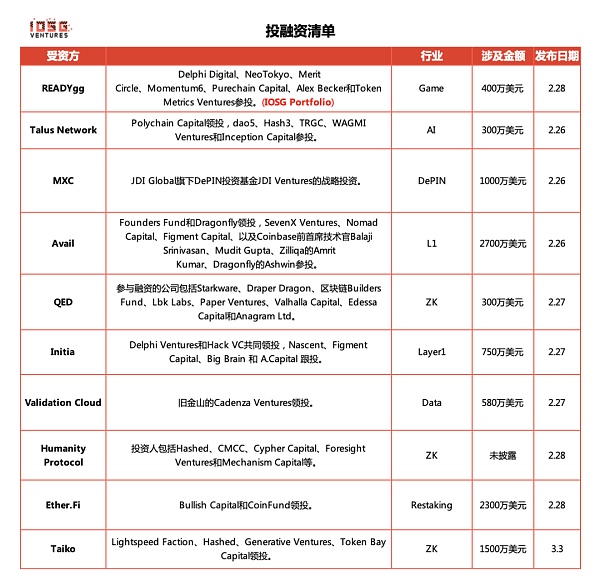

READYgg, a blockchain gaming infrastructure startup, completed US$4 million in financing, with participation from Delphi Digital and others

* Game

Blockchain gaming infrastructure startup READYgg announced the completion of a new US$4 million financing round, with participation from Delphi Digital, NeoTokyo, Merit Circle, Momentum6, Purechain Capital, Alex Becker and Token Metrics Ventures. The valuation for this round was not disclosed. READYgg mainly helps developers implement NFTs, crypto wallets and other Web3 technology tools in games, while "advocating community-centered games to empower players." In addition, former PlayStation executive Shawn Layden announced that he has joined the company. It is reported that Shawn Layden also served as a consultant at the technology giant Tencent.

Ethereum expansion project Taiko completed $15 million in Series A financing

* ZK

Ethereum scaling project Taiko completed $15 million in Series A financing. Lightspeed Faction, Hashed, Generative Ventures, and Token Bay Capital led the investment, with participation from Wintermute Ventures, Presto Labs, Flow Traders, Amber Group, OKX Ventures, GSR, and WW Ventures. Taiko has raised a total of US$37 million across three rounds of financing. Taiko is a decentralized Type-1 ZK-EVM that plans to launch its mainnet at the end of the first quarter of this year.

Avalanche will activate the Durango upgrade on the mainnet in the early morning of March 7

* Layer1

The Avalanche interoperability era is scheduled to begin at 0:00 Beijing time on March 7, and the production code for the proposed Durango upgrade ([email protected]) has been released. If enough stake supports Durango, it will be activated on the mainnet at 0:00 Beijing time on March 7. In addition, Avalanche Korea issued an announcement today confirming that the Durango upgrade is expected to activate at 0:00 Beijing time on March 7.

It is reported that the Durango upgrade will introduce Avalanche Warp Messaging (AWM) to the C-Chain, enabling native cross-chain communication for every EVM chain in the Avalanche ecosystem and establishing a standard for future virtual machines (VMs) to use AWM.

Part.3 Investment and Financing Events

Talus Network, a blockchain platform focusing on AI, completed US$3 million in financing, led by Polychain Capital

* Layer1

Talus Network, a blockchain platform focusing on decentralized artificial intelligence, completed a US$3 million first round of financing led by Polychain Capital, with participation from dao5, Hash3, TRGC, WAGMI Ventures and Inception Capital. In addition, angel investors from major technology and blockchain companies such as NVIDIA, IBM, Blue7, Symbolic Capital and Render Network also contributed to support the team's mission to democratize artificial intelligence on the blockchain.

Arbitrum’s DePIN dedicated chain MXC has completed a US$10 million financing led by JDI

* DePIN

MXC announced that it has received strategic investment from JDI Ventures, a DePIN investment fund under JDI Global, with an investment amount of US$10 million. Since its establishment in Berlin, Germany in 2017, MXC has focused on developing the decentralized IoT space and has launched the first Layer3 zkEVM solution in Arbitrum. The technical team is led by former zkSync core members and aims to become the DePIN infrastructure in the Ethereum ecosystem. JDI Global will provide funding, hardware R&D and market influence support to accelerate the construction of the DePIN ecosystem.

Modular blockchain Avail completed a $27 million seed round of financing, led by Founders Fund and Dragonfly

* DA

Venture capital firms Founders Fund and Dragonfly led the investment, with participation from SevenX Ventures, Nomad Capital, Figment Capital, and former Coinbase CTO Balaji Srinivasan, Mudit Gupta, Zilliqa’s Amrit Kumar, and Dragonfly’s Ashwin. Avail, which was spun out of Polygon in March 2023 and is led by Polygon co-founder Anurag Arjun, will use the seed funding to develop three core products: its data availability solution (DA), Nexus and Fusion.

Avail DA, the first core component, provides data space for auxiliary "Layer2 networks" or "rollups", which aim to process transactions faster and more cheaply than base blockchains such as Ethereum. The new DA product is expected to go live early in the second quarter of 2024.

ZK native blockchain protocol QED completed US$3 million in financing, led by Arrington Capital

* ZK

ZK-native blockchain protocol QED today announced it has raised $3 million in a round of funding led by Arrington Capital, with participation from companies including Starkware, Draper Dragon, Blockchain Builders Fund, Lbk Labs, Paper Ventures, Valhalla Capital, Edessa Capital and Anagram Ltd. QED aims to combine the scalability of zero-knowledge (ZK) proofs with the liquidity and security of Bitcoin (BTC). According to reports, QED is Bitcoin’s native execution layer and is designed to solve the challenges of Web3 development.

Initia, a blockchain platform focused on Rollup, completed US$7.5 million in financing, with Delphi Ventures and Hack VC co-leading the investment

* Infra

Former Terra and Cosmos developers raised $7.5 million to develop the rollup-focused blockchain platform Initia, with plans to launch the mainnet in the second quarter. The round was co-led by Delphi Ventures and Hack VC, with participation from Nascent, Figment Capital, Big Brain and A.Capital. Angel investors include anonymous cryptocurrency trader Cobie, DCF God, Split Capital co-founder Zaheer Ebtikar, Fiskantes and WSB Mod. Celestia COO Nick White also participated. Initia is designed to support multiple Layer 2 networks and has incentives to promote the growth of these networks. The platform is currently in the closed testnet stage, and some projects have built DeFi applications on the testnet. It plans to launch an incentivized testnet in early April.

Web3 data flow and infrastructure company Validation Cloud completed US$5.8 million in financing, led by Cadenza Ventures

* Infra

Validation Cloud, a Web3 data streaming and infrastructure company based in Zug, Switzerland, announced the completion of its first round of external financing totaling $5.8 million, led by San Francisco-based Cadenza Ventures. This oversubscribed financing round also attracted participation from well-known global companies including Blockchain Founders Fund, Bloccelerate, Blockwall, Side Door Ventures, Metamatic, GS Futures and AP Capital. Validation Cloud, known as the "Cloudflare of Web3", has established a proprietary system architecture to provide a fast, scalable and intelligent global platform that supports Staking, node API and data services.

Humanity Protocol announced that it has received strategic investment from 20 institutions including Hashed

* Layer2

Humanity Protocol announced strategic investments from more than 20 well-known crypto-native venture capital funds, including Hashed, CMCC, Cypher Capital, Foresight Ventures, and Mechanism Capital. In addition, there are personal investments from Animoca Brands founder Yat Siu and Polygon founder Sandeep Nailwal. The exact amount was not disclosed.

It is reported that Humanity Protocol uses palmprint recognition technology and zero-knowledge proofs to ensure user privacy and security, aiming to provide an easily accessible and non-invasive method of proving humanity for Web3 applications.

Liquid restaking protocol Ether.Fi completed $23 million in Series A financing, led by Bullish Capital and CoinFund

* Restaking

Ether.Fi raised $23 million in Series A financing led by Bullish Capital and CoinFund, with participation from OKX Ventures, Foresight Ventures, Consensys and Amber. Ether.Fi’s total value locked (TVL) has grown from $103 million to $1.66 billion since the beginning of the year. The TVL of the entire liquid restaking ecosystem has exceeded $10 billion. Ether.Fi allows Ethereum stakers to restake ether through EigenLayer in exchange for eETH, a liquid token that can be used in the decentralized finance (DeFi) market.

According to Ether.Fi’s official Twitter, together with the previously unannounced SAFE round that closed at the end of last year, Ether.Fi’s total financing has reached $27 million.

Part.4 Industry Pulse

Pyth Network launches price feeding service on Hedera

* Oracle

The oracle project Pyth Network announced the launch of Price Feeds and Pyth Benchmarks on the open source proof-of-stake blockchain Hedera. According to the press release, more than 400 Pyth price feeds will be available on Hedera. Pyth will provide a pull oracle design, enabling Hedera users and developers to get the latest price updates from more than 400 ultra-low latency price sources covering cryptocurrencies, FX, commodities, stocks and exchange-traded funds (ETFs).

Dune launches blockchain data sharing solution Dune Datashare on Snowflake Market

* Data

Dune announced the launch of Dune Datashare, a new blockchain data sharing solution on the Snowflake Marketplace, providing enterprises with seamless access to Dune's entire set of curated crypto data.

Polygon zkEVM prepares for the Ethereum Dencun upgrade and plans to significantly reduce costs through EIP-4844

* Layer2

Polygon zkEVM co-founder and technical lead Jordi Baylina said that the main challenge facing ZK rollups is the cost of data availability, and EIP-4844 may play an important role in reshaping the capacity and gas costs of Polygon zkEVM. He further predicted that after the Dencun upgrade implements EIP-4844, Ethereum's total data availability capacity will increase by 3 times; combining EIP-4844 with data compression could reduce costs by 10 to 50 times, but actual cost savings will depend on various factors.

When talking about the technical preparations for the upgrade, Baylina emphasized that although the transition to Dencun may be more straightforward for Optimism and Arbitrum, integrating EIP-4844 into Polygon zkEVM, which uses ZK rollup technology, requires specific technical modifications. Looking ahead to the full integration of EIP-4844, Baylina noted the need for a new attestation system to support data compression on zkEVM in order to optimize the benefits of EIP-4844 for Polygon zkEVM, and work to develop such a system is ongoing. Baylina added that efforts are being made to ensure that all necessary components are aligned with the activation of the Dencun hard fork on mainnet.

Telegram advertising platform will use the TON blockchain for advertising revenue payment and withdrawal

* Layer1

Telegram founder Pavel Durov just said in his TG personal channel that starting next month, Telegram channel owners will be able to receive financial rewards for their work. Currently, Telegram broadcast channels generate 1 trillion views per month, but only 10% of the views are monetized through Telegram Ads, a promotional tool designed with privacy in mind. In March, the Telegram advertising platform will be officially opened to all advertisers in nearly 100 new countries. Channel owners in these countries will start receiving 50% of the revenue Telegram makes from display ads on their channels. To ensure fast and safe advertising payments and withdrawals, the TON blockchain will be used exclusively, and advertising will be sold through Toncoin and revenue will be shared with channel owners.

Metis plans to integrate Chainlink’s cross-chain interoperability protocol Chainlink CCIP

* Layer2

Ethereum Layer 2 network Metis plans to integrate Chainlink’s interoperability solution Chainlink CCIP. The team said that as part of this integration, the Metis bridge interface will be upgraded to leverage Chainlink CCIP as the official cross-chain infrastructure to power the canonical Metis token bridge, with an initial focus on bridging some mainstream stablecoins from the Ethereum mainnet to the Metis network.

Frax Finance plans to announce the proposed income distribution plan within 10 days

* DeFi

Stablecoin protocol Frax Finance plans to announce a revenue distribution proposal within 10 days. Sam Kazemian, founder of Frax Finance, said: “While Uniswap will implement rewards for the first time, Frax Finance will reverse the previous decision to stop rewards. There was a previous vote to stop income distribution. But now we feel it is the right time to turn on the huge switch. This will bring a lot of income."

Uniswap launches limit order function with no gas fees

* Layer2

Uniswap Labs announced the launch of limit order functionality. Users can set limit orders for Ethereum ERC-20 tokens on the Uniswap web application. Limit orders are powered by UniswapX, leveraging on-chain and off-chain liquidity with no gas fees and no minimum trade size requirements. However, users need to pay Gas fees when canceling limit orders.

OKX DEX has integrated Jupiter API

* DEX

When swapping Solana on-chain assets and making cross-chain transactions through OKX DEX, users can enjoy better quotes, more comprehensive liquidity, and a smoother trading experience. Jupiter is a decentralized trading liquidity aggregator on Solana that connects all DEX markets and AMM pools across different decentralized exchanges to provide the best token prices.

It is reported that the OKX Web3 Wallet DEX section is a DEX and cross-chain aggregator. It has now aggregated more than 10 cross-chain bridges, more than 20 public chains, and more than 300 DEXs. Through X Routing intelligent routing, one transaction can use multiple DEXs at the same time, providing users with the best price, the best liquidity and zero transaction service fees. It also offers a DEX market section, limit orders, KYT security detection, and other functions.

Puffer Finance will update the EigenLayer points and pufETH points system on March 5th

* Restaking

Ethereum liquidity staking protocol Puffer Finance will update the EigenLayer points and pufETH points systems starting from 16:00 on March 5, Beijing time. Among them, only users who staked before the deadline announcement on February 9 are eligible to earn EigenLayer points. If an eligible user transfers pufETH out of the wallet, EigenLayer points will stop accumulating. Additionally, providing pufETH liquidity to DeFi protocols that Puffer has integrated will not affect EigenLayer points accumulation.

Yuga Labs: "Dookey Dash: Unclogged" plans to launch a trial version on March 6 and will provide over 1 million US dollars in game rewards

* Game

"Dookey Dash: Unclogged", the free version of the Dookey Dash game launched by Yuga Labs, will return this spring and will offer participating users game rewards worth more than US$1 million. It is reported that Yuga Labs plans to explore using ApeCoin to pay rewards, but the company currently declines to clarify the matter and said that more details will be announced soon.

Yuga Labs also confirmed that the new game will launch a 72-hour trial version for 10,000 players from March 6, during which the player with the highest score will receive a MAYC NFT as a reward. Based on the NFT floor price of 4 ETH, the minimum reward value is approximately US$12,750. In addition, Yuga Labs stated that the new version of the game supports PC, Mac, iOS and Android. Players do not need to hold an NFT to participate, and holders of specific NFTs (Bored Apes, Mutant Apes, Bored Ape Kennel Club, HV-MTL and Otherside Kodas) can receive additional benefits.

Lens Protocol has transitioned to the "permissionless" stage

* Social

Decentralized social protocol Lens Protocol announced that it has transitioned to a "permissionless" phase, allowing all users to create on-chain NFTs for their profiles. Anyone can create their profile for 10 Matic tokens (currently worth $10.30), or pay a similar amount using a credit card. Previously profile creation was limited to selected users.

Uniswap V3 is now deployed to Filecoin VM

* DeFi

Following the community’s Uniswap governance proposal in October last year, Uniswap V3 is now available on Filecoin VM (Filecoin virtual machine). Users can trade FIL on OKU.trade, and developers will also be able to use ceWETH, ceUSDC, axlUSDC, axlDAI and other bridged assets on Filecoin with the support of Celer and Axelar.
