Source: Quantum Bit

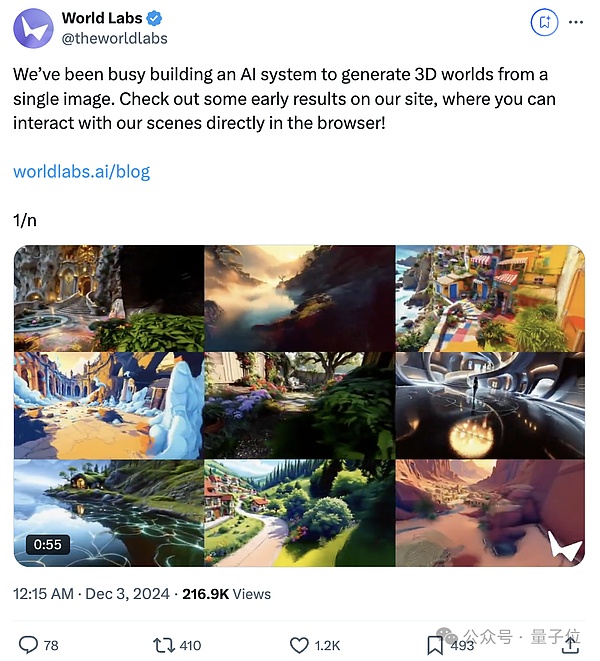

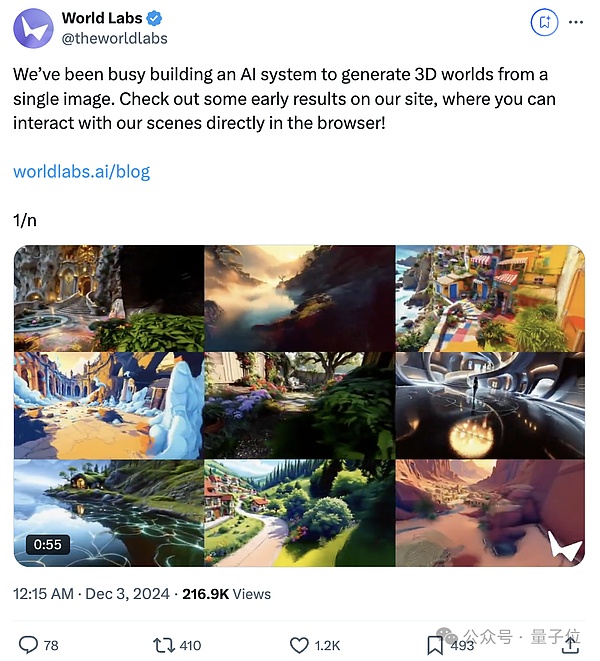

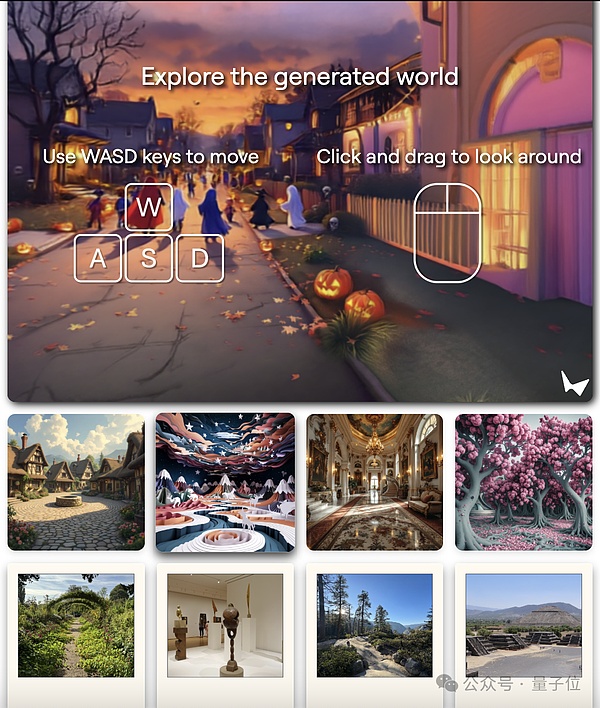

Just now, Fei-Fei Li's first spatial intelligence project was released without warning:

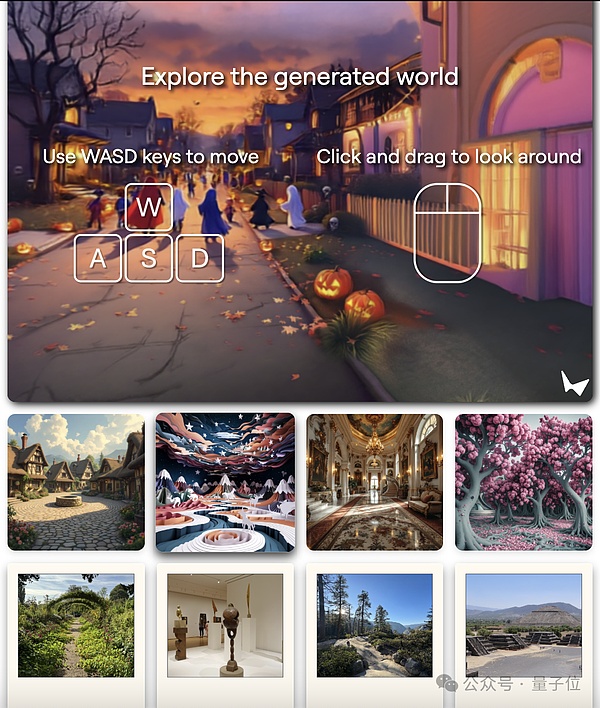

From just one picture, an AI system can generate a 3D game world!

The key point is that the generated 3D world is interactive.

You can move the camera freely to explore this 3D world as you would in a game, and effects such as shallow depth of field and the Hitchcock zoom are also possible.

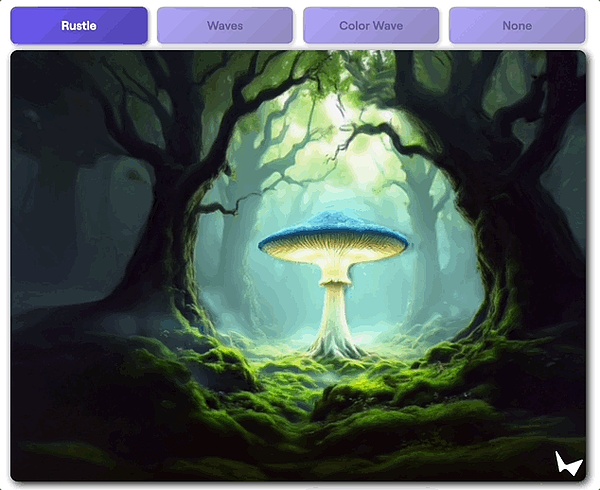

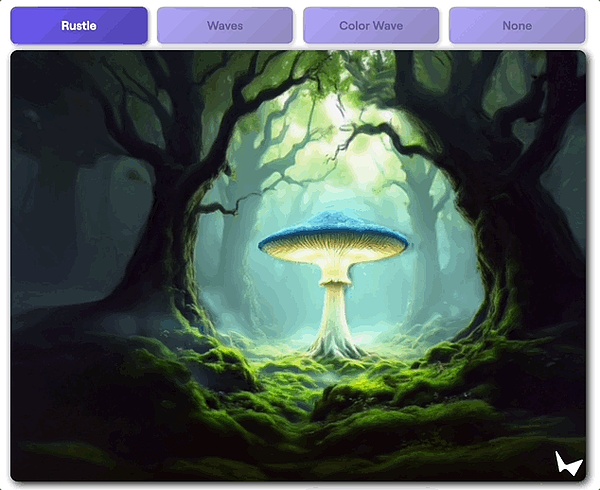

Input any image:

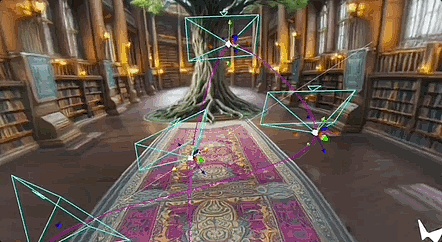

Apart from this input image, everything in the explorable 3D world is AI-generated:

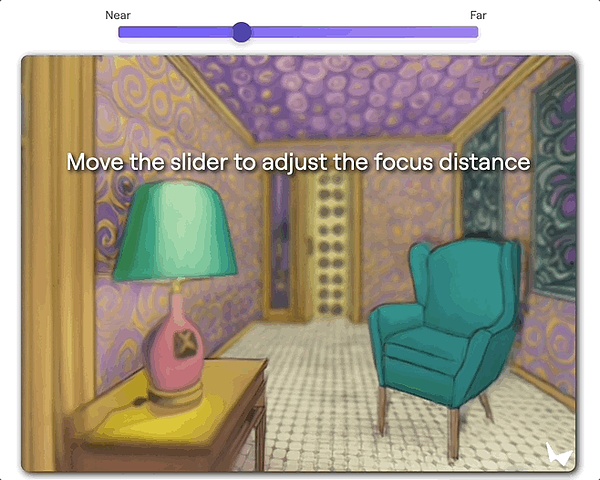

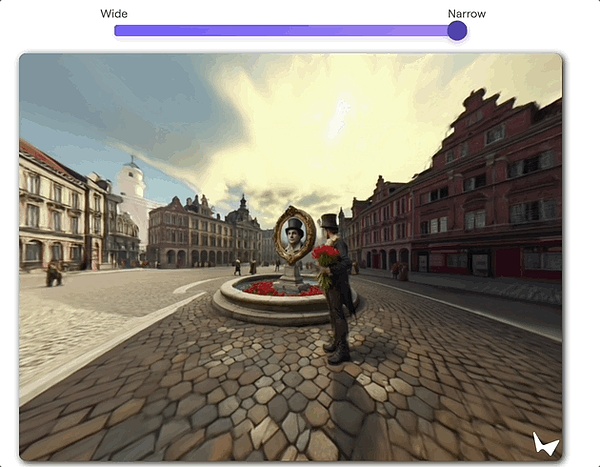

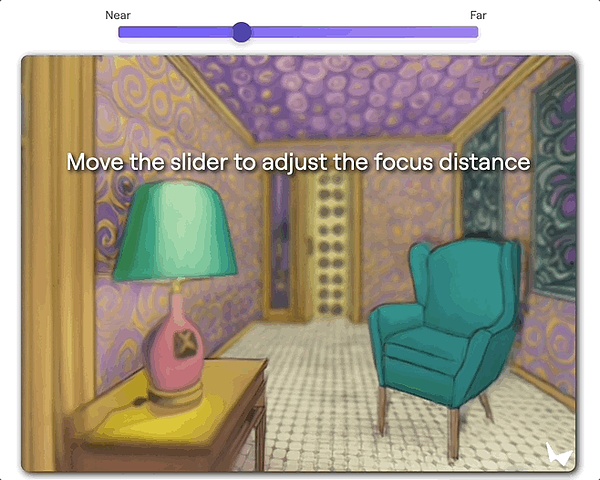

These scenes are rendered in real time in the browser, with controllable camera effects and adjustable simulated depth of field (DoF).

You can even change the color of objects, dynamically adjust background lighting and shadows, and insert other objects into the scene.

In addition, whereas most previous generative models predict pixels, this AI system predicts 3D scenes directly.

As a result, the scene does not change when you look away and come back, and it obeys the basic rules of 3D geometry and physics.

Netizens were immediately blown away, and the comment section was flooded with the word "unbelievable."

Many well-known figures liked it, such as Tobi Lutke, the founder of Shopify.

Many netizens also felt that this opens up a whole new world for VR.

The company said that "this is just a glimpse of the future of 3D-native generative AI":

We are working hard to get this technology into the hands of users as soon as possible!

Fei-Fei Li also shared the release, saying:

No matter how much you theorize about this idea, it is hard to put into words what it feels like to interact with a 3D scene generated from a photo or a sentence. I hope you enjoy it.

A waitlist is now open, and some content creators already have access.

Tears of envy are streaming from the corners of my mouth.

According to the official blog post, World Labs has today taken its first step toward spatial intelligence:

It has released an AI system that generates a 3D world from a single image.

Everything beyond the input image is generated.

And it works with any input image.

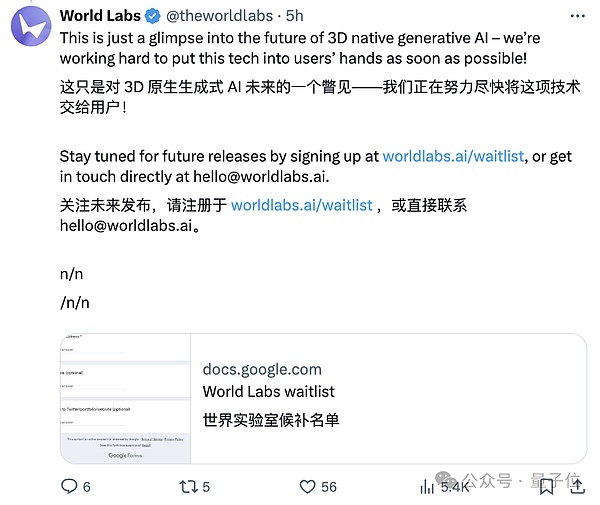

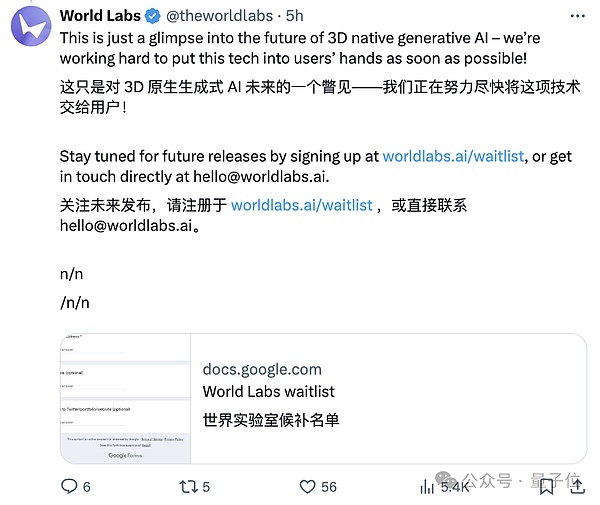

It is also an interactive 3D world: users can use the W/A/S/D keys to move the view up, down, left, and right, or drag with the mouse to explore the generated world.

The official blog post contains many playable demos.

This time I really recommend trying it yourself: the hands-on experience is very different from watching videos or GIFs.

(As usual, the link is at the end of the article.)

So what details are worth exploring in the 3D worlds this AI system generates?

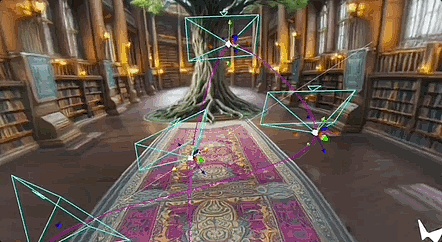

Camera effect

World Labs said that once generated, the 3D world is rendered in real time in the browser, so it feels like looking through a virtual camera.

Moreover, users can precisely control this camera.

This "precise control" comes in two forms.

The first is simulated depth of field: only objects at a certain distance from the camera are in sharp focus.

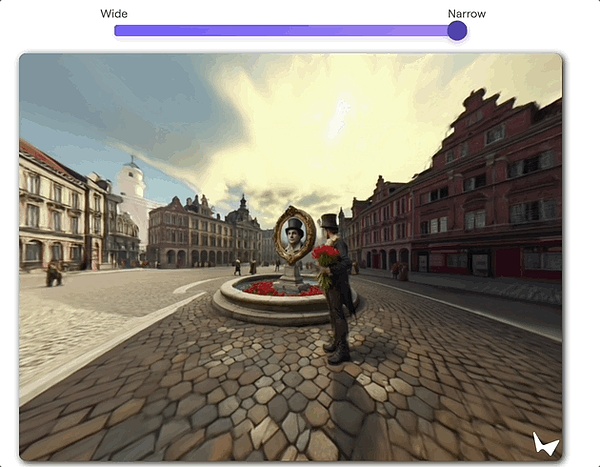
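World Labs does not say how its renderer simulates depth of field. As a rough illustration, the standard thin-lens model gives the diameter of the blur spot (the circle of confusion) for an object at distance S2 when a lens of focal length f and aperture diameter A is focused at S1: c = A·|S2 − S1|/S2 · f/(S1 − f). The sketch below simply assumes that model; the lens values are arbitrary examples, not World Labs settings. Objects at the focus distance get zero blur, and blur grows as they move nearer or farther.

```python
def circle_of_confusion(aperture_mm, focal_mm, focus_dist_mm, object_dist_mm):
    """Thin-lens blur-spot diameter (mm on the sensor) for an object at object_dist_mm
    when the lens is focused at focus_dist_mm."""
    if focus_dist_mm <= focal_mm:
        raise ValueError("focus distance must exceed the focal length")
    magnification = focal_mm / (focus_dist_mm - focal_mm)
    return aperture_mm * abs(object_dist_mm - focus_dist_mm) / object_dist_mm * magnification

# Example values (assumed): a 50 mm lens at f/2 (25 mm aperture) focused at 2 m
for dist in (1000.0, 2000.0, 4000.0, 10000.0):
    blur = circle_of_confusion(25.0, 50.0, 2000.0, dist)
    print(f"object at {dist / 1000:.0f} m -> blur {blur:.3f} mm")
```

A renderer could then blur each pixel in proportion to this value, which is why only one distance band looks sharp.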

The second is a simulated dolly zoom, the classic Hitchcock zoom of film cinematography.

Its signature is that the subject stays the same size in the frame while the background appears to grow or shrink.

Many travelers to Tibet and Xinjiang like to shoot videos with the Hitchcock zoom because of its strong visual impact.
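The dolly zoom follows directly from the pinhole camera model, in which an object of height H at distance Z appears with image height f·H/Z. To keep the subject's size fixed while the camera moves from distance d0 to d, the focal length must scale as f = f0·d/d0; the background sits farther away, so it does not scale the same way and appears to grow or shrink. A minimal sketch under those assumptions (the distances and sizes are made up for illustration, not taken from World Labs):

```python
def projected_size(focal_mm, height_m, distance_m):
    """Pinhole model: image height = focal length * object height / distance."""
    return focal_mm * height_m / distance_m

def dolly_zoom_focal(f0_mm, d0_m, d_m):
    """Focal length that keeps the subject the same size after moving from d0 to d."""
    return f0_mm * d_m / d0_m

subject_h, background_h, background_offset = 1.8, 10.0, 20.0  # metres (assumed)
f0, d0 = 24.0, 2.0                                             # starting lens and distance

# Dolly the camera back while zooming in to compensate
for d in (2.0, 4.0, 8.0):
    f = dolly_zoom_focal(f0, d0, d)
    subject = projected_size(f, subject_h, d)
    background = projected_size(f, background_h, d + background_offset)
    print(f"distance {d} m, focal {f:.0f} mm: subject {subject:.2f} mm, background {background:.2f} mm")
```

The subject's image size stays constant across the loop while the background's image size increases, which is exactly the "unchanged subject, changing background" effect described above.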

In the World Labs demo, the effect looks like this (though in this mode the viewpoint cannot be controlled):

3D effect

World Labs said that while most generative models predict pixels, its AI predicts 3D scenes.

The official blog post lists three benefits:

First, persistent reality.

Once a world is generated, it will always exist.

The view from the original angle does not change just because you look somewhere else and then turn back.
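One way to see why predicting a 3D scene gives persistence: if the world is stored as 3D geometry, each frame is just a projection of that geometry from the current camera pose, so returning to the same pose reproduces the same view. The sketch below assumes a hypothetical point-based scene and a pinhole camera that only turns about the vertical axis; World Labs has not described its scene representation here, so this is an illustration of the principle only.

```python
import math

def project(point, cam_pos, yaw, focal_px=500.0, cx=320.0, cy=240.0):
    """Pinhole projection of a world point (y is up) for a camera turned by `yaw` radians.
    yaw = 0 looks down -z. Returns pixel (u, v), or None if the point is behind the camera."""
    dx, dy, dz = (p - c for p, c in zip(point, cam_pos))
    right = dx * math.cos(yaw) + dz * math.sin(yaw)   # component along the camera's right axis
    depth = dx * math.sin(yaw) - dz * math.cos(yaw)   # component along the viewing direction
    if depth <= 0:
        return None
    return (cx + focal_px * right / depth, cy - focal_px * dy / depth)

def render(scene, cam_pos, yaw):
    """A frame is just the projection of every stored point from the current pose."""
    return [project(p, cam_pos, yaw) for p in scene]

# Hypothetical stored scene: a few 3D points in front of the starting camera
scene = [(0.0, 0.0, -5.0), (1.0, 0.5, -4.0), (-2.0, 1.0, -8.0)]

before = render(scene, (0.0, 0.0, 0.0), yaw=0.0)
away = render(scene, (0.0, 0.0, 0.0), yaw=math.pi)   # look the other way
after = render(scene, (0.0, 0.0, 0.0), yaw=0.0)      # turn back
print(before == after)
```

A model that regenerated pixels for every new view would have no such guarantee; here the view is a pure function of the stored geometry and the pose.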

Second, real-time control.

Once the scene is generated, users can move freely through the 3D world in real time using the keyboard or mouse.

You can study the details of a flower up close, or hide in a corner and watch everything that happens in the world from a god's-eye view.
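The article does not spell out the exact controls beyond W/A/S/D and mouse dragging. The sketch below assumes a common first-person convention (W/S move forward and back, A/D strafe, dragging turns the view) with made-up speed and sensitivity values; the `Camera` class and `MOVE_KEYS` table are hypothetical and only illustrate how such real-time controls typically update a camera once per frame.

```python
import math
from dataclasses import dataclass

# Hypothetical key bindings (forward, right); not World Labs' documented mapping
MOVE_KEYS = {"w": (1, 0), "s": (-1, 0), "a": (0, -1), "d": (0, 1)}

@dataclass
class Camera:
    x: float = 0.0
    z: float = 0.0
    yaw: float = 0.0    # radians; 0 looks down -z, positive turns right
    pitch: float = 0.0  # radians; positive looks up

    def drag(self, dx_px, dy_px, sensitivity=0.005):
        """Mouse drag rotates the view; pitch is clamped so the camera never flips over."""
        self.yaw += dx_px * sensitivity
        limit = math.radians(89)
        self.pitch = max(-limit, min(limit, self.pitch - dy_px * sensitivity))

    def step(self, keys, speed=2.0, dt=1 / 60):
        """Move on the ground plane for one frame lasting dt seconds."""
        fwd = sum(MOVE_KEYS[k][0] for k in keys if k in MOVE_KEYS)
        right = sum(MOVE_KEYS[k][1] for k in keys if k in MOVE_KEYS)
        norm = math.hypot(fwd, right)
        if norm == 0:
            return  # no movement keys held
        fwd, right = fwd / norm, right / norm  # diagonal moves are not faster
        # Forward and right unit vectors on the ground plane, derived from yaw
        fx, fz = math.sin(self.yaw), -math.cos(self.yaw)
        rx, rz = math.cos(self.yaw), math.sin(self.yaw)
        self.x += (fwd * fx + right * rx) * speed * dt
        self.z += (fwd * fz + right * rz) * speed * dt

cam = Camera()
cam.step({"w"}, dt=1.0)        # one second of W: move 2 units along -z
cam.drag(dx_px=314, dy_px=0)   # drag right: yaw becomes about 1.57 rad (a quarter turn)
cam.step({"w"}, dt=1.0)        # W now moves along +x
print(round(cam.x, 2), round(cam.z, 2))
```

Because the scene itself is fixed 3D geometry, the renderer only needs this updated pose each frame, which is what makes real-time exploration in a browser feasible.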

Third, correct geometry.

The worlds generated by this AI system obey the basic rules of 3D geometry and physics.

Some AI-generated videos may look wonderfully dreamlike, but they lack the real sense of depth that ours has (doge).

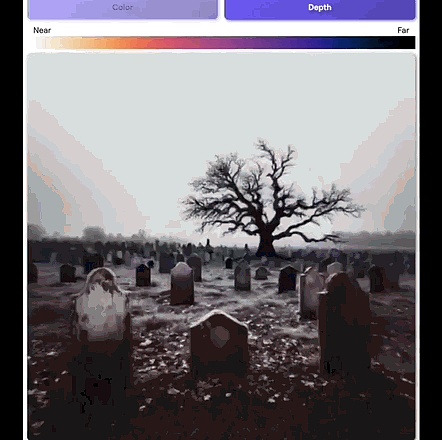

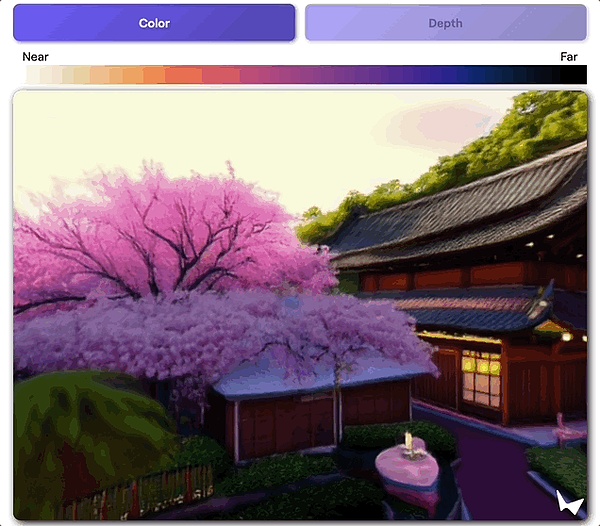

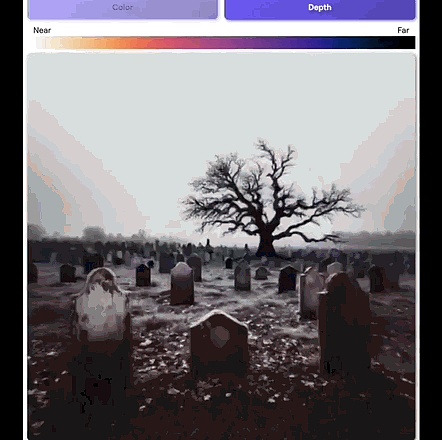

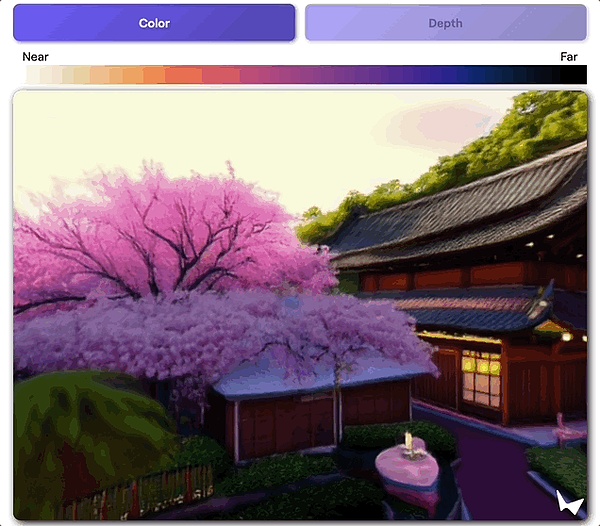

The official blog also notes that the simplest way to visualize a 3D scene is to render a depth map.

In a depth map, the color of each pixel is determined by its distance from the camera.

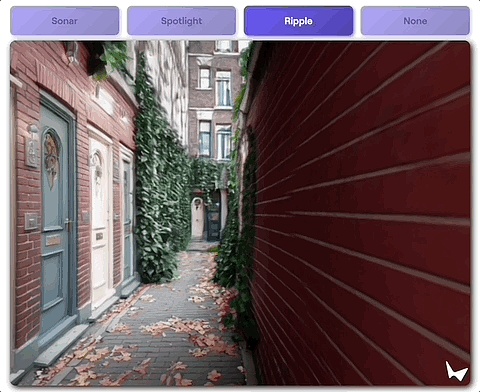

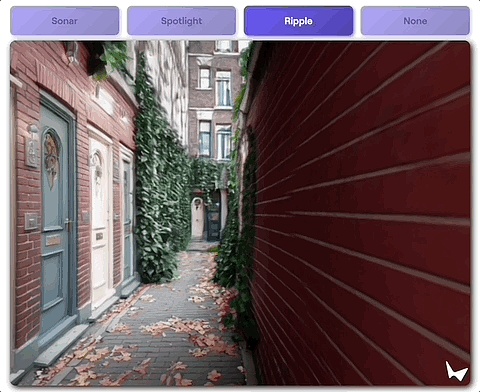
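As a concrete illustration of the depth-map idea, the sketch below turns a small grid of per-pixel camera distances into 8-bit gray values, assuming a linear mapping in which near pixels are bright and far pixels are dark. The toy depth values are invented; real depth visualizations often use inverse depth or a color map instead, and the article does not say which convention World Labs uses.

```python
def depth_to_gray(depth, near=None, far=None):
    """Map a 2D grid of camera distances to 8-bit gray values: near = 255 (bright), far = 0 (dark).
    near/far default to the smallest and largest distances in the grid."""
    flat = [d for row in depth for d in row]
    near = min(flat) if near is None else near
    far = max(flat) if far is None else far
    span = (far - near) or 1.0  # avoid dividing by zero when every pixel has the same depth

    def shade(d):
        t = min(max((d - near) / span, 0.0), 1.0)  # 0 at the near plane, 1 at the far plane
        return round(255 * (1.0 - t))

    return [[shade(d) for d in row] for row in depth]

# Toy 2x3 depth map in metres (assumed values)
depth = [[1.0, 2.0, 4.0],
         [1.0, 3.0, 5.0]]
print(depth_to_gray(depth))
```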

Users can also use the scene's 3D structure to build interactive effects: click to interact with the scene, for example to suddenly shine a spotlight on it.
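Click interactions can build on the same depth information: given a clicked pixel and its depth, the pinhole camera model recovers the corresponding 3D point, which is where an effect such as a spotlight could be aimed. A minimal sketch, assuming known camera intrinsics (fx, fy, cx, cy) and a depth map that stores depth along the optical axis rather than straight-line distance; `on_click` and the light dictionary are hypothetical and not part of any World Labs API.

```python
from dataclasses import dataclass

@dataclass
class Intrinsics:
    fx: float  # focal length in pixels, horizontal
    fy: float  # focal length in pixels, vertical
    cx: float  # principal point, column
    cy: float  # principal point, row

def unproject(u, v, depth, K):
    """Back-project pixel (u, v) with its depth to a 3D point in camera coordinates
    (x right, y down, z forward)."""
    x = (u - K.cx) * depth / K.fx
    y = (v - K.cy) * depth / K.fy
    return (x, y, depth)

def on_click(u, v, depth_map, K, lights):
    """Hypothetical click handler: look up the clicked pixel's depth and aim a spotlight there."""
    z = depth_map[v][u]  # depth maps are indexed [row][column]
    target = unproject(u, v, z, K)
    lights.append({"type": "spot", "target": target})
    return target

# Toy example: a flat wall 5 m away seen by a tiny 5x3-pixel camera (assumed values)
K = Intrinsics(fx=100.0, fy=100.0, cx=2.0, cy=1.0)
depth_map = [[5.0] * 5 for _ in range(3)]
lights = []
print(on_click(4, 2, depth_map, K, lights))
```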

Animation effects?

Easy.

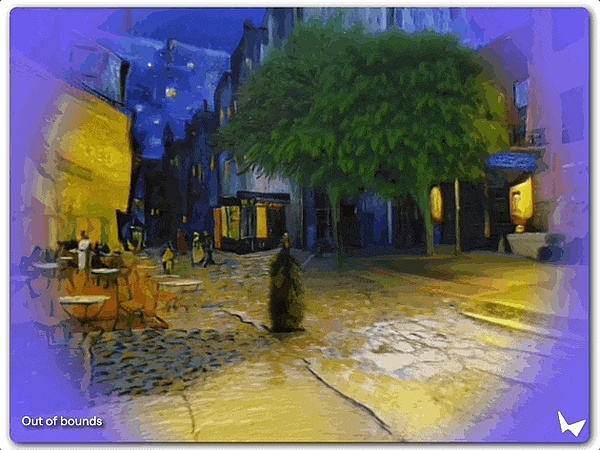

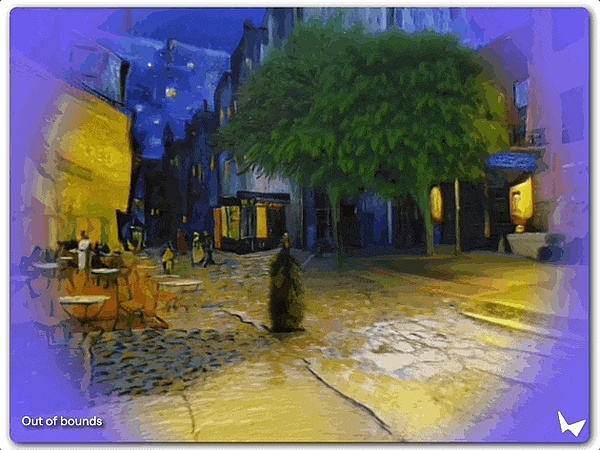

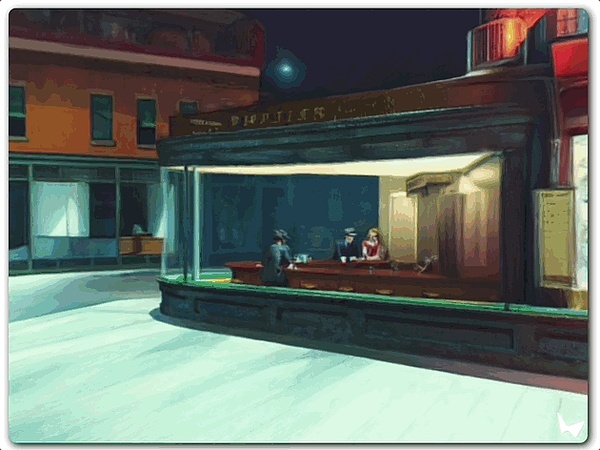

Stepping into paintings

The team also had some fun experiencing classic works of art in a "new way."

The novelty lies not only in how you interact with them, but also in the fact that the system fills in the parts of the scene that are not in the original painting.

The painting then becomes a 3D world.

This is Van Gogh's "Café Terrace at Night":

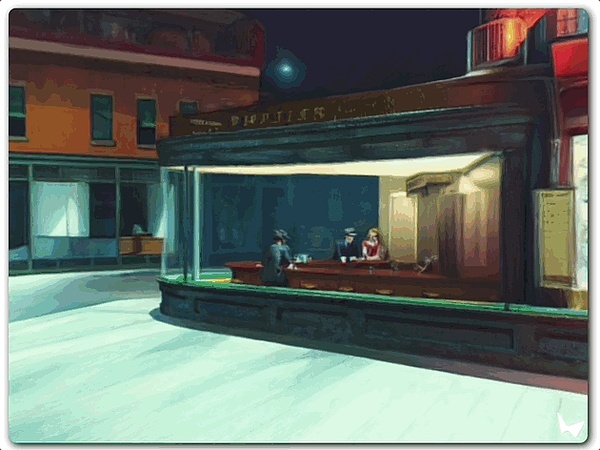

This is Edward Hopper's "Nighthawks":

Creative workflow

The team said that 3D world generation combines very naturally with other AI tools.

This lets creators try new workflows using the tools they already know.

For example:

You can first use a text-to-image model to go from text to an image.

Because different models have distinctive styles, the 3D world can carry over and inherit these styles.

With the same prompt, feeding in images generated by different text-to-image models produces different 3D worlds:

A vibrant cartoon-style teenage bedroom, with a colorful blanket on the bed, a cluttered desk with a computer, posters on the wall, and sports equipment scattered around. A guitar leans against the wall, with a comfortable patterned rug in the middle. The light from the window adds a touch of warmth and youth to the room.

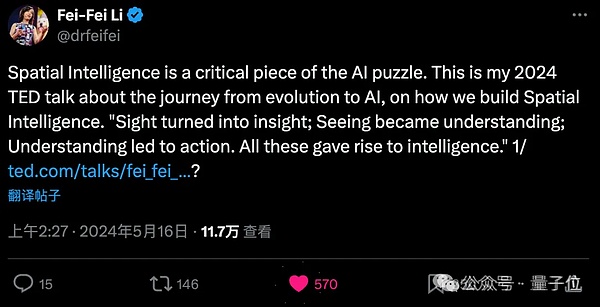

World Labs and Spatial Intelligence

World Labs was founded in April this year by Stanford University professor Fei-Fei Li, often called the "godmother of AI."

It is also her first publicly known startup.

The company is built around a new concept, spatial intelligence, which she describes as:

Visualization becomes insight; seeing becomes understanding; understanding leads to action.

In Fei-Fei Li's view, this is "the key piece of the puzzle in solving artificial intelligence."

Within just three months, the company was valued at more than US$1 billion, making it a new unicorn.

Public information shows that a16z, NEA and Radical Ventures led the investment, with Adobe, AMD, Databricks, and Jensen Huang's Nvidia also among the investors.

There are also many big names among individual investors: Karpathy, Jeff Dean, Hinton...

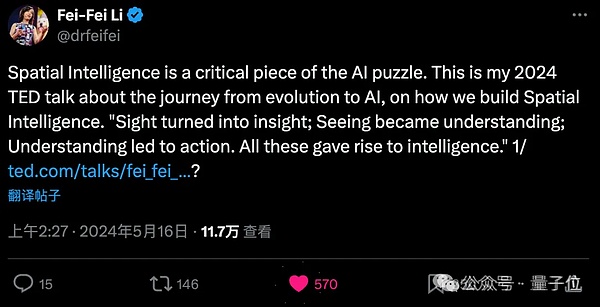

In May this year, Fei-Fei Li gave a 15-minute public TED talk.

In it, she laid out her thinking on spatial intelligence, including the following points:

Vision is believed to have triggered the Cambrian explosion, a period when animal species entered the fossil record in large numbers. What began as a passive experience, simply letting light in, soon became more active, and the nervous system began to evolve... These changes gave rise to intelligence.

For years I have been saying that taking pictures is not the same as seeing and understanding. Today I want to add one more point: simply seeing is not enough. Seeing is for acting and learning.

If we want AI to go beyond its current capabilities, we want not just AI that can see and talk, but AI that can act. The latest milestone in spatial intelligence is teaching computers to see, learn and act, and to learn to see and act better.

With the accelerated progress of spatial intelligence, a new era is unfolding before our eyes in this virtuous cycle. This cycle is catalyzing robot learning, which is a key component of any embodied intelligent system that needs to understand and interact with the 3D world.

The company's target customers reportedly include video game developers and film studios. In addition to interactive scenes, World Labs also plans to develop some tools that are useful to professionals such as artists, designers, developers, filmmakers and engineers.

Now, with the release of its first spatial intelligence project, what the company plans to do is starting to take concrete shape.

But World Labs said that what is currently released is just an "early preview":

We are working hard to improve the scale and realism of the world we generate, and try new ways for users to interact with it.

Reference links:

[1] https://www.worldlabs.ai/blog

[2] https://mp.weixin.qq.com/s/3MWUv3Qs7l-Eg9A9_3SnOA?token=965382502&lang=zh_CN

[3] https://x.com/theworldlabs/status/1863617989549109328

JinseFinance