Source: InfoQ

At 4:00 a.m. Beijing time, on the other side of the ocean at the San Jose Convention Center in California, GTC 2024, billed as NVIDIA's technology feast, was in full swing. Opening NVIDIA's 2024 event, CEO Jensen Huang, wearing his signature leather jacket, stood at center stage and calmly launched another series of "nuclear bomb" super chips, following the H100 and A100.

This year's GTC is attracting so much attention because Nvidia's AI business delivered outstanding financial results over the past year. From the Volta V100 GPU series to the more recent Ampere A100 and Hopper H100 chips, the company has held the title of king of AI chips.

1. The GPU family gains a "newcomer": the new Blackwell architecture chips make an explosive debut

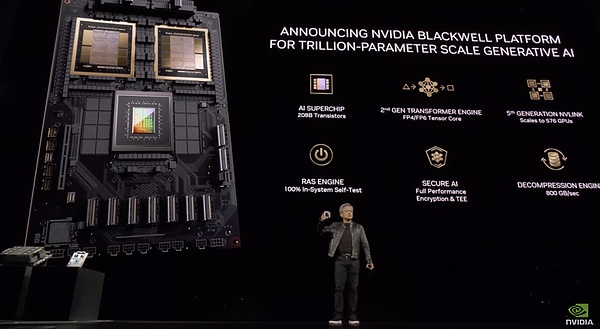

Before this GTC conference began, foreign media had already been circulating rumors that Huang would unveil new members of the GPU family at GTC 2024. Sure enough, the B200 and GB200 series chips, built on the Blackwell architecture, arrived as expected.

According to NVIDIA, the Blackwell architecture series of chips is the most powerful AI chip family to date.

According to Huang, the B200 has 208 billion transistors (the H100/H200 has 80 billion). It is built on TSMC's 4NP process and can support AI models with up to 10 trillion parameters; for comparison, OpenAI's GPT-3 has 175 billion parameters. A single B200 also delivers 20 petaflops of AI performance, whereas a single H100 delivers up to 4 petaflops of AI compute.
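To put these headline numbers side by side, here is a minimal sketch that computes the generation-over-generation ratios. It uses only the figures quoted above; the variable names are illustrative, and because the article does not say which numeric precision each petaflops figure refers to, the compute ratio should be read as a comparison of quoted numbers rather than a like-for-like benchmark.

```python
# Figures as quoted in the article; nothing here is measured.
B200_TRANSISTORS = 208e9      # B200 transistor count
H100_TRANSISTORS = 80e9       # H100/H200 transistor count
B200_PETAFLOPS = 20           # quoted AI performance per B200
H100_PETAFLOPS = 4            # quoted AI compute per H100
BLACKWELL_MAX_PARAMS = 10e12  # largest model size Blackwell is said to support
GPT3_PARAMS = 175e9           # GPT-3 parameter count

# Simple ratios between the new and the old figures.
transistor_ratio = B200_TRANSISTORS / H100_TRANSISTORS
compute_ratio = B200_PETAFLOPS / H100_PETAFLOPS
param_ratio = BLACKWELL_MAX_PARAMS / GPT3_PARAMS

print(f"Transistors:      {transistor_ratio:.1f}x")
print(f"Quoted compute:   {compute_ratio:.1f}x")
print(f"Model size limit: {param_ratio:.1f}x GPT-3")
```

On these numbers, the B200 has about 2.6 times the transistors and 5 times the quoted compute of the H100, and the stated model-size ceiling is roughly 57 times the size of GPT-3.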

But it is worth noting that the Blackwell B200 is not a single GPU in the traditional sense. It consists of two tightly coupled chips connected via a 10 TB/s NV-HBI (Nvidia High Bandwidth Interface) connection to ensure they function as a single, fully coherent chip.

The GPU platform, named after mathematician David Harold Blackwell, succeeds the Hopper architecture that Nvidia introduced two years ago. Products based on Hopper have sent Nvidia's business and its stock price soaring.

The architecture is also another important step forward in AI security. Blackwell delivers secure AI with 100% in-system self-testing RAS services and full-performance encryption, meaning data is secure not only in transit but also at rest and while in use.

Blackwell will be integrated into Nvidia's GB200 Grace Blackwell superchip, which connects two B200 Blackwell GPUs to a Grace CPU. Nvidia did not disclose pricing.

The new chip is expected to be available later this year. Nvidia said AWS, Dell Technologies, Google, Meta, Microsoft, OpenAI and Tesla plan to use Blackwell GPUs.

"Generative artificial intelligence is the defining technology of our time," Huang said in his speech. "Blackwell GPUs are the engine driving this new industrial revolution. Working with the world's most dynamic companies, we will realize the promise of artificial intelligence for every industry."

NVIDIA also released the GB200 NVL72, a liquid-cooled rack system that contains 36 GB200 Grace Blackwell superchips and delivers 1,440 petaflops (about 1.4 exaflops) of inference performance. Inside, it holds nearly two miles of cabling, made up of 5,000 individual cables.
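The rack-level figure follows directly from numbers given elsewhere in the article. As a quick sanity check, the sketch below multiplies them out; it assumes that each GB200 superchip carries two B200 GPUs and that each GPU contributes the quoted 20 petaflops, both taken from the article, and the constant names are illustrative.

```python
# All inputs are figures quoted in the article.
SUPERCHIPS_PER_RACK = 36   # GB200 superchips in one GB200 NVL72 rack
GPUS_PER_SUPERCHIP = 2     # each GB200 links two B200 GPUs to one Grace CPU
PETAFLOPS_PER_GPU = 20     # quoted AI performance of a single B200

gpus_per_rack = SUPERCHIPS_PER_RACK * GPUS_PER_SUPERCHIP
rack_petaflops = gpus_per_rack * PETAFLOPS_PER_GPU
rack_exaflops = rack_petaflops / 1000  # 1 exaflop = 1,000 petaflops

print(f"GPUs per rack: {gpus_per_rack}")
print(f"Rack total:    {rack_petaflops} petaflops ({rack_exaflops} exaflops)")
```

The 72 GPUs per rack also account for the "72" in the product name, and the total matches the 1,440 petaflops NVIDIA quotes.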

Nvidia says the GB200 NVL72 delivers up to 30x performance improvements compared to the same number of H100 Tensor Core graphics processing units used for inference purposes. In addition, the system can reduce costs and energy consumption by up to 25 times.

GB200 NVL72

For example, training a 1.8 trillion parameter model previously required 8,000 Hopper GPUs and 15 megawatts of power. Today, the same job needs only 2,000 Blackwell GPUs while consuming just 4 megawatts.
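The size of that saving is easier to see as ratios. The sketch below works them out from the four figures in the example; the per-GPU value is simply total quoted power divided by GPU count, which is an assumption made here for illustration and covers the whole system, not the power draw of a single chip.

```python
# Training a 1.8-trillion-parameter model, per the article's example.
HOPPER_GPUS = 8000
HOPPER_MEGAWATTS = 15
BLACKWELL_GPUS = 2000
BLACKWELL_MEGAWATTS = 4

gpu_reduction = HOPPER_GPUS / BLACKWELL_GPUS
power_reduction = HOPPER_MEGAWATTS / BLACKWELL_MEGAWATTS

# System-level power per GPU in watts (total power / GPU count).
hopper_watts_per_gpu = HOPPER_MEGAWATTS * 1e6 / HOPPER_GPUS
blackwell_watts_per_gpu = BLACKWELL_MEGAWATTS * 1e6 / BLACKWELL_GPUS

print(f"GPUs needed:  {gpu_reduction:.2f}x fewer")
print(f"Power needed: {power_reduction:.2f}x less")
print(f"Per-GPU system power: {hopper_watts_per_gpu:.0f} W -> {blackwell_watts_per_gpu:.0f} W")
```

By this reckoning the saving comes from needing a quarter as many GPUs rather than from each GPU drawing less: system power per GPU rises slightly, from 1,875 W to 2,000 W.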

On the GPT-3 benchmark with 175 billion parameters, Nvidia says GB200 performs 7 times better and trains 4 times faster than H100.

In addition, Nvidia said it will launch a server board called the HGX B200, which pairs 8 B200 GPUs with an x86 CPU (possibly two CPUs) in a single server node. Each B200 GPU is configurable for up to 1,000 W and delivers up to 18 petaflops of FP4 throughput, making it 10% slower than the GPUs in the GB200.
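The 10% figure can be checked against the 20 petaflops quoted earlier for the B200 in the GB200. The following sketch does that and also totals one HGX B200 node; it assumes the 20 petaflops figure is at the same FP4 precision, which the article implies but does not state, and the power total counts GPUs only.

```python
# Figures quoted in the article for the HGX B200 and the GB200.
GPUS_PER_NODE = 8
HGX_PETAFLOPS_PER_GPU = 18    # FP4 throughput per B200 in the HGX B200
GB200_PETAFLOPS_PER_GPU = 20  # quoted per-GPU figure for the GB200 (precision assumed FP4)
MAX_WATTS_PER_GPU = 1000

# Fractional slowdown of an HGX B200 GPU relative to a GB200 GPU.
slowdown = 1 - HGX_PETAFLOPS_PER_GPU / GB200_PETAFLOPS_PER_GPU

node_petaflops = GPUS_PER_NODE * HGX_PETAFLOPS_PER_GPU
node_gpu_kilowatts = GPUS_PER_NODE * MAX_WATTS_PER_GPU / 1000  # GPUs only, excludes CPUs

print(f"Slowdown vs GB200 GPU: {slowdown:.0%}")
print(f"Node throughput:       {node_petaflops} petaflops FP4")
print(f"Node GPU power budget: {node_gpu_kilowatts} kW")
```

Under these assumptions, a fully configured node offers 144 petaflops of FP4 throughput within an 8 kW GPU power budget.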

Currently, enterprise customers can access the B200 in two ways: through the HGX B200, or through the GB200, which combines B200 GPUs with NVIDIA's Grace CPU.

2. Comprehensive upgrade of software services

The market is heating up, and competition in both hardware and software is intensifying. At this GTC, Nvidia is not only taking on the competition with new hardware innovations, but also showing how its AI software strategy is helping to define its leadership in the field and how it will evolve in the coming years.

Huang is also putting his weight behind the company's AI software subscription packages. This is clearly in line with the company's new strategy of "selling hardware with software", and it marks a clean break from the past strategy of "selling software with hardware".

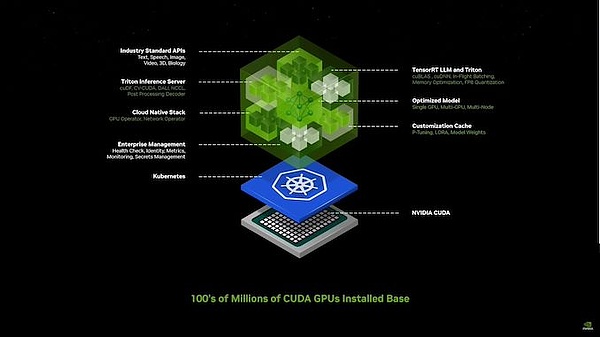

Nvidia has access to a large number of models across all domains, but it believes they are still too difficult for enterprises to use. So it launched Nvidia Inference Microservices (NIM), which bundles models and their dependencies into a compact package, optimized for the user's stack and exposed through an easy-to-use API.

The packaged and optimized pre-trained models ship with all the software needed to run them, including CUDA libraries and APIs. They are essentially containerized AI packages, optimized for NVIDIA GPUs and accessed through a simple API.

Huang pointed out: "This is how we will write software in the future", namely by assembling a bunch of AIs.

Huang described how NVIDIA used NVIDIA Inference Microservices (NIM) to create an internal chatbot that answers common questions that come up when building chips. These “microservices” will allow developers to quickly create and deploy “copilots”, or AI assistants, using proprietary and custom models. Turning to robotics, he said, “We needed a simulation engine to digitally represent the world to the robot,” and that is the role Omniverse plays.

He said robotics is a key pillar of Nvidia, along with artificial intelligence and Omniverse/digital twin work, all of which work together to get the most out of the company's systems.

Omniverse is a platform designed for building and operating Metaverse applications, which are essentially shared virtual worlds where people can interact, work and create. The platform enables the creation of digital twins and advanced simulations. NVIDIA's vision is for Omniverse to become the foundational platform for the Metaverse, where creators and businesses can collaborate in a shared virtual space. Digital twins created in Omniverse can be used in a variety of Metaverse applications, such as virtual training, product design, and predictive maintenance.

Huang said that NVIDIA has launched dozens of enterprise-grade generative AI microservices. Enterprises can use these services to build applications on their own platforms while retaining full ownership and control of their intellectual property.

Huang also announced Omniverse Cloud streaming to the Apple Vision Pro headset.

He also said that NVIDIA is seriously considering a fundamental redesign of the entire underlying software stack, in the hope of using AI to generate better code for humans.

The reasoning is simple: for decades, the world has been bound to a traditional computing model built around CPUs, in which humans write applications to retrieve prepared information from databases.

Huang said at the press conference, "The way we compute today requires first determining who wrote and created the information, which means that the information must be recorded in advance."

Nvidia's GPUs have opened a new path from accelerated computing to algorithmic computing, which can rely on creative reasoning (rather than fixed logic) to determine relevant results.

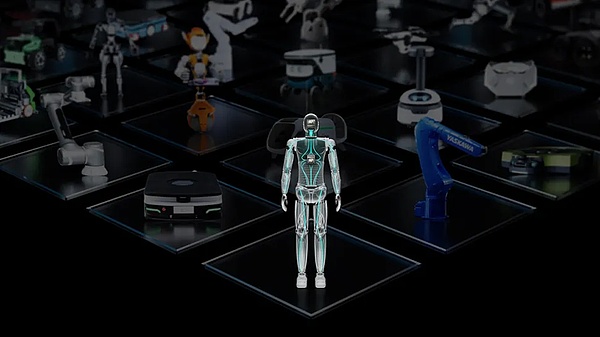

In addition, Nvidia hopes to advance the development of humanoid robots by releasing another new set of APIs, Project GR00T.

Project GR00T is a humanoid robot model that Nvidia announced alongside Jetson Thor, a new SoC, and upgrades to the Nvidia Isaac robotics platform. NVIDIA says GR00T-powered robots will understand natural language and learn dexterity by imitating human movements. Jetson Thor includes a Blackwell-based GPU that delivers 800 teraflops of 8-bit AI performance.

Huang said that robots driven by the platform will be designed to understand natural language and to imitate movements by observing human behavior. This allows GR00T robots to quickly learn the coordination, dexterity, and other skills needed to navigate, adapt to, and interact with the real world (and definitely without leading to a robot rebellion).

“Building a foundation model for general-purpose humanoid robots is one of the most exciting problems we can solve in the field of artificial intelligence today,” Huang said. “These enabling technologies are coming together to allow leading roboticists around the world to take giant leaps toward artificial general robotics.”

3. Impact on developers

Experts predict that within five years, information in the form of text, images, video, and speech will all be fed into large language models (LLMs) in real time. By then, computers will have direct access to all information sources and will continuously improve themselves through multimodal interactions.

Huang said, "In the future, we will enter the era of continuous learning. We can decide whether to deploy the results of continuous learning, and interaction with the computer will no longer rely on C++."

This is the point of AI technology: having reasoned through a problem, a human can ask a computer to generate code that achieves a specific goal. In other words, in the future people will be able to communicate smoothly with computers in plain natural language instead of C++ or Python.

“In my opinion, the value of programming itself is quietly passing a historic turning point and beginning to decline,” Huang said, adding that AI is already bridging the gap between humans and technology.

“Right now, tens of millions of people rely on their computer programming knowledge to find jobs and earn a living, while the remaining 8 billion people are left far behind. That will change in the future.”

In Huang's view, English will become the most powerful programming language, and personalized interaction is a key factor in narrowing the technology gap.

Generative AI will become a macro-level operating system in which humans can instruct computers to create applications using plain language. Huang said that large language models will help people turn their ideas into reality through computers.

For example, people can already ask large language models to generate Python code for domain-specific applications, with all prompts written in plain English.

"How do we make the computer do what we want? How do we fine-tune the instructions on the computer? The answer to these questions is prompt engineering, and it is more of an art than a pure technology."

In other words, people will be able to focus on domain expertise, while generative AI will make up for gaps in programming skill. Huang believes this will completely upend the software development landscape.

Huang has previously compared large language models to bright, already-educated college graduates. NVIDIA is building expertise in areas such as healthcare and finance around large models to provide efficient support to enterprise customers.
