Original author: Faust, Geek web3

Since the inscription summer of 2023, Bitcoin Layer 2 has been a focal point of the entire Web3 space. Although this field took off much later than Ethereum Layer 2, Bitcoin benefits from the unique appeal of POW and the smooth landing of spot ETFs, and it does not need to worry about the risk of "securitization". As a result, its Layer 2 derivative track has attracted tens of billions of dollars of capital attention in just half a year.

In the Bitcoin Layer 2 track, Merlin, with billions of dollars of TVL, is undoubtedly the largest project with the most followers. With clear staking incentives and considerable yields, Merlin rose to prominence within a few months, creating an ecosystem myth that surpassed Blast. As Merlin has grown popular, its technical design has become a topic of concern for more and more people.

In this article, Geek Web3 focuses on the Merlin Chain technical solution and interprets its publicly available documents and protocol design ideas. We hope to help more people understand Merlin's general workflow and its security model, and to see in a more intuitive way how this "leading Bitcoin Layer 2" works.

Merlin's decentralized oracle network: an open off-chain DAC committee

For every Layer 2, whether on Ethereum or Bitcoin, data availability (DA) and the cost of publishing data are among the most pressing issues to be resolved. Since the Bitcoin network has many limitations of its own and does not natively support large data throughput, how to use its DA space has become a hard problem that tests the imagination of Layer 2 teams.

One conclusion is obvious: if a Layer 2 publishes unprocessed transaction data "directly" to Bitcoin blocks, it can achieve neither high throughput nor low fees. The mainstream solutions are either to compress the data as much as possible before uploading it to Bitcoin blocks, or to publish the data off the Bitcoin chain.

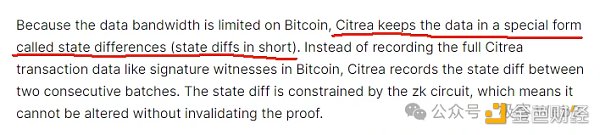

Among the Layer 2 projects that adopt the first approach, the most famous one may be Citrea, which intends to upload the state changes (state diff) of Layer 2 over a period of time, that is, the state change results on multiple accounts, together with the corresponding ZK proofs, to the Bitcoin chain. In this case, anyone can download the state diff and ZKP from the Bitcoin mainnet, and then monitor the state change results of Citrea. This method can compress the size of the data on the chain by more than 90%.

Although this greatly reduces the data size, the bottleneck is still obvious. If a large number of accounts change state in a short period of time, the Layer 2 must summarize all of those changes and upload them to the Bitcoin chain, so the final data publishing cost cannot be kept very low. The same effect can be seen in many Ethereum ZK Rollups.

Many Bitcoin Layer 2 projects simply take the second path: they use a DA solution off the Bitcoin chain, either building a DA layer themselves or using Celestia, EigenDA, and the like. B^Square, BitLayer, and the protagonist of this article, Merlin, all use this kind of off-chain DA scaling solution.

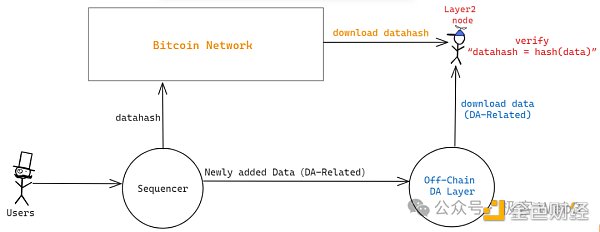

In Geek Web3's previous article, "Analysis of the New Technical Roadmap of B^2: The Necessity of DA and Verification Layers Off-Chain in Bitcoin", we mentioned that B^2 directly imitated Celestia and built an off-chain DA network that supports data sampling, named B^2 Hub. "DA data" such as transaction data or state diffs is stored off the Bitcoin chain, and only the datahash / Merkle root is uploaded to the Bitcoin mainnet.

This effectively treats Bitcoin as a trustless bulletin board: anyone can read the datahash from the Bitcoin chain. When you obtain DA data from an off-chain data provider, you can check whether it corresponds to the on-chain datahash, that is, whether hash(data1) == datahash1. If the two match, the off-chain data provider has given you the right data.
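The check described above can be sketched in a few lines of Python. This is only an illustration: SHA-256 is assumed as the hash function, and the names `data_hash` and `matches_onchain_hash` are hypothetical, not taken from Merlin or B^2.

```python
import hashlib


def data_hash(data: bytes) -> str:
    """Return the hex SHA-256 digest of a DA data blob (hash choice is an assumption)."""
    return hashlib.sha256(data).hexdigest()


def matches_onchain_hash(data: bytes, onchain_datahash: str) -> bool:
    """Check that data served off-chain matches the datahash read from Bitcoin."""
    return data_hash(data) == onchain_datahash


# Hypothetical batch, and the datahash that would have been posted on-chain.
batch = b"tx1|tx2|tx3"
posted = data_hash(batch)

print(matches_onchain_hash(batch, posted))           # True: provider served the right data
print(matches_onchain_hash(b"tx1|tx2|tx9", posted))  # False: data was altered
```

Note that this check only detects wrong data. It cannot help if no data is served at all, which is the withholding scenario discussed next.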

This process ensures that the data an off-chain node provides to you is tied to certain "clues" on Layer 1, which prevents the DA layer from maliciously serving false data. But there is a very important malicious scenario: what if the source of the data, the Sequencer, sends only the datahash to the Bitcoin chain and deliberately withholds the corresponding data so that no one can read it?

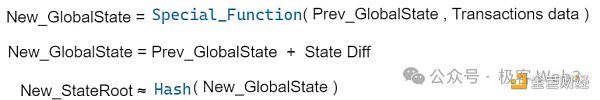

Similar scenarios include, but are not limited to, publishing only the ZK-Proof and StateRoot without the corresponding DA data (state diff or transaction data). People can verify the ZK-Proof and confirm that the computation from Prev_Stateroot to New_Stateroot is valid, but they do not know which accounts' states have changed. In this case, although users' assets are safe, no one can be sure of the actual state of the network, which transactions were included on the chain, or which contract states were updated. At that point the Layer 2 is basically equivalent to being down.

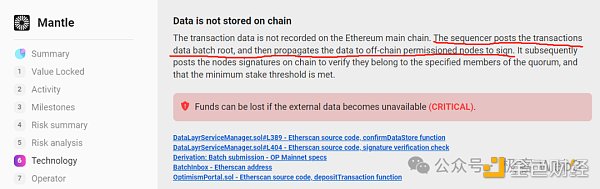

This is actually "data withholding". Dankrad of the Ethereum Foundation once briefly discussed similar issues on Twitter in August 2023. Of course, he mainly targeted something called "DAC".

Many Ethereum Layer 2s that use off-chain DA solutions set up several nodes with special permissions to form a committee called the Data Availability Committee (DAC). The DAC acts as a guarantor, attesting that the Sequencer has indeed released the complete DA data (transaction data or state diff) off-chain. The DAC nodes then collectively generate a multi-signature. As long as the multi-signature meets the threshold (for example, 2/4), the relevant contracts on Layer 1 assume by default that the Sequencer has passed the DAC's inspection and truthfully released the complete DA data off-chain.
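As a rough sketch of the threshold rule, the snippet below counts valid attestations over a datahash and accepts once the 2/4 threshold from the example is met. Everything here is hypothetical: the member names and keys are made up, and HMAC is used purely as a stdlib stand-in for a real public-key signature scheme, which an actual Layer 1 contract would use instead.

```python
import hashlib
import hmac

# Hypothetical committee: member name -> secret key. HMAC stands in for a
# real public-key signature only to keep this sketch stdlib-only.
MEMBER_KEYS = {
    "dac-1": b"key-1",
    "dac-2": b"key-2",
    "dac-3": b"key-3",
    "dac-4": b"key-4",
}
THRESHOLD = 2  # the 2/4 example from the text


def sign(member: str, datahash: bytes) -> bytes:
    """Produce a member's attestation over the datahash."""
    return hmac.new(MEMBER_KEYS[member], datahash, hashlib.sha256).digest()


def attestation_accepted(datahash: bytes, signatures: dict[str, bytes]) -> bool:
    """Accept if at least THRESHOLD distinct members signed this datahash."""
    valid = 0
    for member, sig in signatures.items():
        key = MEMBER_KEYS.get(member)
        if key is None:
            continue  # unknown signer: ignored
        expected = hmac.new(key, datahash, hashlib.sha256).digest()
        if hmac.compare_digest(sig, expected):
            valid += 1
    return valid >= THRESHOLD


datahash = hashlib.sha256(b"batch-42").digest()
sigs = {"dac-1": sign("dac-1", datahash), "dac-3": sign("dac-3", datahash)}
print(attestation_accepted(datahash, sigs))  # True: two valid signers
```

The sketch also makes the trust assumption visible: the check says nothing about whether the data is actually retrievable, only that enough committee members vouched for it.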

The DACs of Ethereum Layer 2s basically follow the POA model: only a few nodes that have passed KYC or are officially designated may join, which has made DAC synonymous with "centralization" and "consortium chain". In addition, in some Ethereum Layer 2s that adopt the DAC model, the sequencer sends DA data only to DAC member nodes and rarely uploads it anywhere else. Anyone who wants to obtain the DA data must get permission from the DAC, which is essentially no different from a consortium chain.

There is no doubt that a DAC should be decentralized. A Layer 2 does not have to upload DA data directly to Layer 1, but admission to the DAC should be open to the outside world to prevent a few parties from colluding. (For a discussion of malicious DAC scenarios, please refer to Dankrad's previous remarks on Twitter.)

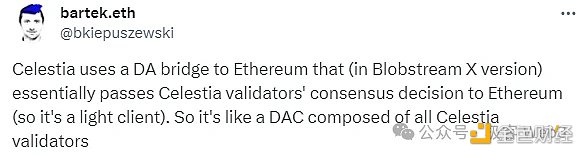

BlobStream, previously proposed by Celestia, essentially replaces the centralized DAC with Celestia. An Ethereum L2 sequencer can publish DA data to the Celestia chain. If 2/3 of the Celestia nodes sign for it, the Layer 2's dedicated contract deployed on Ethereum accepts that the sequencer has truthfully released the DA data, which in effect makes the Celestia nodes the guarantor. Considering that Celestia has hundreds of Validator nodes, this large DAC can be regarded as relatively decentralized.

The DA solution adopted by Merlin is actually similar to Celestia's BlobStream. Both open up admission to the DAC through POS, making it decentralized: anyone can run a DAC node as long as they stake enough assets. In Merlin's documentation, the DAC node described above is called an Oracle, and the documentation states that it will support staking of BTC, MERL, and even BRC-20 tokens to achieve a flexible staking mechanism, as well as delegated staking similar to Lido. (The Oracle's POS staking protocol is basically one of Merlin's core narratives, and the staking yields it offers are relatively high.)

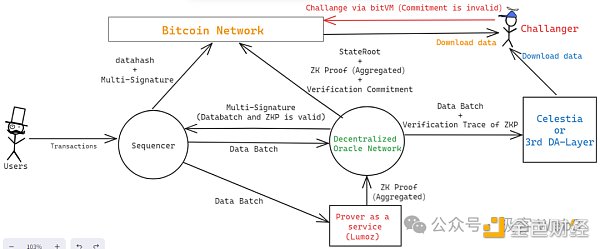

Here we briefly describe Merlin's workflow (picture below):

After receiving a large number of transaction requests, the Sequencer aggregates them and generates data batches, which are passed to the Prover node and the Oracle node (decentralized DAC).

Merlin's Prover node is decentralized and uses Lumoz's Prover as a Service. After receiving multiple data batches, the Prover mining pool generates the corresponding zero-knowledge proofs, and the ZKPs are then sent to the Oracle node for verification.

The Oracle node verifies whether the ZK Proof sent by Lumoz's ZK mining pool corresponds to the data batch sent by the Sequencer. If the two match and contain no other errors, verification passes. During this process, the decentralized Oracle nodes generate a multi-signature through threshold signatures, declaring to the outside world that the Sequencer has sent out the DA data in full and that the corresponding ZKP is valid and has passed the Oracle nodes' verification.

The Sequencer collects the multi-signature results from the Oracle nodes. When the number of signatures meets the threshold, it sends the signature information to the Bitcoin chain, along with the datahash of the DA data (data batch), for the outside world to read and confirm.

The Oracle node performs special processing on the computation it carries out when verifying the ZK Proof, generates a Commitment, and sends it to the Bitcoin chain, allowing anyone to challenge the Commitment. This process is basically the same as the fraud proof protocol of BitVM. If a challenge succeeds, the Oracle node that issued the Commitment is penalized economically. Of course, the data that the Oracle publishes to the Bitcoin chain also includes the hash of the current Layer 2 state, the StateRoot, and the ZKP itself, both of which must be published on the Bitcoin chain for external inspection.

A few details need to be explained here. First, the Merlin roadmap mentions that in the future the Oracle will back up DA data to Celestia. This way, Oracle nodes can prune local historical data and do not need to store it locally forever. At the same time, the Commitment generated by the Oracle Network is actually the root of a Merkle tree. Disclosing the root alone is not enough; the complete data set corresponding to the Commitment must also be made public. This requires a third-party DA platform, which can be Celestia, EigenDA, or another DA layer.
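To make the Merkle root point concrete, here is a minimal sketch of how such a root could be computed. It is not Merlin's actual construction: SHA-256, the leaf contents, and the rule of duplicating the last node on odd levels (as Bitcoin's block Merkle tree does) are all assumptions made for illustration.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Fold a list of leaves into a single 32-byte root."""
    if not leaves:
        raise ValueError("at least one leaf is required")
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # duplicate the last node on odd levels
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


# Hypothetical verification steps that the commitment would cover.
steps = [b"step-0", b"step-1", b"step-2"]
root = merkle_root(steps)
print(root.hex())
```

The root is a fixed 32 bytes no matter how many leaves it covers, so a challenger cannot recover or dispute any individual step from the root alone. That is why the full leaf set has to be available somewhere off the Bitcoin chain.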

Security Model Analysis: Optimistic ZKRollup+Cobo MPC Service

We have briefly described Merlin's workflow above, and its basic structure should now be clear. It is not difficult to see that Merlin, B^Square, BitLayer, and Citrea all basically follow the same security model: optimistic ZK-Rollup.

At first reading, this term may strike many Ethereum enthusiasts as strange. What is an "optimistic ZK-Rollup"? In the Ethereum community's understanding, the "theoretical model" of a ZK Rollup rests entirely on the reliability of cryptographic computation and needs no additional trust assumptions. The word "optimistic" is precisely what introduces a trust assumption: most of the time, people should optimistically assume that the Rollup is error-free and reliable, and if an error does occur, the Rollup operator can be punished through a fraud proof. This is the origin of the name Optimistic Rollup, also known as OP Rollup.

For the Ethereum ecosystem, the home of Rollups, an optimistic ZK-Rollup may seem a bit out of place, but it fits the current state of Bitcoin Layer 2 exactly. Due to technical limitations, a ZK Proof cannot be fully verified on the Bitcoin chain and can only be verified under special circumstances. Under this premise, the Bitcoin chain can really only support a fraud proof protocol: people can point out that a certain computation step in the off-chain ZKP verification process is wrong and challenge it through a fraud proof. Of course, this cannot compare with an Ethereum-style ZK Rollup, but it is already the most reliable and secure model that Bitcoin Layer 2 can achieve today.

Under this optimistic ZK-Rollup scheme, suppose N parties in the Layer 2 network have the authority to initiate challenges. As long as one of the N challengers is honest and reliable and can detect errors and initiate fraud proofs at any time, the Layer 2 state transition is safe. Of course, a more complete optimistic Rollup also needs its withdrawal bridge to be protected by the fraud proof protocol. At present, almost no Bitcoin Layer 2 can meet this requirement, and they rely on multi-signature/MPC instead. How to choose the multi-signature/MPC scheme has therefore become a question closely tied to Layer 2 security.

Merlin chose Cobo's MPC service for its bridge and adopted measures such as hot and cold wallet isolation. The bridged assets are jointly managed by Cobo and Merlin Chain, and any withdrawal must be processed jointly by the MPC participants of Cobo and Merlin Chain. In essence, the reliability of the withdrawal bridge is guaranteed by institutional credit endorsement. Of course, this is only a stopgap measure at this stage. As the project matures, the withdrawal bridge can be replaced by an "optimistic bridge" with a 1/N trust assumption by introducing BitVM and a fraud proof protocol, although this will be harder to implement (almost all official Layer 2 bridges currently rely on multi-signatures).

Overall, Merlin combines a POS-based DAC, a BitVM-based optimistic ZK-Rollup, and a Cobo-based MPC asset custody solution. It addresses the DA problem by opening up DAC admission, secures state transitions by introducing BitVM and a fraud proof protocol, and ensures the reliability of the withdrawal bridge by introducing the MPC service of Cobo, a well-known asset custody platform.

Two-step verification ZKP submission scheme based on Lumoz

Above, we laid out Merlin's security model and introduced the concept of the optimistic ZK-Rollup. Merlin's technical roadmap also discusses a decentralized Prover. As is well known, the Prover is a core role in the ZK-Rollup architecture: it is responsible for generating the ZK Proof for each batch released by the Sequencer. Generating zero-knowledge proofs consumes a great deal of hardware resources and is a very thorny problem.

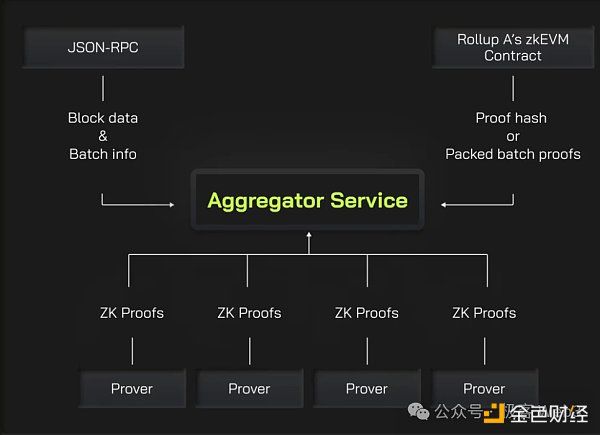

To speed up the generation of ZK proofs, parallelizing and splitting tasks is a basic operation. The so-called parallelization actually means dividing the task of generating ZK proofs into different parts, which are completed separately by different Provers, and finally the Aggregator aggregates multiple segments of Proof into a whole.
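The split-then-aggregate pattern can be sketched as follows. This is only a structural illustration: `prove_segment` and `aggregate` are hypothetical stand-ins that hash their inputs, since real proof generation and recursive aggregation depend on the proving system and are far more expensive.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def prove_segment(segment: bytes) -> bytes:
    # Stand-in for real proof generation: just a hash of the segment.
    return hashlib.sha256(b"proof:" + segment).digest()


def aggregate(segment_proofs: list[bytes]) -> bytes:
    # Stand-in for proof aggregation: hash of the ordered segment proofs.
    return hashlib.sha256(b"".join(segment_proofs)).digest()


def prove_batch(segments: list[bytes], workers: int = 4) -> bytes:
    """Prove each segment on a separate worker, then aggregate the results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() preserves input order, so the aggregate is deterministic.
        proofs = list(pool.map(prove_segment, segments))
    return aggregate(proofs)


segments = [b"txs 0-99", b"txs 100-199", b"txs 200-299"]
print(prove_batch(segments).hex())
```

In this sketch the workers are local threads; in a prover pool they would be separate machines, with the Aggregator collecting the segment proofs over the network.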

To speed up ZK proof generation, Merlin will adopt Lumoz's Prover as a Service solution, which brings together a large number of hardware devices into a mining pool, assigns computing tasks to different devices, and distributes the corresponding incentives, somewhat like POW mining.

In this decentralized Prover solution, there is a type of attack scenario, commonly known as a front-running attack: suppose an Aggregator has built a ZKP, and it sends the ZKP out in the hope of getting a reward. After other Aggregators see the content of the ZKP, they preemptively publish the same content before him, claiming that this ZKP was generated by themselves first. How to solve this situation?

Perhaps the most instinctive solution that everyone thinks of is to assign a specified task number to each Aggregator. For example, only Aggregator A can take Task 1, and others will not get a reward even if they complete Task 1. But there is a problem with this method, which is that it cannot resist single-point risk. If Aggregator A has a performance failure or is offline, Task 1 will be stuck and cannot be completed. Moreover, this method of assigning tasks to a single entity cannot improve production efficiency through competitive incentives, which is not a good solution.

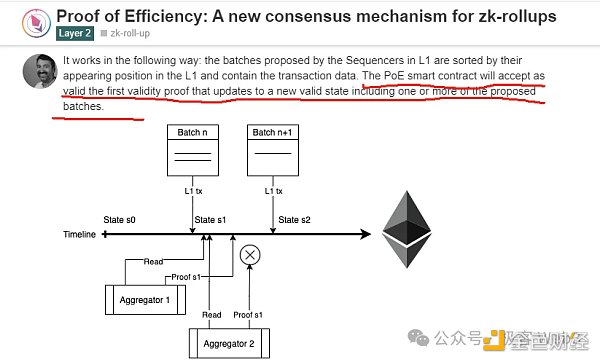

Polygon zkEVM once proposed a method called Proof of Efficiency in a blog post, which argued that different Aggregators should be encouraged to compete with one another and that incentives should be allocated on a first-come, first-served basis: the Aggregator that first submits a ZK-Proof to the chain gets the reward. However, the post did not explain how to solve the MEV front-running problem.

Lumoz adopts a two-step verification method for submitting ZK proofs. After an Aggregator generates a ZK proof, it does not send out the complete content first but only publishes a hash of the ZKP; more precisely, it publishes hash(ZKP + Aggregator Address). This way, even if others see the hash value, they do not know the corresponding ZKP content and cannot front-run it.

Simply copying the entire hash and publishing it first is pointless, because the hash commits to the address of a specific aggregator X. Even if aggregator A publishes the hash first, when the preimage of the hash is revealed, everyone will see that the aggregator address it contains is X's, not A's.
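The commit-then-reveal flow can be sketched as below. This is a simplified model, not Lumoz's implementation: `ProofRegistry` is a hypothetical stand-in for the on-chain contract, SHA-256 and plain byte concatenation are assumed for hash(ZKP + Aggregator Address), and ZKP validity checking is omitted.

```python
import hashlib


def commitment(zkp: bytes, aggregator: str) -> str:
    """Step 1: hash(ZKP + aggregator address), published before the proof itself."""
    return hashlib.sha256(zkp + aggregator.encode()).hexdigest()


class ProofRegistry:
    """Hypothetical on-chain registry of published commitments."""

    def __init__(self) -> None:
        self.commitments: dict[str, str] = {}  # commitment -> first submitter

    def commit(self, submitter: str, digest: str) -> None:
        self.commitments.setdefault(digest, submitter)

    def reveal(self, claimant: str, zkp: bytes) -> bool:
        """Step 2: the claim holds only if the preimage embeds the claimant's address."""
        return commitment(zkp, claimant) in self.commitments


registry = ProofRegistry()
zkp = b"zk-proof-bytes"
digest = commitment(zkp, "aggregator-X")

registry.commit("aggregator-A", digest)  # A copies X's hash and posts it first
registry.commit("aggregator-X", digest)  # X posts its own commitment

print(registry.reveal("aggregator-X", zkp))  # True: the hash binds to X's address
print(registry.reveal("aggregator-A", zkp))  # False: A never committed hash(ZKP + A)
```

A real protocol would also need to enforce ordering, for example by rejecting commitments posted after the proof has been revealed. Otherwise A could compute hash(ZKP + A) once the ZKP is public and commit it late.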

Through this two-step verification ZKP submission scheme, Merlin (Lumoz) can solve the front-running problem in the ZKP submission process and thereby enable highly competitive incentives for zero-knowledge proof generation, which in turn speeds up ZKP generation.

Merlin's Phantom: Multi-chain interoperability

According to Merlin's technical roadmap, Merlin will also support interoperability with other EVM chains. The implementation path is basically the same as the earlier Zetachain approach. If Merlin is the source chain and another EVM chain is the target chain, then when a Merlin node detects a cross-chain interoperability request issued by a user, it triggers the subsequent workflow on the target chain.

For example, an EOA account controlled by the Merlin network can be deployed on Polygon. When a user issues a cross-chain interoperability instruction on Merlin Chain, the Merlin network first parses its content and generates the transaction data to be executed on the target chain. The Oracle Network then signs the transaction via MPC to produce its digital signature. After that, Merlin's Relayer node broadcasts the transaction on Polygon and completes the subsequent operations using the assets in the Merlin EOA account on the target chain.

When the operation requested by the user is complete, the resulting assets are forwarded directly to the user's address on the target chain; in theory, they can also be transferred directly back to Merlin Chain. This solution has some obvious advantages: it avoids the fee losses that cross-chain bridge contracts incur in traditional asset bridging, and the security of cross-chain operations is guaranteed directly by Merlin's Oracle Network, without relying on external infrastructure. As long as users trust Merlin Chain, they can assume that such cross-chain interoperability is sound.

Summary

In this article, we have briefly interpreted the general technical solution of Merlin Chain, and we hope it helps more people understand Merlin's general workflow and gain a clearer picture of its security model. Given the booming Bitcoin ecosystem, we believe this kind of technical explainer is valuable and needed by the general public. We will follow Merlin, BitLayer, B^Square, and other projects over the long term and analyze their technical solutions in more depth. Please stay tuned!
