DeepSeek has really put the large-model race on fast-forward.

Late last night, OpenAI rushed out its latest reasoning model series, o3-mini.

It comes in three reasoning-effort levels: low, medium, and high.

In ChatGPT, o3-mini and o3-mini-high are already live.

According to OpenAI, the o3 series aims to push the frontier of cost-effective reasoning.

ChatGPT Plus, Team, and Pro users can access o3-mini starting today, and Enterprise access will follow a week later.

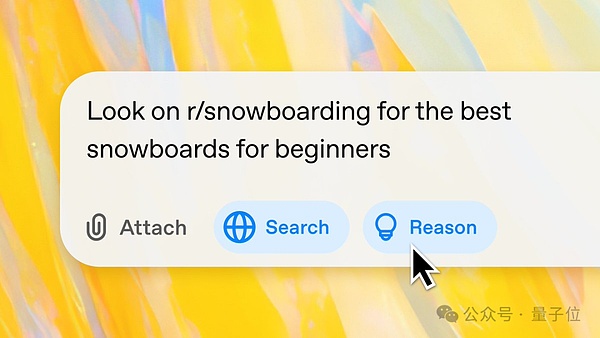

Free users can also try o3-mini, together with search, by selecting "Search+Reason".

Perhaps under pressure from DeepSeek, this is the first time OpenAI has made a reasoning model available to free users.

In a Reddit "Ask Me Anything" (AMA) session held afterward, CEO Sam Altman even made a rare public admission:

On open-weight AI models, (I personally think) we have been on the wrong side of history.

Meanwhile, within just a few hours, users were already putting it through frenzied testing...

Optimized for STEM reasoning, but still far pricier than DeepSeek-R1

Let's take a look at what the technical report says.

At the end of last year, OpenAI previewed o3-mini, which once again raised the bar for small models while matching o1-mini on cost and low latency.

At the time, CEO Altman said the official release would come in January. Right up against that deadline, the official o3-mini has finally arrived.

Like its predecessor o1-mini, it is optimized for STEM (science, technology, engineering, and mathematics), continuing the mini series' compact, efficient approach.

At medium reasoning effort alone, o3-mini already matches the o1 series on math and coding while responding faster.

In evaluations by human experts, o3-mini's answers were judged more accurate and clearer than o1-mini's in most cases: testers preferred o3-mini 56% of the time and observed a 39% reduction in major errors on difficult real-world questions.

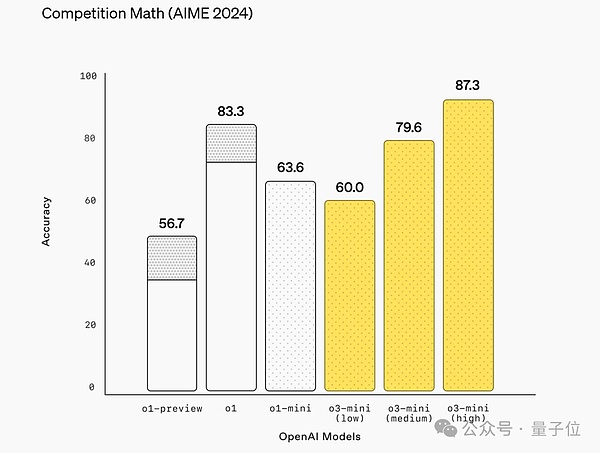

On math, o3-mini at low reasoning effort is on par with o1-mini; at medium effort it matches the full o1; and at high effort it outperforms every model in the o1 series.

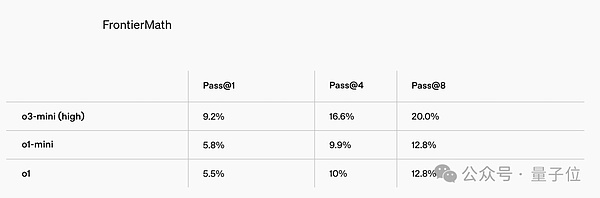

On FrontierMath, a set of extremely hard problems written by more than 60 leading mathematicians, o3-mini at high reasoning effort also improves markedly on the o1 series.

OpenAI also noted that when prompted to use a Python tool, o3-mini (high) solved more than 32% of the problems on the first attempt, including more than 28% of the challenging T3-tier problems.

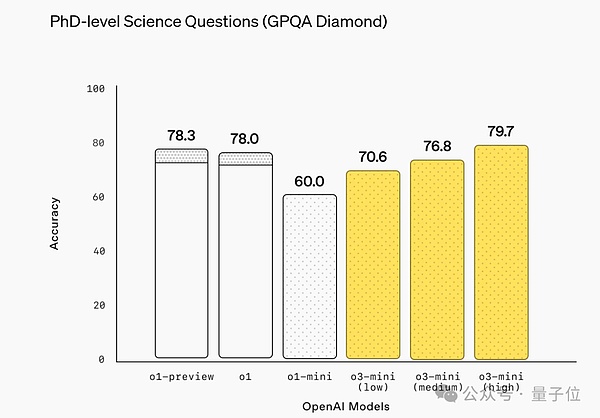

On science, o3-mini already pulls clearly ahead of o1-mini on PhD-level physics, chemistry, and biology questions, even at low reasoning effort.

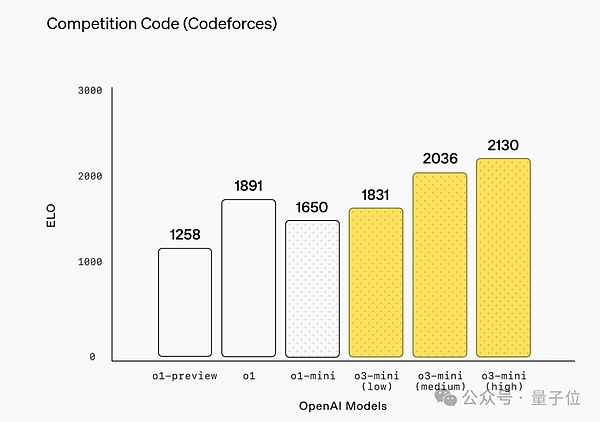

In coding, a key capability, o3-mini leads the o1 series at every reasoning-effort level.

LiveBench results show that o3-mini's lead keeps widening as reasoning effort increases.

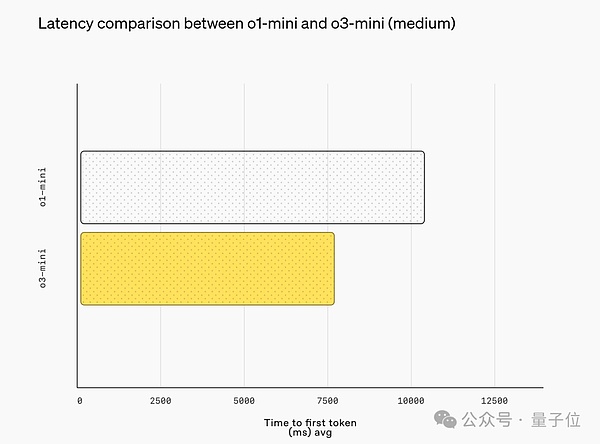

Notably, o3-mini achieves these gains while also responding faster: its average response time is 7.7 seconds, 24% faster than o1-mini's 10.16 seconds.

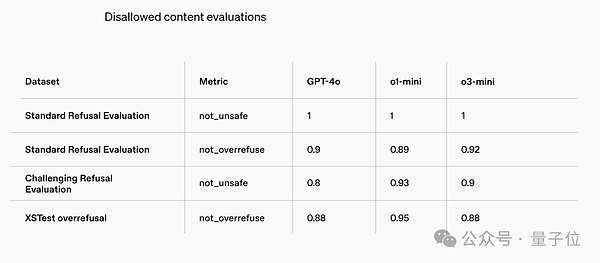
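Speed improvements can be quoted in two ways, which often causes confusion. The minimal sketch below uses only the two averages quoted above: the 24% figure is the drop in latency relative to o1-mini, which is equivalent to o3-mini being roughly 1.32 times as fast. The function names are our own, chosen for illustration.

```python
# Average response times quoted in the article, in seconds.
O1_MINI_S = 10.16
O3_MINI_S = 7.7


def percent_reduction(old: float, new: float) -> float:
    """Return how much lower `new` is than `old`, as a percentage of `old`."""
    return (old - new) / old * 100


def speedup(old: float, new: float) -> float:
    """Return old / new: how many times as fast the new model responds."""
    return old / new


if __name__ == "__main__":
    print(f"latency reduction: {percent_reduction(O1_MINI_S, O3_MINI_S):.1f}%")
    print(f"speedup: {speedup(O1_MINI_S, O3_MINI_S):.2f}x")
```

A 24% cut in waiting time and a 1.32x speedup describe the same measurement; calling it a "24% increase" would get the direction wrong.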

Finally, on safety, o3-mini clearly outperforms GPT-4o across several safety evaluations.

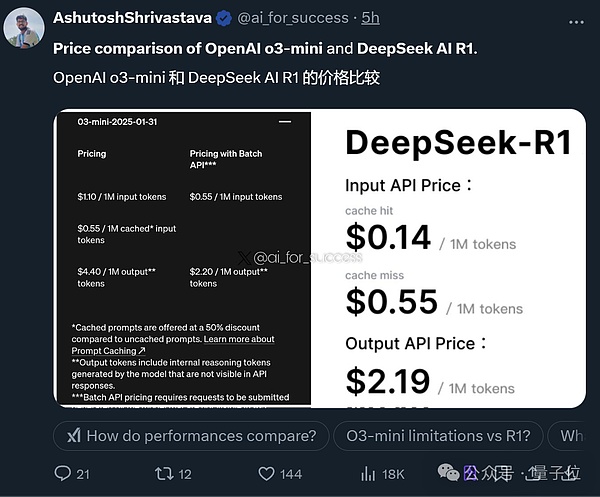

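On price, o3-mini ($1.10 per million input tokens and $4.40 per million output tokens) is still considerably more expensive than DeepSeek-R1, which charges $0.14 per million input tokens on a cache hit ($0.55 on a cache miss) and $2.19 per million output tokens.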
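To put the price gap in concrete numbers, here is a small cost calculator. It is a sketch that assumes the per-million-token list prices published at launch, for uncached input: o3-mini at $1.10 input / $4.40 output, and DeepSeek-R1 at $0.55 input (cache miss) / $2.19 output. These figures are our assumption, so check the vendors' current pricing pages; the `Pricing` class and the workload size are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """API list prices in US dollars per one million tokens (assumed values)."""
    input_per_m: float
    output_per_m: float

    def cost(self, input_tokens: int, output_tokens: int) -> float:
        """Dollar cost of a workload with the given token counts."""
        return (input_tokens * self.input_per_m
                + output_tokens * self.output_per_m) / 1_000_000


# Assumed launch list prices (uncached input); verify before relying on them.
O3_MINI = Pricing(input_per_m=1.10, output_per_m=4.40)
DEEPSEEK_R1 = Pricing(input_per_m=0.55, output_per_m=2.19)

if __name__ == "__main__":
    # Hypothetical workload: 2M input tokens and 0.5M output tokens.
    # Both providers bill the model's reasoning tokens as output, so real
    # output counts can be much larger than the visible answer.
    a = O3_MINI.cost(2_000_000, 500_000)
    b = DEEPSEEK_R1.cost(2_000_000, 500_000)
    print(f"o3-mini: ${a:.2f}  DeepSeek-R1: ${b:.2f}  ratio: {a / b:.2f}x")
```

Under these assumed prices the uncached gap is roughly 2x; with cached input the relative difference on the input side grows, since R1's cache-hit rate is lower still.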

As top user comments put it, DeepSeek-R1 is still the king of price-performance: faster, better, and cheaper.

By the way, OpenAI credited the team behind o3-mini as usual. This time the list is headed by Altman himself, with Carpus Chang and Kristen Ying as research leads, and it includes many familiar names, such as Hongyu Ren and Shengjia Zhao.

Users are frantically testing it

As mentioned above, users have already started testing it frantically.

Judging from early reviews, though, opinions on o3-mini's performance are mixed.

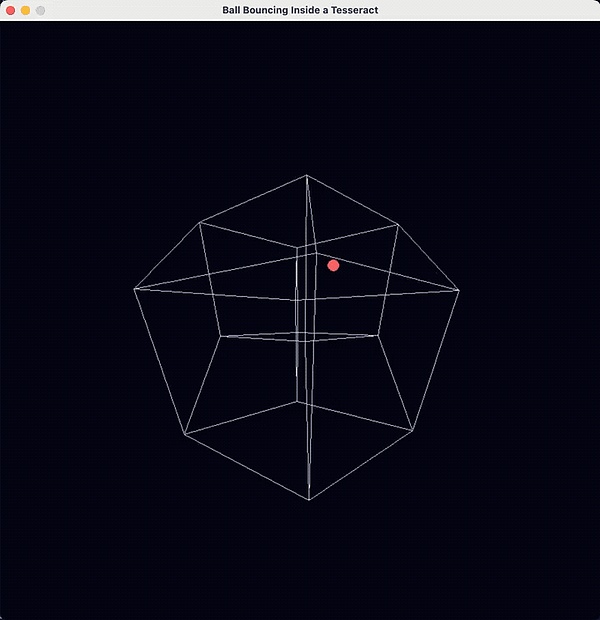

For example, on the task of writing a Python program for "a ball bouncing inside a 4D volume", some users consider o3-mini the best LLM.

Here is the result:

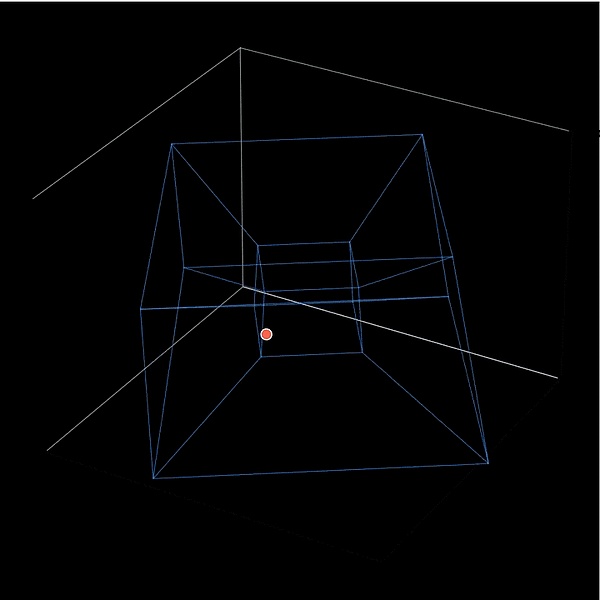

Other users then gave DeepSeek the same task. Judging by the output, o3-mini does slightly better:

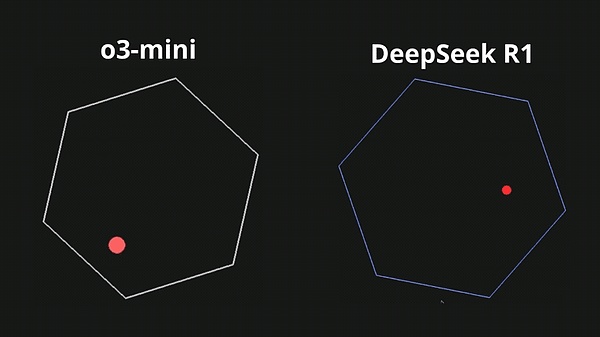

For a more direct comparison, users had both models simulate a ball bouncing inside a rotating hexagon, with the ball subject to gravity and friction. The difference between o3-mini and DeepSeek-R1 is quite obvious.
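The rotating-hexagon prompt is a popular test because it bundles several pieces of physics into one small program. The sketch below is our own minimal illustration of what the task asks for, not code produced by either model. It assumes a 2D hexagon spinning at constant angular speed about the origin, semi-implicit Euler integration, and a simple bounce model (restitution on the normal component plus damping of the tangential component as a crude stand-in for friction). The `step` function and all parameter values are hypothetical.

```python
import math


def step(pos, vel, t, dt, R=1.0, r=0.05, omega=1.0, g=9.81,
         restitution=0.8, friction=0.1):
    """Advance a ball inside a hexagon rotating about the origin by one step.

    R: hexagon circumradius, r: ball radius, omega: angular speed (rad/s).
    Returns the new (pos, vel) tuples.
    """
    x, y = pos
    vx, vy = vel
    # Semi-implicit Euler: apply gravity to the velocity, then move.
    vy -= g * dt
    x += vx * dt
    y += vy * dt

    theta = omega * (t + dt)                  # hexagon angle at end of step
    apothem = R * math.cos(math.pi / 6)       # centre-to-edge distance
    for i in range(6):
        # Outward unit normal of edge i; vertices sit at theta + i*60 degrees.
        ang = theta + i * math.pi / 3 + math.pi / 6
        nx, ny = math.cos(ang), math.sin(ang)
        pen = x * nx + y * ny + r - apothem   # > 0 means overlap with this wall
        if pen <= 0:
            continue
        # Push the ball back inside along the normal.
        x -= pen * nx
        y -= pen * ny
        # Velocity of the rotating wall at the contact point (omega x c).
        cx, cy = x + r * nx, y + r * ny
        ux, uy = -omega * cy, omega * cx
        # Bounce in the wall's frame so the spin is transferred to the ball.
        rvx, rvy = vx - ux, vy - uy
        vn = rvx * nx + rvy * ny
        if vn > 0:                            # moving into the wall
            tx, ty = rvx - vn * nx, rvy - vn * ny
            # Reverse and damp the normal part; damp the tangential part as a
            # crude friction stand-in (not a Coulomb friction model).
            rvx = -restitution * vn * nx + (1 - friction) * tx
            rvy = -restitution * vn * ny + (1 - friction) * ty
            vx, vy = ux + rvx, uy + rvy
    return (x, y), (vx, vy)


if __name__ == "__main__":
    pos, vel, t, dt = (0.0, 0.0), (1.5, 0.0), 0.0, 1 / 240
    for _ in range(240 * 5):                  # simulate five seconds
        pos, vel = step(pos, vel, t, dt)
        t += dt
    print(f"t={t:.2f}s pos=({pos[0]:.3f}, {pos[1]:.3f})")
```

Computing the bounce relative to the moving wall is what lets the spinning hexagon "throw" the ball; a version that simply reflects off static walls misses that effect, which is one of the things this prompt tends to expose.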

o3-mini can also handle more complex tasks, such as simulating 100 bouncing yellow balls inside a sphere.

Another example is asking o3-mini to design a game in which two snakes compete against each other:

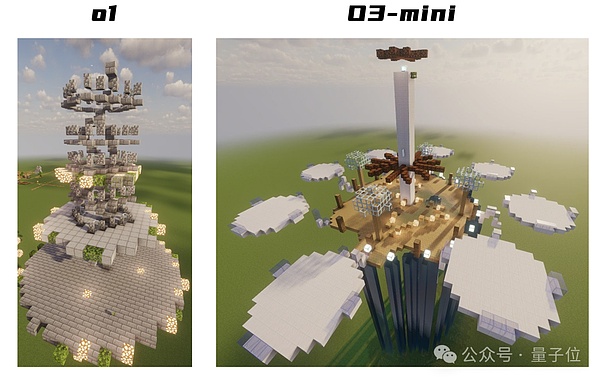

Besides DeepSeek, users also compared o3-mini with o1, for example on generating "a huge, amazing, epic floating city".

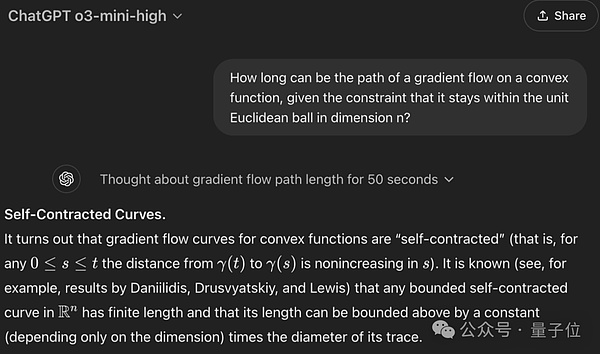

Another user posed a trick question that almost every large model gets wrong, and was surprised to find that o3-mini answered it correctly:

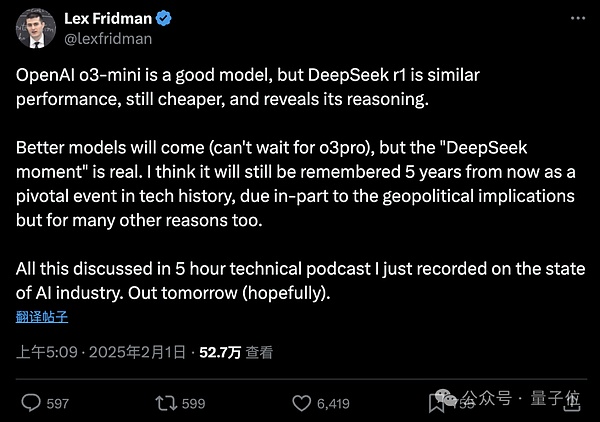

Still, well-known podcaster Lex Fridman commented on o3-mini:

OpenAI o3-mini is a good model, but DeepSeek R1 has similar performance, a lower price, and reveals its reasoning process.

Better models will appear (can't wait for o3-pro), but the "DeepSeek moment" is real. I think it will still be remembered five years from now as a turning point in the history of technology.

One More Thing

Just a few hours after o3-mini went live, Altman and his team held an "Ask Me Anything" (AMA) session on Reddit.

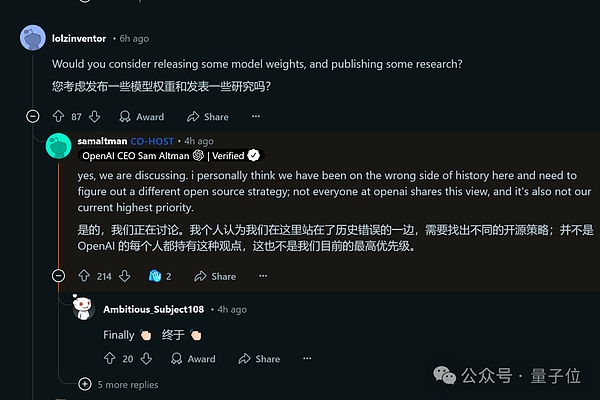

With the open-source DeepSeek having recently shaken up the AI world, Altman made a rare public admission:

On open-weight AI models, (I personally think) we have been on the wrong side of history.

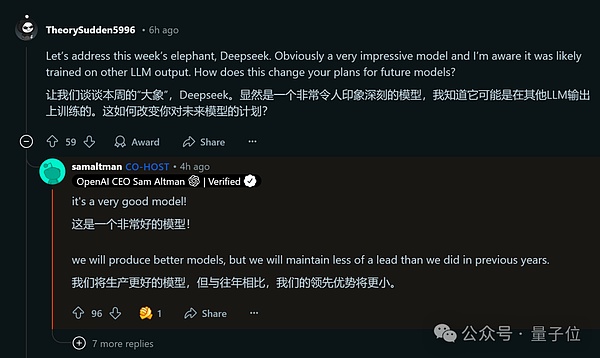

He even admitted that OpenAI's lead will not be as large as before:

DeepSeek is indeed excellent, and we will continue to develop better models, but the lead will be smaller.

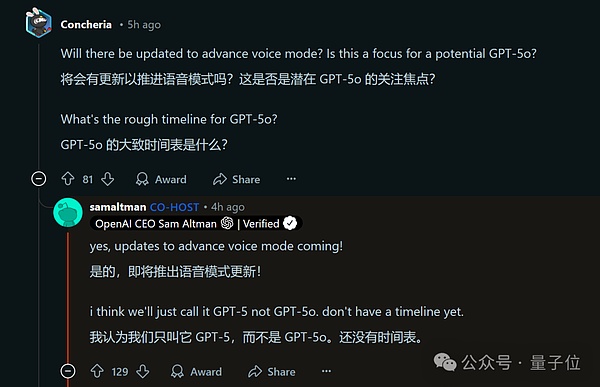

Some of OpenAI's upcoming plans were also revealed.

For example, Advanced Voice Mode is about to get an update, and the next flagship model will simply be called GPT-5 rather than GPT-5o, though there is no specific timeline yet.

In addition, OpenAI's reasoning models will gain support for calling more tools.

Finally, the full o3 also came up, but its release still seems some way off...
