Author: Chen Jian Source: X, @jason_chen998

At this stage there are only three sectors in crypto with real tailwinds: AI, TG (Telegram), and Meme, and VCs cannot get into the latter two. On top of that, money was too easy to make in the last cycle: A16Z, Paradigm, and Polychain raised large funds with little effort and are still waiting to deploy them, so now all they can do is pile into AI. In terms of capacity to absorb funding, then, AI has taken in all of this capital apart from what goes to the large public chains. For example, besides Sahara, which I covered before, Vana has just officially announced investment from Paradigm, Coinbase, and Polychain.

If AI+Web3 projects are classified from the bottom up, there are three layers: data, computing power, and applications. Along the industry chain, data comes first, then a model is built on top of it, then the model is trained with computing power, and finally it is delivered to applications. Because data sits upstream in the chain and is scarce, it is also the sector with the highest valuation ceiling.

As for the data problem in AI and why Web3 can solve it, I believe everyone has heard it so many times that their ears are worn out. It is the same old story, repeated over and over, about evil and shameless big companies selling the data their users contributed. The story keeps being told because the problem is very serious and still unsolved. The most typical case is Facebook's Meta Pixel: 30% of the world's websites have installed this script, which sends your usage data directly to Facebook. Another is the 60 million US dollar contract between Reddit and Google, which hands users' data straight to Google for training. Needless to say, YouTube and Twitter surely have designs of their own as well. So if blockchain can solve a problem as big as equal rights over AI data, in the world's most profitable industry, it will truly go from being labeled a casino to being the hope of the whole village. This is a track that is both respectable and profitable and can raise a lot of money, which is why the big VCs like to invest in it.

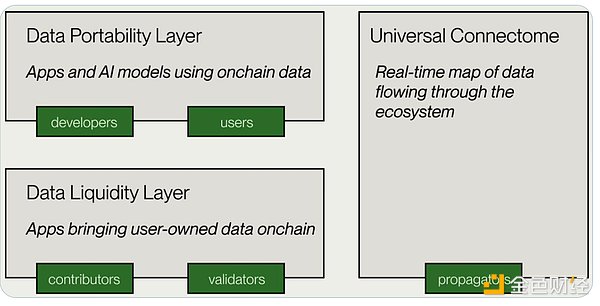

Many projects in recent years have tried to solve the data equality problem, with a wide variety of approaches, and the field is visibly maturing: from the early days of simply putting data on-chain to confirm ownership, to today's one-stop platforms. The figure below shows Vana's architecture, which is actually very simple, with only two layers. The bottom data liquidity layer, made up of DLPs (Data Liquidity Pools), collects data and puts it on-chain, and the upper data application layer provides applications with structured, usable data. The core of Vana is the DLP, which also accounts for the largest share of the economic model and the airdrop.

There is not just one DLP, but many. Each DLP has its own independent smart contract, verification method, nodes, and other systems. It is worth noting that although there is no threshold for applying to create a DLP, there will be only 16 DLP slots on mainnet. Under the current economic model, DLP creators and participants will receive 17.5% of the total token incentives over two years, and the higher a DLP ranks, the larger its share of the incentives. So this is a very fat piece of meat, and the competition is fierce.

The operating mechanism of the DLP is also the core of Vana. A DLP is equivalent to a "data DAO" that many people can contribute to. Each one corresponds to a specific type of data scenario, such as TwitterDAO or RedditDAO, and everyone can contribute data to it together. You can export your data from these platforms, or get it out with crawlers or other means, and then upload it to earn money. The logic is that since these platforms sell your data anyway, why not build an open data marketplace where the data is uploaded by the data owners themselves? The problem is how to ensure the validity and completeness of that data. What if it is just a pool that anyone can stuff with whatever they like? Vana builds the DLP system on two pillars: technology and economic-model governance.

As shown in the figure below, there are four things you can do with a DLP. First, you can create one directly. As the creator of a DLP you get 40% of its tokens, but creating one is very difficult and far more than filling in a form. Besides defining the data source, data type, and other basic information for your DLP, you also have to solve a series of problems yourself, such as the smart contract and the data verification method. Every type of data is different, and you have to ensure that what users upload is valid, so verification methods differ completely from one DLP to the next. For example, as the creator of the TwitterDAO DLP, you must first find a way to tell would-be contributors how to get their Twitter data out effectively. Second, and more importantly, you have to develop the verification technology to match. That is, every time a user uploads data, you need to verify whether it really comes from Twitter, whether its format and content are valid, whether it is junk generated by bots, and so on. Each DLP creator is therefore effectively a professional studio specializing in the data of one particular field.
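The kind of per-upload checks described above can be sketched roughly as follows. This is only an illustration under stated assumptions, not Vana's or any real DLP's verification code: the field names in `REQUIRED_FIELDS`, the JSON export format, and the 50% duplicate threshold are all hypothetical, and proving that data genuinely came from Twitter is not attempted here.

```python
import json
from dataclasses import dataclass

# Hypothetical required fields for one exported record (an assumption,
# not Twitter's real export schema).
REQUIRED_FIELDS = {"id", "created_at", "text"}


@dataclass
class ValidationResult:
    ok: bool
    reason: str


def validate_contribution(raw: str, seen_texts: set) -> ValidationResult:
    """Run toy format, content, and duplicate checks on one uploaded file."""
    # Format check: the upload must parse as a non-empty JSON list.
    try:
        records = json.loads(raw)
    except json.JSONDecodeError:
        return ValidationResult(False, "not valid JSON")
    if not isinstance(records, list) or not records:
        return ValidationResult(False, "expected a non-empty list of records")

    # Content check: every record needs the required fields and some text.
    for rec in records:
        if not isinstance(rec, dict) or not REQUIRED_FIELDS.issubset(rec):
            return ValidationResult(False, "record is missing required fields")
        if not isinstance(rec["text"], str) or not rec["text"].strip():
            return ValidationResult(False, "empty text")

    # Crude junk heuristic (hypothetical threshold): reject uploads where
    # fewer than half of the records carry text not seen before.
    texts = [rec["text"] for rec in records]
    fresh = {t for t in texts if t not in seen_texts}
    if len(fresh) < 0.5 * len(texts):
        return ValidationResult(False, "mostly duplicate content")

    seen_texts.update(fresh)
    return ValidationResult(True, "accepted")
```

A DLP would call something like `validate_contribution(upload, seen)` with a shared `seen` set, so that re-uploading the same export is rejected the second time. Real bot detection and source attestation are much harder, which is the point the paragraph above makes.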

So creating a DLP directly is still very difficult for ordinary people. But if you work in-house on data analysis at ByteDance or Alibaba, or you come from a professional big data company, creating a DLP is very easy, and once yours is selected for mainnet, you can make money with little effort.

Second, if you do not have the ability to create a DLP, you can run a node for one instead: once someone else has written the verification code, you, as a node, run that code to verify user-submitted data and broadcast it, and you earn money for doing so.

Third, as an ordinary user, you can join a DLP, export your data by following its documentation, and contribute it. If your data is used, you make money.

Fourth, you can stake. As mentioned earlier, besides being limited to 16, DLPs are also ranked, and the higher the ranking, the more money a DLP gets. The ranking is based on the amount of Vana tokens staked. As a Vana holder, you vote for the DLP you think is best by staking to it, which raises that DLP's ranking and earns it more incentives. The more money a DLP makes, the more validators and contributors it attracts, forming a positive spiral. As a staker, you get 20% of the corresponding DLP's incentives. The following figure shows the DLP ranking in the current testnet stage. Some of them are already usable: for example, I just tested exporting my ChatGPT data and uploading it to one of the DLPs. Sixteen of these testnet DLPs will eventually win out and enter mainnet, so if you are interested, you can create a DLP and see whether you can grab a slot.

At the same time, the question is how to ensure that a DLP's data will not leak. What if DLPs steal data from each other, or a third party gets the data directly without buying it through the platform? First of all, although Vana is an EVM-compatible Layer 1, not all of this data goes on-chain. Only the root data and proof data are put on-chain, and the rest is isolated inside a Trusted Execution Environment (TEE) based on Intel SGX to prevent leakage. This is consistent with the TEE-based protection technology used by @puffer_finance that I introduced before.
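The stake-based ranking and the percentage splits mentioned in this article can be sketched as follows. This is a minimal illustration, not Vana's actual contract logic: the function names are hypothetical, ties are broken only by sort order, and the article does not say how the remaining 40% of a DLP's incentives is divided, so it is left as an explicit `unspecified` bucket. How incentives scale with rank is also not given, so it is not modeled.

```python
MAINNET_SLOTS = 16   # from the article: only 16 DLP slots on mainnet
CREATOR_PCT = 40     # from the article: the creator gets 40%
STAKER_PCT = 20      # from the article: stakers get 20%


def top_dlps(stakes: dict, slots: int = MAINNET_SLOTS) -> list:
    """Return DLP names ordered by staked amount (highest first), cut to `slots`."""
    ranked = sorted(stakes.items(), key=lambda kv: kv[1], reverse=True)
    return [name for name, _ in ranked[:slots]]


def split_reward(reward: int) -> dict:
    """Split one DLP's reward (in whole token units) by the stated percentages."""
    creator = reward * CREATOR_PCT // 100
    stakers = reward * STAKER_PCT // 100
    # The article does not say who receives the remainder.
    return {
        "creator": creator,
        "stakers": stakers,
        "unspecified": reward - creator - stakers,
    }


# Usage: 20 hypothetical testnet DLPs, where "dlp19" has the most stake.
stakes = {f"dlp{i}": i for i in range(20)}
winners = top_dlps(stakes)
print(winners[0], len(winners))
print(split_reward(1000))
```

Integer percentages are used instead of floats so the three buckets always sum back to the original reward.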

Finally, although AI may become the hope of the entire crypto community, the Web3+AI story today is still only a narrative. Whether systems designed like this can truly be put into practice remains a very long road. Beyond the technology, the more serious challenge is whether the model is really commercially viable. For example, when Google wants to buy data to train models, should it simply spend 60 million to have Reddit export the ready-made, structured data in its database, or go to some platform to buy data maintained and contributed by users? The logic may be self-consistent inside the circle, but whether it holds up in real business competition, where everything comes down to ROI, is something that will take a long time to find out.
