Original title: Why I support privacy

Author: Vitalik, founder of Ethereum; Translator: Baishui, Golden Finance

Special thanks to Balvi volunteers Paul Dylan-Ennis, pcaversaccio, vectorized, Bruce Xu and Luozhu Zhang for discussions and feedback.

Recently, I have been focusing more and more on improving the state of privacy in the Ethereum ecosystem. Privacy is an important guarantor of decentralization: whoever holds the information holds the power, so we need to avoid centralized control of information. When people in the real world worry about centrally operated technical infrastructure, the worry is sometimes about operators changing the rules unexpectedly or deplatforming users, but just as often it is about data collection. While the cryptocurrency space originated with projects like Chaumian Ecash that put digital financial privacy front and center, it has more recently undervalued privacy for a very bad reason: before ZK-SNARKs we had no way to provide privacy in a decentralized way, so we downplayed its importance and focused on the other guarantees we could provide at the time.

Today, however, privacy can no longer be ignored. Artificial intelligence is dramatically increasing the power of centralized data collection and analysis, while also greatly expanding the scope of the data we voluntarily share. In the future, new technologies like brain-computer interfaces will bring even more challenges: we may literally be talking about AI reading our minds. At the same time, we have more powerful tools to protect privacy than the cypherpunks of the 1990s could have imagined, especially in the digital realm: efficient zero-knowledge proofs (ZK-SNARKs) can protect our identities while revealing enough information to prove that we are trustworthy; fully homomorphic encryption (FHE) lets us compute on data without seeing it; and obfuscation techniques may soon offer even more.

Privacy doesn’t mean isolation, it means solidarity.

At this point, it’s worth recalling the question: Why do we need privacy? Everyone’s answer is different. In this post, I’ll give my answer, which is divided into three parts:

Privacy is freedom: Privacy gives us the space to live in a way that suits our needs without having to worry about how our behavior will be viewed in various political and social games.

Privacy is order: Many of the mechanisms that underlie the basic operation of society rely on privacy to function properly.

Privacy is progress: If we can find new ways to selectively share information while protecting it from abuse, we can unlock tremendous value and accelerate technological and social progress.

Privacy is freedom

Back in the early 2000s, ideas similar to those outlined in David Brin’s 1998 book The Transparent Society were prevalent: technology would make information around the world far more transparent, and while this would bring some drawbacks and require constant adaptation, it was on the whole a very good thing, and we could make it fair by ensuring that citizens could surveil (or, more precisely, sousveil) their governments too. In 1999, Sun Microsystems CEO Scott McNealy famously exclaimed: “Privacy is dead, get over it!” This mentality was prevalent during the early conception and development of Facebook, which banned pseudonymous identities. I personally remember experiencing the tail end of this mentality at a Huawei event in Shenzhen in 2015, when a (Western) speaker casually mentioned that “privacy is dead.”

The Transparent Society embodied the best and brightest that the “privacy is dead” ideology had to offer: it promised a better, more just, more equitable world that used the power of transparency to hold governments accountable rather than to oppress individuals and minorities. Yet, in hindsight, even this philosophy was clearly a product of its time, written at the height of enthusiasm for global cooperation, peace, and the “end of history,” and it relied on a series of overly optimistic assumptions about human nature. Chief among these:

The top levels of global politics will generally be well-intentioned and rational, making vertical privacy (i.e., not revealing information to powerful people and institutions) increasingly unnecessary. Abuses of power will generally be localized to specific regions, so the best way to combat these abuses is to expose them to the light of day.

Culture will continue to progress until horizontal privacy (i.e., not revealing information to other members of the public) is no longer necessary. Nerds, homosexuals, and eventually everyone else can stop hiding in the closet because society will stop being harsh and judgmental about people's unique traits and become more open and inclusive.

Today, there is no major country for which the first assumption is broadly agreed to be true, and quite a few for which it is broadly agreed to be false. On the second front, cultural tolerance is also rapidly regressing: simply searching Twitter for phrases such as "bullying is good" turns up one piece of evidence, and it is easy to find more.

I personally have the misfortune to often encounter the disadvantages of the "transparent society", because every move I make outside has the potential to accidentally become a public report in the media:

The worst offender was someone who filmed a one-minute video of me using my laptop in Chiang Mai and then posted it on Xiaohongshu, where it immediately received thousands of likes and reposts. Of course, my own situation is far from the human norm, but this is always true of privacy: people whose lives are relatively normal need privacy less, while people whose lives deviate from the norm, in whatever direction, need it more. Once you add up all the directions that matter, the number of people who really need privacy is quite large, and you never know when you might become one of them.

This is a big reason why privacy is often underestimated: it is not just about your situation and your information today, but also about the unknowns of what will happen to that information in the future (and how it will affect you). Privacy from corporate pricing mechanisms is a niche concern today, even among privacy advocates, but it may become a growing problem with the rise of AI-based analytical tools: the more a company knows about you, the more likely it is to offer you a personalized price that maximizes the amount it can extract from you multiplied by the probability that you will pay.

I can express my general argument for privacy as freedom in one sentence as follows:

Privacy gives you the freedom to live your life in a way that best suits your personal goals and needs, without having to constantly balance every action between the “private game” (your own needs) and the “public game” (how various other people, through various mechanisms including social media cascades, business incentives, politics, institutions, etc., will view and respond to your actions).

Without privacy, everything becomes a constant struggle over “what other people (and bots) will think of what I do”—whether it’s powerful people, corporations, or peers, both present and future. With privacy, we maintain a balance. Today, that balance is rapidly eroding, especially in the physical realm. The default path of modern tech capitalism, eager to find a business model that extracts value from users without them explicitly paying, is further eroding that balance (even into highly sensitive areas like, eventually, our own minds). So we need to counteract that influence and be more explicit in our support for privacy, especially in the realm where we can most practically do so: in the digital realm.

So why not allow government backdoors?

There is a common response to the above reasoning: the privacy harms I have described are largely about the public having too much access to our private lives, and even where abuse of power is concerned, they are about corporations, bosses, and politicians having too much access. But, the response goes, we won’t let the public, corporations, bosses, and politicians have all that data. Instead, we’ll let a small group of well-trained, well-vetted law enforcement professionals look at data taken from street surveillance cameras and from wiretaps on internet cables and chat apps, with strict accountability procedures in place so that no one else finds out.

This is a quietly but widely held position, so it is critical to address it explicitly. Even when implemented with good intentions and high quality standards, such strategies are inherently unstable for the following reasons:

In reality, it is not just governments that hold this data but also all sorts of corporate entities, of varying quality. In the traditional financial system, KYC and payment information is held by payment processors, banks, and a variety of other intermediaries. Email providers see vast amounts of data of all kinds. Telecom companies know your location and regularly resell it illegally. Overall, regulating all of these entities rigorously enough to ensure that they truly take care of user data is a huge effort for both the regulators and the regulated, and one that may be incompatible with maintaining a competitive free market.

There will always be concerns about the misuse of data (including sale to third parties) by individuals with access. In 2019, several Twitter employees were charged and subsequently convicted of selling personal information of dissidents to Saudi Arabia.

Data can be hacked at any time. In 2024, data that US telecommunications companies were legally required to collect was hacked, allegedly by hackers from the Chinese government. In 2025, a large amount of sensitive personal data held by the Ukrainian government was hacked by Russian hackers. On the other hand, highly sensitive Chinese government and corporate databases have also been hacked, including by the US government.

Regime change is possible. A government that is trustworthy today may not be trustworthy tomorrow. A person in power today may be persecuted tomorrow. A police agency that maintains impeccable standards of respect and decorum today may find itself reduced to all kinds of gleeful cruelty ten years from now.

From an individual's perspective, if their data is stolen, they cannot predict whether and how it will be misused in the future. By far, the safest way to handle large-scale data is to collect as little data centrally as possible from the beginning. Data should be held by users themselves to the greatest extent possible, and encryption should be used to aggregate useful statistics without compromising personal privacy.

The argument that the government should be able to obtain any information with a warrant because that’s how things have always worked misses a key point: historically, the amount of information that could be obtained with a warrant was far lower than what is available today, and even lower than what would be available if the strictest proposed internet privacy protections were universally adopted. In the 19th century, the average conversation happened once, by voice, and was never recorded by anyone. The moral panic over “going dark” is therefore completely ahistorical: ordinary conversations, and even financial transactions, have been completely and unconditionally private for thousands of years.

An ordinary conversation in 1950. At no point was the conversation recorded, monitored, "lawfully intercepted", subjected to AI analysis, or otherwise viewed by anyone other than the people taking part in it at the time.

Another important reason to minimize centralized data collection is that a large portion of global communications and economic interactions are international in nature. If everyone is in the same country, then it is at least arguable that the "government" should have access to the data on their interactions. But what if people are in different countries? Of course, in principle you could try to come up with a "galactic brain" scheme that maps everyone's data to some entity that has legal access to them - although even then you'd have to deal with a lot of edge cases involving multiple people's data. But even if you could do that, that's not the realistic default outcome. The realistic default outcome of government backdoors is that data is centralized in a few central jurisdictions that own everyone's data because they control the apps - essentially global technological hegemony. Strong privacy is by far the most stable alternative.

Privacy is order

For more than a century, it has been recognized that a key technical element of a functioning democracy is the secret ballot: no one knows who you voted for, and you cannot prove it to anyone even if you really want to. If secret ballots are not the default, voters are exposed to all sorts of side incentives that influence how they vote: bribes, promises of retroactive rewards, social pressure, threats, and so on.

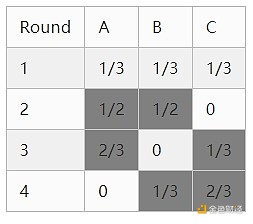

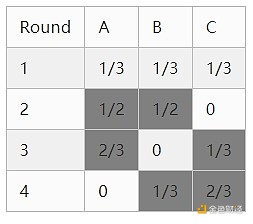

A simple mathematical argument shows that such side incentives completely undermine democracy: in an election with N people, your chance of influencing the outcome is only about 1/N, so any consideration of which candidate is better or worse is divided by N. Meanwhile, “side games” (e.g., voter bribery, coercion, social pressure) affect you directly based on how you vote (rather than based on the overall voting outcome), so they are not divided by N. Unless side games are tightly controlled, they will by default overwhelm the entire game, drowning out any consideration of which candidate’s policies are actually better.
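The arithmetic behind this argument can be sketched in a few lines. This is only an illustration of the 1/N scaling described above; the function names and the example values (a policy difference worth 10,000 units to the voter, a side payment of 1 unit) are hypothetical and not taken from the original post.

```python
def honest_stake(policy_value: float, n_voters: int) -> float:
    """Expected personal gain from voting for the better candidate.

    Assumes the chance of being the deciding vote is roughly 1/N,
    so the value of the better policy is divided by N.
    """
    return policy_value / n_voters


def side_game_wins(policy_value: float, n_voters: int, side_payment: float) -> bool:
    """True if a side incentive (bribe, threat, social pressure) outweighs
    the honest stake. The side payment depends only on how you vote,
    so it is NOT divided by N."""
    return side_payment > honest_stake(policy_value, n_voters)


if __name__ == "__main__":
    value = 10_000.0  # hypothetical: how much the better policy is worth to you
    bribe = 1.0       # hypothetical: a tiny side payment
    for n in (100, 10_000, 1_000_000):
        print(n, honest_stake(value, n), side_game_wins(value, n, bribe))
```

With these made-up numbers the honest stake is larger than the bribe in a 100-person vote, but in a million-person election even a one-unit side payment dominates, which is the point of the argument: without a secret ballot, side games scale and honest incentives do not.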

This doesn’t just apply to national-scale democracy. In theory, it applies to almost any principal-agent problem in business or government:

Judges decide how to rule on cases

Government officials decide which companies to award contracts or grants

Immigration officials decide whether to issue or deny visas

Social media company employees decide how to enforce content moderation policies

Company employees participate in business decisions (e.g., which suppliers to purchase from)

The fundamental problem in all cases is the same: if agents act honestly, they derive only a small portion of the benefit from their actions for the entity they represent; if they follow certain side game incentives, they reap the full benefit. So even today, we still need a lot of moral goodwill to ensure that all our institutions are not completely swallowed up by a chaotic vortex of mutually overturning side games. If privacy is further weakened, these side games will become even more powerful, and the moral goodwill required to keep society functioning might become impractically high.

Can social systems be redesigned to avoid this problem? Unfortunately, game theory makes it all but certain that this is impossible (with one exception: total dictatorship). In versions of game theory that focus on individual choice (that is, versions that assume each player makes decisions independently and that do not allow for groups of agents acting as one for their mutual benefit), mechanism designers have a great deal of freedom to “design” games to achieve a variety of specific outcomes. In fact, there are mathematical proofs showing that any game must have at least one Nash equilibrium, which makes the analysis of such games tractable. But in the version of game theory that allows coalitions to cooperate (i.e., “collude”), known as cooperative game theory, we can show that there are large classes of games that do not have any stable outcome (such a stable outcome is called a “core”). In such games, whatever the current state of affairs is, there is always some coalition that can profit by deviating from it.
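A standard textbook illustration of a game with an empty core is the three-player majority game, in which any two players can together claim the whole prize. The sketch below is an assumption-laden example and not something from the original post: the game, the function names, and the grid search are all chosen here for illustration. It checks that for every way of splitting the prize, some coalition could do better on its own.

```python
from itertools import combinations

PLAYERS = (0, 1, 2)


def coalition_value(coalition) -> float:
    """Majority game: any coalition of two or more players can claim the
    whole prize (1.0); a single player alone gets nothing."""
    return 1.0 if len(coalition) >= 2 else 0.0


def blocking_coalition(allocation, eps=1e-9):
    """Return a coalition whose members jointly receive less than they could
    secure on their own, or None if the allocation is in the core."""
    for size in (1, 2, 3):
        for coalition in combinations(PLAYERS, size):
            current = sum(allocation[p] for p in coalition)
            if coalition_value(coalition) > current + eps:
                return coalition
    return None


def grid_allocations(steps):
    """Enumerate non-negative splits of the prize on a grid of size 1/steps."""
    for a in range(steps + 1):
        for b in range(steps + 1 - a):
            c = steps - a - b
            yield (a / steps, b / steps, c / steps)


if __name__ == "__main__":
    # Even the "fair-looking" split between players 0 and 1 is unstable:
    # player 2 can offer either of them a better deal.
    print(blocking_coalition((0.5, 0.5, 0.0)))
    # No allocation on the grid survives.
    print(all(blocking_coalition(x) is not None for x in grid_allocations(20)))
```

The reason no split survives is simple: the three pairwise sums add up to twice the prize, so at least one pair always holds two thirds or less between them and can profit by defecting together.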

If we take the math seriously, we arrive at this conclusion: the only way to have a stable social structure is to have some limit on the amount of coordination that can occur between the players, which implies a high degree of privacy (including deniability). If you don’t take the math seriously for its own sake, it’s enough to look at the real world, or at least to think about what some of the principal-agent scenarios above would look like if they were taken over by side games.

Note that this leads to another argument for why government backdoors are risky. If everyone has unlimited ability to coordinate with everyone else on everything, the result is chaos. But if only a few can do so because they have privileged access to information, the result is that they dominate. One party having a backdoor into the communications of another party could easily spell the end of the viability of a multiparty system.

Another important example of a social order that depends on limiting collusion to function is intellectual and cultural activity. Participation in intellectual and cultural activity is essentially an intrinsically motivated public good: it is very hard to design extrinsic incentives that target positive contributions to society, precisely because intellectual and cultural activity is, to some extent, the activity that determines which actions in society count as positive. We can create some approximations of business and social incentives that point in the right direction, but they need to be supplemented by strong intrinsic motivation. This also means that such activities are extremely vulnerable to misaligned external incentives, especially side games such as social pressure and coercion. To limit the impact of such misaligned external incentives, privacy becomes necessary again.

Privacy is progress

Imagine a world without public-key and symmetric-key cryptography. In this world, it would be inherently more difficult to send messages securely over long distances: not impossible, just very difficult. This would lead to far less international cooperation, and much more of the cooperation that did happen would have to take place through face-to-face offline channels. This would make the world a poorer and more unequal place.

I would argue that this is exactly where we are today, relative to a hypothetical future world in which much stronger forms of cryptography are widely used: in particular, programmable cryptography, coupled with stronger full-stack security and formal verification to give us strong assurance that it is being used correctly.

The Egyptian god protocols: three powerful and highly general constructions that allow us to perform computation on data while keeping it completely private.

Healthcare is a great example. If you talk to anyone who has worked in longevity, fighting epidemics, or other health fields over the past decade, they will all tell you that the future of treatment and prevention will be personalized, and that effective responses are highly dependent on high-quality data, both personal and environmental. Effectively protecting people from airborne diseases requires knowing which areas have high and low air quality, and where pathogens are present at specific times. The most advanced longevity clinics will provide customized advice and treatment plans based on data about your body, dietary preferences, and lifestyle.

However, all of this also poses huge privacy risks. I personally know of an incident where an employee was given an air monitor that "phoned home" to a company, and the data it collected was enough to determine when the employee had sex. For similar reasons, I expect that by default much of the most valuable data will not be collected at all, precisely because people are afraid of the privacy risks. Even when data is collected, it is almost never shared widely or made available to researchers, partly for business reasons, but just as often because of privacy concerns.

The same pattern repeats itself in other areas. The documents we write, the messages we send on various applications, and our behavior on social media contain a wealth of information about ourselves that can be used to more effectively predict and provide the things we need in our daily lives. There is also a wealth of information about how we interact with our physical environment that is not related to health care. Today, we lack the tools to effectively use this information without creating a dystopian privacy nightmare. But in the future, we may have those tools.

The best way to address these challenges is to use strong cryptography, which allows us to reap the benefits of sharing data without the downsides. In the age of artificial intelligence, access to data (including personal data) will only become more important, because the ability to train and run "digital twins" locally, making decisions on our behalf based on high-fidelity approximations of our preferences, will bring tremendous value. Ultimately, this will also involve using brain-computer interface (BCI) technology to read high-bandwidth input from our brains. To keep this from leading to a highly centralized global hegemony, we need to find ways to achieve it while respecting privacy. Programmable cryptography is the most trustworthy solution.

My AirValent air quality monitor. Imagine a device that collects air quality data, publishes aggregate statistics on an open data map, and rewards you for providing the data, all while using programmable cryptography to avoid revealing your personal location data and to verify the authenticity of the data.
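To make the idea of publishing aggregate statistics without exposing individual readings concrete, here is a minimal sketch. It deliberately swaps in a much simpler technique than the programmable cryptography discussed above: additive secret sharing across several servers that are assumed not to collude. The function names, the modulus, and the sample readings are all hypothetical, and the sketch does nothing to verify that the readings are authentic.

```python
import secrets
from typing import List

# Assumption: any modulus comfortably larger than the true total works.
MODULUS = 2**61 - 1


def split_into_shares(value: int, n_shares: int) -> List[int]:
    """Split a reading into random shares that sum to it modulo MODULUS.
    Any subset of fewer than n_shares shares looks uniformly random."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_shares - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def aggregate(readings: List[int], n_servers: int = 3) -> int:
    """Each device sends one share to each server; each server only ever
    adds up the shares it received. Combining the per-server totals
    reveals the sum of all readings and nothing about any single one."""
    server_totals = [0] * n_servers
    for reading in readings:
        for i, share in enumerate(split_into_shares(reading, n_servers)):
            server_totals[i] = (server_totals[i] + share) % MODULUS
    return sum(server_totals) % MODULUS


if __name__ == "__main__":
    pm25_readings = [12, 35, 7, 50]  # hypothetical per-device readings
    print(aggregate(pm25_readings))  # total across devices
```

The design choice here is that privacy rests on at least one server staying honest; the zero-knowledge and FHE tools described in the text aim to remove even that assumption and to add proofs that each reading came from a genuine device.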

Privacy can be progress in keeping our society safe

Programmable cryptographic techniques such as zero-knowledge proofs are very powerful because they are like Lego blocks for information flow. They allow fine-grained control over who can see which information and, often more importantly, over which information can be seen at all. For example, I can prove that I hold a Canadian passport showing that I am over 18 years old, without revealing any other personal information.

This makes all kinds of interesting combinations possible. I can give a few examples:

Zero-knowledge proof of personhood: Prove that you are a unique person (via various forms of ID: passport, biometrics, identity based on decentralized social graphs) without revealing any other identity information. This can be used for "prove you are not a robot", various "maximum N per person" use cases, and so on, while fully protecting privacy beyond revealing the fact that the rules have not been broken.

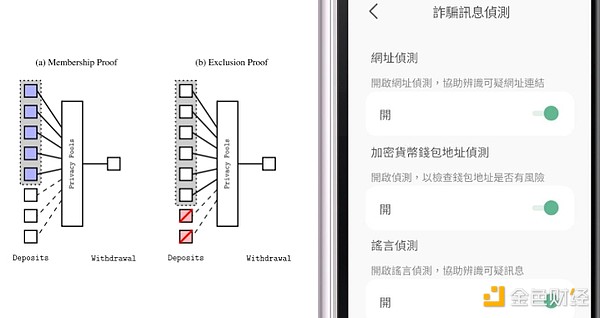

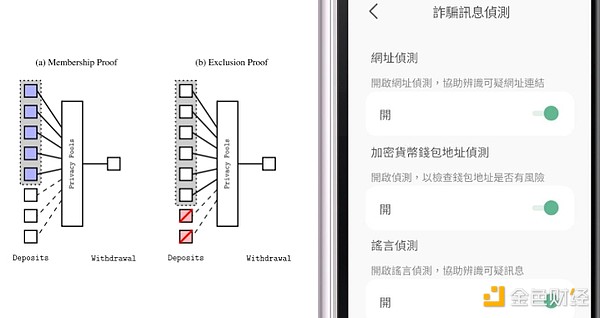

Privacy Pools are a financial privacy solution that excludes bad actors without backdoors. When spending, users can prove that the origin of their tokens is not on a public list of hacks and thefts; only hackers and thieves themselves cannot generate such proofs, so they cannot hide. Railgun and privacypools.com are currently using this type of scheme.

On-device anti-fraud scanning: This does not rely on zero-knowledge proofs (ZKPs), but it feels like it belongs in this category. You can use filters built into the device (including LLMs) to check incoming messages and automatically identify potential misinformation and scams. If this is done on the device, it does not compromise the user's privacy, and it can be done in a user-empowering way, giving each user the choice of which filters to subscribe to.

Proof of physical provenance: Using a combination of blockchain and zero-knowledge proofs, it is possible to track various properties of an item along its manufacturing chain. This can, for example, price environmental externalities without publicly disclosing the supply chain.

Left: Diagram of the privacy pool. Right: The Message Checker app, where users can choose to turn on or off multiple filters, from top to bottom: URL check, cryptocurrency address check, rumor check
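Several of the examples above rest on the same primitive: proving a fact about a secret without revealing the secret. As a rough illustration only, the sketch below implements a toy non-interactive Schnorr proof of knowledge of a private key. This is far simpler than the ZK-SNARKs used by systems like Privacy Pools, it proves only "I know the key behind this public key" rather than a statement about a passport or coin history, and the group parameters are deliberately tiny (assumed here for readability, and completely insecure).

```python
import hashlib
import secrets

# Toy parameters chosen for this sketch: P = 2*Q + 1 is a small safe prime,
# and G = 4 generates the subgroup of prime order Q. Far too small for real use.
P = 2039
Q = 1019
G = 4


def challenge(public_key: int, commitment: int) -> int:
    """Fiat-Shamir: derive the challenge by hashing the public transcript."""
    digest = hashlib.sha256(f"{G}|{public_key}|{commitment}".encode()).digest()
    return int.from_bytes(digest, "big") % Q


def prove(secret_key: int):
    """Produce a public key and a proof of knowing the matching secret key."""
    public_key = pow(G, secret_key, P)
    nonce = secrets.randbelow(Q - 1) + 1      # fresh randomness hides the secret
    commitment = pow(G, nonce, P)
    c = challenge(public_key, commitment)
    response = (nonce + c * secret_key) % Q
    return public_key, (commitment, response)


def verify(public_key: int, proof) -> bool:
    """Check G^response == commitment * public_key^challenge (mod P)."""
    commitment, response = proof
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P


if __name__ == "__main__":
    pk, proof = prove(secret_key=123)
    print(verify(pk, proof))  # the verifier never sees 123
```

The verifier learns that the prover knows the secret key but sees only the commitment and response, which are masked by the random nonce. General-purpose proof systems extend this idea from "I know a key" to arbitrary statements such as "my ID shows I am over 18".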

Privacy and Artificial Intelligence

Recently, ChatGPT announced that it will begin feeding your past conversations into the AI as context for your future conversations. It is inevitable that the trend will continue in this direction: an AI that looks back at your past conversations and draws insights from them is fundamentally very useful. In the near future, we may see AI products that intrude on privacy even more deeply: passively collecting your internet browsing patterns, email and chat history, biometric data, and more.

In theory, your data is private to you. But in practice, this does not always seem to be the case:

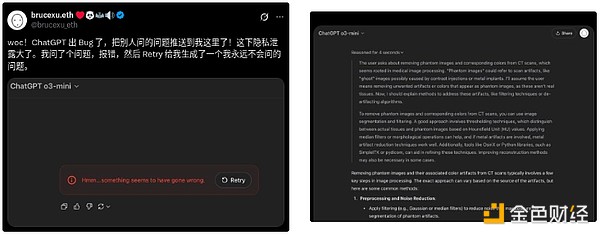

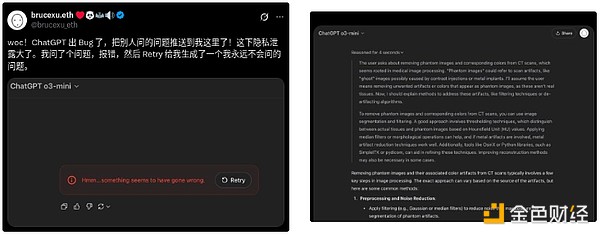

Wow! ChatGPT has a bug that pushes questions asked by others to me! This is a serious privacy breach. I asked a question, it failed, and then “retry” generated a question I would never have asked.

It’s entirely possible that the privacy protections were working perfectly, and that in this case the AI was hallucinating and answering a question Bruce never asked. But there’s no way to verify that. Likewise, there’s no way to verify whether or not our conversations are being used for training.

This is all deeply concerning. Even more troubling are the explicit surveillance use cases for AI, which collect and analyze users’ (physical and digital) data on a massive scale, without their consent. Facial recognition technology is already helping repressive regimes suppress political dissent on a massive scale. But most worrying of all is the final frontier for AI data collection and analysis: the human mind.

In theory, brain-computer interface technology has the amazing power to enhance human potential. Take the story of Noland Arbaugh, Neuralink’s first patient last year:

The experimental device gave Arbaugh, now 30, a sense of independence. Before, he needed someone to hold him upright to use the mouth stick. If it fell, someone had to pick it up for him. And he couldn’t use it for long periods of time or he’d get sores. With the Neuralink device, he has almost complete control over his computer. He can browse the web, play computer games, and Neuralink says he’s set a record for human control of a cursor using a brain-computer interface (BCI).

Today, these devices are powerful enough to help the sick and injured. In the future, they’ll be powerful enough to give perfectly healthy people the opportunity to collaborate with computers and communicate telepathically with each other at an efficiency we can hardly imagine (!!). But to actually interpret brain signals and make this communication possible requires artificial intelligence.

The combination of these trends could naturally lead to a dark future in which we see silicon super-agents devouring and analyzing everyone’s information, including what they write, what they do, and how they think. But there is also a brighter future: We can enjoy the benefits of these technologies while protecting our privacy.

This can be achieved by combining several techniques:

Run computation locally whenever possible - Many tasks (e.g., basic image analysis, translation, transcription, basic brainwave analysis for BCIs) are simple enough to be done entirely in computation running locally. In fact, computation running locally even has advantages in terms of reduced latency and increased verifiability. If something can be done locally, it should be done locally. This includes computations that involve various intermediate steps such as accessing the internet, logging into a social media account, etc.

Use cryptography to make remote computations completely private - Fully homomorphic encryption (FHE) can be used to perform AI computations remotely, without allowing the remote server to see the data or the results. Historically, FHE was very expensive, but (i) its efficiency has recently been improving rapidly, and (ii) LLMs are a uniquely structured form of computation in which, asymptotically, almost all of the work is linear operations, which makes them well suited to ultra-efficient FHE implementations. Computations involving multiple parties’ private data can be done with multi-party computation; the common two-party case can be handled extremely efficiently with techniques like garbled circuits.

Extend guarantees to the physical world with hardware verification — We can insist that hardware that can read our thoughts (whether from inside or outside our skulls) must be open and verifiable, and use techniques like IRIS to verify it. We can do this in other areas, too: for example, we could install security cameras that provably save and forward video streams only when a local LLM flags it as physical violence or a medical emergency, and delete them in all other cases, and use IRIS to conduct community-driven random checks to verify that the cameras are implemented correctly.
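The point above about linear operations can be illustrated with a toy example. The sketch below uses textbook Paillier encryption, which is only additively homomorphic and is not FHE, to let a server compute a dot product between encrypted inputs and its own plaintext weights without ever seeing the inputs or the result. The primes, names, and sample vectors are assumptions made for this illustration; the key is far too small for real use, and the weights must be non-negative integers.

```python
import math
import secrets

# Toy key material chosen for this sketch (insecurely small).
P_PRIME, Q_PRIME = 1009, 1013
N = P_PRIME * Q_PRIME            # public modulus
N_SQ = N * N
LAMBDA = (P_PRIME - 1) * (Q_PRIME - 1) // math.gcd(P_PRIME - 1, Q_PRIME - 1)
MU = pow(LAMBDA, -1, N)          # private: inverse of LAMBDA modulo N


def encrypt(m: int) -> int:
    """Paillier encryption with generator N + 1: c = (1 + m*N) * r^N mod N^2."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return ((1 + m * N) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Recover m from c using the private values LAMBDA and MU."""
    u = pow(c, LAMBDA, N_SQ)
    return ((u - 1) // N * MU) % N


def encrypted_dot(encrypted_inputs, weights) -> int:
    """Server side: multiplying ciphertexts adds plaintexts, and raising a
    ciphertext to the power w multiplies its plaintext by w. The server
    never decrypts anything."""
    acc = 1  # a valid encryption of 0
    for c, w in zip(encrypted_inputs, weights):
        acc = (acc * pow(c, w, N_SQ)) % N_SQ
    return acc


if __name__ == "__main__":
    private_inputs = [3, 1, 4]   # known only to the user
    server_weights = [2, 7, 1]   # known only to the server
    ciphertexts = [encrypt(x) for x in private_inputs]
    result = encrypted_dot(ciphertexts, server_weights)
    print(decrypt(result))       # the user decrypts the dot product
```

A real private-inference system would need much more than this (non-linear layers, large keys, fixed-point encoding of real-valued weights), but the sketch shows why a computation dominated by linear algebra is a comparatively friendly target for homomorphic techniques.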

Future Imperfect

In 2008, libertarian philosopher David Friedman wrote a book called Future Imperfect, in which he sketched the changes that new technologies might bring to society, not all of them in his favor (or ours). In one section, he describes a potential future with a complex interplay between privacy and surveillance, in which the growth of digital privacy offsets the growth of real-world surveillance:

There is no point in having strong encryption for my email if a video mosquito is perched on the wall watching me type. Strong privacy protections in a transparent society therefore require some way to protect the interface between my physical body and cyberspace… A low-tech solution is typing under a hood. A high-tech solution is some kind of connection between mind and machine that doesn’t go through fingers, or any channel visible to an outside observer.

The conflict between transparency in real space and privacy in cyberspace also manifests itself in the other direction…My PDA encrypts my information with your public key and transmits it to your PDA, which decrypts the information and displays it through your VR glasses. To ensure that nothing can read the glasses from over your shoulder, the goggles do not display the image through a screen but write it into your retina with a tiny laser. With luck, the inside of your eyeball remains a private space.

We may eventually live in a world where our physical activities are completely public and information transactions are completely private. It has some attractive features. Ordinary citizens could still take advantage of strong privacy to find a hit man, but hiring one may cost more than they are willing to pay, because in a sufficiently transparent world all murders will be solved. Every hit man will carry out one job and then go directly to prison.

What about the interaction between these technologies and data processing? On the one hand, modern data processing technologies are what make the transparent society a threat: without them, it would not matter much even if you recorded everything that happened in the world, because no one could find the particular six inches of tape he wanted among the millions of miles of tape produced every day. On the other hand, technologies that support strong privacy offer the possibility of re-establishing privacy, even in a world with modern data processing technologies, by keeping information about your transactions from ever reaching anyone but you.

Such a world may be the best of all possible worlds: if all goes well, we will see a future with very little physical violence, while at the same time preserving our freedoms online and ensuring the basic functioning of the political, civic, cultural and intellectual processes in society that depend on some limits to complete information transparency for their continued operation.

Even if it is not ideal, it is much better than a version in which physical and digital privacy are both reduced to zero, including, ultimately, the privacy of our own thoughts. In that version, by the mid-2050s we will be reading op-eds arguing that of course it is unrealistic to expect thoughts to be immune to lawful interception, and the responses to those op-eds will consist of links to the most recent incident in which an AI company’s LLM was exploited, leaking a year of the private inner monologues of 30 million people to the entire internet.

Society will always depend on a balance between privacy and transparency. In some cases, I support restrictions on privacy. To take an example that is completely different from the usual arguments people make in this regard, I support the US government’s move to ban non-compete clauses in contracts, not primarily because of their direct effect on employees, but because a ban can force companies to open up some of their tacit domain knowledge. Forcing companies to be more open than they want to be is a restriction on privacy, but I think it is a net-beneficial one. From a macro perspective, however, the most pressing risk of technology in the near future is that privacy will approach historic lows, and in a wildly lopsided way, with the most powerful individuals and the most powerful countries getting a lot of data on everyone, while everyone else sees almost nothing. Therefore, supporting privacy for everyone and making the necessary tools open source, universal, reliable, and secure is one of the great challenges of our time.
