Compiled by: Deng Tong, Jinse Finance

During the 2024 Hong Kong Web3 Carnival, Ethereum co-founder Vitalik Buterin delivered a keynote speech "Reaching the Limits of Protocol Design". Jinse Finance has compiled the content of the speech as follows for readers.

Blockchain and ZK-SNARKs

The types of technology we use to build protocols have changed a lot in the past 10 years. When Bitcoin was born in 2009, it actually used a very simple form of cryptography, right? The only cryptographic techniques you see in the Bitcoin protocol are hashing, ECDSA signatures over elliptic curves, and proof of work, and proof of work is just another way of using hashing. If you look at the techniques used to build protocols in the 2020s, you move on to a much more sophisticated set of techniques that really only emerged in the last 10 years.

Now, a lot of this stuff has certainly been around for a long time. Technically, we've had ZK-SNARKs since the PCP theorem, which is decades old. So in theory these technologies have existed for a long time, but in practice there has been a huge efficiency gap between what you can do in an academic paper and what you can do in real-world applications.

I actually think blockchains themselves deserve a lot of credit for enabling the adoption of these technologies and putting them into practice.

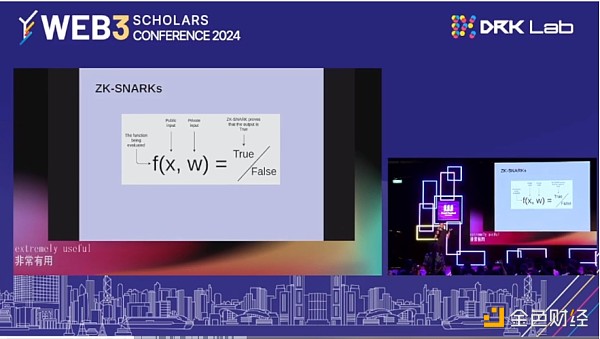

The landscape has changed over the last decade, and there has been a huge amount of progress on many different fronts. Today's protocols rely more and more on all of these technologies. If you look at protocols built in the 2020s, all of these things were treated as key components from day one. ZK-SNARKs are the first big one. A ZK-SNARK lets you verify a proof of a computation faster than running the computation yourself, and the proof can also hide much of the information in the original input. So ZK-SNARKs are very useful for privacy, and also very useful for scalability.

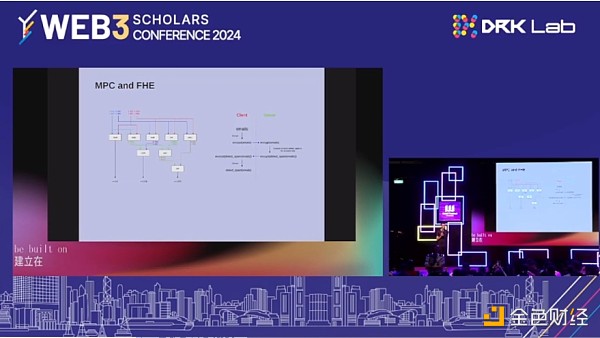

Now, what do blockchains do? Blockchains give you a lot of benefits: openness and global verifiability. But all of this comes at the expense of two very big things. One is privacy, and the other is scalability. ZK-SNARKs give you back both privacy and scalability. In 2016, we saw the Zcash protocol. After that, we started to see more and more of these things in the Ethereum ecosystem, and today almost everything is starting to be built with ZK.

Beyond ZK-SNARKs there are multi-party computation (MPC) and fully homomorphic encryption (FHE). There are things you cannot do with ZK-SNARKs alone, such as privacy-preserving computation that runs on people's private data. Voting is an important use case here. You can get some degree of benefit from voting with ZK-SNARKs, but if you want the really best properties, MPC and FHE are what you have to use.
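Here is a minimal Python sketch that makes the voting use case concrete. It uses additive secret sharing for a private vote tally, which is one of the simplest MPC building blocks. The sketch assumes three honest-but-curious tallying servers that do not all collude. The modulus `PRIME` and the helper names `share_vote` and `tally` are hypothetical choices for illustration. It is not the design of any particular voting system, and a real system would also need proofs that each vote is 0 or 1.

```python
import secrets

# Toy parameters (assumptions): a public prime modulus and three tallying servers.
PRIME = 2**61 - 1  # a Mersenne prime, far larger than any realistic vote count
NUM_SERVERS = 3

def share_vote(vote: int) -> list[int]:
    """Split a 0/1 vote into NUM_SERVERS random additive shares mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(NUM_SERVERS - 1)]
    last = (vote - sum(shares)) % PRIME  # forces the shares to sum to the vote
    return shares + [last]

def tally(votes: list[int]) -> int:
    """Each server only ever sees one uniformly random-looking share per voter."""
    server_totals = [0] * NUM_SERVERS
    for vote in votes:
        for server, share in enumerate(share_vote(vote)):
            server_totals[server] = (server_totals[server] + share) % PRIME
    # Combining the per-server totals reveals only the final count.
    return sum(server_totals) % PRIME

votes = [1, 0, 1, 1, 0, 1]
print(tally(votes))  # 4
```

Any two of the three shares of a vote are uniformly random. As a result, no single server learns how anyone voted, and only the sum of all three server totals reveals the count. This spreads trust across several servers instead of relying on one central server.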

Many crypto and AI applications will also end up using MPC and FHE, two primitives whose efficiency has been improving rapidly over the past decade.

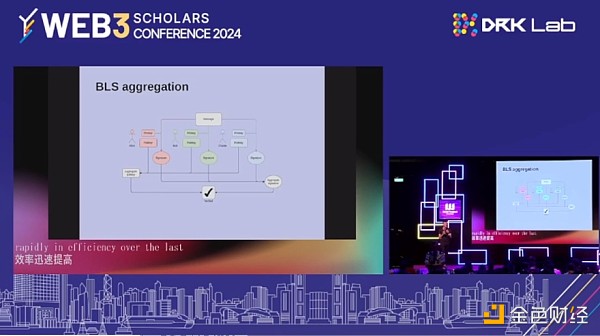

BLS signature aggregation is an interesting technique. It lets you take a whole bunch of signatures from a whole bunch of different participants, potentially tens of thousands of them, and then verify the combined signature about as quickly as verifying a single signature.

That's powerful. BLS aggregation is at the heart of Ethereum's modern proof-of-stake consensus.

If you look at proof-of-stake consensus protocols built before BLS aggregation, the algorithms typically supported only a few hundred validators. Ethereum currently has about 30,000 validators submitting signatures every 12 seconds. This is possible because this new form of cryptography has only been optimized enough to be practical in the last 5 to 10 years.
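The property that makes aggregation work is bilinearity. The Python sketch below is a deliberately insecure toy model, not real BLS. Group elements are plain integers mod a small prime `Q`, so discrete logs are trivial. The "pairing" `e(a, b) = G**(a*b) mod P` was chosen only because it is bilinear. All names and parameters are hypothetical. What the sketch does show is the shape of the check: however many validators sign the same message, aggregation is just addition and verification is one pairing equation.

```python
import hashlib
import random

# WARNING: toy, insecure model. "Points" are integers mod Q (discrete logs are
# trivial) and the "pairing" e(a, b) = G**(a*b) mod P only mimics bilinearity.
Q = 1019          # prime order of the toy groups
P = 2 * Q + 1     # 2039, a safe prime
G = 4             # a quadratic residue, so it generates the order-Q subgroup of Z_P^*
GENERATOR = 1     # generator of the additive toy "curve" group Z_Q

def pairing(a: int, b: int) -> int:
    return pow(G, (a * b) % Q, P)

def hash_to_group(message: bytes) -> int:
    digest = int.from_bytes(hashlib.sha256(message).digest(), "big")
    return 1 + digest % (Q - 1)  # never the identity element 0

def keygen(rng: random.Random) -> tuple[int, int]:
    sk = rng.randrange(1, Q)
    return sk, (sk * GENERATOR) % Q  # (secret key, public key)

def sign(sk: int, message: bytes) -> int:
    return (sk * hash_to_group(message)) % Q

def aggregate(items: list[int]) -> int:
    # Aggregating signatures (or public keys) is just group addition.
    return sum(items) % Q

def verify_aggregate(agg_sig: int, pubkeys: list[int], message: bytes) -> bool:
    # One pairing equation regardless of signer count: e(sig, g) == e(H(m), sum(pk)).
    return pairing(agg_sig, GENERATOR) == pairing(hash_to_group(message), aggregate(pubkeys))

rng = random.Random(7)
keys = [keygen(rng) for _ in range(10_000)]
msg = b"slot 123: block root"
sigs = [sign(sk, msg) for sk, _ in keys]
pks = [pk for _, pk in keys]
print(verify_aggregate(aggregate(sigs), pks, msg))  # True
```

Real BLS uses pairing-friendly elliptic curves such as BLS12-381. Deployments also have to defend against rogue-key attacks, for example with proofs of possession. The toy omits all of that.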

Efficiency, Security, and Extended Capabilities

So these new technologies enable a lot of things, and they have gotten stronger very quickly. Today's protocols use all of them heavily. We have really gone through a big shift from special-purpose cryptography to general-purpose cryptography. In the old world, to create a new protocol you had to understand how the cryptography works yourself and design a special-purpose algorithm for a special-purpose application. In the new world, you can create an application that uses what I've talked about in the last 5 minutes without even being a cryptographer. You write a piece of code, compile it into a prover and a verifier, and you have a working application.

So what are the challenges here? I think there are two big ones right now: one is efficiency, the other is security. There is also a third, extending capabilities, but I think improving the efficiency and security of what we already have is more important.

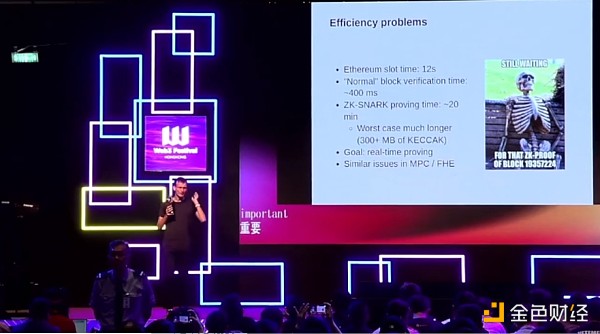

Let's start with efficiency, using a specific example: the Ethereum blockchain. In Ethereum, the block time is 12 seconds, meaning the average interval between one block and the next is 12 seconds. Normal block verification time is about 400 milliseconds; this is the time it takes an ordinary node to verify a block. Today, generating a ZK-SNARK proof for an average Ethereum block takes about 20 minutes. This is improving rapidly. Two years ago it took 5 hours, so we have made great progress.

Now, what is our goal? The goal is real-time proving: when a block is created, you can get its proof before the next block is created. Once we achieve real-time proving, every user in the world can easily become a fully verifying user of the protocol. We can get to a world where every Ethereum wallet is actually fully verifying Ethereum's consensus rules. That includes browser wallets, mobile wallets, and smart contract wallets on other chains.

Then users don't even need to trust the proof-of-stake validators, because they are directly verifying the rules and directly checking that the blocks are correct. How do we use ZK-SNARKs to do this? For this to really work, ZK-SNARK proofs need to be generated in real time. There needs to be a way to prove an Ethereum block in about 5 seconds. So the question is, can we do it? MPC and FHE have similar problems. As I mentioned before, voting is a strong use case for MPC and FHE, and it is actually starting to emerge.
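Using only the figures quoted above, a back-of-the-envelope calculation shows how far proving still has to go. It also shows how much it has already improved:

$$
\frac{20\ \text{min}}{5\ \text{s}} = \frac{1200\ \text{s}}{5\ \text{s}} = 240\times \ \text{(speedup still needed)},
\qquad
\frac{5\ \text{h}}{20\ \text{min}} = \frac{300\ \text{min}}{20\ \text{min}} = 15\times \ \text{(speedup over the past two years)}
$$

A 5-second target also leaves headroom inside the 12-second block time.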

The current problem with MPC is that some of its security properties rely on a central server. Can we decentralize it? We can, but that requires the protocols to become much more efficient, because today they are very expensive.

How do we achieve this? For ZK-SNARKs, I think there are three main categories of efficiency gains. The first is parallelization and aggregation. Imagine an Ethereum block: verifying it takes at most about 10 million computational steps. You prove each computational step separately, and then you aggregate the proofs. After about 20 rounds of aggregation, you have one big proof that represents the correctness of the entire block. This can be done today with existing technology, and it can prove Ethereum blocks in 5 seconds. However, it requires a lot of parallel computing. So can we optimize it? Can we optimize proof aggregation? The answer is yes. There are a lot of theoretical ideas about how to do it, but they really need to be turned into something practical.

The second is hardware. ASICs can hash about 100 times faster than GPUs for the same hardware cost and the same electricity cost. The question is, can we get the same benefits for ZK-SNARK proving? I think we should be able to. A lot of companies have started building products specifically for proving ZK-SNARKs, and ideally this hardware should be very general-purpose. Can we get 20 minutes down to 5 seconds and make it more efficient?
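The parallelization-and-aggregation idea can be sketched as a proof tree. In the Python below, a SHA-256 hash is a hypothetical stand-in for "a proof that these child proofs verify." It therefore shows only the shape and depth of the computation, not real recursive SNARKs. With binary aggregation, 10 million steps need ceil(log2(10^7)) = 24 levels, which is in line with the "about 20" rounds mentioned in the talk. A wider fan-in of 8 proofs per node (an assumption) cuts that to 8 levels.

```python
import hashlib

def aggregation_depth(num_leaves: int, arity: int = 2) -> int:
    """Number of aggregation levels needed to fold num_leaves proofs into one."""
    depth, remaining = 0, num_leaves
    while remaining > 1:
        remaining = -(-remaining // arity)  # ceiling division
        depth += 1
    return depth

def aggregate_tree(leaf_proofs: list[bytes], arity: int = 2) -> tuple[bytes, int]:
    """Fold mock proofs arity-wise until one remains; returns (root, rounds).

    A SHA-256 hash stands in for "prove that these child proofs verify".
    Every node on a level is independent, so each level can run in parallel.
    """
    level, rounds = list(leaf_proofs), 0
    while len(level) > 1:
        level = [
            hashlib.sha256(b"".join(level[i:i + arity])).digest()
            for i in range(0, len(level), arity)
        ]
        rounds += 1
    return level[0], rounds

leaves = [hashlib.sha256(f"step {i}".encode()).digest() for i in range(1000)]
root, rounds = aggregate_tree(leaves)
print(rounds)                            # 10
print(aggregation_depth(10_000_000))     # 24
print(aggregation_depth(10_000_000, 8))  # 8
```

Each level's nodes are independent of one another. Wall-clock time therefore scales with the number of levels rather than the number of steps, which is why this approach needs so much parallel hardware.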

The third is algorithmic improvements. We have the GKR protocol, we have 64-bit fields, we have new ZK-SNARK designs, and all these different ideas. Can we make the algorithms more efficient? Can we create more ZK-SNARK-friendly hash functions and more ZK-SNARK-friendly signature algorithms? There are a lot of ideas here, and I strongly encourage people to do more work on them.

Now, security. We have all these amazing forms of cryptography, but will people trust them? People worry that there is some kind of flaw in them, whether in the ZK-SNARK itself or in the zkEVM circuits, which have about 7,000 lines of code. Commonly cited industry estimates are 15 to 50 bugs per thousand lines of code. We try hard to get below 15 per thousand lines, but it is still more than zero. These systems hold billions of dollars of people's assets. If one of them has a bug, then no matter how advanced the cryptography is, that money is lost.
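A quick calculation with the numbers above shows why "more than zero" matters. Here KLOC means a thousand lines of code:

$$
7\ \text{KLOC} \times 15\ \frac{\text{bugs}}{\text{KLOC}} = 105\ \text{bugs},
\qquad
7\ \text{KLOC} \times 50\ \frac{\text{bugs}}{\text{KLOC}} = 350\ \text{bugs}
$$

Even a rate ten times better than the low end, 1.5 bugs per KLOC, would still leave roughly 10 bugs in a 7,000-line circuit.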

The question is, what can we do to actually take the cryptography that exists and reduce the number of bugs in it?

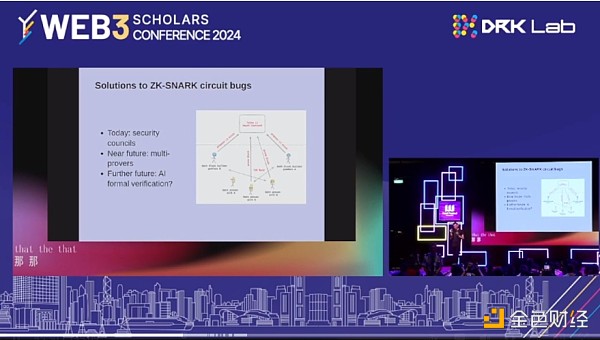

Right now, I think that if 9 out of 12 people in a group (that is, 75%) agree that there is a bug, they can overturn whatever the proof system says. So it is quite centralized. In the near future, we will have multiple proof systems. In theory, that reduces the risk that a bug in any one of them breaks the system. Suppose you have three proof systems and one of them is wrong. Then hopefully the other two will not be wrong in exactly the same place.
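The two safeguards described above are both threshold rules. The minimal Python sketch below makes them explicit. It assumes the hypothetical function names `provers_agree` and `council_can_override`, a 2-of-3 prover rule, and the 9-of-12 council from the talk. Actual rollup governance contracts differ in detail.

```python
import math

def provers_agree(results: list[bool], threshold: int = 2) -> bool:
    """k-of-n rule: accept only if at least `threshold` independent proof systems accept."""
    return sum(results) >= threshold

def council_can_override(votes_for: int, council_size: int = 12, fraction: float = 0.75) -> bool:
    """An override needs at least `fraction` of the council (9 of 12 at 75%)."""
    return votes_for >= math.ceil(fraction * council_size)

print(provers_agree([True, True, False]))   # True: one buggy prover is outvoted
print(provers_agree([True, False, False]))  # False
print(council_can_override(9))              # True
print(council_can_override(8))              # False
```

The 2-of-3 rule tolerates one buggy prover. This is exactly the hope that two independent systems will not fail in the same place.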

Formal Verification with AI Tools

Finally, one interesting thing that I think is worth investigating in the future is using AI tools for formal verification, that is, mathematically proving that something like a ZK-EVM is bug-free. For example, can you actually prove that a ZK-EVM implementation computes exactly the same function as the Ethereum implementation in Geth? Can you prove that they give exactly the same output for any possible input?
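A toy version of the question shows why this needs proofs rather than testing. The Python sketch below compares a reference spec of 8-bit wrapping addition against a "circuit-style" XOR/AND implementation over every possible input. The functions are hypothetical illustrations, not code from any zkEVM. Exhaustive checking works for 2^16 input pairs. EVM words are 256 bits, however, so the same check would need 2^512 pairs. That is why machine-checked proofs, possibly generated with AI help, are needed instead.

```python
from typing import Callable

MASK = 0xFF  # toy 8-bit word; real EVM words are 256 bits

def add_spec(a: int, b: int) -> int:
    """Reference semantics: addition modulo 2**8."""
    return (a + b) & MASK

def add_impl(a: int, b: int) -> int:
    """Circuit-style implementation: ripple carry using only XOR, AND and shifts."""
    while b:
        carry = ((a & b) << 1) & MASK  # carries computed from the old a and b
        a = (a ^ b) & MASK             # sum without carries
        b = carry
    return a

def add_impl_buggy(a: int, b: int) -> int:
    """Forgets to wrap around on overflow."""
    return a + b

def equivalent(f: Callable[[int, int], int], g: Callable[[int, int], int]) -> bool:
    # 2**16 input pairs: small enough to check every single one.
    return all(f(a, b) == g(a, b) for a in range(256) for b in range(256))

print(equivalent(add_spec, add_impl))        # True
print(equivalent(add_spec, add_impl_buggy))  # False
```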

In 2019, nobody expected that AI would be able to make the really beautiful pictures it makes today. We've made a lot of progress, and we've seen AI do it.

The question is, can we turn similar tools toward similar tasks? One example is automatically generating mathematical proofs of complex statements about programs that span thousands of lines of code. I think that's an interesting open challenge.

The next topic is the efficiency of signature aggregation. Today Ethereum has 30,000 validators, and the requirements to run a node are pretty high, right? I run an Ethereum node on my laptop, and it works, but it's not a cheap laptop, and I did have to upgrade the hard drive myself. The goal for Ethereum is to support as many validators as possible. We want proof of stake to be as democratized as possible, so that people can participate directly in validation at any scale. We want the requirements to run an Ethereum node to be very low and the node to be very easy to use. We want Ethereum's protocol to be as simple as possible.

So what is the limit here? Each participant needs at least 1 bit of data per slot, because you have to broadcast who participated in the signature and who did not. That is the most basic limit. Beyond it, there are no other limits, and computation has no comparable lower bound. You can do proof aggregation, you can recurse through each level of a tree, and you can do all kinds of signature aggregation. You can use SNARKs and other cryptography, like 32-bit SNARKs, and all kinds of different techniques.
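The 1-bit-per-participant floor is easy to quantify. The Python sketch below packs one participation flag per validator into a bitfield. The least-significant-bit-first layout and the function names are assumptions for illustration, not Ethereum's exact wire format. For 30,000 validators the bitfield is 3,750 bytes per slot, however the signatures themselves are aggregated.

```python
def pack_bits(participated: list[bool]) -> bytes:
    """Pack one participation flag per validator, LSB-first within each byte."""
    out = bytearray((len(participated) + 7) // 8)
    for i, flag in enumerate(participated):
        if flag:
            out[i // 8] |= 1 << (i % 8)
    return bytes(out)

def unpack_bits(bitfield: bytes, n: int) -> list[bool]:
    """Recover the first n participation flags."""
    return [bool((bitfield[i // 8] >> (i % 8)) & 1) for i in range(n)]

flags = [i % 3 != 0 for i in range(30_000)]  # hypothetical participation pattern
bitfield = pack_bits(flags)
print(len(bitfield))                             # 3750
print(unpack_bits(bitfield, len(flags)) == flags)  # True
```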

Thinking About Peer-to-Peer Networks

The next question is peer-to-peer network security. People don't think enough about peer-to-peer networks, and that's what I really want to highlight. I think crypto in general has too much of a tendency to build fancy structures on top of peer-to-peer networks and then simply assume those networks will work. There are a lot of demons hiding there, right? Even a peer-to-peer network like Bitcoin's faces all kinds of attacks, like Sybil attacks and denial-of-service attacks. But when the network is very simple, and its only task is to make sure everyone gets everything, the problem is still fairly manageable. The trouble is that as Ethereum scales, its peer-to-peer networks become more and more complex, and I think those demons will get more complicated too.

Today, to do signature aggregation over 30,000 signatures, the Ethereum peer-to-peer network is divided into 64 different shards, and each node is part of only one or a few of them. Scaling data for Layer 2 projects, which allows for very low fees on rollups, is a powerful scalability solution. It also relies on a more complex peer-to-peer architecture. Can each node download only 1/8 of the total data? Can you really make such a network secure? How do we store the data? How do we improve the security of peer-to-peer networks?
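Splitting 30,000 signatures across 64 shards turns one flat aggregation into two levels. The Python sketch below uses a hypothetical `i % 64` assignment. The real protocol assigns validators to committees and subnets differently, so treat the numbers as illustrative arithmetic only.

```python
NUM_SUBNETS = 64

def assign_subnets(num_validators: int, num_subnets: int = NUM_SUBNETS) -> dict[int, list[int]]:
    """Hypothetical assignment rule: validator i joins subnet i % num_subnets."""
    subnets: dict[int, list[int]] = {s: [] for s in range(num_subnets)}
    for validator in range(num_validators):
        subnets[validator % num_subnets].append(validator)
    return subnets

subnets = assign_subnets(30_000)
sizes = [len(members) for members in subnets.values()]
print(min(sizes), max(sizes))  # 468 469

# Level 1: each subnet aggregates its own signatures into one.
# Level 2: the 64 subnet aggregates are combined into one signature.
per_node_load = max(sizes) + NUM_SUBNETS
print(per_node_load)  # 533
```

Each node then handles roughly 469 individual signatures from its own shard plus 64 shard-level aggregates, instead of all 30,000.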

Conclusion

So what we need to think about are protocols that reach the limits of cryptography. Our cryptography is already much stronger than it was decades ago, but it can become stronger still. I think we now really need to start thinking about where the ceiling is and how we actually reach it.

There are two equally important directions here:

One is to keep improving efficiency. We want to prove everything in real time. We want a world where every message passed around in a decentralized protocol, such as a block, has a ZK-SNARK attached to it by default. That proof would show that the message and everything it depends on follow the rules of the protocol. How can we improve efficiency enough to achieve this?

The other is to improve security: fundamentally reduce the possibility of problems, and get to a world where the actual technology behind these protocols can be very powerful and very trustworthy.

But as we've seen many times, multisigs can get hacked. In many cases, the tokens in Layer 2 projects are actually controlled by multisigs. If five out of nine signers get hacked at the same time, a lot of money can be lost. If we want to avoid these problems, we need to be able to trust the technology itself, using cryptography to enforce compliance with the rules. That is better than trusting a small group of people to keep the system secure.

But to really achieve this, the code has to be trustworthy. The question is, can we make the code trustworthy? Can we make the network trustworthy? Can we make the economics of these protocols trustworthy? I think these are the core challenges, and I hope we can continue to work together to address them. Thank you.
