Author: Can Gurel, delphidigital; Compiler: BTCdayu; Source: X, @BTCdayu

Key points

A monolithic chain where everyone executes every transaction is inherently unscalable. A multi-chain world in some form is therefore inevitable. We believe modular blockchains may be the best approach to a multi-chain world.

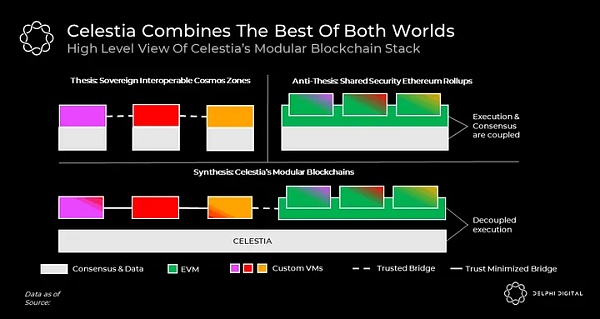

Celestia is the first truly modular blockchain. “Its vision is to combine Cosmos's sovereign, interoperable zones with Ethereum's rollup-centric vision of shared security.”

Celestia scales by decoupling execution from consensus and introducing data availability sampling (DAS). This allows Celestia to emulate the scaling properties of BitTorrent, one of the most scalable and decentralized protocols to date. For the foreseeable future, Celestia is expected to be able to support virtually any user demand while keeping verification costs fairly stable.

Reimagining blockchain as a modular stack has implications beyond scalability. Celestia’s unique advantages include trust-minimized bridges, sovereign chains, efficient resource pricing, simpler governance, easy chain deployment, and flexible virtual machines.

Modular blockchains are a paradigm shift in blockchain design, and we expect their network effects to become increasingly evident over the next few years. In particular, Celestia’s mainnet is expected to launch in 2023.

1. Introduction

A monolithic chain where everyone executes every transaction is inherently unscalable. In fact, this is why almost every major ecosystem is building a multi-chain world.

As we explained in our previous article, ecosystems differ in how they envision a multi-chain world. The two approaches attracting the most activity today are those of Ethereum and Cosmos.

In short, Ethereum envisions a rollup-centric future. Rollups tend to be more expensive and less flexible than L1s, but they can share security with each other.

In contrast, Cosmos is an ecosystem of interoperable sovereign L1s (called "zones"). While zones are cheaper and more flexible than rollups, they cannot fully share security with each other.

Celestia combines the best of both worlds. As a wise man once said, “Celestia's vision is to combine Cosmos's sovereign, interoperable zones with Ethereum's rollup-centric vision of shared security.”

If you don’t fully understand the chart above, don't worry. We’ll unpack it all in this post as we delve into Celestia’s paradigm-shifting modular blockchain design. We will spend the first half of the article on the “how” of Celestia and the second half on the “why”. If you are already familiar with how Celestia works, we recommend skipping to the second half, where we list its 8 unique properties. You may be surprised to find that there is much more to Celestia than meets the eye.

2. How Celestia works: decoupled execution

To understand how Celestia works, we must first define its problem statement. Celestia was born out of a search for an answer to the question: “What is the least a blockchain can do to provide shared security to other blockchains (i.e. rollups)?”

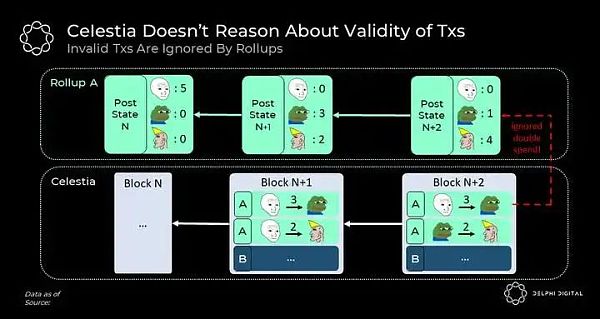

Consensus and validity are usually treated as the same thing. However, it is possible to view them as independent concepts. Validity rules determine which transactions are considered valid, while consensus allows nodes to agree on the order of valid transactions.

Like any L1 blockchain, Celestia implements a consensus protocol (Tendermint) to order transactions. However, unlike typical blockchains, Celestia does not reason about the validity of these transactions and is not responsible for executing them. Celestia treats all transactions equally: if a transaction pays the necessary fees, Celestia accepts it, orders it, and replicates it.

All transaction validity rules are enforced on the client side by rollup nodes. Rollup nodes monitor Celestia to identify and download the transactions that belong to them. They then execute those transactions to compute their state (for example, each account's balance). Any transactions that the rollup nodes consider invalid are simply ignored.

As you can see, as long as Celestia's history remains unchanged, rollup nodes running software with the same validity rules can compute the same state.
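To make the split between ordering and validity concrete, here is a minimal Python sketch under toy assumptions. The hypothetical `DALayer` class (not Celestia's actual API) accepts any transaction that pays a minimum fee and appends it to an ordered log, while the hypothetical `RollupNode` filters that log by a namespace string and applies its own validity rule (here, simply "no overdrafts"). Two nodes running the same rules over the same history derive the same state.

```python
from dataclasses import dataclass, field


@dataclass
class Tx:
    namespace: str  # which rollup the data belongs to
    sender: str
    receiver: str
    amount: int
    fee: int


@dataclass
class DALayer:
    """Toy DA layer: orders and stores transactions, never checks validity."""
    min_fee: int = 1
    log: list = field(default_factory=list)

    def submit(self, tx: Tx) -> bool:
        # The only admission rule is the fee; the payload itself is opaque.
        if tx.fee < self.min_fee:
            return False
        self.log.append(tx)
        return True


class RollupNode:
    """Toy rollup node: reads its own namespace and enforces validity itself."""

    def __init__(self, namespace: str, genesis: dict):
        self.namespace = namespace
        self.genesis = dict(genesis)

    def compute_state(self, log: list) -> dict:
        balances = dict(self.genesis)
        for tx in log:
            if tx.namespace != self.namespace:
                continue  # another rollup's data: skip it
            if balances.get(tx.sender, 0) < tx.amount:
                continue  # invalid under this rollup's rules: ignore it
            balances[tx.sender] -= tx.amount
            balances[tx.receiver] = balances.get(tx.receiver, 0) + tx.amount
        return balances


da = DALayer()
da.submit(Tx("game", "alice", "bob", 5, fee=1))
da.submit(Tx("game", "bob", "carol", 100, fee=1))  # overdraft, yet still ordered
da.submit(Tx("defi", "dave", "erin", 3, fee=1))    # belongs to another rollup

node_1 = RollupNode("game", {"alice": 10})
node_2 = RollupNode("game", {"alice": 10})
print(len(da.log))                                                   # 3
print(node_1.compute_state(da.log))                                  # {'alice': 5, 'bob': 5}
print(node_1.compute_state(da.log) == node_2.compute_state(da.log))  # True
```

Note that the overdraft is still stored and ordered by the DA layer; it is the rollup node that decides to ignore it.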

This leads to an important result: rollups do not need another chain to perform any execution in order to share security. All they need is to agree on a shared history of ordered transactions.

How does Celestia scale?

While decoupling execution from consensus lays the foundation for Celestia's unique capabilities, the level of scalability Celestia achieves cannot be reached with decoupled execution alone. Let us explain.

The obvious advantage of decoupled execution is that nodes are free to execute only the transactions relevant to the applications they care about, rather than everyone executing every transaction by default. For example, a node for a gaming application (an application-specific rollup) does not need to execute transactions for a DeFi application.

That said, the scalability benefits of decoupled execution are still limited because they come at the expense of composability.

Let's imagine a situation where two applications want to exchange some tokens with each other. In this case, the state of each application will be dependent on the other; to calculate the state of one application, a node must execute transactions related to both applications.

In fact, as more applications add such interactions, the number of transactions each node must execute keeps growing. In the extreme case, if every application interacted with every other, we would be back to a monolithic chain in which everyone downloads and executes every transaction.
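The composability trade-off can be sketched as a graph reachability problem. Assume a hypothetical interaction graph in which an edge means two applications exchange tokens, so their states depend on each other. The set of applications a node must execute to know one application's state is then everything reachable from it; as edges accumulate, that set grows toward every application, which is the monolithic case.

```python
from collections import deque


def must_execute(app: str, interactions: dict[str, set[str]]) -> set[str]:
    """Return every application whose transactions affect `app`'s state.

    `interactions` is an undirected adjacency map (a hypothetical model):
    an edge means the two applications' states depend on each other.
    """
    seen = {app}
    queue = deque([app])
    while queue:  # breadth-first search over dependencies
        current = queue.popleft()
        for neighbour in interactions.get(current, set()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen


def connect(graph: dict[str, set[str]], a: str, b: str) -> None:
    """Record that applications a and b now interact."""
    graph.setdefault(a, set()).add(b)
    graph.setdefault(b, set()).add(a)


apps = ["game", "dex", "lending", "nft", "oracle"]
graph: dict[str, set[str]] = {a: set() for a in apps}
print(sorted(must_execute("game", graph)))  # ['game']
connect(graph, "game", "dex")
connect(graph, "dex", "lending")
print(sorted(must_execute("game", graph)))  # ['dex', 'game', 'lending']
connect(graph, "lending", "nft")
connect(graph, "nft", "oracle")
print(must_execute("game", graph) == set(apps))  # True: back to executing everything
```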

So how does Celestia achieve unparalleled scalability, and what does decoupling execution from consensus have to do with it?

3. Scalability bottleneck

Scalability is often described as the ability to increase the number of transactions without increasing the cost of verifying the chain. To understand where the scalability bottlenecks lie, let’s briefly review how blockchains are verified.

In a typical blockchain, consensus nodes (validators, miners, etc.) produce blocks and then distribute them to the rest of the network, which consists of full nodes and light nodes.

Full nodes have ample resources and fully verify the contents of the blocks they receive by downloading and executing every transaction within them. In contrast, because of their limited resources, light nodes (99% of users) cannot verify the contents of these blocks and can only track block headers (digests of the block data). As a result, light nodes have much weaker security guarantees than full nodes: they must assume that consensus is honest.

Note that full nodes do not make this assumption. Contrary to popular belief, malicious consensus can never trick full nodes into accepting invalid blocks because they will notice invalid transactions (such as double-spend transactions or invalid minting) and stop following the chain.

The most notorious scalability bottleneck in the blockchain space is called state bloat. As more transactions occur, the state of the blockchain (the information needed to execute transactions) grows and the cost of running a full node rises. This leads to the undesirable situation where the number of full nodes starts to decrease and the number of light nodes starts to increase, causing the network to centralize around consensus nodes.

Since most chains value decentralization, they want their full nodes to run on consumer hardware. This is why they limit how fast their state can grow by enforcing block/gas size limits.

Fraud and validity proofs

The invention of fraud/validity proofs effectively removes this bottleneck. These are succinct proofs that light nodes can efficiently check to verify that the contents of a block are valid without executing the transactions within it. The beauty of this solution is that any single node holding the complete chain state can generate these proofs. This is extremely powerful, because it means light nodes can operate with nearly the same security guarantees as full nodes while consuming orders of magnitude fewer resources.

Here is a simplified example of a fraud proof. In a fraud proof, a full node gives a light node just enough data to identify an invalid transaction on its own. The first step requires the full node to prove to the light node that a specific piece of data (such as the transaction it claims is invalid) belongs to the block.

This is fairly simple as Merkle trees can be used to do this. By using Merkle trees, full nodes can effectively prove to light nodes that a specific transaction is included in a block without requiring them to download the entire block.
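A minimal sketch of Merkle inclusion proofs in Python, assuming a plain binary SHA-256 tree that duplicates the last node on odd-sized levels (a Bitcoin-style convention chosen for brevity, with no leaf/node domain separation). Celestia itself uses namespaced Merkle trees, which this sketch does not model. The light node only needs the root from the header plus about log2(n) sibling hashes.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """Return every level of the tree, from hashed leaves up to the root."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level = level + [level[-1]]  # duplicate the last node on odd levels
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(leaves: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Full node side: collect (sibling_hash, sibling_is_left) from leaf to root."""
    proof = []
    for level in build_levels(leaves)[:-1]:
        if len(level) % 2 == 1:
            level = level + [level[-1]]
        sibling = index ^ 1  # the other child of the same parent
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify(root: bytes, leaf: bytes, proof: list[tuple[bytes, bool]]) -> bool:
    """Light node side: recompute the root from one leaf and its proof."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


txs = [f"tx{i}".encode() for i in range(5)]
root = build_levels(txs)[-1][0]  # the commitment a light node sees in the header
p = prove(txs, 3)
print(verify(root, b"tx3", p))     # True
print(verify(root, b"forged", p))  # False
print(len(p))                      # 3 hashes instead of 5 transactions
```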

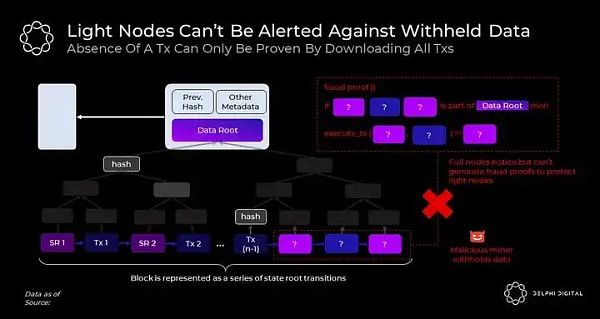

However, while proving the inclusion of a transaction is straightforward, proving the absence of a transaction is not. This is problematic because, as we will see in the next section, proving the absence of a transaction is just as important as proving the inclusion of a transaction for the fraud/validity proof to function effectively.

The data availability problem

For full nodes to generate fraud/validity proofs in the first place, they must be able to compute the state: account balances, contract code, etc.

This requires the full node to download and execute "all" transactions. But what if a malicious consensus releases the block header but retains some transactions in the block body?

In this attack scenario, full nodes can easily notice the loss of data in the body and thus refuse to follow the chain. However, light nodes that only download the header will continue to follow it because they won't notice any difference.

Data availability is a prerequisite for fraud and validity proofs

Note that this problem affects both fraud-proof and validity-proof systems, since honest full nodes cannot generate fraud/validity proofs without access to the full data. In the event of a data withholding attack:

In fraud-proof systems, light nodes will follow the headers of a potentially invalid chain, because honest full nodes can no longer verify the block (and therefore cannot produce fraud proofs for it).

In validity-proof systems, light nodes will follow the header of a chain whose state is valid but unknown. Honest nodes, not knowing the state, can no longer produce new blocks. This means the chain cannot progress without the attacker's consent, which is similar to the attacker having custody of everyone's funds.

In either case, light nodes will not notice the problem and will inadvertently fork away from full nodes.

The data availability problem is inherently subtle, because the only way to be sure that no transactions are being withheld is to download all of them, and that is exactly what resource-constrained light nodes want to avoid.

How Celestia solves the data availability problem

Now that we have identified the problem, let's see how Celestia solves it. Earlier, when we distinguished between validity and consensus, we mentioned that Celestia does not care about the validity of transactions. What Celestia does care about is whether the block producer has fully published the data behind the header.

Celestia is extremely scalable because its availability rules can be enforced independently by light nodes with limited resources. This is achieved through a novel process called data availability sampling.

Data Availability Sampling (DAS)

DAS relies on a long-standing data protection technique called erasure coding. While the way Celestia implements erasure coding is beyond the scope of this report, it is important to understand its basic principles.

Applying erasure coding to a piece of data extends it in such a way that the original data can be recovered from a fixed fraction of the extended data. For example, a piece of data can be erasure-coded to double its size and then fully recovered from *any* 50% of the extended data. By erasure-coding blocks in a specific way, Celestia lets resource-constrained light nodes randomly sample a small, fixed number of chunks from a block and thereby gain a high-probability guarantee that all the other chunks are available to the network. This guarantee relies on the number of nodes participating in the sampling process.
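To illustrate the "recover from any 50%" property, here is a one-dimensional Reed-Solomon sketch over a prime field. It treats k data symbols as the values of a polynomial of degree below k at x = 0..k-1, extends them to 2k evaluations, and recovers the original from any k of them by Lagrange interpolation. The modulus and helper names are illustrative assumptions; Celestia applies Reed-Solomon coding in two dimensions over a square of data, which this sketch does not reproduce.

```python
import random

P = 2**31 - 1  # a prime modulus, chosen for illustration


def lagrange_eval(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate the unique polynomial through `points` at `x`, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P  # Fermat inverse
    return total


def extend(data: list[int]) -> list[int]:
    """Systematic encoding: keep the k data symbols, append k parity symbols."""
    k = len(data)
    points = list(enumerate(data))  # data[i] is the polynomial's value at x = i
    return data + [lagrange_eval(points, x) for x in range(k, 2 * k)]


def recover(shares: dict[int, int], k: int) -> list[int]:
    """Rebuild the original k symbols from any k surviving (x, value) shares."""
    points = list(shares.items())[:k]
    return [lagrange_eval(points, x) for x in range(k)]


data = [17, 42, 7, 99]
extended = extend(data)  # 8 symbols: twice the original size
survivors = random.sample(range(len(extended)), k=len(data))  # any 50%
recovered = recover({x: extended[x] for x in survivors}, len(data))
print(recovered == data)  # True
```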

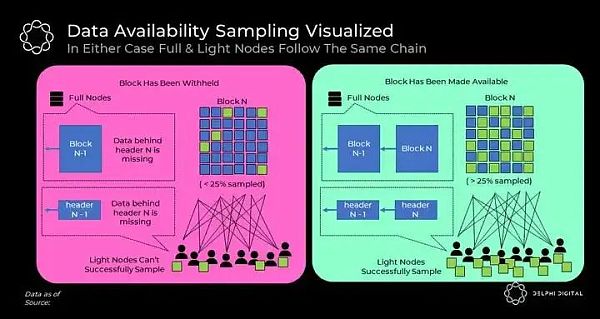

Think of DAS as a game in which a malicious block producer tries to hide data in a block without being noticed by light nodes. The block producer publishes a header. Based on the data root committed in the header, each light node starts requesting random chunks of the block (along with the corresponding Merkle proofs showing that each chunk is included in the block).

The game has two outcomes:

1. Data is available -> The malicious block producer releases the chunks that light nodes request. The released chunks propagate through the network. Although each sampling light node samples only a few chunks, any honest full node in the network will be able to recover the original block from the broadcast chunks, provided the light nodes collectively sample more than 25% of the erasure-coded chunks. With the full block now available to the network, all light nodes will eventually see their sampling checks succeed and be confident that the full data behind the header is indeed available to full nodes.

By independently verifying that the data is available, light nodes can now rely on fraud/validity proofs alone, because they know that any single honest full node can generate these proofs for them.

2. Data is withheld -> The malicious block producer does not release the requested chunks. Light nodes notice that their sampling checks have failed.

Note that this no longer poses a serious threat to security, because a malicious consensus can no longer trick light nodes into accepting a chain that full nodes reject. Blocks with missing data instead appear as liveness failures to both full nodes and data-sampling light nodes. In this case, the chain can be safely recovered through the ultimate security mechanism of every blockchain: social consensus.

In summary, in either case, full nodes and data-sampling light nodes ultimately follow the same chain and therefore operate with nearly the same security guarantees.
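The strength of a single light node's guarantee can be estimated with a back-of-the-envelope model. Assume, for illustration, that a producer must withhold at least a fraction f = 0.25 of the extended chunks to make a block unrecoverable, and that a light node draws s chunk indices uniformly at random with replacement. The chance that every sample lands on an available chunk, so the attack goes unnoticed, is (1 - f)^s. Both f and the sampling model are simplifying assumptions, not protocol constants; the Monte Carlo function is only a sanity check on the formula.

```python
import math
import random


def miss_probability(f: float, s: int) -> float:
    """Chance that s uniform samples (with replacement) all avoid the withheld fraction f."""
    return (1 - f) ** s


def samples_needed(f: float, confidence: float) -> int:
    """Smallest s that detects withholding of fraction f with the given confidence."""
    return math.ceil(math.log(1 - confidence) / math.log(1 - f))


def simulate(f: float, s: int, trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the miss probability."""
    rng = random.Random(seed)
    misses = sum(all(rng.random() >= f for _ in range(s)) for _ in range(trials))
    return misses / trials


f = 0.25  # assumed fraction of chunks an attacker must withhold
for s in (5, 10, 17, 30):
    print(s, round(miss_probability(f, s), 5), simulate(f, s, trials=20_000))
print(samples_needed(f, 0.99))  # 17
```

Under these assumptions, a light node that takes a few dozen samples per block makes undetected withholding very unlikely, regardless of how large the block is.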

How much scale can Celestia offer?

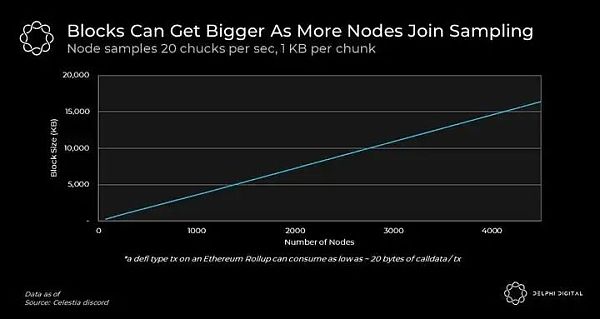

A key property of DAS is that the more data is collectively sampled, the larger the block for which the same probabilistic availability guarantee can be provided. In the context of Celestia, this means the block size can be safely increased (i.e. supporting higher TPS) as more nodes participate in the sampling process.

However, DAS comes with inherent trade-offs. For technical reasons (which we will not discuss here), the headers that data-sampling light nodes download grow in proportion to the square root of the block size. Therefore, a light node wishing to have nearly the same security as a full node will incur an O(√n) bandwidth cost, where n is the block size.
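A rough sketch of where the √n comes from, assuming a Celestia-style two-dimensional layout: the block is split into fixed-size shares arranged in a k×k square, erasure-coded to 2k×2k, and the header commits to one root per row and per column, i.e. 4k roots. The 512-byte share size and 32-byte root size below are simplifying assumptions for illustration (roots in a namespaced Merkle tree are larger in practice).

```python
import math

SHARE_SIZE = 512  # bytes per share (assumed for illustration)
ROOT_SIZE = 32    # bytes per row/column root (simplified assumption)


def square_width(block_bytes: int) -> int:
    """Width k of the smallest k x k square of shares that holds the block."""
    shares = math.ceil(block_bytes / SHARE_SIZE)
    return math.isqrt(shares - 1) + 1 if shares > 1 else 1  # ceil(sqrt(shares))


def header_bytes(block_bytes: int) -> int:
    """Row roots plus column roots of the 2k x 2k extended square: 4k roots."""
    k = square_width(block_bytes)
    return 4 * k * ROOT_SIZE


for mib in (2, 8, 32, 128):
    size = mib * 1024 * 1024
    print(f"{mib:>4} MiB block -> k={square_width(size):>4}, header {header_bytes(size):>6} bytes")
```

Each 4× increase in block size only doubles the header a light node must download.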

In terms of scalability, two dominant factors are at work:

How much data can be collectively sampled

The target header size for light nodes

Either of these can limit Celestia's DA throughput.

Below we share current estimates from the Celestia team's research, which take the first factor into account.

Importantly, block sizes can be much larger than shown here, because DAS can be performed by a large number of participants with limited resources. Even smartphones can participate in the sampling process and contribute to Celestia’s security and throughput. In fact, here is an example of a smartphone contributing to Celestia’s security!

In practice, we expect the number of sampling nodes to correlate with user demand. This is very exciting, because it makes Celestia’s block space supply a function of demand. It means that, unlike monolithic chains, Celestia can keep fees low and stable as user demand grows.

Now let's zoom in on the second factor: the size of the headers that light nodes download grows proportionally to the square root of the block size. While this may appear to be a limiting factor, the increased resource requirements may be offset over time by improvements in network bandwidth.

Also note that DAS has a multiplier effect on bandwidth improvements. If the average light node's bandwidth capacity grows by X, Celestia's DA throughput can safely grow by X²!
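The X² figure follows directly from the square-root relationship described above. As a sketch, write a light node's header cost as proportional to √n with an illustrative constant c, and let B be the bandwidth a light node can spend on headers:

```latex
h(n) = c\sqrt{n} \le B
\;\Longrightarrow\;
n_{\max} = \left(\frac{B}{c}\right)^{2},
\qquad
n_{\max}' = \left(\frac{X B}{c}\right)^{2} = X^{2}\, n_{\max}.
```

For example, under this model doubling light-node bandwidth (X = 2) allows roughly four times the block size.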

Finally, unlike Moore's law for computing, which is expected to end sometime in the 2020s, Nielsen's law of internet bandwidth seems likely to hold for decades to come. Thus, by moving computation entirely off-chain, Celestia can take advantage of exponential growth in network bandwidth.

All things considered, Celestia is expected to be able to support virtually any user demand for the foreseeable future while keeping verification costs fairly stable. By forgoing execution and introducing DAS, Celestia can mimic the scalability properties of BitTorrent, the most scalable decentralized protocol on the internet.

4. Properties of the modular Celestia stack

Now that we’ve covered how Celestia works, let’s look at the benefits of modular blockchains. Reimagining blockchain as a modular stack has implications beyond pure DA scalability. Below we cover 8 unique design properties of the modular Celestia stack that may not be immediately obvious.

Self-sovereignty

Today, rollups are effectively children of the Ethereum chain. This is because they publish their headers to Ethereum and their fraud/validity proofs are executed on-chain. Their canonical state is therefore determined by a set of smart contracts on Ethereum.

Recognizing this is important because it means rollups must have on-chain governance mechanisms by default. However, on-chain governance has risks such as low voter participation, vote buying, and centralization. Due to these complexities, on-chain governance has not yet been adopted by most blockchains as the preferred governance method.

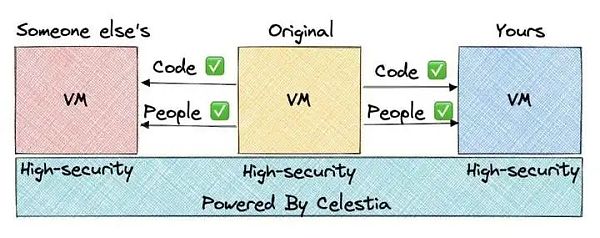

Rollups on Celestia work very differently. As we saw earlier, Celestia does not interpret the data it stores and leaves all interpretation to rollup nodes. Therefore, the canonical state of a rollup on Celestia is determined independently by the nodes that choose to run its client software. In fact, this is exactly how L1 blockchains generally operate today.

Therefore, a rollup on Celestia is essentially a sovereign blockchain. By upgrading their software and choosing to interpret the underlying data differently, nodes are free to hard fork or soft fork. For example, if a rollup community is locked in a contentious debate about changing the block size or token supply, opponents could update their software to follow different validity rules. When you consider its deeper implications, this property turns out to be more exciting than it first appears.
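A minimal sketch of such a sovereign fork, assuming a hypothetical rollup whose only disputed validity rule is a per-block transaction cap. Both software versions read the identical ordered data from the DA layer; they simply interpret it differently, so each community ends up with its own canonical chain while both keep the same ordering and availability guarantees. Skipping a rule-breaking block (rather than halting) is itself a simplifying assumption of this toy model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RollupBlock:
    height: int
    txs: tuple[str, ...]


def canonical_chain(blocks: list[RollupBlock], max_txs: int) -> list[int]:
    """Return the heights a node accepts under its own block-size rule.

    A block that breaks the rule is still stored on the DA layer; this
    node's software just does not treat it as part of the rollup.
    """
    return [b.height for b in blocks if len(b.txs) <= max_txs]


# One ordered history, exactly as published on the DA layer.
history = [
    RollupBlock(1, ("a", "b")),
    RollupBlock(2, ("c", "d", "e", "f")),
    RollupBlock(3, ("g",)),
]

legacy_nodes = canonical_chain(history, max_txs=2)    # old rule
upgraded_nodes = canonical_chain(history, max_txs=4)  # forked rule
print(legacy_nodes)    # [1, 3]
print(upgraded_nodes)  # [1, 2, 3]
```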

In the world of L1 blockchains, contentious hard forks are often considered risky, because splitting the chain ultimately weakens its security. As a result, people often avoid forking at all costs, which inhibits experimentation.

For the first time in blockchain history, Celestia makes it possible to fork a blockchain without worrying about diluting its security. This is because all forks still use the same DA layer and keep the security benefits of Celestia's consensus layer. Imagine how much more smoothly the Bitcoin block size debate or the Ethereum DAO fork could have been resolved if blockchains had worked this way from the beginning.

We expect this will accelerate experimentation and innovation in the blockchain space to levels beyond what is possible with today's infrastructure. The visualization below is from a thread that illustrates this perfectly.

Flexibility

Another force that will accelerate the pace of innovation, particularly in the virtual machine space, is Celestia's execution-agnostic nature.

Unlike Ethereum rollups, rollups on Celestia do not need to design their fraud/validity proofs to be interpretable by the EVM. This opens up the VM design space on Celestia to a larger developer community and exposes it to stiff competition.

Today, we've seen alternative virtual machines emerge and gain traction, including Starkware, LLVM, MoveVM, CosmWasm, FuelVM, and others. Custom virtual machines can innovate on every aspect of execution (supported operations, database structures, transaction formats, programming languages, and more) to achieve optimal performance for specific use cases.

While Celestia itself does not directly scale execution, we expect its execution-agnostic nature to lay the foundation for a highly competitive VM market pursuing high-performance, scalable execution.

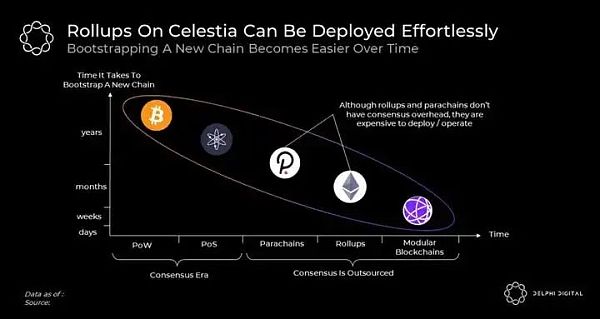

Easy deployment

If there is one trend in the crypto space that has held steady over the years, it is that deploying a blockchain keeps getting easier.

In the early days, decentralized networks could not launch without PoW hardware; this bottleneck was eventually removed with the introduction of PoS. Alongside PoS, mature developer tooling such as the Cosmos SDK has made it easier to launch new blockchains. Despite this progress, however, the overhead of bootstrapping PoS consensus is still far from ideal. Developers must find a new validator set, ensure their tokens are widely distributed, and handle the complexities of consensus, among other things.

Polkadot parachains and Ethereum rollups remove this bottleneck, but parachains are still expensive to deploy and rollups are still expensive to operate.

Celestia looks like the next step in this trend. The Celestia team is building Optimint, an optimistic rollup (ORU) implementation for the Cosmos SDK. Together with other tooling, it points toward a future in which any chain can be deployed without developers having to worry about consensus overhead or expensive deployment/operating costs. A new chain could be deployed in seconds and let users interact with it securely from day one.

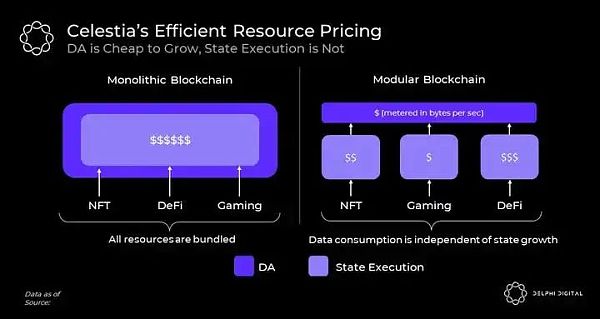

Efficient resource pricing

Ethereum plans to roll out its sharding roadmap in phases over several years. Under this plan, it will have pure data shards, which rollups can use only to publish data. As the base layer's data capacity increases, this naturally leads to cheaper rollup fees. However, this does not mean that Ethereum will abandon its stateful execution environment on L1.

Ethereum has an enshrined execution environment. To run a fully validating rollup node on Ethereum, one must also execute Ethereum's L1 state. However, Ethereum already has a huge state, and executing on top of it is by no means cheap. This massive state imposes a growing amount of technical debt on rollups.

Even worse, the same unit used to limit L1 state growth (i.e. L1 gas) is also used to meter rollups' historical data. Therefore, any time activity surges on L1, fees for all rollups go up.

In Celestia's modular blockchain stack, active state growth and historical data are handled completely separately, as they should be. Celestia's block space stores only historical rollup data, metered and paid for in bytes, while all state execution is metered by each rollup in its own independent unit. Because activity in different execution environments is subject to different fee markets, a surge of activity in one does not degrade the user experience in another.
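To illustrate the isolation argument, here is a toy model that borrows an EIP-1559-style base-fee update from Ethereum, simplified to floating point; the source does not specify how Celestia or its rollups actually price resources, so this rule is an assumption. With one shared unit, a surge in rollup A raises the price rollup B pays; with separate markets, only A's fee moves.

```python
class FeeMarket:
    """Toy EIP-1559-style market: with usage capped at 2x target, the fee moves at most 12.5% per block."""

    def __init__(self, target: int, base_fee: float = 10.0):
        self.target = target
        self.base_fee = base_fee

    def update(self, used: int) -> None:
        # Above target -> fee rises; below target -> fee falls; at target -> unchanged.
        self.base_fee *= 1 + (used - self.target) / self.target / 8


def run(shared: bool, blocks: int = 10) -> tuple[float, float]:
    """Return (rollup A's fee, rollup B's fee) while A runs at 2x its usual load."""
    usage_a, usage_b = 2_000, 1_000  # B stays at its usual 1,000 units
    if shared:
        market = FeeMarket(target=2_000)  # one unit meters both rollups
        for _ in range(blocks):
            market.update(usage_a + usage_b)
        return market.base_fee, market.base_fee
    market_a, market_b = FeeMarket(target=1_000), FeeMarket(target=1_000)
    for _ in range(blocks):
        market_a.update(usage_a)
        market_b.update(usage_b)
    return market_a.base_fee, market_b.base_fee


for shared in (True, False):
    fee_a, fee_b = run(shared)
    label = "shared unit" if shared else "separate markets"
    print(f"{label:>16}: A pays {fee_a:.2f}, B pays {fee_b:.2f}")
```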

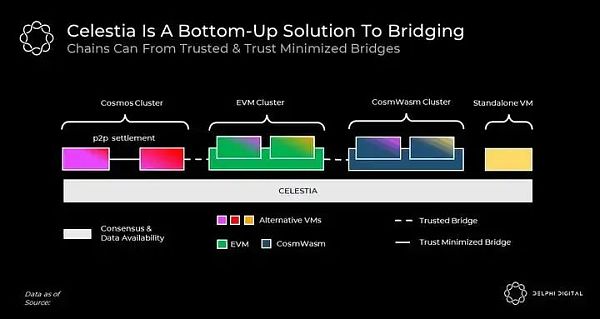

Trust-minimized bridges

One way to reason about the entire L1 and L2 landscape is to think of it as a set of chains and bridges.

Generally speaking, bridges come in two forms: trusted and trust-minimized. Trusted bridges rely on the consensus of the counterparty chain, while trust-minimized bridges can be secured by any single full node.

For two blockchains to form a trust-minimized bridge, they need two things: (i) the same DA guarantees, and (ii) a way to interpret each other's fraud/validity proofs.

Since L1s do not satisfy the first condition (shared DA), they cannot form trust-minimized bridges with each other. The best they can do is rely on each other's consensus to communicate, which necessarily means reduced security.

Rollups, on the other hand, communicate with Ethereum in a trust-minimized manner. Ethereum can access a rollup's data and execute its fraud/validity proofs on-chain. This is why a rollup can have a trust-minimized bridge to Ethereum that is secured by any single rollup node.

Chains connected by trust-minimized bridges can be thought of as clusters. Celestia provides the foundation for clusters to form between chains, but it does not force them to do so. Chains on top of Celestia can stay fully independent, or connect to one another through trusted or trust-minimized bridges anywhere in a vast bridge design space.

Contrary to popular belief, fraud and validity proofs do not have to be executed on-chain to be effective. They can also be distributed over the p2p layer (as in the Cosmos cluster shown above) and verified client-side.

Minimal governance

Blockchain governance progresses slowly. Improvement proposals often take years of community coordination to implement. While this caution exists for security reasons, it significantly slows the pace of development in the blockchain space.

Modular blockchains offer a better approach to governance: the execution layer can move fast and break things independently, while the consensus layer stays resilient and robust.

If you look at the history of EIPs, you'll see that a large share of proposals concern execution functionality and performance. They typically involve repricing operations, adding new opcodes, defining token standards, and so on.

In a modular blockchain stack, these discussions would involve only participants in the relevant execution layer and would not reach the consensus layer. This in turn means far fewer issues need to be resolved at the bottom of the stack, where progress is necessarily slow because of the high bar for social coordination.

Decentralized block verification, not production

Decentralization often means different things to different teams.

Many projects value highly decentralized block production and emulate PoW's ability to decentralize block production in a PoS setting. Algorand’s random leader election, Avalanche’s subsampled voting, and Ethereum’s consensus sharding are all well-known examples of this. These design choices assume low resource requirements for block producers to enable highly decentralized block production.

While these are valuable technologies, it is difficult to say whether they actually lead to more meaningful decentralization than other technologies.

This is because block production tends to centralize due to economies of scale outside the protocol, with factors such as pooling and cross-chain MEV acting as important catalysts. As a rule of thumb, regardless of the technology, stake/hash power ultimately follows a Pareto distribution.

Besides these, there is a more important point about this topic that is often overlooked. The most important factor in decentralization is block verification rather than production.

As long as the actions of a small group of consensus nodes can be audited by a large number of participants, the blockchain will continue to function as the trust machine we love.

This is the core argument of Vitalik’s recent Endgame article: “So what’s the result? Block production is centralized, block validation is trustless and highly decentralized, and censorship is still prevented.”

Similarly, Celestia places higher resource requirements on block producers but low resource requirements on block verifiers, resulting in a highly decentralized, censorship-resistant network.

Simplicity

Clearly identifying the scalability bottlenecks of blockchains helped the Celestia team make the simplest possible design choices.

While Ethereum plans to implement DAS at the end of its sharding roadmap, Celestia prioritized it and explicitly chose not to go down the overly complicated consensus sharding route.

Similarly, instead of implementing a fancy new consensus protocol, Celestia chose plain old Tendermint, with its mature tooling and broad developer/validator support.

We believe these design choices will help Celestia stand out over time, and will be increasingly appreciated as rollups seek inexpensive data availability solutions.

5. Future challenges/limitations

Celestia is pioneering a new blockchain design. While we believe it is a superior model to existing solutions, some challenges remain unexplored.

The first challenge we foresee has to do with determining the appropriate block size. As we explore in this post, Celestia’s block size can safely grow with the number of data sampling nodes in the network. However, data sampling is not a Sybil-resistant process. Therefore, there is no verifiable way to determine the number of nodes in the network. Furthermore, since nodes participating in sampling are not explicitly rewarded by the protocol, assumptions about sampling must rely on implicit incentives. The process of determining and updating the target block size will be subject to social consensus, which is a new challenge for consensus governance.

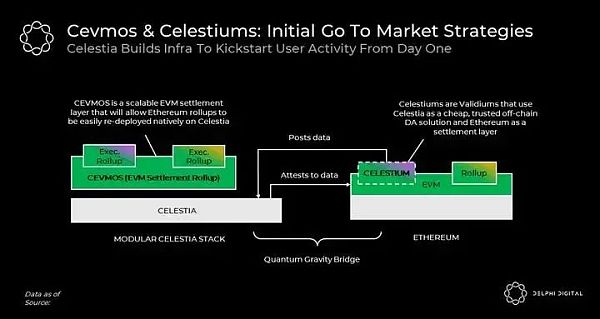

Another challenge ahead relates to bootstrapping Celestia's network effects. A dedicated DA layer without execution doesn't do much on its own. Therefore, unlike other blockchains, Celestia will rely on other execution chains to bring in user activity. To this end, one of Celestia's initial use cases will be serving as an off-chain DA solution for validiums on Ethereum (i.e. Celestiums). Celestiums are the low-hanging fruit for kick-starting demand for Celestia blockspace.

Another ongoing project is Cevmos, a Cosmos SDK chain with a built-in EVM designed specifically for rollup settlement. Rollups on top of Cevmos will publish their data to Cevmos, which will in turn publish it to Celestia. Just like Ethereum today, Cevmos will verify rollup proofs as a settlement layer. The goal of Cevmos is to let Ethereum rollups launch natively on Celestia without changes to their codebases.

Finally, we foresee limitations related to the utility of Celestia's native token. Like any other chain, Celestia will have a fee market, and its native token will accrue value from demand for Celestia block space. However, since Celestia does not perform state execution (apart from a minimal amount for PoS-related activity), its token's utility as a source of liquidity for DeFi and other verticals will be somewhat limited compared with most chains. For example, unlike ETH, which can move freely between rollups and Ethereum in a trust-minimized manner, Celestia's native token must rely on trusted bridges to be ported to other chains.

6. Conclusion

We believe modular blockchains are a paradigm shift in blockchain design, and their network effects are expected to become increasingly evident in the coming years. In particular, Celestia’s mainnet is expected to launch in 2023.

By decoupling execution from consensus, Celestia not only achieves BitTorrent-style scalability and decentralization, but also provides unique advantages, including trust-minimized bridges, sovereign chains, efficient resource pricing, simpler governance, easy chain deployment and flexible virtual machines.

As the first dedicated DA layer, Celestia does less. By doing less, it achieves more.
