
XingChi


One day in early December 2012, a secret auction was taking place in a casino hotel in Lake Tahoe, a ski resort in the United States.

Lake Tahoe sits on the border between California and Nevada. It is the largest alpine lake in North America, with a sapphire-like surface and top-notch ski slopes. "The Godfather Part II" was filmed here, and Mark Twain once lingered here. Because it is only about 200 miles from the San Francisco Bay Area, it is often called the "backyard of Silicon Valley". Bigwigs such as Zuckerberg and Larry Ellison have also bought up land and built luxury houses here.

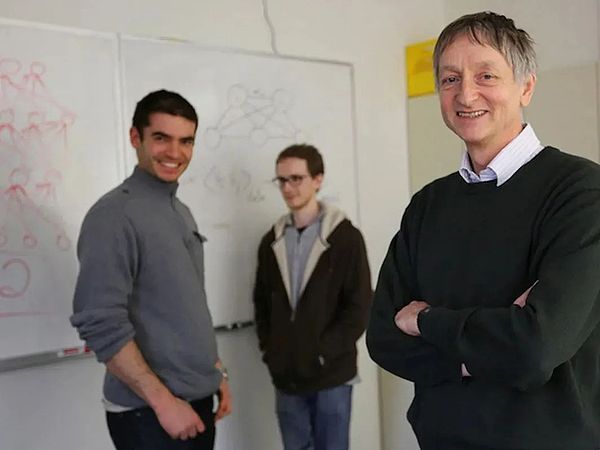

The object of the secret auction was a company that had been established only a month earlier and had just 3 employees - DNNresearch, founded by Geoffrey Hinton, a professor at the University of Toronto, and his two students.

The company had no tangible products or assets, but the identity of its suitors hinted at its weight - the four buyers were Google, Microsoft, DeepMind and Baidu.

Harrah's Hotel, Lake Tahoe, 2012, where the secret auction was held

Hinton, 65, thin and suffering from lumbar disc pain, sat on the floor of Room 703 of the hotel and set the rules for the auction: bidding started at $12 million, with each raise at least $1 million.

A few hours later, the bidders pushed the price to $44 million. Hinton felt a little dizzy, "It felt like we were in a movie," so he decisively called a halt and decided to sell the company to the last bidder, Google.

Interestingly, one of the roots of this $44 million bid traced back to Google itself, six months earlier.

In June 2012, Google Brain, a research department of Google, released the results of its cat neurons project (the "Google Cat"). Simply put, the project used algorithms to identify cats in YouTube videos. It was initiated by Andrew Ng, who had moved from Stanford to Google; he brought in Google legend Jeff Dean and secured a large budget from Google founder Larry Page.

The Google Cat project built a neural network, downloaded a large number of videos from YouTube, and left them unlabeled so that the model could observe and learn the characteristics of cats by itself. It was trained on 16,000 CPUs spread across Google's data centers (the company refused to use GPUs on the grounds that they were too complicated and costly) and finally achieved a recognition accuracy of 74.8%. This number shocked the industry.

Andrew Ng stepped away from the "Google Cat" project as it neared completion and devoted himself to his own Internet education project. Before leaving, he recommended Hinton to the company to take over his work. Faced with the invitation, Hinton said that he would not leave the university and was only willing to go to Google "for a summer." Because of the peculiarities of Google's recruitment rules, Hinton, then 64, became the oldest summer intern in Google's history.

Hinton has been fighting at the forefront of artificial intelligence since the 1980s. As a professor, he has trained many students and is a master in the field of deep learning. Therefore, when he learned the technical details of the "Google Cat" project, he immediately saw the flaws hidden behind the project's success: "They ran the wrong neural network and used the wrong computing power."

For the same task, Hinton thought he could do better. So after the short "internship period", he immediately took action.

Hinton turned to two of his students, Ilya Sutskever and Alex Krizhevsky, both of whom were Jews born in the Soviet Union. The former was extremely talented in mathematics, and the latter was good at engineering implementation. The three worked closely together to create a new neural network, immediately entered it in the ImageNet image recognition competition (ILSVRC), and won the championship with an astonishing 84% recognition accuracy.

In October 2012, Hinton's team introduced the champion algorithm AlexNet at the Computer Vision Conference held in Florence. Compared with Google Cat, which used 16,000 CPUs, AlexNet used only 2 NVIDIA GPUs, which caused a sensation in academic and industrial circles. The AlexNet paper became one of the most influential papers in the history of computer science and has been cited more than 120,000 times, while Google Cat was quickly forgotten.

DNNresearch Company Trio

Yu Kai, who won the first ImageNet competition, was extremely excited after reading the paper, "like an electric shock." Yu Kai is a deep learning expert born in Jiangxi who had just moved from NEC to Baidu. He immediately wrote an email to Hinton proposing cooperation. Hinton readily agreed, then simply packaged himself and his two students into a company and invited buyers to bid, which led to the scene at the beginning.

After the auction, a bigger competition started: Google pressed its advantage and acquired DeepMind in 2014, so that "all the heroes in the world were in its grasp"; DeepMind launched AlphaGo in 2016, shocking the world; Baidu, which lost to Google, resolved to bet on AI and invested 100 billion in ten years. Yu Kai later helped Baidu recruit Andrew Ng, and he himself left a few years later to found Horizon.

Microsoft seemed to be a step behind, but it eventually won the biggest trophy - OpenAI, whose founders include Ilya Sutskever, one of Hinton's two students. Hinton himself stayed at Google until 2023, during which time he won the ACM Turing Award. Of course, compared with Google's $44 million (Hinton received 40%), the $1 million Turing Award prize seems like pocket money.

From Google Cat in June, to the AlexNet paper in October, to the Lake Tahoe auction in December: in barely six months, nearly all the groundwork for the AI wave was laid - the prosperity of deep learning, the rise of GPUs and Nvidia, the dominance of AlphaGo, the birth of the Transformer, the emergence of ChatGPT... The grand movement of the silicon-based heyday had sounded its first note.

In the 180 days from June to December 2012, the fate of carbon-based humans was forever changed - only very few people realized this.

Stanford University professor Fei-Fei Li was one of those very few people.

In 2012, when the results of Hinton's ImageNet competition came out, Fei-Fei Li, who had just given birth, was still on maternity leave, but the error rate of Hinton's team made her realize that history was being rewritten. As the founder of the ImageNet Challenge, she bought the last flight of the day to fly to Florence and personally presented the award to Hinton's team[2].

Fei-Fei Li was born in Beijing and grew up in Chengdu. At the age of 16, she immigrated to the United States with her parents. She helped in a laundry while finishing her studies at Princeton. In 2009, Fei-Fei Li joined Stanford as an assistant professor. Her research direction is computer vision and machine learning. The goal of this discipline is to enable computers to understand the meaning of pictures and images like humans.

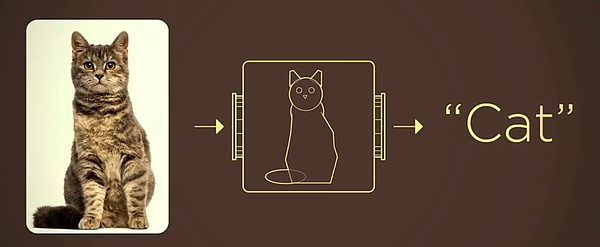

For example, when a camera takes a picture of a cat, it just converts light into pixels through the sensor and does not know whether the object in the lens is a cat or a dog. If the camera is compared to the human eye, the problem that computer vision solves is to equip the camera with a human brain.

The traditional way is to abstract things in the real world into mathematical models, such as abstracting the characteristics of a cat into simple geometric shapes, which can greatly reduce the difficulty of machine recognition.

Image source: Fei-Fei Li's TED speech

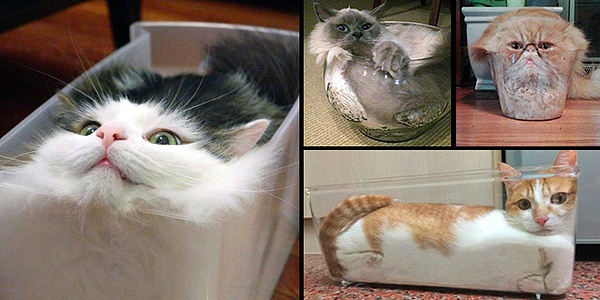

But this idea has very large limitations, because cats are likely to be like this:

To enable computers to recognize "liquid cats", deep learning pioneers such as Geoffrey Hinton and Yann LeCun had been exploring since the 1980s. But they always ran into a bottleneck in either computing power or algorithms: good algorithms lacked sufficient computing power to drive them, while algorithms with low computing power requirements could not reach the recognition accuracy needed for industrial use.

If the problem of "liquid cat" cannot be solved, the appeal of deep learning can only remain at the theoretical level, and industrial scenarios such as autonomous driving, medical imaging, and precise advertising push are just castles in the air.

In short, the development of deep learning requires the three pillars of algorithms, computing power, and data to drive it. Algorithms determine how computers recognize things; but algorithms require sufficiently large computing power to drive them; at the same time, the improvement of algorithms requires large-scale, high-quality data; the three complement each other and are indispensable.

After 2000, although the computing power bottleneck was gradually eliminated with the rapid development of chip processing capabilities, the mainstream academic community still had little interest in the deep learning route. Fei-Fei Li realized that the bottleneck might not be the accuracy of the algorithm itself, but the lack of high-quality, large-scale data sets.

Fei-Fei Li's inspiration came from the way three-year-old children understand the world. Taking cats as an example, children meet cats again and again under the guidance of adults and gradually grasp what a cat is. If a child's eyes are regarded as a camera, and one eye movement is equivalent to pressing the shutter, then a three-year-old child has taken hundreds of millions of photos.

Apply this method to a computer: show the computer pictures of cats and other animals over and over, with the correct answer written on the back of each picture. Every time the computer sees a picture, it checks its guess against the answer on the back. As long as this is repeated enough times, the computer may be able to grasp the meaning of "cat" the way a child does.
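The "answer on the back of each picture" idea is what is now called supervised learning. The following is a minimal sketch of that loop, not anything taken from ImageNet or AlexNet: it assumes a toy perceptron, and the two-number "pictures", the labels, and the function names are all hypothetical and chosen only for illustration.

```python
# Toy "pictures": two hypothetical features per example, with the
# "answer written on the back" as a label (1 = cat, 0 = not a cat).
EXAMPLES = [
    ((0.9, 0.8), 1),
    ((0.8, 0.9), 1),
    ((0.7, 0.6), 1),
    ((0.2, 0.1), 0),
    ((0.1, 0.3), 0),
    ((0.3, 0.2), 0),
]


def predict(weights, bias, features):
    """Guess 1 (cat) if the weighted sum is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0


def train(examples, epochs=20, lr=0.1):
    """Perceptron rule: look at a picture, check the answer, adjust."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)
            # Only wrong guesses (error != 0) change the weights.
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias


weights, bias = train(EXAMPLES)
accuracy = sum(predict(weights, bias, f) == y for f, y in EXAMPLES) / len(EXAMPLES)
print(f"training accuracy: {accuracy:.2f}")
```

With only six examples the model quickly memorizes them. The point of a dataset on the scale Fei-Fei Li had in mind is that the same check-and-adjust loop, repeated over millions of labeled pictures, has a chance of generalizing to pictures it has never seen.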

The only problem that needs to be solved is: Where can I find so many pictures with answers written on them?

Fei-Fei Li came to China in 2017 and announced the establishment of the Google AI China Center

This was the opportunity for the birth of ImageNet. At that time, even the largest dataset PASCAL only had four categories with a total of 1,578 pictures, and Fei-Fei Li's goal was to create a dataset containing hundreds of categories with a total of tens of millions of pictures. It doesn't sound difficult now, but you have to know that it was 2006, and the most popular mobile phone in the world was still Nokia 5300.

Relying on Amazon's crowdsourcing platform, Mechanical Turk, Fei-Fei Li's team got through the huge workload of manual annotation. In 2009, the ImageNet dataset, containing 3.2 million pictures, was born. With a picture dataset in hand, algorithms could be trained on it to improve computer recognition capabilities. But compared with the hundreds of millions of photos a three-year-old child has taken, 3.2 million was still too small.

In order to expand the data set continuously, Fei-Fei Li decided to follow the popular practice in the industry and hold an image recognition competition. Participants bring their own algorithms to identify the pictures in the data set, and the one with the highest accuracy wins. But the deep learning route was not mainstream at the time. At the beginning, ImageNet could only "hang" under the well-known European competition PASCAL to barely get enough participants.

By 2012, ImageNet had expanded to 15 million pictures in 1,000 categories. Fei-Fei Li had spent 6 years making up the shortfall in data. However, the best ILSVRC result still had an error rate of 25%, and the algorithm and computing power sides had yet to show enough persuasiveness.

At this point, Professor Hinton came on stage with AlexNet and two GTX580 graphics cards.

The champion algorithm of Hinton's team, AlexNet, is a Convolutional Neural Network (CNN). "Neural network" is an extremely high-frequency term in the field of artificial intelligence and a branch of machine learning. Its name and structure are borrowed from the way the human brain works.

The process of human recognition of objects is that the pupil first takes in pixels, the cerebral cortex performs preliminary processing through edges and directions, and then the brain makes judgments through continuous abstraction. Therefore, the human brain can distinguish objects based on some features.

For example, without showing the whole face, most people can recognize who is in the picture below:

Neural networks are in effect simulating the recognition mechanism of the human brain. In theory, whatever intelligence the human brain can achieve, a computer can achieve as well. Compared with methods such as SVMs, decision trees, and random forests, only by simulating the human brain can we process unstructured data such as the "Liquid Cat" and "Half Trump".

But the problem is that the human brain has about 100 billion neurons, and the connections between them (that is, synapses) number in the trillions, forming an extremely complex network. In contrast, the "Google Cat", built on 16,000 CPUs, had a total of 1 billion connections inside, which already made it the most complex computer system at the time.

This is why even the "father of artificial intelligence" Marvin Minsky was not optimistic about this route. When he published his new book "The Emotion Machine" in 2007, Minsky still expressed pessimism about neural networks. To change the mainstream machine learning community's long-standing negative attitude towards artificial neural networks, Hinton simply rebranded the field as Deep Learning.

In 2006, Hinton published a paper in Science, proposing the concept of "deep belief neural network (DBNN)" and providing a training method for multi-layer deep neural networks, which is considered a major breakthrough in deep learning. However, Hinton's method consumes a lot of computing power and data, and it is difficult to implement in practice.

Deep learning requires constantly feeding data to the algorithm, and the scale of the data set at that time was too small until ImageNet appeared.

In the first two ImageNet competitions, the participating teams used other machine learning approaches, and the results were quite mediocre. AlexNet, the convolutional neural network adopted by Hinton's team in 2012, built on the work of another deep learning pioneer, Yann LeCun. His LeNet, proposed in 1998, allows the algorithm to extract key features of images, such as Trump's blonde hair.

At the same time, the convolution kernel slides on the input image, so no matter where the object is located, the same features can be detected, greatly reducing the amount of calculation.
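The sliding-kernel idea can be sketched in a few lines. This is only an illustration in plain Python, not LeNet or AlexNet code: the 2x2 pattern, the kernel values, and the helper names are hypothetical. It shows one small set of weights being reused at every image position, so the same feature produces the same peak response wherever it appears.

```python
def conv2d(image, kernel):
    """Slide one kernel over a 2D image (valid padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # The same kernel weights are reused at every position.
            row.append(sum(
                kernel[a][b] * image[i + a][j + b]
                for a in range(kh) for b in range(kw)
            ))
        output.append(row)
    return output


def place_pattern(size, top, left, pattern):
    """Return a size x size image of zeros with `pattern` pasted at (top, left)."""
    image = [[0] * size for _ in range(size)]
    for a, pattern_row in enumerate(pattern):
        for b, value in enumerate(pattern_row):
            image[top + a][left + b] = value
    return image


def argmax2d(grid):
    """Position (row, col) of the largest value in a 2D grid."""
    return max(
        ((i, j) for i in range(len(grid)) for j in range(len(grid[0]))),
        key=lambda pos: grid[pos[0]][pos[1]],
    )


# A hypothetical 2x2 "feature" and a kernel that responds to it.
PATTERN = [[1, 0], [0, 1]]
KERNEL = [[1, -1], [-1, 1]]

peak_a = argmax2d(conv2d(place_pattern(6, 0, 1, PATTERN), KERNEL))
peak_b = argmax2d(conv2d(place_pattern(6, 3, 2, PATTERN), KERNEL))
print(peak_a, peak_b)
```

The strongest response lands exactly where the pattern was pasted in each image, and the detector needs only the kernel's four weights regardless of image size. A layer with a separate weight for every pixel-to-output pair would need far more parameters, which is one way to read the "reduced calculation" claim.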

Based on the classic convolutional neural network structure, AlexNet abandoned the previous layer-by-layer unsupervised method and performed supervised learning on the input values, greatly improving the accuracy.

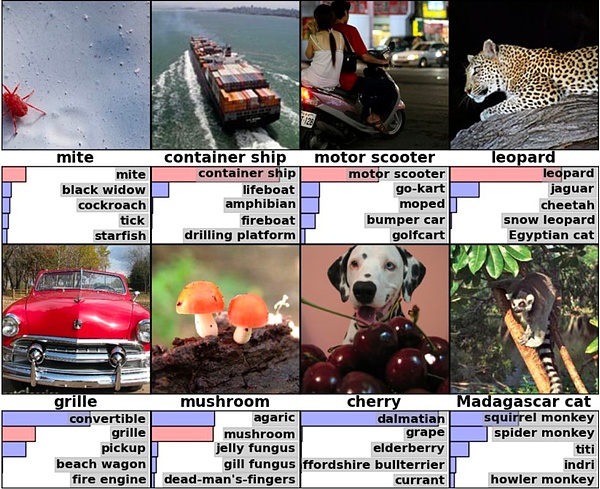

For example, in the picture in the lower right corner of the figure below, AlexNet did not actually recognize the correct answer (Madagascar cat), but it listed small mammals that can climb trees like Madagascar cats, which means that the algorithm can not only recognize the object itself, but also make inferences based on other objects[5].

Image source: AlexNet paper

What the industry is excited about is that AlexNet has 60 million parameters and 650,000 neurons, and a full training of the ImageNet dataset requires at least 262 quadrillion floating point operations. But the Hinton team only used two NVIDIA GTX 580 graphics cards during a week of training.
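To get a feel for these numbers, the back-of-the-envelope calculation below converts the quoted totals into an average sustained rate. It uses only the figures in the paragraph above and assumes, purely for illustration, that the work was spread evenly over a full seven days on both cards.

```python
# Figures quoted in the text above.
TOTAL_FLOPS = 262e15       # "262 quadrillion floating point operations"
NUM_GPUS = 2               # two NVIDIA GTX 580 cards
TRAINING_DAYS = 7          # "a week of training"

SECONDS_PER_DAY = 24 * 60 * 60
training_seconds = TRAINING_DAYS * SECONDS_PER_DAY

# Average rate needed if the work were spread evenly over the whole week
# (an assumption; real training runs are not perfectly uniform).
total_rate = TOTAL_FLOPS / training_seconds
per_gpu_rate = total_rate / NUM_GPUS

print(f"combined: {total_rate / 1e9:.0f} GFLOP/s")
print(f"per GPU:  {per_gpu_rate / 1e9:.0f} GFLOP/s")
```

Under those assumptions the two cards together would have to sustain roughly 433 billion floating point operations per second, or about 217 billion per card, around the clock for a week.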

After the Hinton team won the championship, the most embarrassed was obviously Google.

It is said that Google also tested the ImageNet dataset internally, but its recognition accuracy was far behind that of the Hinton team. Considering that Google had hardware resources unmatched in the industry, the huge data scale of search and YouTube, and a Google Brain project hand-picked by top leadership, the results were obviously not convincing enough.

Without this huge contrast, deep learning might not have shocked the industry and gained recognition so quickly. The reason the industry was excited is that Hinton's team needed only two GPUs to achieve such good results, so computing power was no longer a bottleneck.

During training, the algorithm computes the functions and parameters of the neural network layer by layer to obtain the output, and GPUs happen to have very strong parallel computing capabilities. Andrew Ng had actually demonstrated this in a paper in 2009, but when he and Jeff Dean ran "Google Cat", they still used CPUs. Later, Jeff Dean specially ordered equipment worth 2 million US dollars, which still did not include GPUs[6].
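Why layer-by-layer computation suits parallel hardware can be sketched as follows. Within one layer, each neuron's output depends only on the layer's inputs and that neuron's own weights, so the neurons can be computed in any order or all at once. The code below is an illustration only: the layer sizes, weights, and ReLU activation are assumptions, and Python threads stand in for GPU cores without delivering any comparable speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def neuron_output(weights, bias, inputs):
    """One neuron: weighted sum of the inputs followed by ReLU."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return max(0.0, total)


def layer_sequential(weight_rows, biases, inputs):
    """Compute the neurons one after another."""
    return [neuron_output(w, b, inputs) for w, b in zip(weight_rows, biases)]


def layer_parallel(weight_rows, biases, inputs, workers=4):
    """Hand each neuron to a worker; no neuron needs another's result."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(neuron_output, w, b, inputs)
            for w, b in zip(weight_rows, biases)
        ]
        return [f.result() for f in futures]


# Hypothetical 3-input, 4-neuron layer.
WEIGHTS = [[0.5, -1.0, 0.25], [1.0, 1.0, 1.0], [-0.5, 0.0, 0.5], [0.0, 2.0, -1.0]]
BIASES = [0.0, -1.0, 0.5, 0.25]
INPUTS = [1.0, 2.0, 4.0]

sequential = layer_sequential(WEIGHTS, BIASES, INPUTS)
parallel = layer_parallel(WEIGHTS, BIASES, INPUTS)
print(sequential == parallel)
```

Both versions produce the same outputs because no neuron waits on another. A GPU exploits this independence by running thousands of such multiply-and-add operations at the same time.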

Hinton was one of the few people who realized the great value of GPUs for deep learning very early on. However, before AlexNet topped the list, high-tech companies generally had an unclear attitude towards GPUs.

In 2009, Hinton was invited to Microsoft to be a short-term technical consultant for a speech recognition project. He suggested that the project leader Deng Li purchase the top-level NVIDIA GPU and match it with the corresponding server. This idea was supported by Deng Li, but Deng Li’s boss Alex Acero thought it was a waste of money[6], “GPUs are used to play games, not for artificial intelligence research.”

Deng Li

Interestingly, Alex Acero later jumped to Apple and was responsible for Apple’s speech recognition software Siri.

Microsoft's dismissive attitude toward GPUs obviously made Hinton a little angry. He later suggested in an email that Deng Li buy one set of equipment while he himself would buy three, adding sarcastically[6]: after all, we are a Canadian university with strong financial resources, not a software seller with tight funds.

However, after the end of the ImageNet Challenge in 2012, all artificial intelligence scholars and technology companies made a 180-degree turn on GPUs. In 2014, Google’s GoogLeNet won the championship with a recognition accuracy of 93%, using NVIDIA GPUs. That year, the number of GPUs used by all participating teams soared to 110.

The reason this challenge came to be regarded as the "big bang moment" is that the gaps in all three pillars of deep learning - algorithms, computing power, and data - were filled at once, and industrialization became only a matter of time.

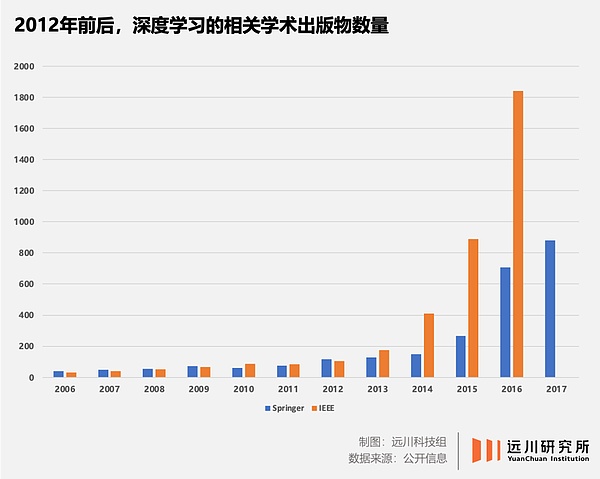

At the algorithm level, the AlexNet paper published by Hinton's team became one of the most cited papers in computer science. A field where a hundred schools of thought once contended became dominated by deep learning alone, and almost all computer vision research turned to neural networks.

In terms of computing power, the GPU's massively parallel computing capabilities and its fit for deep learning were quickly recognized by the industry, and NVIDIA, which had begun building out CUDA six years earlier, became the biggest winner.

In terms of data, ImageNet has become a touchstone for image processing algorithms. With high-quality data sets, the algorithm's recognition accuracy has improved by leaps and bounds. In the last challenge in 2017, the champion algorithm's recognition accuracy reached 97.3%, exceeding that of humans.

At the end of October 2012, Hinton's student Alex Krizhevsky published a paper at the Computer Vision Conference in Florence, Italy. Then, high-tech companies around the world began to do two things regardless of cost: one is to buy up all the graphics cards of Nvidia, and the other is to poach all the AI researchers in universities.

The $44 million in Lake Tahoe repriced the global deep learning masters.

From publicly available information, Yu Kai, who was still at Baidu at the time, was indeed the first person to try to recruit Hinton.

At the time, Yu Kai was the head of Baidu's Multimedia Department, the predecessor of Baidu's Institute of Deep Learning (IDL). After receiving Yu Kai's email, Hinton quickly replied that he agreed to cooperate and expressed his hope that Baidu would provide some funding. Yu Kai asked for a specific number, and Hinton said that $1 million would be enough - a figure so low it was hard to believe, since it would only cover hiring two P8-level engineers.

Yu Kai consulted Robin Li, who readily agreed. After Yu Kai replied that it was no problem, Hinton, perhaps sensing how hungry the industry was, asked Yu Kai if he would mind him asking other companies, such as Google. Yu Kai later recalled[6]: "I felt a little regretful at the time. I guess I answered too quickly, which made Hinton realize the huge opportunity. But I could only generously say that I didn't mind."

In the end, Baidu missed out on Hinton's team. But Yu Kai was not unprepared for this result. On the one hand, Hinton had serious lumbar disc problems and could not drive or fly, so it was difficult for him to travel across the Pacific to China. On the other hand, Hinton had too many students and friends working at Google, and the ties between the two sides ran too deep. The other three companies were essentially just there to fill out the bidding.

If the impact of AlexNet is still concentrated in the academic circle, then the secret auction in Lake Tahoe completely shocked the industry - because Google spent $44 million to buy a company that was established less than a month ago, had no products, no income, only three employees and a few papers under the noses of global technology companies.

The company most shaken was obviously Baidu. Although it lost the auction, Baidu's management had witnessed how Google would invest in deep learning at any cost, which prompted Baidu to commit as well: it announced the establishment of the Institute of Deep Learning (IDL) at its annual meeting in January 2013. In May 2014, Baidu brought in Andrew Ng, a key figure in the "Google Cat" project, and in January 2017 it brought in Qi Lu, who had left Microsoft.

After winning the Hinton team, Google pressed on and in 2014 bought its rival from the auction, DeepMind, for $600 million.

At that time, Musk recommended DeepMind, which he had invested in, to Google founder Larry Page. To bring Hinton to London to assess the company's quality, the Google team chartered a private plane and modified the seats to get around the fact that Hinton could not sit through a flight[6].

The "British player" DeepMind defeated Lee Sedol at Go, 2016

It was Facebook that competed with Google for DeepMind. When DeepMind went to Google, Zuckerberg turned to recruiting Yann LeCun, one of the "three giants of deep learning." To bring LeCun under his wing, Zuckerberg agreed to many of his demanding requests, such as setting up an AI lab in New York, completely separating the lab from the product team, and allowing LeCun to continue working at New York University.

After the 2012 ImageNet Challenge, the field of artificial intelligence faced a very serious problem of "talent supply and demand mismatch":

Due to the rapid opening of industrialization spaces such as recommendation algorithms, image recognition, and autonomous driving, the demand for talent has skyrocketed. However, due to long-term pessimism, researchers in deep learning are a very small circle, and the number of top scholars can be counted on two hands, and the supply is seriously insufficient.

In this case, hungry technology companies can only buy "talent futures": poach professors, and then wait for them to bring their own students in.

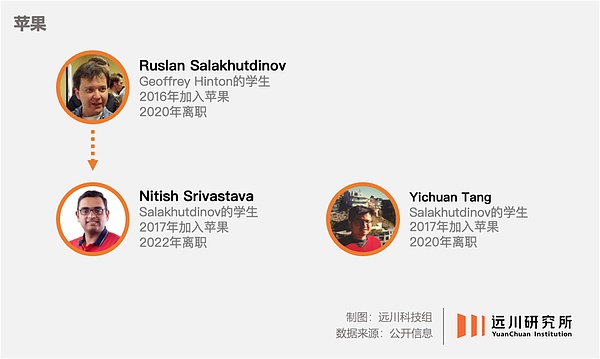

After LeCun joined Facebook, six students followed him to the company. Apple, which was eager to try its hand at car manufacturing, hired Hinton's student Ruslan Salakhutdinov as its first AI director. Even the hedge fund Citadel joined the talent war, poaching Deng Li, who had worked with Hinton on speech recognition and later took part in the secret auction on behalf of Microsoft.

We know the history after that very well: face recognition, machine translation, autonomous driving and other industrial scenarios advanced by leaps and bounds, GPU orders drifted like snowflakes into NVIDIA's headquarters in Santa Clara, and the theoretical edifice of artificial intelligence rose day by day.

In 2017, Google proposed the Transformer model in the paper "Attention is all you need", opening up the current era of large models. A few years later, ChatGPT was born.

And the birth of all this can be traced back to the 2012 ImageNet Challenge.

So, in which year did the forces that produced the "Big Bang Moment" of 2012 first appear?

The answer is 2006.

Great

Before 2006, the state of deep learning could be summarized by borrowing Lord Kelvin's famous saying: the edifice of deep learning had basically been built, but in the sunny sky there floated three small dark clouds.

These three small dark clouds were algorithms, computing power and data.

As mentioned above, deep learning is a perfect solution in theory because it simulates the mechanism of the human brain. But the problem was that both the data and the computing power it needed to consume were at a science fiction level at the time. So much so that the mainstream view of deep learning in the academic community was: scholars with normal brains will not study neural networks.

But three things happened in 2006 that changed this:

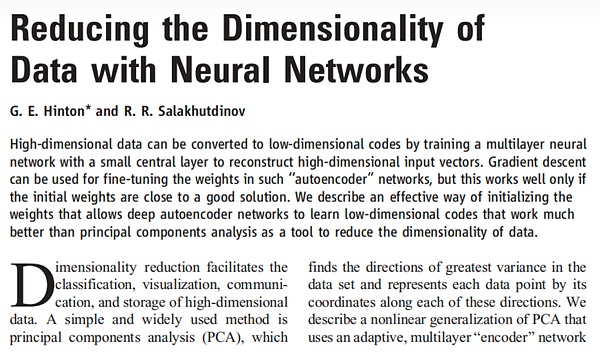

Hinton and his student Salakhutdinov (the one who later went to Apple) published a paper in Science titled "Reducing the dimensionality of data with neural networks", which proposed for the first time an effective solution to the vanishing gradient problem, a big step forward at the algorithm level.

Salakhutdinov (first from left) and Hinton (middle), 2016

Stanford University's Fei-Fei Li realized that if the data scale is difficult to restore the original appearance of the real world, then even the best algorithm will find it difficult to achieve the effect of "simulating the human brain" through training. So she started to build the ImageNet dataset.

NVIDIA released a new GPU based on the Tesla architecture, and then launched the CUDA platform, which greatly reduced the difficulty for developers to use GPUs to train deep neural networks, and the daunting computing power threshold was cut down by a large margin.

The occurrence of these three events blew away the three dark clouds over deep learning, and they met at the ImageNet Challenge in 2012, completely rewriting the fate of the high-tech industry and even the entire human society.

But in 2006, whether it was Geoffrey Hinton, Fei-Fei Li, Jen-Hsun Huang, or the others who promoted the development of deep learning, none of them could have foreseen the later prosperity of artificial intelligence, let alone the roles they would play in it.

Papers by Hinton and Salakhutdinov

Today, the fourth industrial revolution driven by AI has begun, and the evolution of artificial intelligence will only get faster. If there are lessons to draw from this history, they may come down to the following three points:

1. The thickness of the industry determines the height of innovation.

When ChatGPT came out, the voices of "Why is it the United States again" came one after another. But if we extend the time, we will find that from transistors, integrated circuits, to Unix, x86 architecture, and now machine learning, American academia and industry are almost the leaders.

This is because, although there are endless discussions about the "hollowing out of industry" in the United States, the computer science industry with software as its core has never "flowed" to other economies, but has become increasingly advantageous. To date, almost all of the more than 70 ACM Turing Award winners are Americans.

The reason why Andrew Ng chose Google to cooperate in the "Google Cat" project is largely because only Google has the data and computing power required for algorithm training, which is based on Google's strong profitability. This is the advantage brought by the thickness of the industry - talents, investment, and innovation capabilities will all move closer to the heights of the industry.

China is also reflecting this "thickness advantage" in its own advantageous industries. The most typical example at present is new energy vehicles. On the one hand, European car companies charter planes to come to the Chinese auto show to learn from new forces, and on the other hand, Japanese car company executives frequently switch jobs to BYD - what are they looking for? Obviously, it is not just to be able to pay social security in Shenzhen.

2. The more cutting-edge the technology field, the more important the talent is.

The reason why Google was willing to spend $44 million to buy Hinton's company is that in cutting-edge technology fields such as deep learning, the role of a top scholar is often greater than that of 10,000 fresh graduates in computer vision. If Baidu or Microsoft had won the bid at that time, the development of artificial intelligence might have been rewritten.

This kind of "buying the entire company to get one person" behavior is actually very common. At a critical stage of Apple's in-house chip development, it bought a small company called P.A. Semi just to bring in the chip architecture master Jim Keller - Apple's A4, AMD's Zen, and Tesla's FSD chip all benefited from Jim Keller's technical "poverty alleviation".

This is also the biggest advantage brought by industrial competitiveness - the attraction to talents.

None of the "three giants of deep learning" is American. The name AlexNet comes from Hinton's student Alex Krizhevsky, who was born in Ukraine under Soviet rule, grew up in Israel, and came to Canada to study. Not to mention the many Chinese faces who are still active in American high-tech companies.

3. The difficulty of innovation lies in how to face uncertainty.

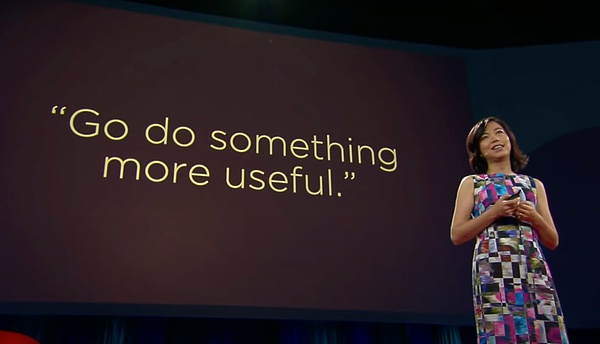

In addition to the "father of artificial intelligence" Marvin Minsky's opposition to deep learning, another well-known opponent of deep learning is Jitendra Malik of the University of California, Berkeley, who has ridiculed both Hinton and Andrew Ng. Fei-Fei Li also consulted Malik when building ImageNet, and the latter's advice to her was: Do something more useful.

Fei-Fei Li's TED speech

It was precisely the pessimism of these industry pioneers that led to decades of silence in deep learning. Even after Hinton let in a ray of light in 2006, another of the Big Three, LeCun, was still repeatedly having to prove to the academic community that "deep learning also has research value."

LeCun began studying neural networks in the 1980s. While at Bell Labs, LeCun and his colleagues designed a chip called ANNA to try to solve the computing power problem. Later, under business pressure, AT&T required the research department to "empower the business". LeCun's answer was "I just want to study computer vision. If you have the guts, fire me." In the end, he got what he asked for and was let go with a severance package[6].

Any researcher in the field of cutting-edge technology must face a problem - What if this thing cannot be made?

Since entering the University of Edinburgh in 1972, Hinton has been fighting on the front line of deep learning for 50 years. When the ImageNet Challenge was held in 2012, he was already 65 years old. It is hard to imagine how much self-doubt and denial he had to overcome in the face of various doubts in the academic community for such a long time.

Now we know that in 2006 Hinton had held on through the last darkness before dawn, but he himself could not have known this at the time, let alone the rest of academia and industry. It was just like the release of the iPhone in 2007, when most people's reaction was probably the same as that of Steve Ballmer, then CEO of Microsoft:

"Currently, the iPhone is still the most expensive mobile phone in the world, and it has no keyboard."

Those who push history forward often cannot tell where they stand in its course.

Greatness is great not because it stunned the world the moment it appeared, but because it had to endure a long period of obscurity and misunderstanding in endless darkness. Only many years later can people look back along these milestones and marvel at how brightly the stars shone and how many geniuses emerged in that era.

In one field of scientific research after another, countless scholars never saw a glimmer of hope in their entire lives. So from a certain perspective, Hinton and other deep learning promoters are lucky. They have created greatness and indirectly promoted success after success in the industry. The capital market will give a fair price to success, and history will record the loneliness and sweat of those who create greatness.

References

[1] 16,000 computers looking for a cat together, The New York Times

[2] Fei-Fei Li's Quest to Make AI Better for Humanity, Wired

[3] Fei-Fei Li's TED Talk

[4] 21 seconds to see all the models that topped the ImageNet charts, with more than 60 model architectures performing on the same stage, Synced

[5] The road to godhood of convolutional neural networks: it all started with AlexNet, New Intelligence

[6] The deep learning revolution, Cade Metz

[7] To Find AI Engineers, Google and Facebook Hire Their Professors, The Information

[9] Eight years of ImageNet: Fei-Fei Li and the AI world she changed, Quantum Bit

[10] Deep Learning: Previous and Present Applications, Ramiro Vargas

[11] Review of deep learning: concepts, CNN architectures, challenges, applications, future directions, Laith Alzubaidi et al.

[12] Literature Review of Deep Learning Research Areas, Mutlu Yapıcı et al.

[13] The real hero behind ChatGPT: The leap of faith of Ilya Sutskever, Chief Scientist of OpenAI, New Intelligence

[14] 10 years later, deep learning ‘revolution’ rages on, say AI pioneers Hinton, LeCun and Li, VentureBeat

[15] From not working to neural networking, The Economist

[16] Huge “foundation models” are turbo-charging AI progress, The Economist

[17] 2012: A Breakthrough Year for Deep Learning, Bryan House

[18] Deep learning: the “magic wand” of artificial intelligence, Essence Securities

[19] Development of deep learning algorithms: from diversity to unity, Guojin Securities
